Welcome @khobragade.pranjal7,

Please check other topics with the same error first. There are a lot of them, and they also describe what information is needed to help you.

@leex279 @aliasfox thank you,

I have followed all the steps, and I can see Ollama is running in the browser. I’m able to use Ollama, but it’s slow. I want to continue using Claude 3 Sonnet New, which I was using earlier in bolt.diy.

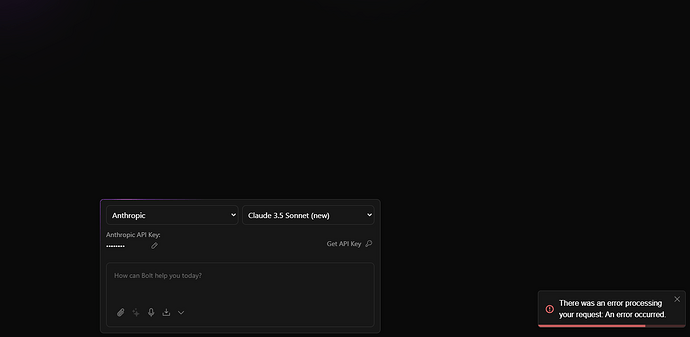

Anthropic was working fine for the past two days, but after my API keys expired, I created a new account for Anthropic and updated the new API key in the .env file. However, now, whenever I try using Claude 3 Sonnet New, I get this error: “Request Failed: There was an error processing your request: An error occurred.”

Try the “(old)” one instead of the new one, as the new one does not perform well with bolt.diy at the moment.

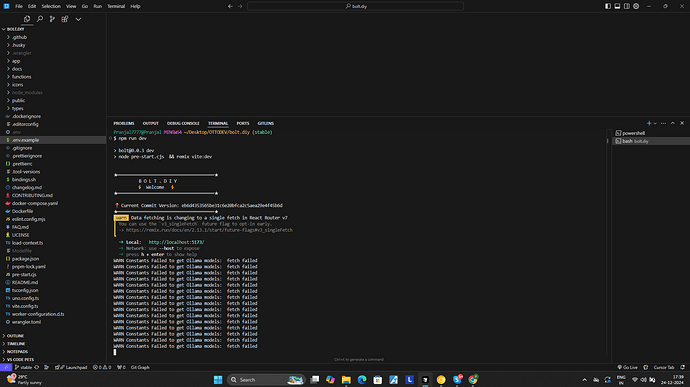

Other than that: do you see an error in the terminal/shell where you started bolt?

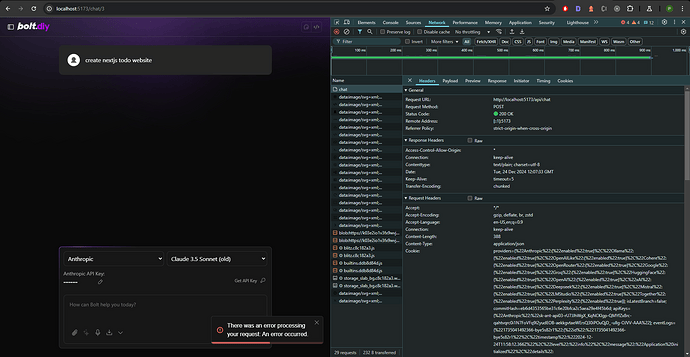

@leex279 check the attached image. I have tried the old version, as you can see from the API call and version in the screenshot. I am also not getting any errors in the terminal where I am running bolt.

@leex279, I have also tried cloning the repository again and followed all the steps, but I’m still encountering the same error on my Windows system.

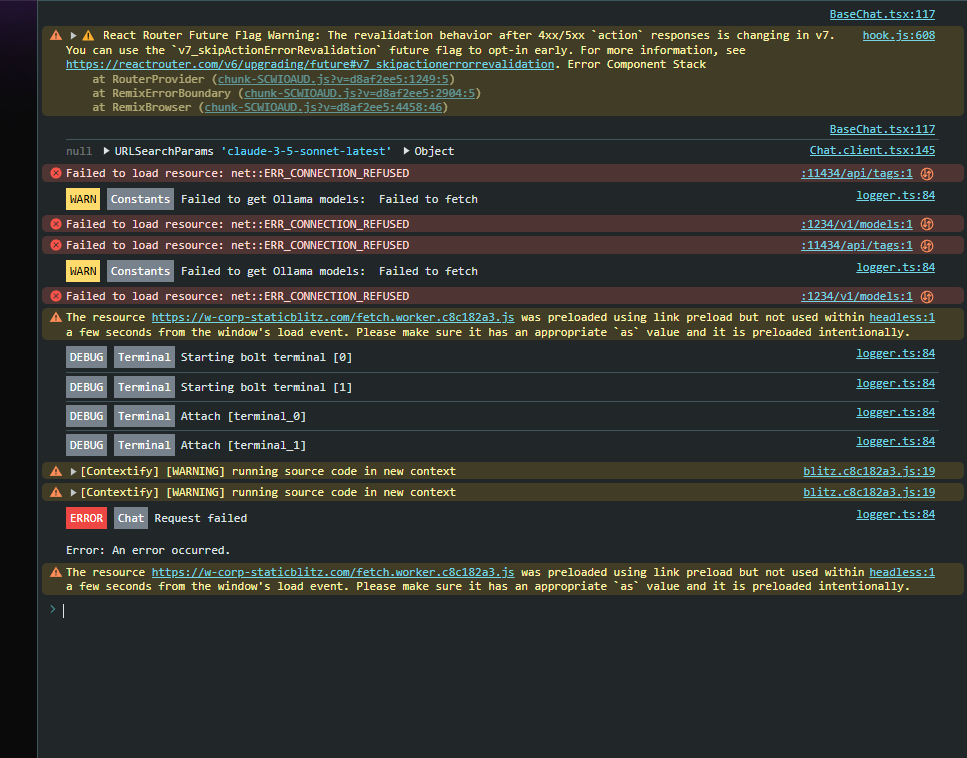

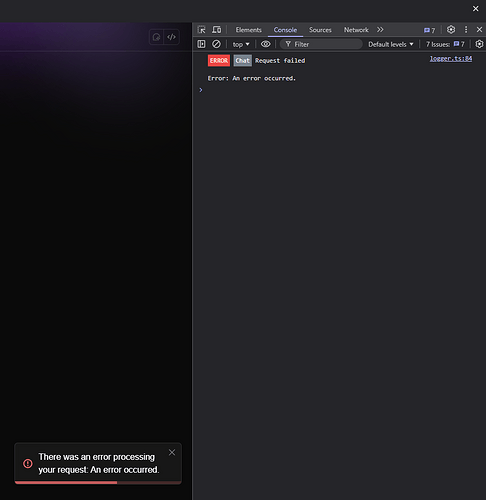

@leex279 this is the error I am getting in the console after sending a message with Claude 3 Sonnet (old).

@khobragade.pranjal7 thanks for the info.

I tested it myself and could reproduce it. I am also not able to use Anthropic at the moment.

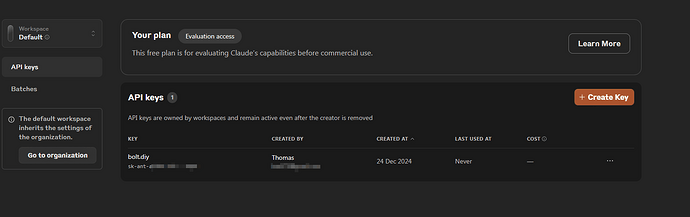

@aliasfox @thecodacus maybe we have a problem/bug here? I also see in the Anthropic backend that the request has not arrived:

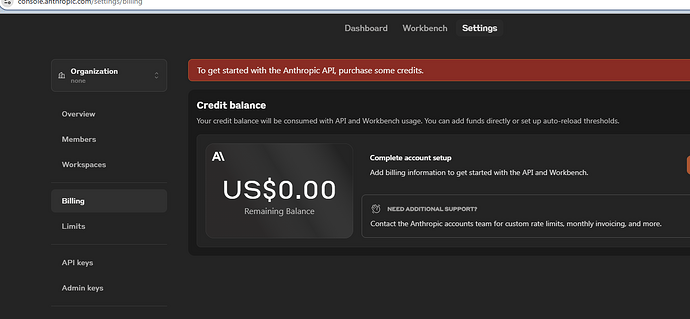

Ah wait, you need to provide billing info to use the API. I did not do that, as I don't use this provider:

@khobragade.pranjal7 did you provide billing info so you can use the API?

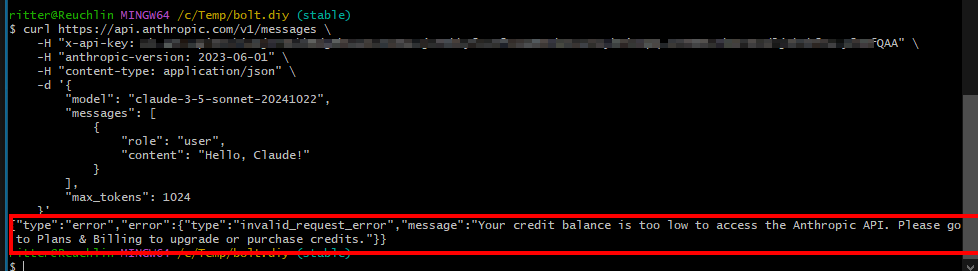

Verified it via shell:

    curl https://api.anthropic.com/v1/messages \
      -H "x-api-key: $ANTHROPIC_API_KEY" \
      -H "anthropic-version: 2023-06-01" \
      -H "content-type: application/json" \
      -d '{
        "model": "claude-3-5-sonnet-20241022",
        "messages": [
          {
            "role": "user",
            "content": "Hello, Claude!"
          }
        ],
        "max_tokens": 1024
      }'
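
If you want the reason for a failure spelled out rather than just the raw response, something like the following might help. It is only a rough sketch: `explain_status` and `check_anthropic_key` are made-up helper names, the hints are my own reading of the usual HTTP status codes, and the remark about low credit showing up as a 400 is an assumption you should confirm against the message in the response body.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of bolt.diy): turn an HTTP status into a hint.
explain_status() {
  case "$1" in
    200) echo "ok: key and billing work" ;;
    # Assumption: a low credit balance is reported as a 400 with an explanatory message.
    400) echo "invalid request: read the message, a low credit balance is reported here" ;;
    401) echo "authentication error: the API key is wrong or revoked" ;;
    403) echo "permission error: the key may not use this resource" ;;
    404) echo "not found: check the model name" ;;
    429) echo "rate limited: wait and retry" ;;
    5??) echo "server-side problem at the provider: retry later" ;;
    *)   echo "unexpected status $1" ;;
  esac
}

# Hypothetical wrapper around the request above: prints status, hint, and raw body.
check_anthropic_key() {
  local body status
  body=$(mktemp)
  # -o writes the response body to a file, -w prints only the status code.
  status=$(curl -s -o "$body" -w '%{http_code}' https://api.anthropic.com/v1/messages \
    -H "x-api-key: $ANTHROPIC_API_KEY" \
    -H "anthropic-version: 2023-06-01" \
    -H "content-type: application/json" \
    -d '{"model": "claude-3-5-sonnet-20241022", "max_tokens": 16, "messages": [{"role": "user", "content": "Hello, Claude!"}]}')
  echo "HTTP $status: $(explain_status "$status")"
  cat "$body"; echo
  rm -f "$body"
}
```

After sourcing this in a bash session with `ANTHROPIC_API_KEY` exported, running `check_anthropic_key` prints the status line followed by whatever the provider returned. The body is printed unmodified on purpose, since the provider's own message is usually the most useful part.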

@aliasfox @thecodacus can't we output such provider errors in the terminal, so the user has more information about what's going on?

@leex279 thank you so much. I am purchasing some credit and will try again.

I just got on for the day, but that’s what I was thinking. Thought they would only work with credits. Were you ever able to get Anthropic or Openrouter to work without it? The Free Openrouter models never really worked for me. You can always use HuggingFace though, completely free.

@aliasfox @leex279 @thecodacus can we integrate a Vertex AI provider, so that with a projectId and region we can use Anthropic models like Claude, and more, through Google?

same here.

will look into this… I am also annoyed that the error is not surfacing into the terminal and just throwing a generic message.

Same here. All I did was add my API keys and click on one of the default chat prompts, “Build a todo app in React using Tailwind”, and I got that same error.

@getkwikr247 where did you add the keys? If you added them to the .env file while bolt.diy was running, you need to restart it manually (npm run dev).

If you put them in the UI, reload with CTRL+R or F5 and then try again.

If it is not working at all, make sure your API keys work outside of bolt (verify with curl or Invoke-WebRequest in a Linux/Windows terminal).
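
Before testing a key outside of bolt, it is worth confirming that the .env file really contains what you think it does. Here is a small sketch for that, assuming a plain `NAME=value` .env file and assuming the variable is called `ANTHROPIC_API_KEY` (the name used in the curl command earlier in this thread). `env_value` and `key_status` are hypothetical helper names, not something bolt.diy ships.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of NAME from a .env-style FILE.
# Uses the last matching NAME=value line, drops Windows carriage returns,
# and strips one pair of surrounding quotes.
env_value() {
  local file="$1" name="$2"
  grep -E "^${name}=" "$file" 2>/dev/null \
    | tail -n 1 \
    | cut -d= -f2- \
    | tr -d '\r' \
    | sed -e "s/^[\"']//" -e "s/[\"']\$//"
}

# Hypothetical helper: report whether the key is present, without printing it.
key_status() {
  local value
  value=$(env_value "$1" "$2")
  if [ -z "$value" ]; then
    echo "$2 is missing or empty in $1"
  else
    echo "$2 is set (${#value} characters)"
  fi
}
```

For example, `key_status .env ANTHROPIC_API_KEY` tells you whether the key is there at all, and `export ANTHROPIC_API_KEY="$(env_value .env ANTHROPIC_API_KEY)"` makes the same value available to a curl check in the current shell. Only the length is printed, so the key itself does not end up in screenshots.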

I don't think it's a bolt problem. It's just unfortunate that bolt does not show the actual errors, which would help more, but fixing that would not fix the error itself. You have a problem with the API key / provider / model.

Interestingly enough, I also randomly tried it with OpenBolt.dev, using DeepSeek and Anthropic API keys, and got the same error.

I’m not sure if it has anything to do with the latest/recent versions of Bolt.diy.

We’re going to try and install an older version locally.

OK, openbolt.dev: I don't know what version it runs, and it is not an official offering from this community/open-source project/team. Just to clarify that, so no one thinks otherwise.

Other than that: as I said, I would recommend verifying it outside of bolt, if you have not done so already, because it makes no sense to debug or reinstall further if it is just your keys/providers that are not working.

Okay. Thanks, we’ll try that.

Maybe we need a notice/disclaimer for this in the UI… Commercial companies like DeepSeek, Anthropic, OpenAI, etc. require setting up payment methods and having credits. This is not a Bolt.diy issue!

Try using OpenRouter, which provides free credits, free models, and a huge model library (including DeepSeek-V3). Or use HuggingFace, which is free; Google AI Studio, free for Gemini Flash 2.0 (exp-1206); GitHub (Azure), free for 4o & Mistral models; etc.