Thank you!

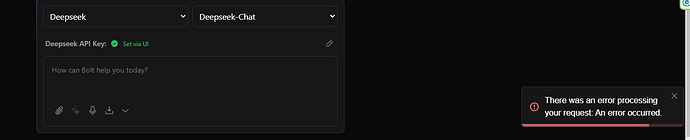

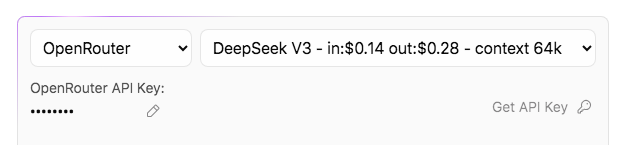

I can confirm that I tried OpenRouter > DeepSeek-V3 and that API Key worked.

I think some form of notification/disclaimer would be helpful so people have a better idea of what could be causing the error, because I too didn't sign up for a paid account for DeepSeek or Anthropic.

Nice

Agreed, but as always it's not that easy, because users have so many different pain points depending on their knowledge, and too many disclaimers isn't good either. The other option is to add it to the FAQ and docs, but I know from doing a lot of support here that 90% of people don't read the docs and FAQ fully.

So the best thing is just to make sure the actual errors from the providers pass through bolt to the user, so it's clear what the error is.

I both agree and disagree here. I think there is a technical solution to this (because it comes up so often). Maybe we need a check for credits to make the error clearer or a flag set for each provider so that a disclaimer sub-heading is shown or popup agreed to. Maybe both, lol.
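To make the per-provider flag idea concrete, here is a rough sketch. Everything in it is an assumption for illustration: the `ProviderInfo` shape, the `requiresPaidCredits` field, and the `disclaimerFor` helper are hypothetical names, not bolt's actual provider schema.

```typescript
// Hypothetical provider metadata; field names are illustrative only.
interface ProviderInfo {
  name: string;
  // True when the provider's API cannot be used without paid credits.
  requiresPaidCredits: boolean;
}

const providers: ProviderInfo[] = [
  { name: "DeepSeek", requiresPaidCredits: true },
  { name: "Anthropic", requiresPaidCredits: true },
  // OpenRouter also offers free models, so no blanket disclaimer here.
  { name: "OpenRouter", requiresPaidCredits: false },
];

// Returns the disclaimer text to show under the provider selector,
// or undefined when the provider is unknown or needs no disclaimer.
function disclaimerFor(providerName: string): string | undefined {
  const provider = providers.find((p) => p.name === providerName);
  if (provider === undefined || !provider.requiresPaidCredits) {
    return undefined;
  }
  return `${provider.name} requires paid API credits. Requests will fail if your account has none.`;
}
```

A flag like this keeps the disclaimer next to the provider it applies to, so it only shows up for people who actually pick that provider.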

I think passing through the errors from the provider is mostly sufficient, because if you send requests to them directly, you almost always get an error that says what's wrong. I don't think I've ever seen an error from a provider that was just generic and not explained in their docs.

I looked at this issue back in the day. The problem is that the error you see is the error that OpenRouter returns. It does not return the real reason.
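As a minimal sketch of what "passing through" could look like: the helper below tries to pull a message out of a provider's error body and falls back to the raw text. The `{ "error": { "message": ... } }` shape is an assumption (a common convention, not something every provider guarantees), and `extractProviderError` is a hypothetical name, not an existing bolt function.

```typescript
// Builds a user-facing message from a failed provider response.
// Assumes (not guaranteed) a JSON body like { "error": { "message": "..." } }
// or { "error": "..." }; anything else is passed through as raw text.
function extractProviderError(status: number, body: string): string {
  try {
    const parsed: unknown = JSON.parse(body);
    if (typeof parsed === "object" && parsed !== null) {
      const err = (parsed as Record<string, unknown>).error;
      if (typeof err === "string") {
        return `Provider error (${status}): ${err}`;
      }
      if (typeof err === "object" && err !== null) {
        const msg = (err as Record<string, unknown>).message;
        if (typeof msg === "string") {
          return `Provider error (${status}): ${msg}`;
        }
      }
    }
  } catch {
    // Body was not valid JSON; fall through to the raw-text path.
  }
  const trimmed = body.trim();
  return trimmed.length > 0
    ? `Provider error (${status}): ${trimmed.slice(0, 200)}`
    : `Provider error (${status}): no details returned`;
}
```

The point of the fallback is that the user always sees something from the provider, even when the body is not the JSON the client expected.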

What this means is that we can only guess and list all possible reasons like:

- Are you using a free model? Maybe you ran out of free quota?

- Are you using a paid model? Do you have credits in your account?

And these two are just for OpenRouter; there may be more.
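If guessing is all we can do, the two guesses above could be attached to the raw error roughly like this. It is only a sketch: `ModelTier`, `openRouterHints`, and `describeFailure` are hypothetical names, and the code assumes the caller already knows whether the selected model is free or paid.

```typescript
// Hypothetical hint table; the wording mirrors the two guesses above.
type ModelTier = "free" | "paid";

const openRouterHints: Record<ModelTier, string> = {
  free: "You may have run out of free quota.",
  paid: "Check that your account still has credits.",
};

// Appends the most likely cause to the raw error instead of replacing it,
// so the original provider message is never hidden from the user.
function describeFailure(rawError: string, tier: ModelTier): string {
  return `${rawError} Possible cause: ${openRouterHints[tier]}`;
}
```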

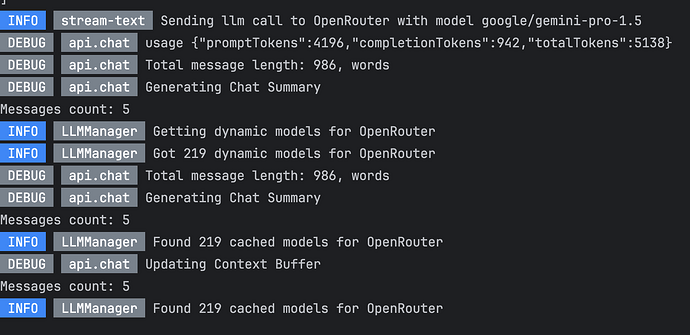

@wonderwhy.er with the current main branch there should be a detailed error in the terminal. @thecodacus pushed this feature to main last week.

Well, for OpenRouter this is what I see on the UI/network side:

3:“Custom error: Invalid JSON response”

The same error shows in the popup.

While in the console of the running server I see

So I'm not sure what you mean.

OK, maybe it depends on what you are doing. I get this, for example:

INFO LLMManager Got 219 dynamic models for OpenRouter

INFO stream-text Sending llm call to OpenRouter with model deepseek/deepseek-chat

DEBUG api.chat usage {"promptTokens":0,"completionTokens":0,"totalTokens":0}

INFO api.chat Reached max token limit (8000): Continuing message (1 switches left)

@slimyohan42 take a look at your terminal logs. There should be a more detailed error.

If this is a bigger imported project, make sure you enabled “context optimization” in settings.

Other than that, make sure you have credits in DeepSeek, as the API is not free.