Hi all.

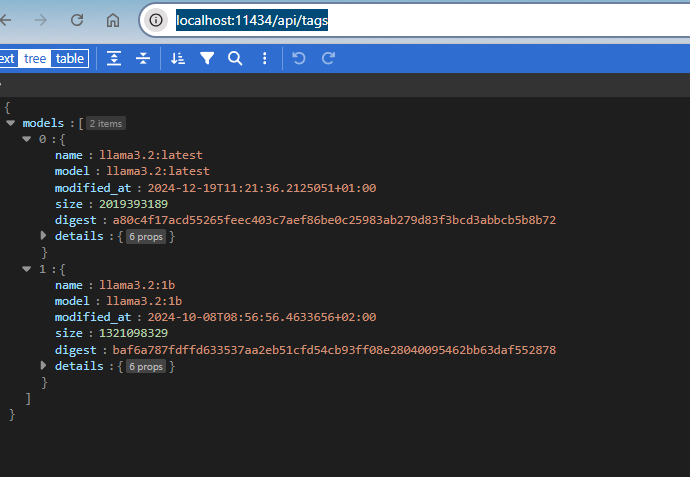

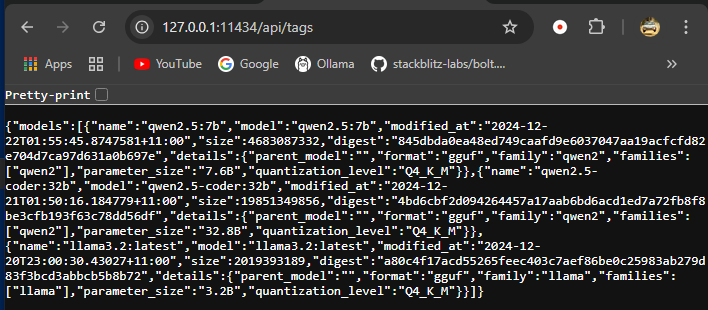

After abandoning Bolt.diy on my MacBook Pro M1 because it didn’t have enough memory, I’ve repurposed my gaming PC as a stand-alone Bolt.diy machine. I bought 64GB of DDR4 memory to replace the sad 16GB of PC4 it had before. To run Qwen-32B you need at least 21GB before overheads. I thought, let’s just get it done.

That said, I had help previously and realised there is no one guide that can help someone, as there are always little issues that can pop up at different steps of getting things up and running. So I thought I’d take everything I’ve learned and build a more complete guide covering the intricacies that may come into play at any step, with tips and tricks for sorting them out as you go. You’ll hopefully see what I mean: there will be a high-level view with a few key steps, then a deep dive into issues that can be encountered along the way.

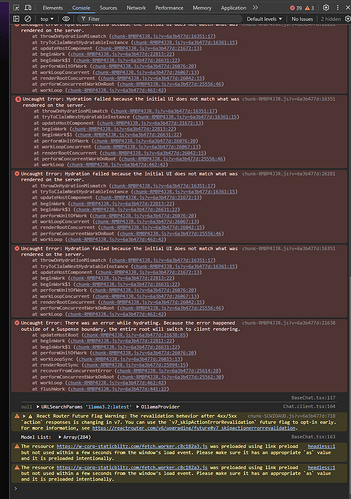

Oh, and I still have some issues that need resolving too. I’m sure I’ll clear them up quickly though.

FYI - support gave me the following guide which doesn’t have enough detail to get up and running…

Following principles for a desktop build…

= = = = = = = = = = = = = = = = = = =

Installing GIT

= = = = = = = = = = = = = = = = = = =

Use the Windows installer and ensure the command line option is ‘ticked’ for ease of use.

Assuming a standard build using the C drive

c:/Program Files/Git

After it’s installed, you can enter the following from PowerShell or the command line to see if it’s working properly and accessible from any location…

PS C:\Windows\system32> git --version

It should return something like…

git version 2.47.1.windows.1
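If you’d rather check everything in one go, here is a minimal sketch in Python (assuming you have Python installed, which this guide doesn’t otherwise need). It looks for each tool on your PATH and prints the first line of its `--version` output. The helper name `tool_version` is just something I made up for illustration. Node and pnpm will show as not found until you reach those steps.

```python
import shutil
import subprocess


def tool_version(name):
    """Return the first line of `<name> --version`, or None if the tool is not on PATH."""
    path = shutil.which(name)
    if path is None:
        return None
    try:
        result = subprocess.run(
            [path, "--version"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.SubprocessError):
        # The tool exists but could not be run; treat it as unavailable.
        return None
    output = (result.stdout or result.stderr).strip()
    return output.splitlines()[0] if output else ""


# Tools this guide relies on; extend the tuple if you need more.
for tool in ("git", "node", "pnpm"):
    version = tool_version(tool)
    print(f"{tool}: {version if version is not None else 'not found on PATH'}")
```

If git shows as not found here but works in another window, it is usually a PATH issue, and opening a fresh PowerShell window after the install is worth trying first.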

= = = = = = = = = = = = = = = = = = =

Install Node (for software package management)

= = = = = = = = = = = = = = = = = = =

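Trust me, get used to this as it’s a good idea. Even if you’re not a developer, just live a little… Install Node.js using the Windows installer from nodejs.org, then confirm it’s available with…

node --version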

Go to the project folder you want to work from going forward. I chose a separate drive to save space on the system drive.

d:/coding/projects/

Use the command line to make your life a little easier than the old Windows GUI process - like I said - live a little…

Open Windows PowerShell and navigate to your folder…

cd d:/coding/projects/

= = = = = = = = = = = = = = = = = = =

Cloning Bolt to your machine

= = = = = = = = = = = = = = = = = = =

Clone it from GitHub by entering the following…

git clone -b stable https://github.com/stackblitz-labs/bolt.diy

go there…

cd d:/coding/projects/bolt.diy

Rename the following file, changing it from

.env.example to .env.local
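If you want to script this step, here is a small sketch, again in Python. Note it copies the file rather than renaming it, so the original example stays around for reference. That is my own choice, not something the guide asks for, and `create_env_local` is a made-up helper name.

```python
import shutil
from pathlib import Path


def create_env_local(project_dir):
    """Copy .env.example to .env.local unless .env.local already exists."""
    project = Path(project_dir)
    source = project / ".env.example"
    target = project / ".env.local"
    if target.exists():
        # Keep any edits that were already made to .env.local.
        return target
    shutil.copyfile(source, target)
    return target
```

On my setup I would call it as `create_env_local("d:/coding/projects/bolt.diy")`; adjust the path to wherever you cloned the repo.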

NOTE (I’m currently working on this): edit the bottom of the file and set the ‘context length’ (DEFAULT_NUM_CTX). I’ve tried 6144 because, with the 32B version of Qwen2.5, I think that should fit in memory.

Example Context Values for qwen2.5-coder:32b

DEFAULT_NUM_CTX=32768 # Consumes 36GB of VRAM

DEFAULT_NUM_CTX=24576 # Consumes 32GB of VRAM

DEFAULT_NUM_CTX=12288 # Consumes 26GB of VRAM

DEFAULT_NUM_CTX=6144 # Consumes 24GB of VRAM

DEFAULT_NUM_CTX=
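To make the choice less of a guess, here is a rough sketch that picks the largest context length from the example values above that fits a given memory budget. The figures are copied straight from those example lines and refer to VRAM, so treat them as approximate; I’m assuming they apply roughly the same way if the model ends up in system RAM, which I haven’t verified. `largest_ctx_that_fits` is a hypothetical helper name.

```python
# (context length, approximate memory in GB) pairs, copied from the
# "Example Context Values for qwen2.5-coder:32b" lines in .env.example.
CTX_TABLE = [(32768, 36), (24576, 32), (12288, 26), (6144, 24)]


def largest_ctx_that_fits(available_gb, table=CTX_TABLE):
    """Return the largest context length whose listed memory use fits, or None."""
    fitting = [ctx for ctx, gb in table if gb <= available_gb]
    return max(fitting) if fitting else None


# Example: a budget of 24GB only leaves room for the smallest listed value.
ctx = largest_ctx_that_fits(24)
print(f"DEFAULT_NUM_CTX={ctx}")
```

If the function returns None, none of the listed values fit and a smaller model is probably the better option.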

= = = = = = = = = = = = = = = = = = =

Installing PNPM

= = = = = = = = = = = = = = = = = = =

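If pnpm itself isn’t installed yet, install it globally with npm first…

npm install -g pnpm

Then, from the bolt.diy folder, install the project’s dependencies…

pnpm install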

After installation you can run the development server, but you must be in your Bolt.diy working directory because the command looks for the package.json file there. If you look inside, you’ll see an entry called ‘dev’; this line holds the details of how the server is run. More on that later…
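If you’re curious what ‘dev’ actually runs without opening the file by hand, this small sketch reads it out of package.json. I’m assuming the usual layout where commands live under a top-level "scripts" key; `read_script` is a made-up helper name.

```python
import json
from pathlib import Path


def read_script(project_dir, name="dev"):
    """Return the command stored under scripts[name] in package.json, or None."""
    package_path = Path(project_dir) / "package.json"
    package = json.loads(package_path.read_text(encoding="utf-8"))
    return package.get("scripts", {}).get(name)
```

Calling `read_script("d:/coding/projects/bolt.diy")` on my clone should give the same command that pnpm echoes when the server starts. If it raises FileNotFoundError, you’re pointing at the wrong folder, which is the same reason `pnpm run dev` fails outside the project directory.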

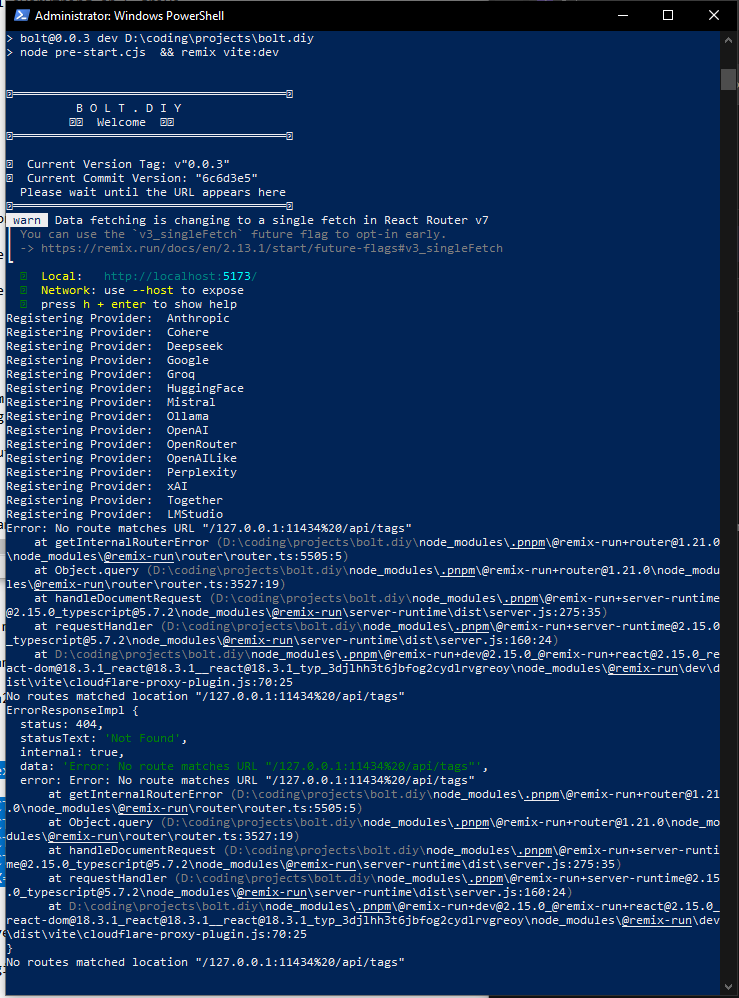

pnpm run dev

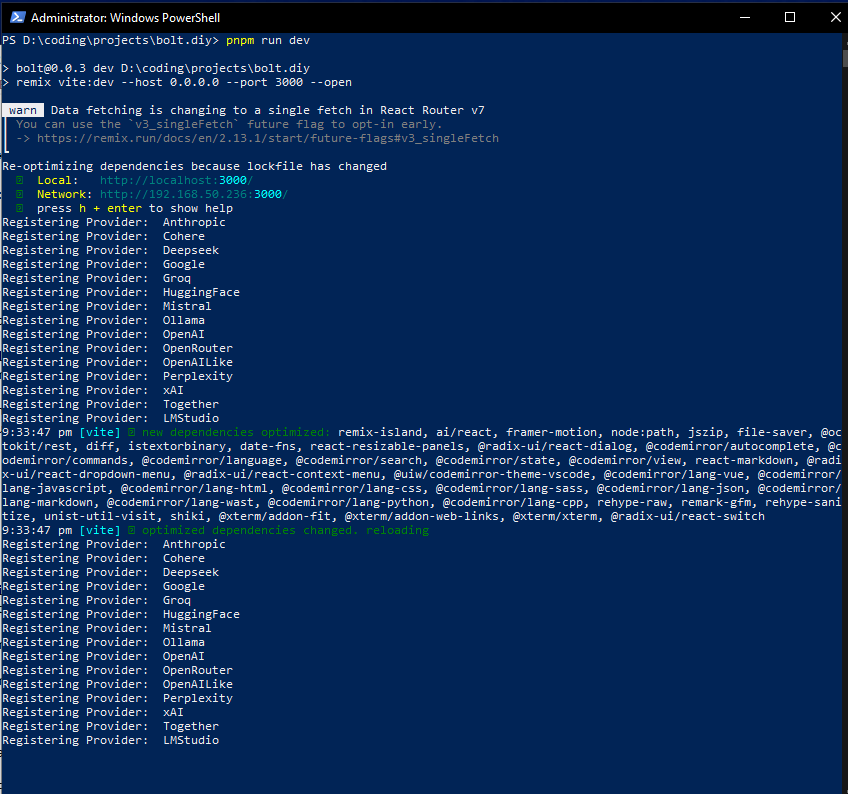

PS D:\coding\projects\bolt.diy> pnpm run dev

bolt@0.0.3 dev D:\coding\projects\bolt.diy

node pre-start.cjs && remix vite:dev

★═══════════════════════════════════════★

B O L T . D I Y

Welcome

★═══════════════════════════════════════★

Current Commit Version: eb6d4353565be31c6e20bfca2c5aea29e4f45b6d

★═══════════════════════════════════════★

warn Data fetching is changing to a single fetch in React Router v7

┃ You can use the v3_singleFetch future flag to opt-in early.

┃ → remix/docs/start/future-flags.md at remix@2.13.1 · remix-run/remix · GitHub

┗

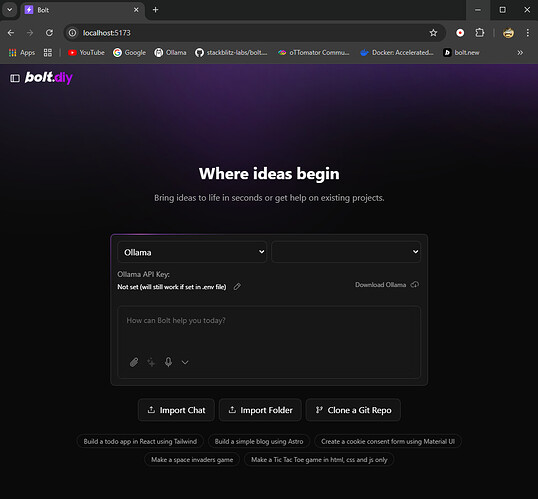

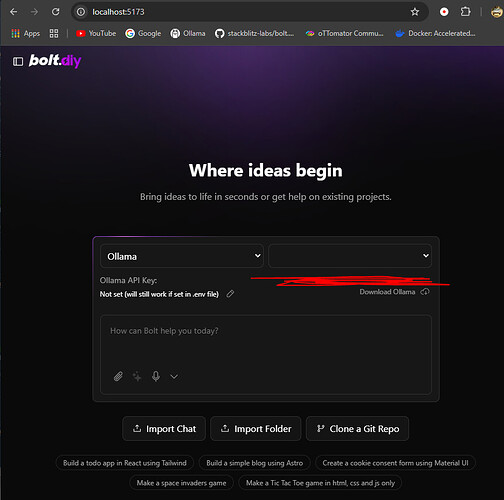

➜ Local: http://localhost:5173/

➜ Network: use --host to expose

➜ press h + enter to show help

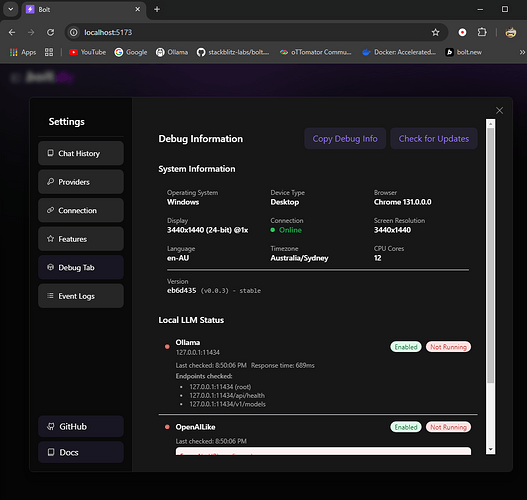

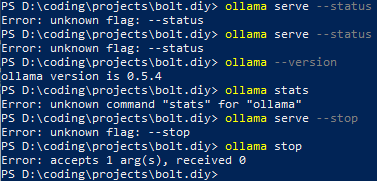

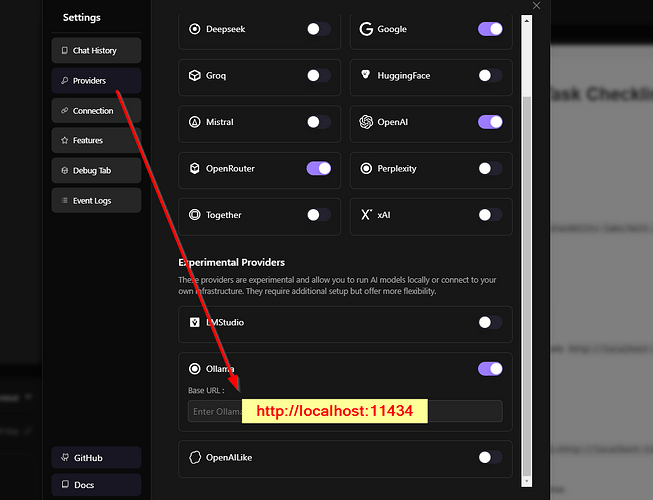

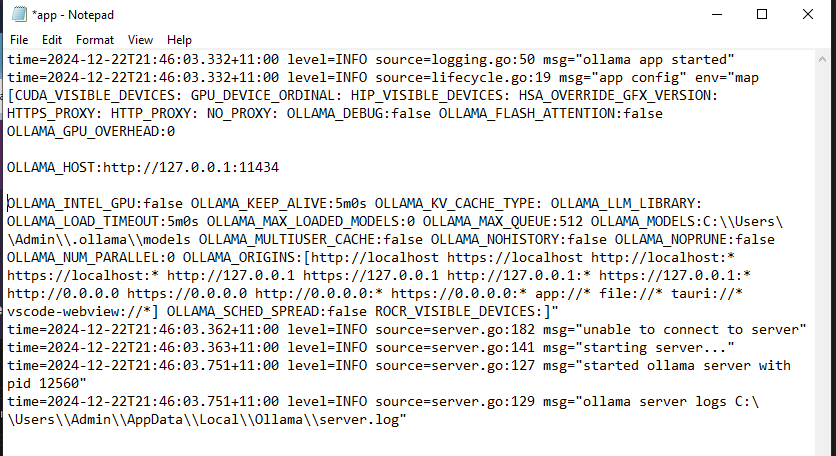

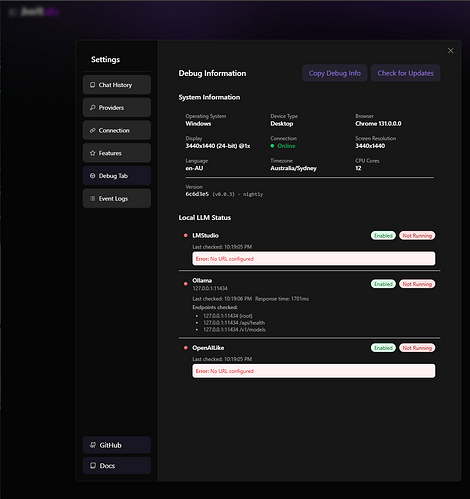

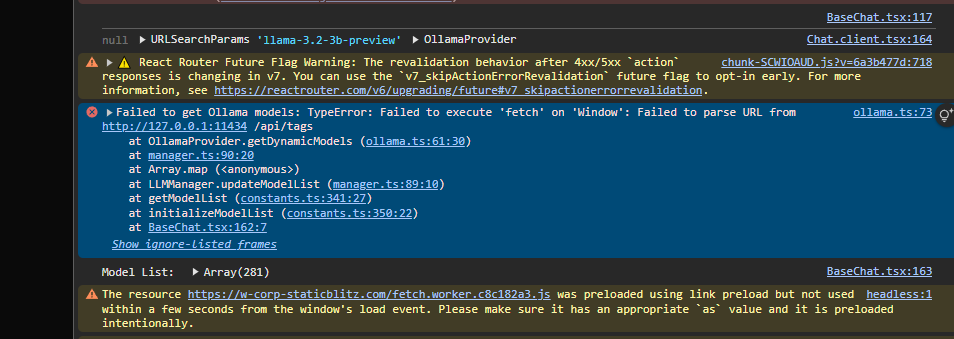

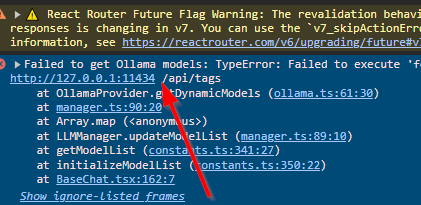

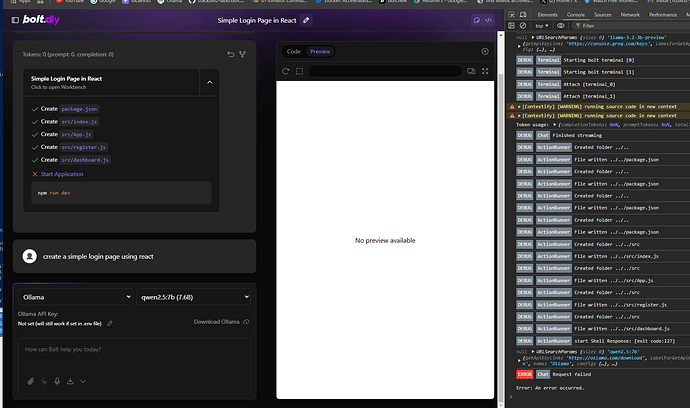

WARN Constants Failed to get Ollama models: fetch failed

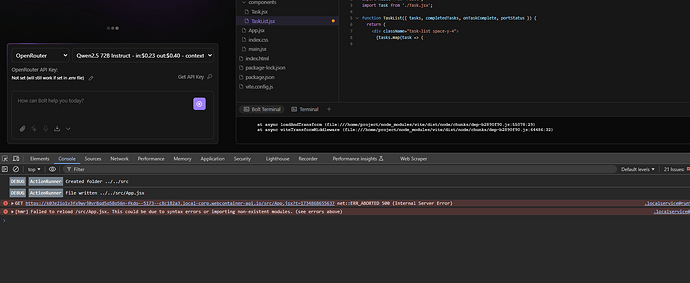

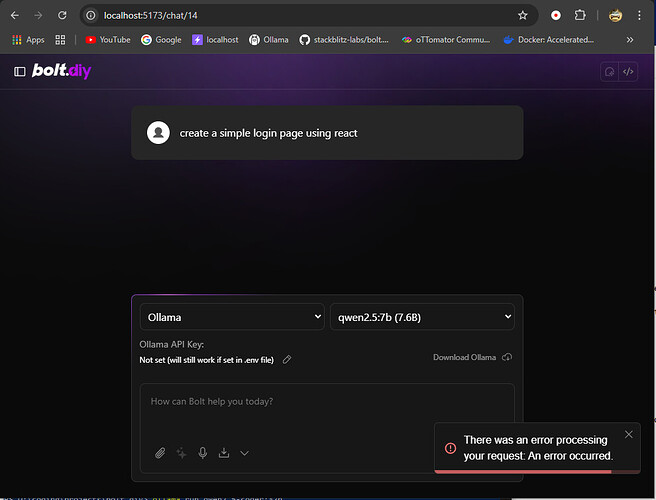

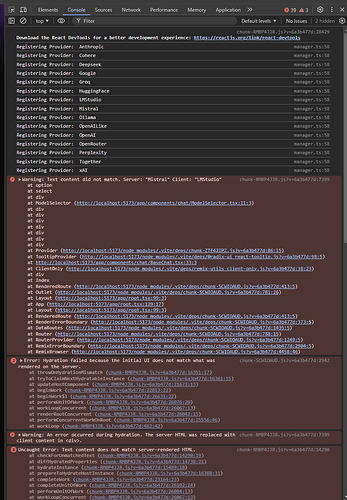

This is the error I’m currently getting - this is a work in progress.

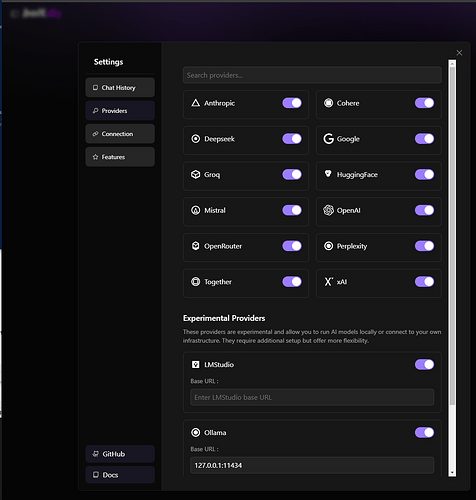

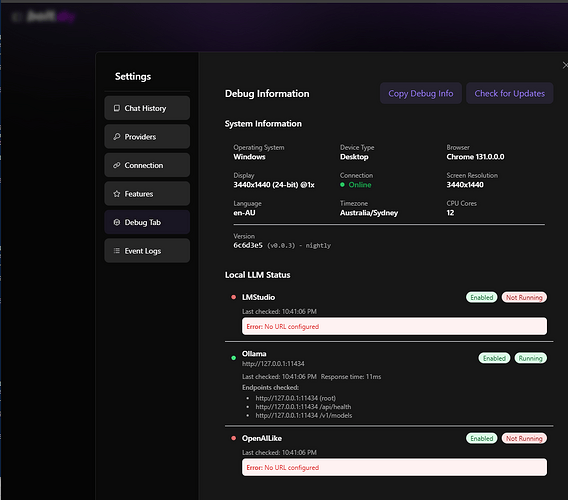

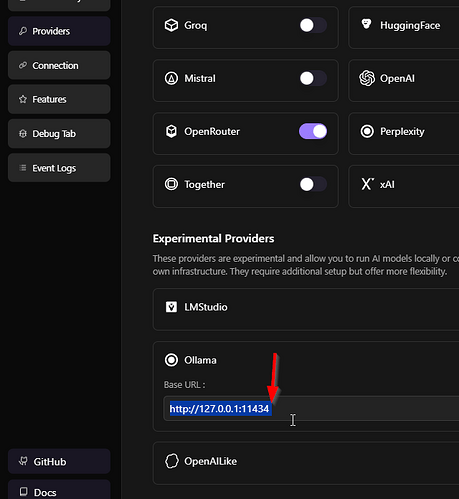

Also, I entered the Ollama server location and it shows that the server is not running.
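One thing that might help narrow this down is checking whether Ollama is answering at all, independent of Bolt.diy. Here is a minimal sketch, assuming Ollama is on its default address of http://127.0.0.1:11434 and using its /api/tags endpoint, which lists the locally installed models. The function names are my own.

```python
import json
import urllib.error
import urllib.request


def tags_url(base_url):
    """Build the URL of Ollama's model-listing endpoint from a base URL."""
    return base_url.rstrip("/") + "/api/tags"


def list_ollama_models(base_url="http://127.0.0.1:11434", timeout=5):
    """Return the model names Ollama reports, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as response:
            payload = json.load(response)
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a reply that is not valid JSON.
        return None
    if not isinstance(payload, dict):
        return None
    return [model.get("name") for model in payload.get("models", [])]


models = list_ollama_models()
if models is None:
    print("Ollama is not reachable at the default address")
else:
    print("Ollama is running; models:", models)
```

If this reports that Ollama is not reachable, the "fetch failed" warning is probably down to the server not running (starting it with `ollama serve` is the first thing I’d try) rather than anything in Bolt.diy. If it is reachable but the list is empty, the model likely still needs pulling.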

Please, is there someone who can help me through this and possibly build a proper guide? The ones at the top of this post don’t supply enough detail to get up and running.