Hi,

I came across oTToDev on YouTube and was fascinated. I tried to install it, but the API key is not loading.

I followed the guide and installed the latest commit in Docker using the npm helper scripts. I have Ollama installed on another server, so I entered its URL as OLLAMA_API_BASE_URL in .env.example and renamed the file to .env.

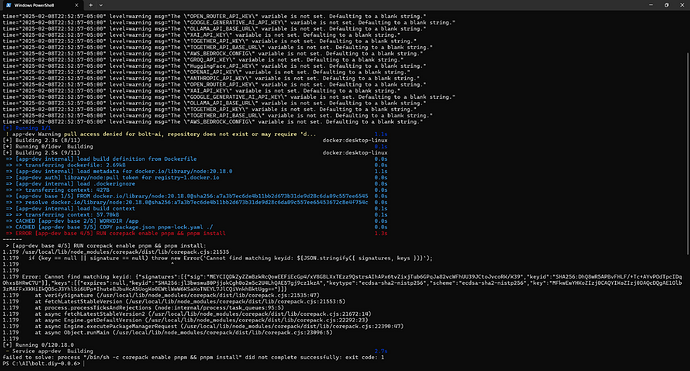

After the build, I got the following error when running the image with docker compose:

env file /installpath/bolt.new-any-llm/.env.local not found: stat /installpath/bolt.new-any-llm/.env.local: no such file or Directory

I renamed .env to .env.local, and that got the system up and running. But the API key is not set in the app.

I took the container down, changed docker-compose.yaml to point to .env instead of .env.local, and renamed the file back to .env. I ran the image once more in Docker, but still had no access to the API.

I’ve built both the development and production images, with no luck. (Am I wrong to assume that dev and prod point to .env in different ways? It looks that way in docker-compose.yaml, where the file is not mentioned in the dev segment.)

The warning messages from the YouTube video (WARN[0000] The "GROQ_API_KEY" variable is not set. Defaulting to a blank string.) do not appear in my install process, even though I have many environment variables that are not set. I would expect to see those warnings when running docker compose, but they do not show up.
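To double-check what the env files actually contain, here is a minimal sketch of a script that lists which variables are empty. It is only an illustration: the helper names `parse_env` and `unset_keys` are made up for this post, the parsing is a simple KEY=VALUE reader and not necessarily how docker compose reads the file, and it assumes it is run from the project folder where .env or .env.local lives.

```python
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def unset_keys(values: dict[str, str]) -> list[str]:
    """Return the names of variables whose value is empty."""
    return sorted(k for k, v in values.items() if not v)


if __name__ == "__main__":
    # Check both file names mentioned above; either may be missing.
    for name in (".env", ".env.local"):
        path = Path(name)
        if not path.is_file():
            print(f"{name}: not found")
            continue
        values = parse_env(path.read_text())
        url = values.get("OLLAMA_API_BASE_URL", "<missing>")
        print(f"{name}: OLLAMA_API_BASE_URL={url!r}")
        print(f"{name}: empty variables: {unset_keys(values)}")
```

If OLLAMA_API_BASE_URL shows up with the right value here but the app still does not see it, the problem is more likely in how the compose file passes the env file to the container than in the file itself.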

Ollama is up and running. It’s also in use with Open WebUI, connecting from another server, pointing to the Ollama URL.

I’ve seen similar posts, one of which suggested installing an earlier commit. I only know how to install the latest commit, and would need pointers to install an earlier one.

Would love to get this up and running and be a part of this community. Please help.