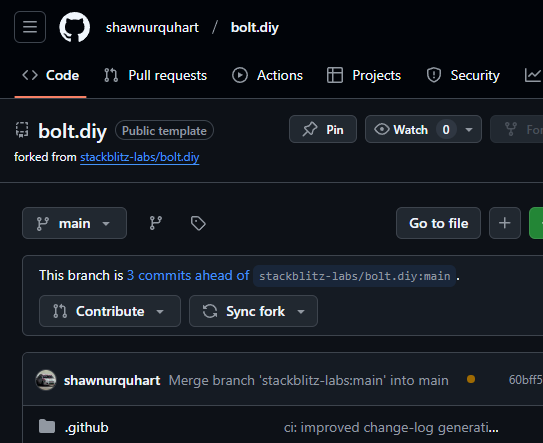

Hi Bolt-ers…

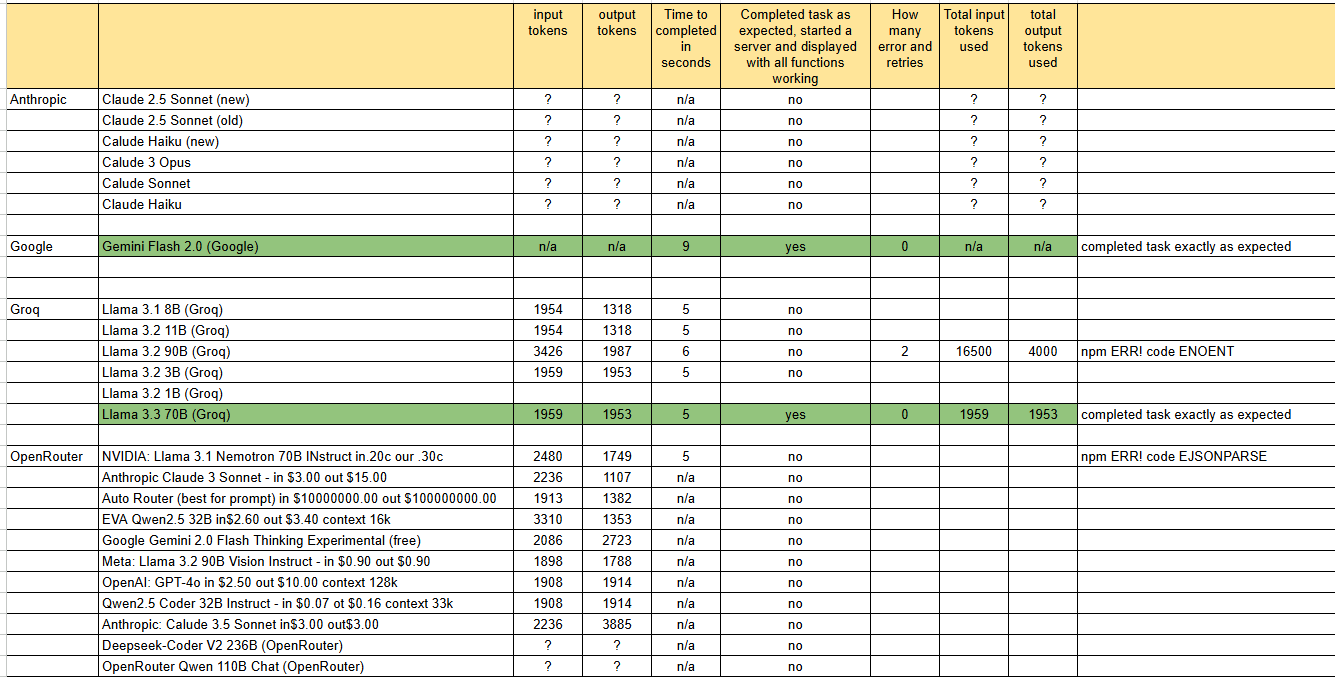

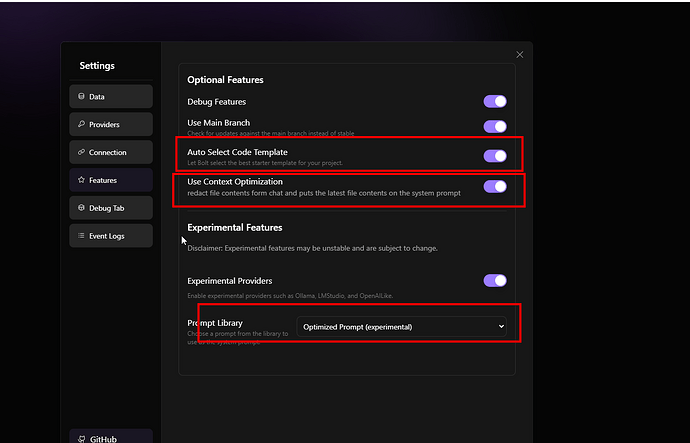

I’ve been looking for the sweet spot when trialing a slightly harder prompt. It worked first time in Bolt.new, but I’ve had very little success in Bolt.diy, depending on the provider/language model combination. So I thought I’d put the results into a table and display them. This is a WIP, so please be gentle with me. If you have some good input, I’d appreciate that too.

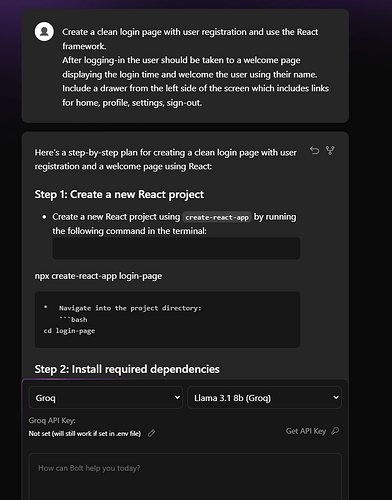

My standard prompt is as follows…

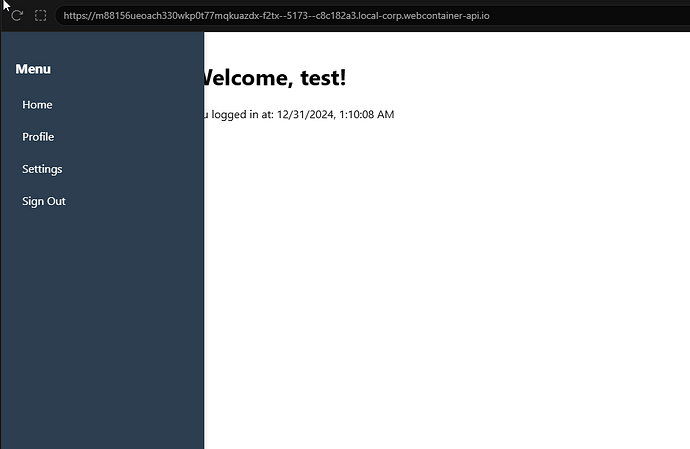

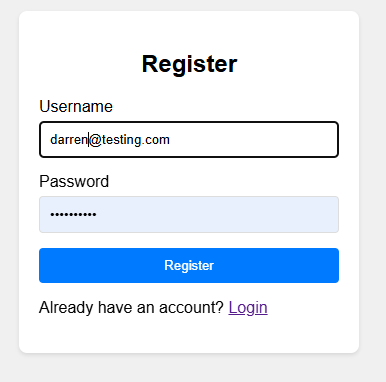

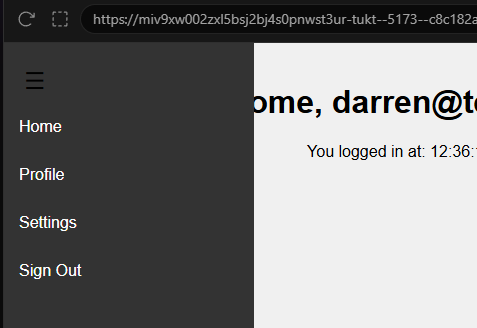

Create a clean login page with user registration and use the React framework.

After logging-in the user should be taken to a welcome page displaying the login time and welcome the user using their name.

Include a drawer from the left side of the screen which includes links for home, profile, settings, sign-out.

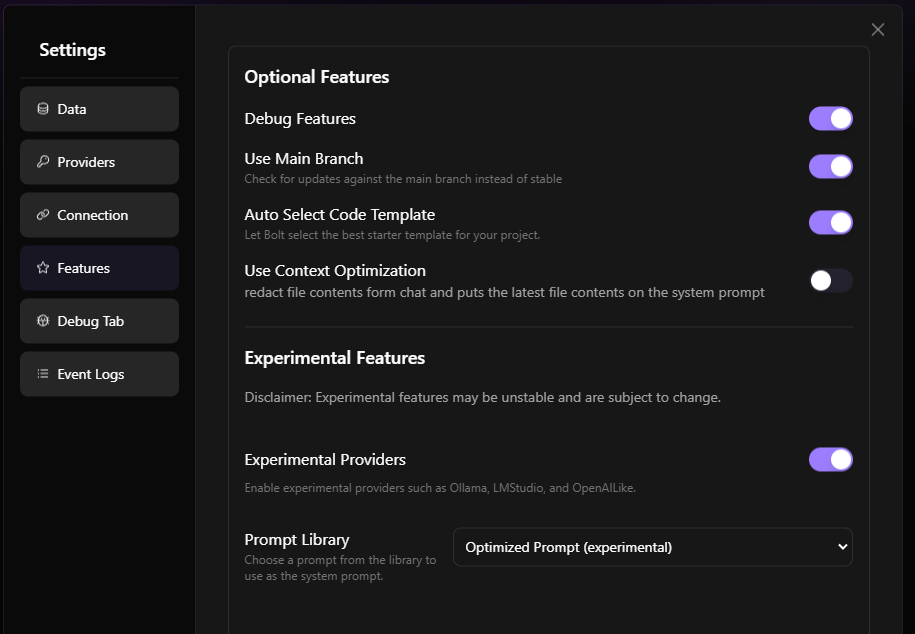

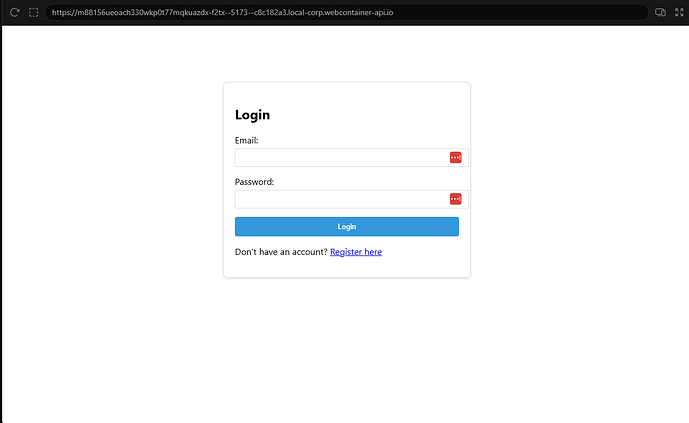

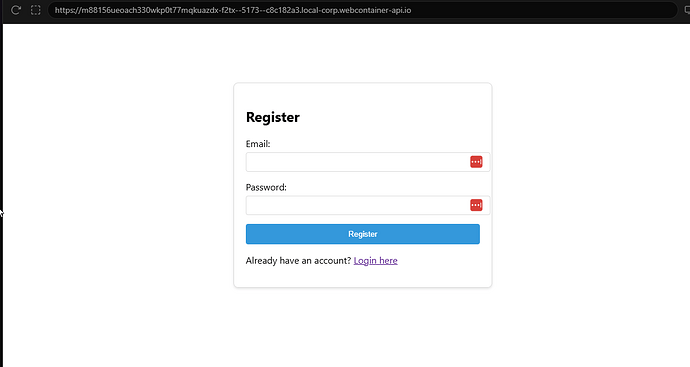

Screenshots of outcome where applicable…

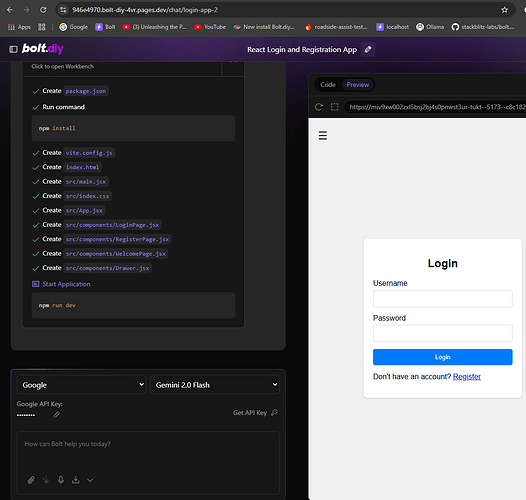

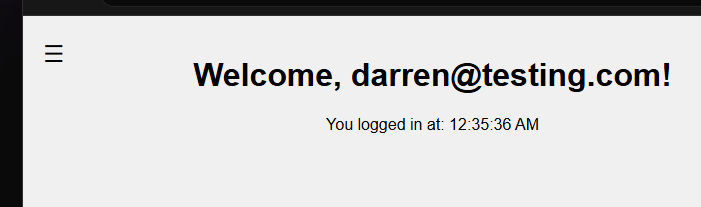

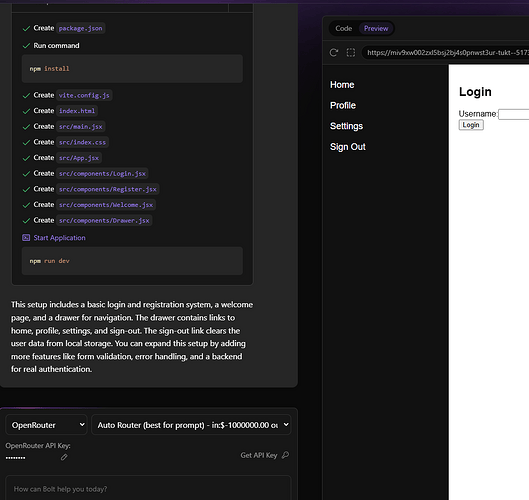

Google - Gemini 2.0 Flash

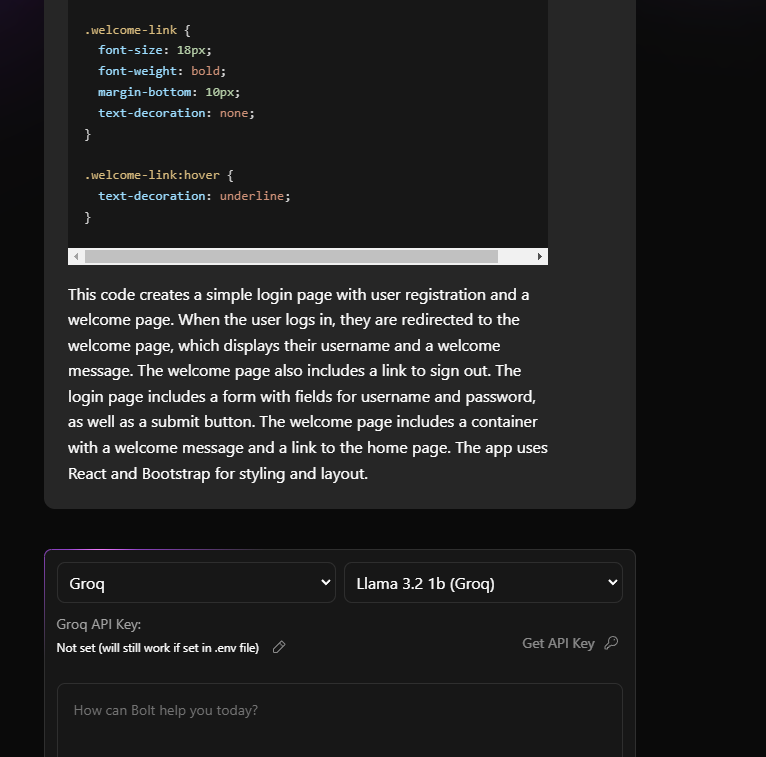

Some output from Bolt for context also.

Okay, I will create a login page with user registration using React, along with a welcome page that displays the login time and user’s name. Additionally, I’ll include a drawer from the left side with navigation links.

Here’s the plan:

1. Project Setup: Initialize a new React project using Vite.

2. Authentication Logic: Implement basic authentication logic using local storage to store user data.

3. Login and Registration Components: Create separate components for login and registration forms.

4. Welcome Page: Create a welcome page component that displays the user’s name and login time.

5. Drawer Component: Create a reusable drawer component for navigation.

6. Routing: Set up routing to navigate between login, registration, and welcome pages.

7. Styling: Add basic styling for a clean look.

Now, let’s start with the implementation.
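For anyone wondering what step 2 of that plan (basic authentication backed by local storage) might look like, here is a minimal sketch. It is not the code Bolt generated. The key names, the `KeyValueStore` interface and the in-memory store are my own assumptions so the example can run outside a browser; in a real page you would pass `window.localStorage` instead. Passwords are stored in plain text here purely for demonstration.

```typescript
// Minimal key-value contract. The browser's localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

interface User {
  name: string;
  email: string;
  password: string; // plain text: demo only, never do this in production
}

interface Session {
  name: string;
  email: string;
  loginTime: string; // ISO timestamp shown on the welcome page
}

// Hypothetical storage keys, chosen for this sketch.
const USERS_KEY = "demo.users";
const SESSION_KEY = "demo.session";

function loadUsers(store: KeyValueStore): User[] {
  const raw = store.getItem(USERS_KEY);
  return raw ? (JSON.parse(raw) as User[]) : [];
}

// Returns false if the email is already registered.
function register(store: KeyValueStore, user: User): boolean {
  const users = loadUsers(store);
  if (users.some((u) => u.email === user.email)) return false;
  store.setItem(USERS_KEY, JSON.stringify([...users, user]));
  return true;
}

// Returns the new session, or null when the credentials do not match.
function login(
  store: KeyValueStore,
  email: string,
  password: string,
  now: Date = new Date()
): Session | null {
  const match = loadUsers(store).find(
    (u) => u.email === email && u.password === password
  );
  if (!match) return null;
  const session: Session = {
    name: match.name,
    email: match.email,
    loginTime: now.toISOString(),
  };
  store.setItem(SESSION_KEY, JSON.stringify(session));
  return session;
}

function currentSession(store: KeyValueStore): Session | null {
  const raw = store.getItem(SESSION_KEY);
  return raw ? (JSON.parse(raw) as Session) : null;
}

// Sign-out simply clears the stored session.
function logout(store: KeyValueStore): void {
  store.removeItem(SESSION_KEY);
}

// In-memory stand-in for localStorage so the sketch also runs in Node.
function createMemoryStore(): KeyValueStore {
  const data = new Map<string, string>();
  return {
    getItem: (key) => data.get(key) ?? null,
    setItem: (key, value) => {
      data.set(key, value);
    },
    removeItem: (key) => {
      data.delete(key);
    },
  };
}
```

The welcome page would then read `currentSession(...)` to greet the user by name and show `loginTime`, and the drawer's sign-out link would call `logout(...)`.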

Not a bad outcome.

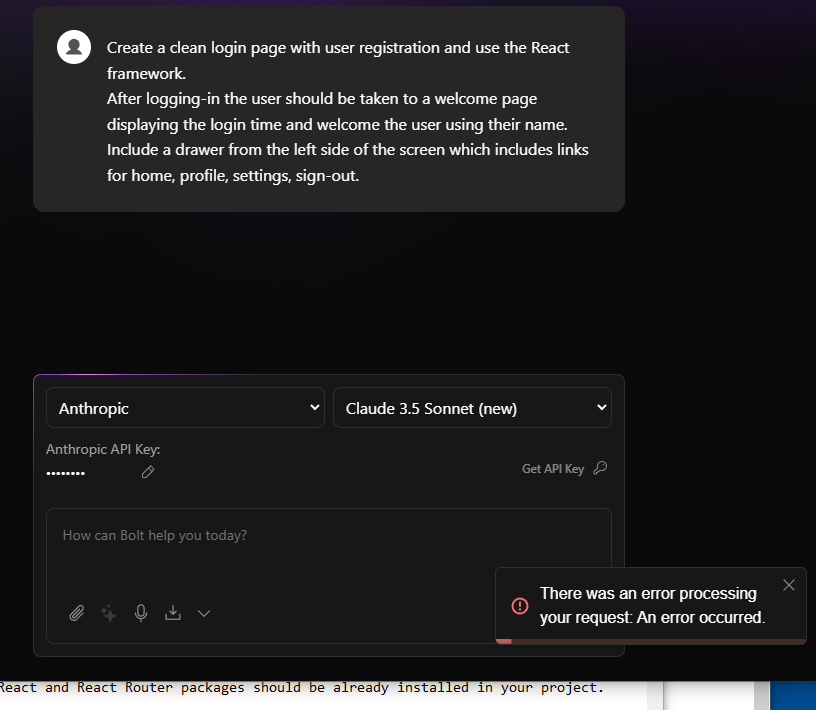

Anthropic - Claude 3.5 Sonnet (new)

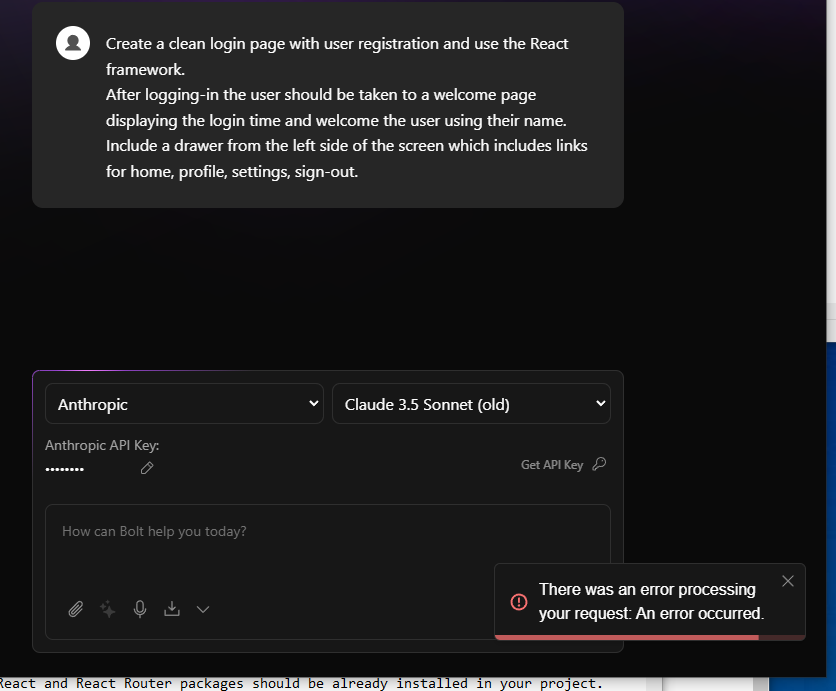

Anthropic - Claude 3.5 Sonnet (old)

Got the same for Claude 3 Haiku (new) and the other 3.

Groq - Llama 3.1 8B

Writes the code but won’t attempt running commands to set up the server and display the result.

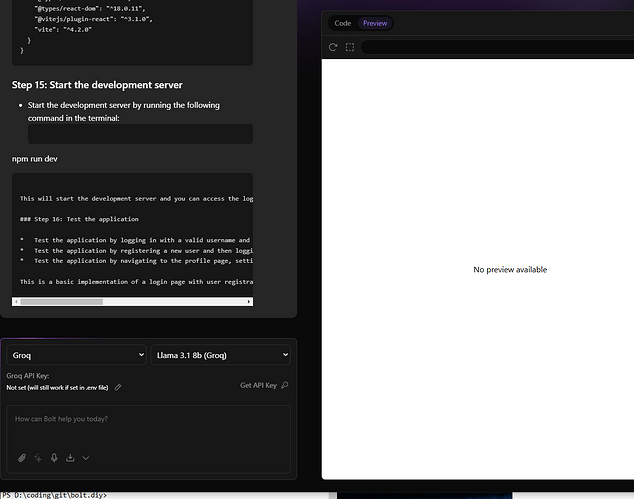

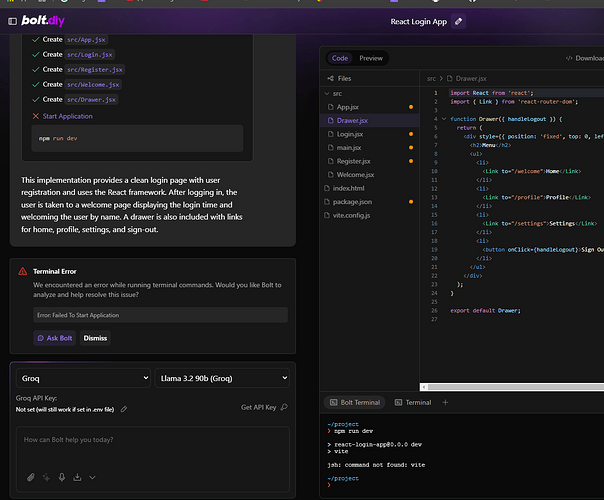

Groq - Llama 3.2 90B

Similar, but it failed only at executing npm and setting up the environment. That might be an easy fix and worth further experimentation, although this option is heavy on tokens and therefore expensive, considering what you’re getting is not magic.

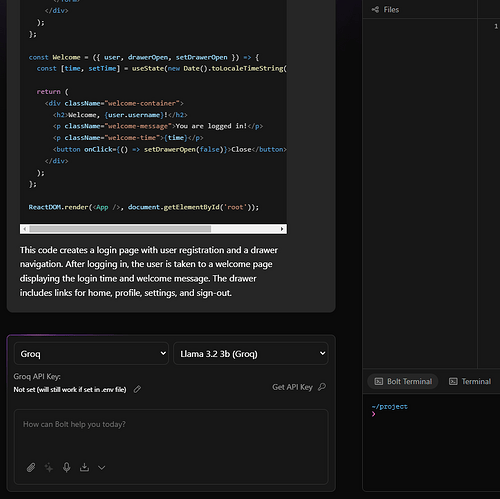

Groq - Llama 3.2 3B

Similar - gives the files but nothing else…

Groq - Llama 3.2 1B

Yeah - don’t waste your time…

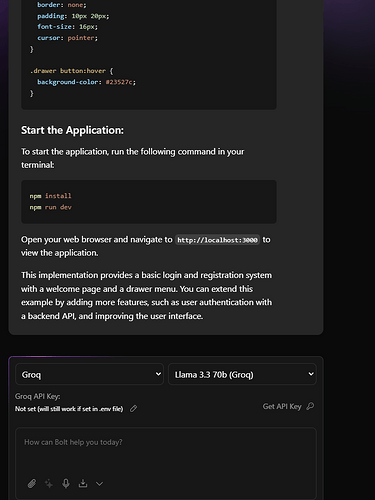

Groq - Llama 3.3 70B

Much the same but at least you get some advice on running a local server also. LOL.

OpenRouter - Anthropic Claude 3 Sonnet - in $3.00 out $15.00

Don’t bother…

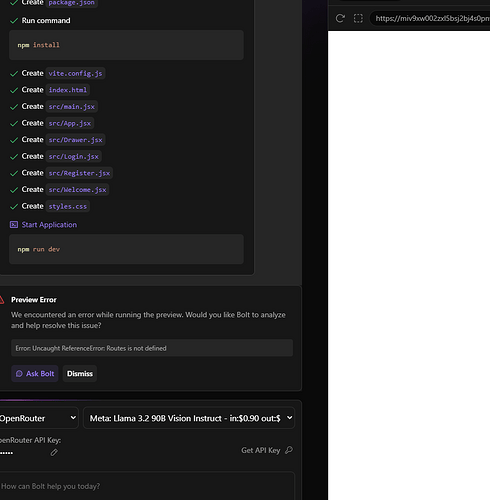

OpenRouter - Auto Router (best for prompt) in $10000000.00 out $100000000.00

I don’t know what all the $$$$$ is about because the actual cost I just checked on my OpenRouter account and it was minimal and essentially the same as the one before…but it almost delivered.
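A quick way to sanity-check those figures is to work the cost out yourself. The sketch below assumes the "in" and "out" prices are US dollars per million tokens, which is how OpenRouter lists its pricing. The token counts are made up for illustration and are not from my account.

```typescript
interface Pricing {
  inputPerMillion: number; // USD per 1,000,000 prompt tokens (assumed unit)
  outputPerMillion: number; // USD per 1,000,000 completion tokens (assumed unit)
}

function estimateCostUsd(
  pricing: Pricing,
  inputTokens: number,
  outputTokens: number
): number {
  return (
    (inputTokens / 1_000_000) * pricing.inputPerMillion +
    (outputTokens / 1_000_000) * pricing.outputPerMillion
  );
}

// Prices as listed above for Claude 3 Sonnet on OpenRouter.
const claude3Sonnet: Pricing = { inputPerMillion: 3.0, outputPerMillion: 15.0 };

// Hypothetical usage for one prompt: 10,000 tokens in, 2,000 tokens out.
const exampleCost = estimateCostUsd(claude3Sonnet, 10_000, 2_000);
console.log(exampleCost.toFixed(4)); // prints "0.0600"
```

So even at $15.00 per million output tokens, a single run of a prompt like this costs cents, which lines up with the minimal charge I saw.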

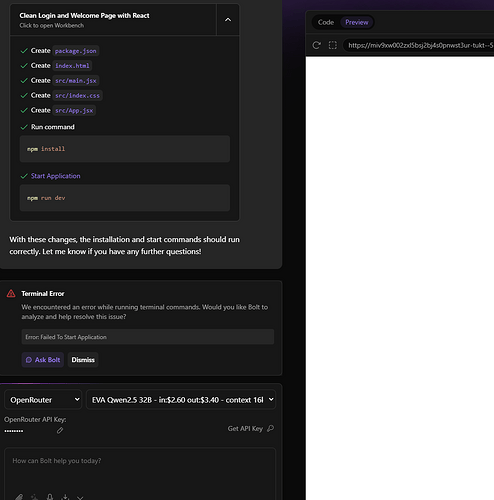

OpenRouter - EVA Qwen2.5 32B - in $2.60 out $3.40 context 16k

Let’s see if someone can serve a Qwen 2.5 32B LLM and actually produce the goods…nope.

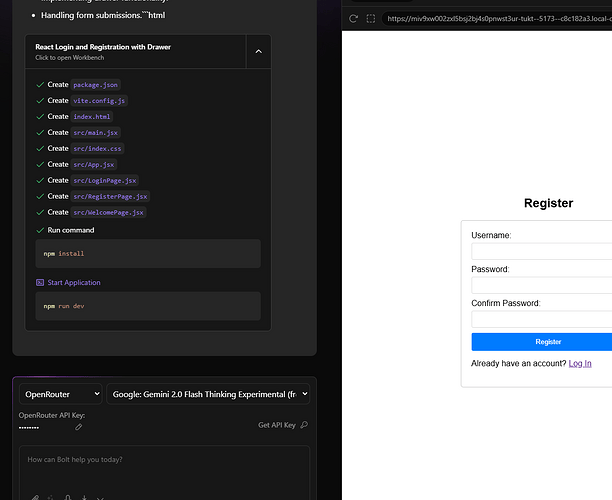

OpenRouter - Google Gemini 2.0 Flash Thinking Experimental (free)

Nearly but not quite. Operationally it doesn’t work but a little effort and it’d be there.

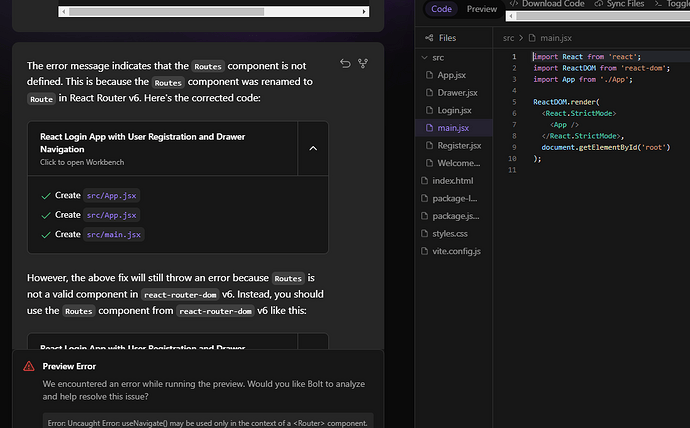

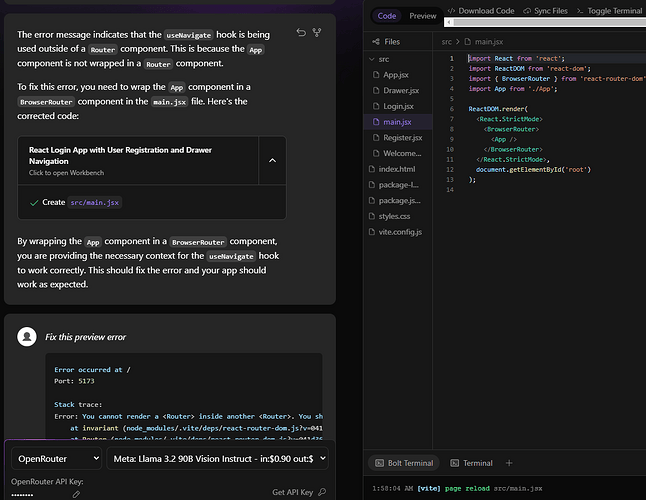

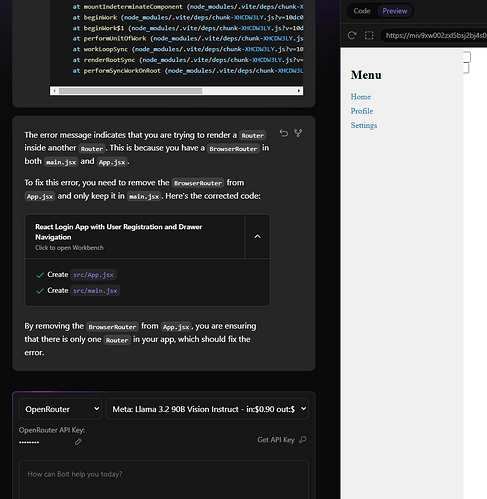

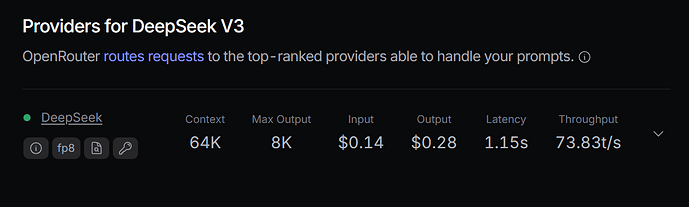

OpenRouter - Meta: Llama 3.2 90B Vision Instruct - in $0.90 out $0.90

Most of the way there but failed.

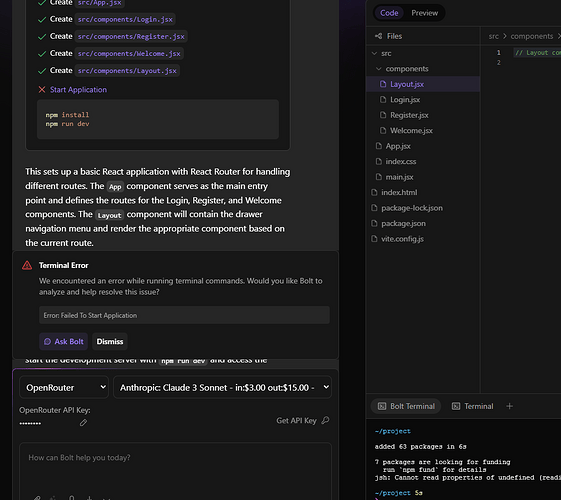

Interesting to see some of the errors as they come out…

It has worked out the error but failed on the initial build component - almost there though.

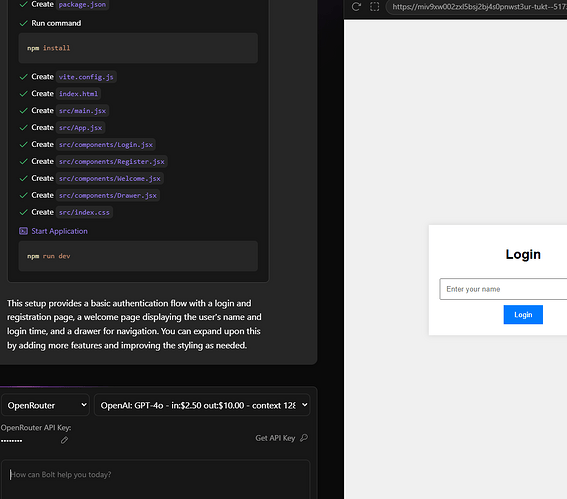

OpenRouter - OpenAI: GPT-4o - in $2.50 out $10.00 context 128k

Got most of the way there but missing operational components.

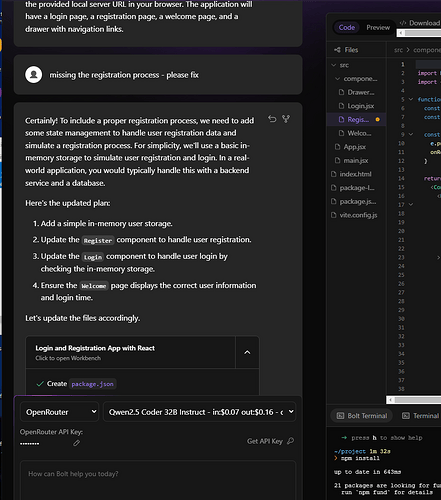

OpenRouter - Qwen2.5 Coder 32B Instruct - in $0.07 out $0.16 context 33k

Nearly there on first try…underwhelming tho.

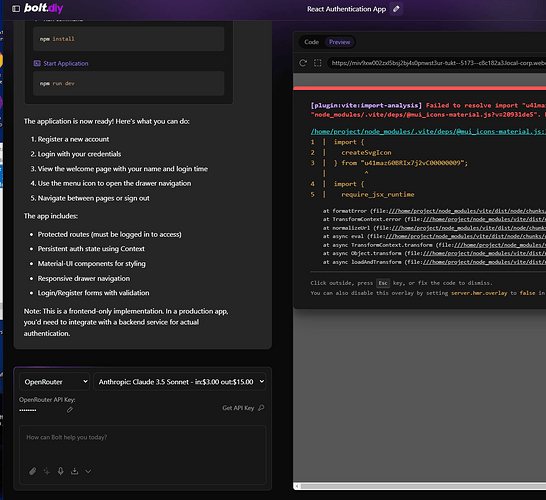

OpenRouter - Anthropic: Claude 3.5 Sonnet - in $3.00 out $3.00

Not quite.

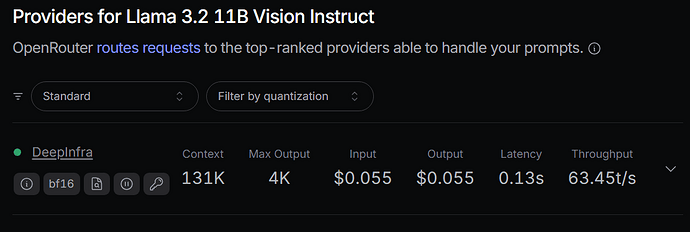

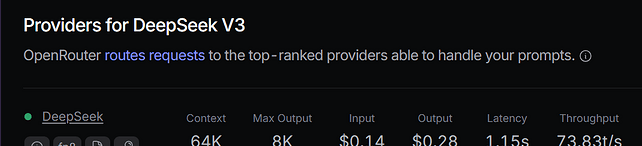

OpenRouter - Deepseek-Coder V2 236B (OpenRouter)

Yeah - I expected more.

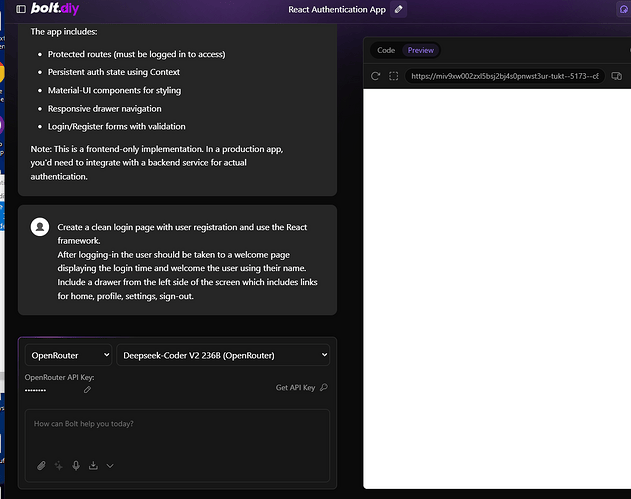

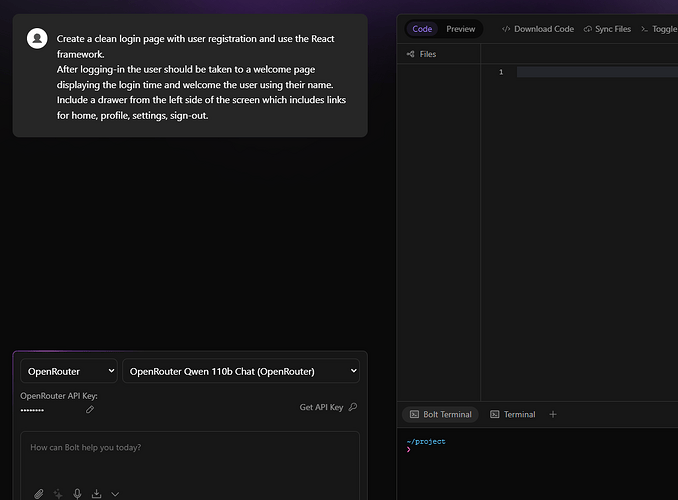

OpenRouter - OpenRouter Qwen 110B Chat (OpenRouter)

Nothing but tumbleweeds…

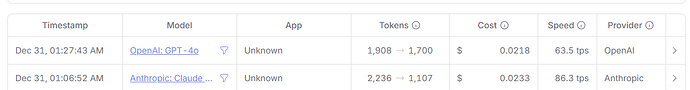

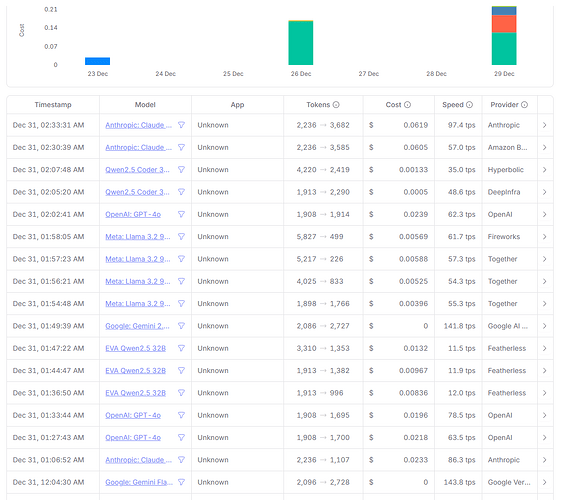

And a quick look at my OpenRouter traffic and token usage.

Yeah, it seems like a lot of stuffing around - but I hope this helps a few people out there. So many of these combinations provide very little in return. I’d love to get some feedback from anyone that’s had better results. It would have been more enjoyable to hit my head against the wall for 4hrs. But, that would have just woken everyone up. Haha.

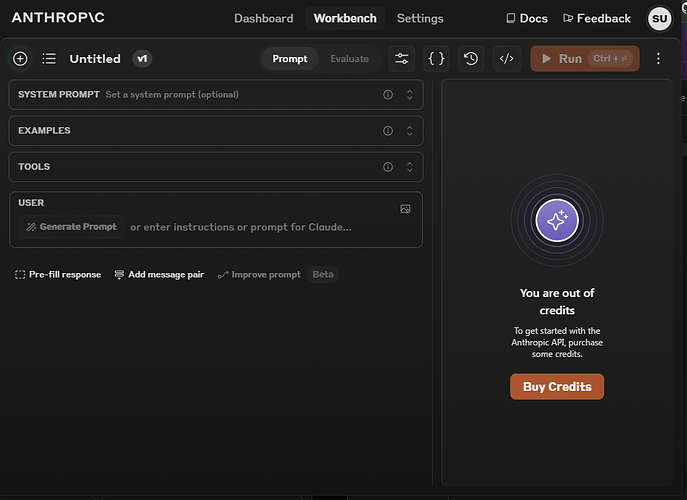

Actually I may have had some errors due to running out of Anthropic credit - bugger. I didn’t go back to check.

And I’d been trying to keep a spreadsheet on outcomes. It’s not as accurate as I would have hoped but the detail isn’t as important as the final outcome.
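If a spreadsheet feels like overkill, a small typed record per trial works too. This is only a sketch: the `Outcome` labels are my own rough categories (not something Bolt.diy reports), and the two sample rows reuse comments from this post.

```typescript
// Rough categories, chosen for this sketch.
type Outcome = "worked" | "partial" | "failed";

interface TrialResult {
  provider: string;
  model: string;
  outcome: Outcome;
  note: string;
}

// Renders the results as a markdown table for pasting into a post.
function toMarkdownTable(rows: TrialResult[]): string {
  const header = [
    "| Provider | Model | Outcome | Note |",
    "| --- | --- | --- | --- |",
  ];
  const body = rows.map(
    (r) => `| ${r.provider} | ${r.model} | ${r.outcome} | ${r.note} |`
  );
  return [...header, ...body].join("\n");
}

// Two rows based on the comments above; the outcome labels are my reading.
const results: TrialResult[] = [
  {
    provider: "Google",
    model: "Gemini 2.0 Flash",
    outcome: "worked",
    note: "Not a bad outcome",
  },
  {
    provider: "Groq",
    model: "Llama 3.1 8B",
    outcome: "partial",
    note: "Writes the code but won't run commands",
  },
];

console.log(toMarkdownTable(results));
```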

Anyway. I really hope this helps a few out there. Post your best results below please.

I think my best result right now is Google’s Gemini 2.0 Flash, the clear winner…

All the best everyone, and I hope you all get something positive out of using Bolt.diy. It’s going to be amazing if used correctly.