[NOTE: This post will be updated with more details over the week, and I will be making a video on common issues soon!]

Since everyone is running bolt.diy on different systems and with different providers, and because bolt.diy is still in its infancy, many people will run into issues getting it up and running and working as expected!

Before posting an issue, please read through these common issues and how to dig into each of them a bit deeper:

- “There was an error processing this request”

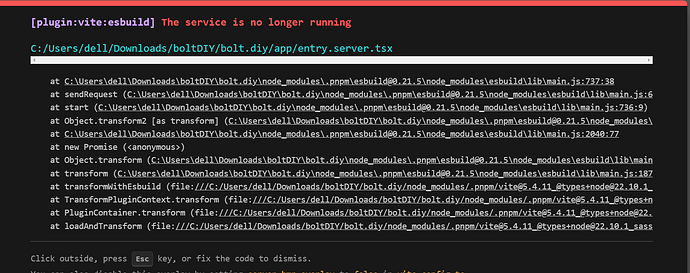

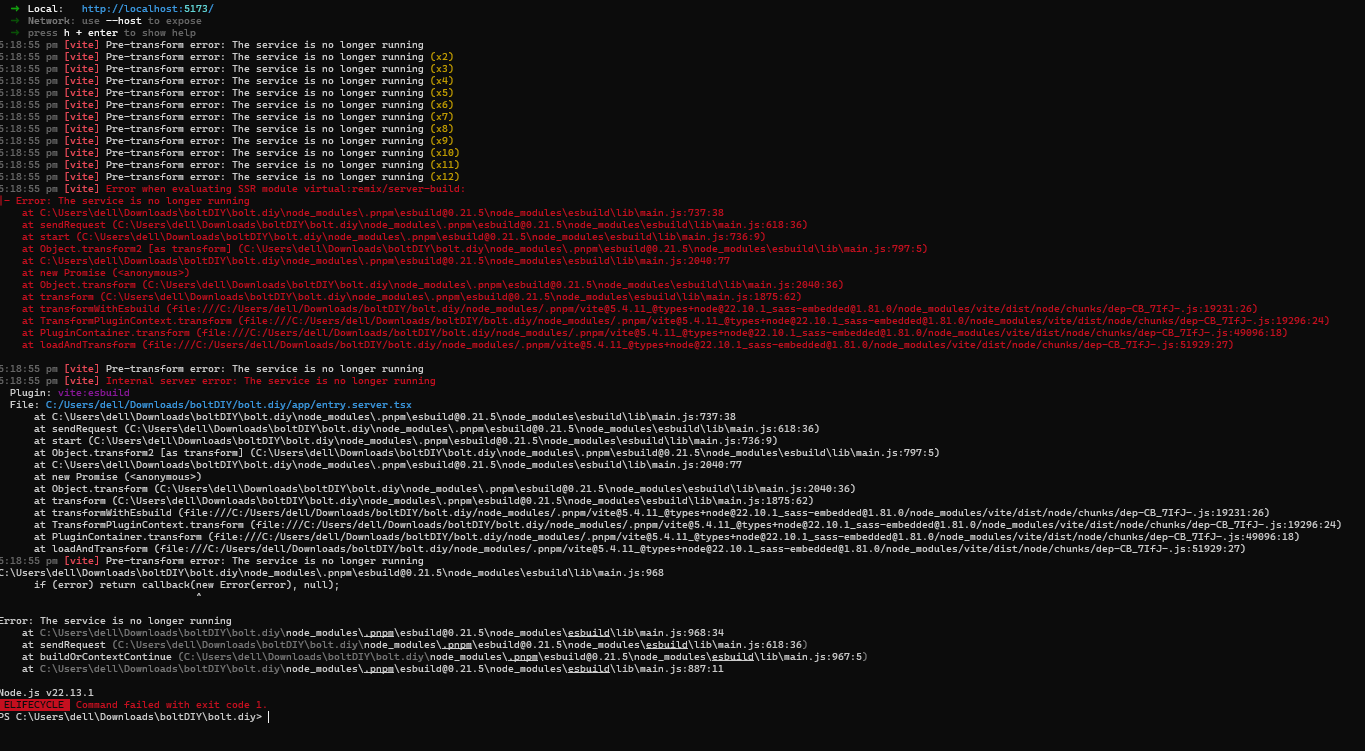

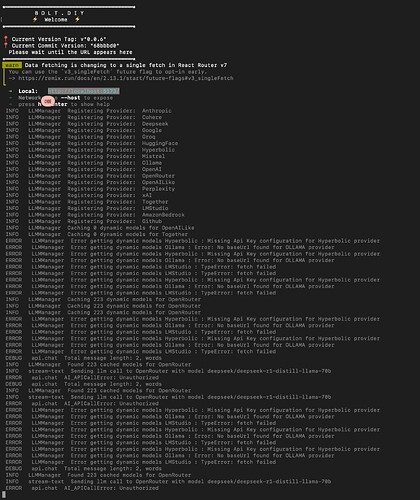

If you see this error in bolt.diy, the application is just telling you that there is a problem at a high level, which could mean a number of different things. To find the actual error, please check BOTH the terminal where you started the application (with Docker or pnpm) and the developer console in the browser. In most browsers, you can open the developer console by pressing F12, or by right-clicking anywhere on the page and selecting “Inspect”. Then open the “Console” tab.

I’m not giving a solution per se here, but you will almost certainly find a more helpful error message in one of these two places!
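If the terminal output is long, it can help to pull out just the lines that look like the underlying error. Below is a minimal sketch in Python, assuming you have saved the terminal output to a text file; the keyword list in `ERROR_PATTERN` and the function name `find_error_lines` are hypothetical choices for illustration, not anything bolt.diy provides, so adjust them to what you actually see in your logs.

```python
import re
import sys

# Hypothetical keyword list: words that often mark the real error in
# Node/Docker terminal output. Tune this to your own logs.
ERROR_PATTERN = re.compile(r"error|exception|fail|unauthorized|econnrefused", re.IGNORECASE)


def find_error_lines(log_text: str, context: int = 1) -> list[str]:
    """Return lines matching ERROR_PATTERN plus `context` lines after each match."""
    lines = log_text.splitlines()
    keep: set[int] = set()
    for i, line in enumerate(lines):
        if ERROR_PATTERN.search(line):
            # Keep the matching line and the next few lines (stack trace, details).
            keep.update(range(i, min(i + context + 1, len(lines))))
    return [lines[i] for i in sorted(keep)]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python find_errors.py <saved-log-file>
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        for line in find_error_lines(f.read()):
            print(line)
```

Running it as `python find_errors.py bolt.log` (both file names are just examples) prints only the suspicious lines, which is usually the part worth pasting into an issue. This does not replace checking the browser console, since errors raised in the browser never reach the terminal.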

- x-api-key header missing

I’ve seen this error a couple of times, and for some reason just restarting my Docker container has fixed it. It seems to be Ollama-specific. Another thing to try is running bolt.diy with whichever of Docker or pnpm you didn’t use the first time. If anyone can provide more details on this one specifically, I would appreciate it!

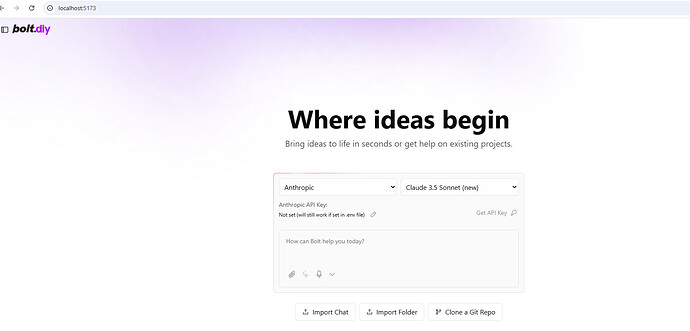
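Since this error seems to be tied to Ollama, one extra thing worth ruling out is whether Ollama is actually reachable and has models installed. The following is a minimal sanity-check sketch, not a fix for the header error itself. It assumes Ollama is listening on its default address `http://localhost:11434` and uses Ollama’s `/api/tags` endpoint, which lists installed models; the function names are hypothetical. If bolt.diy runs inside Docker, `localhost` inside the container is not your host machine, so the address bolt.diy uses for Ollama may differ from the one you test here.

```python
import json
import urllib.request

# Assumption: Ollama's default local address. Change this if your setup differs.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: str) -> list[str]:
    """Extract model names from the JSON body returned by Ollama's /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def check_ollama(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Return the installed model names, or raise if Ollama cannot be reached."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print("Ollama reachable, models:", check_ollama())
    except (OSError, ValueError) as exc:
        # URLError and timeouts are OSError subclasses; ValueError covers a
        # non-JSON response from whatever is listening on that port.
        print("Could not reach Ollama:", exc)
```

An empty model list or a connection error points at the Ollama side rather than at bolt.diy, which is a useful detail to include if you report this issue.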

- Blank/no preview

I promise you that we are constantly testing new PRs coming into bolt.diy, and the preview is core functionality, so the application is not broken! When you get a blank preview or no preview at all, it is generally because the LLM hallucinated bad code or incorrect commands. We are working on making this more transparent so the cause is obvious! Sometimes the error will appear in the developer console too, so check there as well.

To get blank previews as rarely as possible, use one of these models: the old Claude 3.5 Sonnet, GPT-4o, Gemini 2.0, Qwen 2.5 Coder 32b, or DeepSeek Coder V2.

- Everything works but the results are bad

As quickly as the gap is closing between open-source and massive closed-source models, you’re still going to get the best results with very large models like GPT-4o, Claude 3.5 Sonnet, and DeepSeek Coder V2 236b. This is one of the big tasks we have at hand: figuring out how to prompt better, use agents, and improve the platform as a whole so it works better even for smaller local LLMs!

- Received structured exception #0xc0000005: access violation

If you are getting this, you are probably on Windows. The fix is generally to update the Visual C++ Redistributable: