Hey, make sure you have the correct API key and have bought credits with this provider. OpenAI, DeepSeek, etc. are not free; you need to buy credits.

You can use Google for free, as I show in my install videos for bolt.diy on YouTube.

Hey, thanks for responding. But I do have the API key (I think it's the right one); for example, my OpenRouter API key works in the VS Code extension. I used this API key.
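One way to rule the key in or out is to test it directly against the provider, outside bolt.diy. The minimal sketch below assumes OpenRouter's key-info endpoint `GET https://openrouter.ai/api/v1/auth/key` (as described in OpenRouter's docs at the time of writing; check the current docs) and a hypothetical environment variable `OPEN_ROUTER_API_KEY` holding the same key you pasted into bolt.diy. It only shows whether the key is accepted and its per-key usage/limit; it does not show your account balance.

```python
import json
import os
import urllib.error
import urllib.request

# Assumption: OpenRouter's key-info endpoint, per their docs at the time of writing
KEY_INFO_URL = "https://openrouter.ai/api/v1/auth/key"


def describe_key(payload):
    """Turn the key-info JSON into a one-line summary."""
    data = payload.get("data", {})
    limit = data.get("limit")  # per-key spending limit; None means no limit set on this key
    usage = data.get("usage", 0)
    cap = "no per-key limit" if limit is None else f"limit {limit}"
    return f"key accepted: usage {usage}, {cap}"


def check_openrouter_key(api_key):
    """Ask OpenRouter about the key; an HTTP error (e.g. 401) means it was not accepted."""
    req = urllib.request.Request(
        KEY_INFO_URL, headers={"Authorization": f"Bearer {api_key}"}
    )
    try:
        with urllib.request.urlopen(req, timeout=15) as resp:
            return describe_key(json.load(resp))
    except urllib.error.HTTPError as err:
        return f"key rejected: HTTP {err.code}"


if __name__ == "__main__":
    # Hypothetical variable name: export the exact key you gave bolt.diy under it
    print(check_openrouter_key(os.environ["OPEN_ROUTER_API_KEY"]))
```

If the key is accepted here but bolt.diy still fails, the problem is more likely in how bolt.diy is configured (for example, the key entered for a different provider) than in the key itself.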

I have a similar issue to @MarkAslaksen and get the same error message: "There was an error processing your request: Custom error: Failed after 3 attempts. Last error: Internal Server Error"

The PowerShell console shows the following output after I submit the chat:

INFO LLMManager Found 3 cached models for Ollama

INFO stream-text Sending llm call to Ollama with model llama2:latest

DEBUG Ollama Base Url used: http://127.0.0.1:11434

ERROR api.chat AI_RetryError: Failed after 3 attempts. Last error: Internal Server Error

When I inspect the page I see the following output in the console:

I am running this system on Windows 11 with Ollama running on the same machine. I have checked that Ollama is running and received the expected response that it is up and ready. I have pointed the Ollama_Base_URL to the default (testing both localhost and 127.0.0.1 with port 11434), and I have also tried my computer's IP address and 0.0.0.0; all of them fail. I have set Ollama's environment variable OLLAMA_HOST to 0.0.0.0, which was a fix suggested in a Reddit post I came across. I also set the variable to my PC's IP address, another suggested fix, which did not work either. It is also worth mentioning that Bolt is not running through Docker on my computer.

I am very new to this, so may I ask that you phrase any responses in layman's terms to help me get on track? I do have some technical understanding, but by others' standards I am a noob.

I have followed 3 different guides to install this, including the official one on GitHub and the one below:

After many reinstalls and trying every possible fix I could find through research, I am utterly lost as to what is causing this issue. It's the same issue each time, regardless of how I installed Bolt.

Any assistance in this regard will be truly appreciated.
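For an Ollama "Internal Server Error" like the one above, a useful first step is to talk to Ollama directly, bypassing bolt.diy. The sketch below assumes Ollama's REST API on the base URL from the log (`http://127.0.0.1:11434`): it lists installed models via `GET /api/tags`, then asks for one short, non-streaming reply via `POST /api/generate`. If that request also fails with HTTP 500, the error most likely comes from Ollama itself (for example, a problem loading the model) rather than from bolt.diy.

```python
import json
import urllib.error
import urllib.request

BASE_URL = "http://127.0.0.1:11434"  # the "Ollama Base Url" bolt.diy logged above
MODEL = "llama2:latest"              # the model name from the log above


def model_names(tags_payload):
    """Extract model names from the JSON returned by Ollama's GET /api/tags."""
    return [m["name"] for m in tags_payload.get("models", [])]


def request_json(url, payload=None):
    """GET url (or POST it when a payload is given) and decode the JSON reply."""
    data = json.dumps(payload).encode() if payload is not None else None
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)


def check_ollama(base_url=BASE_URL, model=MODEL):
    names = model_names(request_json(f"{base_url}/api/tags"))
    print("models Ollama reports:", names)
    if model not in names:
        print(f"{model!r} is not installed; try: ollama pull {model}")
        return
    try:
        # One short generation straight against Ollama, with no bolt.diy involved
        reply = request_json(
            f"{base_url}/api/generate",
            {"model": model, "prompt": "Say hi.", "stream": False},
        )
        print("generation OK:", reply.get("response", "")[:80])
    except urllib.error.HTTPError as err:
        # An error here points at Ollama itself rather than bolt.diy
        print(f"Ollama returned HTTP {err.code}: {err.read().decode(errors='replace')}")


if __name__ == "__main__":
    check_ollama()
```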

Hi @Arbiter,

Take a look at Videos / Tutorial / Helpful Content.

There is also a video from me on how to use bolt.diy with Ollama on Windows. If this doesn't work and you still have problems, please open a separate topic in [bolt.diy] Issues and Troubleshooting so we can investigate.

Hi @leex279,

Thank you! I will have a look. I appreciate the prompt reply. If I can't get it working, I will make a specific post.

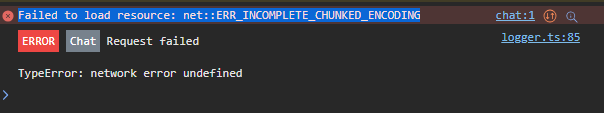

I'm getting "Failed to load resource: net::ERR_INCOMPLETE_CHUNKED_ENCODING" in my devtools, and in the terminal I'm getting: INFO stream-text Sending llm call to Groq with model llama-3.3-70b-versatile

DEBUG api.chat usage {"promptTokens":null,"completionTokens":null,"totalTokens":null}

I can't seem to find any info on these anywhere.
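`net::ERR_INCOMPLETE_CHUNKED_ENCODING` means the browser's streamed response ended before the final chunk arrived. One plausible (unconfirmed) reading of the null token counts is that the stream from the provider never completed either. A rough way to check that in isolation is to stream from Groq directly. The sketch below assumes Groq's OpenAI-compatible endpoint `https://api.groq.com/openai/v1/chat/completions`, the model name from the log, and a key in a `GROQ_API_KEY` environment variable (the variable name is an assumption). It counts content chunks and reports whether the closing `data: [DONE]` line arrived.

```python
import json
import os
import urllib.request

GROQ_URL = "https://api.groq.com/openai/v1/chat/completions"  # OpenAI-compatible endpoint
MODEL = "llama-3.3-70b-versatile"  # model name taken from the log above


def summarize_sse(lines):
    """Count content deltas in an OpenAI-style SSE stream and note whether [DONE] arrived."""
    deltas, done = 0, False
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            done = True
            break
        event = json.loads(data)
        choices = event.get("choices") or []
        if choices and choices[0].get("delta", {}).get("content"):
            deltas += 1
    return {"deltas": deltas, "done": done}


def stream_groq(prompt):
    body = json.dumps({
        "model": MODEL,
        "stream": True,
        "messages": [{"role": "user", "content": prompt}],
    }).encode()
    req = urllib.request.Request(GROQ_URL, data=body, headers={
        "Authorization": f"Bearer {os.environ['GROQ_API_KEY']}",  # assumed variable name
        "Content-Type": "application/json",
        "User-Agent": "bolt-diy-debug",  # explicit UA; some gateways may reject urllib's default
    })
    with urllib.request.urlopen(req, timeout=60) as resp:
        # Iterating the response yields raw byte lines as they arrive
        return summarize_sse(raw.decode("utf-8") for raw in resp)


if __name__ == "__main__":
    print(stream_groq("Say hello in five words."))
```

If this reports `"done": True` reliably, the stream from Groq itself looks intact, and the cut-off is more likely happening between bolt.diy and the browser on your machine.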

@leex279 Hi, can you help me with this?

@ayazat Did you watch my YouTube video on installing it, and do you still have this problem?

If not, please watch it, and if you still have problems, open a separate topic so we can investigate further => [bolt.diy] Issues and Troubleshooting

Yup, I did, 3 times, and did everything from the start.

@leex279 Do you suggest that I delete everything and start over, given that I have done that before and still had the same problem?

Please open a new topic so your case is handled separately; it doesn't match this one.

Alright, thank you!

Are you actually installing bolt.diy on Windows, or is it installed on Ubuntu running inside Windows? I'm not an expert, but I have Ubuntu installed from another project, so I ended up installing it on Ubuntu. I did this before finding your videos. Should I remove it from Ubuntu before following all the steps in the video?

This is native Windows in my case. You can run it directly on Ubuntu as well, with nearly the same steps. I also made a video for macOS, which should apply 1:1 to Ubuntu as well:

@akzy1991 I'm not sure whether your "Failed to load resource: net::ERR_INCOMPLETE_CHUNKED_ENCODING" issue has been resolved. I recently set up bolt.diy and got the same issue. For me, the antivirus was the problem: there was a loopback IP (127.0.0.1) configuration, and the communication was blocked by the antivirus's Network Threat Protection. Although this is not a network threat, it was blocked. After changing the configuration, it works.
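A rough self-test for the kind of interference described above: the sketch below starts a tiny server on 127.0.0.1, streams a response using chunked transfer encoding (the same mechanism the browser error refers to), and reads it back. If something on the machine truncates or blocks the stream, reading fails. It uses a random free port and Python's own HTTP client, so a pass does not rule out antivirus rules that apply only to the browser or to bolt.diy's port.

```python
import http.client
import http.server
import threading

CHUNKS = [b"hello ", b"from ", b"loopback"]


class ChunkedHandler(http.server.BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # chunked transfer encoding needs HTTP/1.1

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Transfer-Encoding", "chunked")
        self.end_headers()
        for chunk in CHUNKS:
            # Each chunk: size in hex, CRLF, the data, CRLF
            self.wfile.write(f"{len(chunk):X}\r\n".encode() + chunk + b"\r\n")
            self.wfile.flush()
        self.wfile.write(b"0\r\n\r\n")  # zero-length chunk marks the end of the body

    def log_message(self, *args):
        pass  # keep the console quiet


def loopback_stream_test(host="127.0.0.1"):
    """Serve a chunked response on a random loopback port and read it back."""
    server = http.server.ThreadingHTTPServer((host, 0), ChunkedHandler)
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection(host, port, timeout=5)
        conn.request("GET", "/")
        body = conn.getresponse().read()  # raises IncompleteRead if the stream is cut off
        conn.close()
        return body
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    try:
        print("loopback streaming OK:", loopback_stream_test().decode())
    except (OSError, http.client.HTTPException) as err:
        print("loopback streaming FAILED:", err)
```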

Just to be sure: if you can't reach your bolt.diy from anywhere other than localhost, try running it with the --host argument to expose your server:

pnpm run dev --host
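When started with `--host`, Vite usually prints the "Network" URLs itself. If that output scrolled away, or you want to check whether the server is actually reachable on your LAN address, the sketch below guesses the machine's LAN IPv4 address and tries a TCP connection to it. It assumes bolt.diy's dev server is on Vite's default port 5173; adjust `DEV_PORT` if yours differs. A failed check could mean the server wasn't started with `--host` or that a firewall is blocking the port.

```python
import socket

DEV_PORT = 5173  # assumption: the Vite dev-server port bolt.diy uses by default


def lan_ip():
    """Best guess at this machine's LAN IPv4 address; falls back to loopback."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends no packets; it only makes the OS pick
        # the outgoing interface, whose address is then read back
        sock.connect(("192.0.2.1", 80))  # 192.0.2.1 is a reserved documentation address
        return sock.getsockname()[0]
    except OSError:
        return "127.0.0.1"  # no route available
    finally:
        sock.close()


def dev_url(ip, port=DEV_PORT):
    return f"http://{ip}:{port}"


def port_open(ip, port=DEV_PORT, timeout=2.0):
    """True if something accepts TCP connections on ip:port."""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    ip = lan_ip()
    state = "reachable" if port_open(ip) else "not reachable (check --host and firewall)"
    print(dev_url(ip), state)
```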

Hey there! I'm new to bolt.diy. I tried to install it locally, but it didn't work, so I decided to host it on Cloudflare. When I type in a prompt, it just gives me an answer like ChatGPT; it doesn't open the code or preview section, and neither of them contains anything. I'm not sure what to do.