@wonderwhy.er

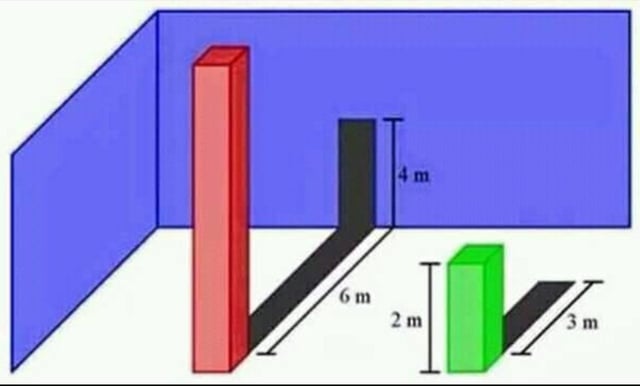

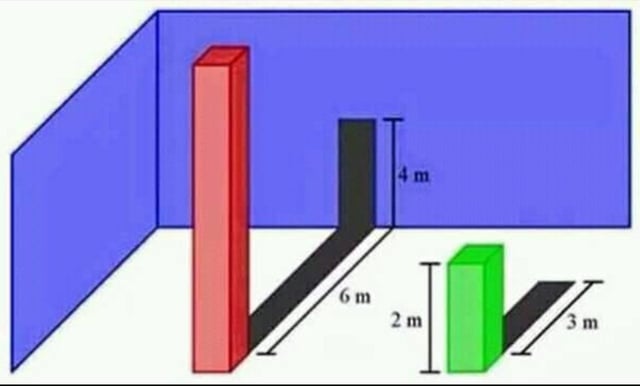

I just watched your latest video on “O1 Model First Impressions” and it was interesting. I tried it with a few vision models in oTToDev. Several said they couldn’t answer the question despite being vision models. Various Llama models failed, but what’s interesting is that Gemini Pro Vision 1.0 gave the correct answer, though it seemed more like a random guess because the reasoning it gave was awful.

If anyone wants to, please try this yourself!

Yeah, it’s an interesting problem.

It’s a good illustration of how these LLMs are smart and stupid at the same time. As if they are on a spectrum.

The worst part: if you do not have a cheap way to validate their work, you can’t be sure they are right…

So I would be very careful about trusting a model’s answers about images unless you have a cheap way to test them.

Tricky…

And yes, I saw the new Google Gemini 1206 and a few other models succeed at times. It also depends on the prompt. If you hint enough, some of them solve it.

But with a problem you can’t solve yourself, you may never know whether it got the answer right that way… For complex problems ChatGPT is a tricky tool to use.

Interesting that you tested in bolt.

I have not yet, but I’m curious to experiment more in that direction.

Have you heard of AWS Guardrails for Amazon Bedrock? It uses “Contextual Grounding” and it might just be the answer. I plan on testing this through an n8n hybrid workflow to possibly reduce or eliminate hallucinations altogether. Probably wishful thinking but should be interesting and possibly promising.

Resources:

Context Grounding from UiPath is now Production Ready!!

Use contextual grounding check to filter hallucinations in responses - Amazon Bedrock

Guardrails for Amazon Bedrock can now detect hallucinations and safeguard apps built using custom or third-party FMs | AWS News Blog
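For anyone who wants to try it outside n8n first, here is a rough sketch of how I understand a contextual grounding check can be called through the `ApplyGuardrail` API in boto3. Treat it as an assumption-laden starting point, not a tested setup: the guardrail ID and version are placeholders for a guardrail you have already created with contextual grounding enabled, and the helper names `build_grounding_content` and `is_grounded` are my own, not part of any library.

```python
from typing import Any


def build_grounding_content(source_text: str, query: str, answer: str) -> list[dict[str, Any]]:
    """Build the `content` list for a contextual grounding check.

    Each block is tagged with a qualifier: the reference material
    ("grounding_source"), the user question ("query"), and the model
    answer that should be checked ("guard_content").
    """
    def block(text: str, qualifier: str) -> dict[str, Any]:
        return {"text": {"text": text, "qualifiers": [qualifier]}}

    return [
        block(source_text, "grounding_source"),
        block(query, "query"),
        block(answer, "guard_content"),
    ]


def is_grounded(guardrail_id: str, guardrail_version: str,
                source_text: str, query: str, answer: str) -> bool:
    """Return True if the guardrail did not intervene on the answer.

    Assumes AWS credentials and a region are configured, and that the
    guardrail (hypothetical ID/version passed in) has a contextual
    grounding policy. Other policies on the same guardrail can also
    trigger an intervention.
    """
    import boto3  # imported lazily so the payload helper works without it

    client = boto3.client("bedrock-runtime")
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="OUTPUT",  # we are checking a model response, not user input
        content=build_grounding_content(source_text, query, answer),
    )
    return response["action"] != "GUARDRAIL_INTERVENED"
```

Note that this only checks an answer against reference text you supply, so it helps when you have a trusted source to ground on. It would not by itself validate an answer about an image.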

Yeah, I heard of it. It sounded interesting, but I had no time to take a look.

If you get to it, do share.

Will do, once I test it out. But I am pretty new to n8n, so still crash coursing through it.