xkeshav

December 26, 2024, 7:10am

1

My environment:

- Ubuntu 24.04
- Node 22

I set the Anthropic and OpenAI API keys in .env.local and later renamed the file to .env. The keys are set as follows:

OPENAI_API_KEY=sk-proj-xxxxx

ANTHROPIC_API_KEY=sk-ant-api03-xxxxxx
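One way to sanity-check a file like this is to parse it and list any required keys that are missing or empty. The following is only a minimal sketch, assuming plain KEY=value lines; `parseEnv` and `missingKeys` are hypothetical helper names used for illustration and are not part of bolt.diy.

```typescript
// Hypothetical helpers for illustration; not part of bolt.diy.

/** Parse simple KEY=value lines, skipping blank lines and # comments. */
function parseEnv(text: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // no '=' on this line, or the key is empty
    const key = line.slice(0, eq).trim();
    const value = line.slice(eq + 1).trim(); // keeps any later '=' in the value
    result[key] = value;
  }
  return result;
}

/** Return the names of required keys that are absent or empty. */
function missingKeys(env: Record<string, string>, required: string[]): string[] {
  return required.filter((name) => !env[name]);
}

// Placeholder values copied from the post above.
const sampleEnv = [
  "OPENAI_API_KEY=sk-proj-xxxxx",
  "ANTHROPIC_API_KEY=sk-ant-api03-xxxxxx",
].join("\n");

const parsedEnv = parseEnv(sampleEnv);
console.log(missingKeys(parsedEnv, ["OPENAI_API_KEY", "ANTHROPIC_API_KEY"])); // prints []
```

An empty list only shows that the file is well formed; it says nothing about whether the keys themselves are valid.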

I forked bolt.diy and ran npm install and npm run dev.

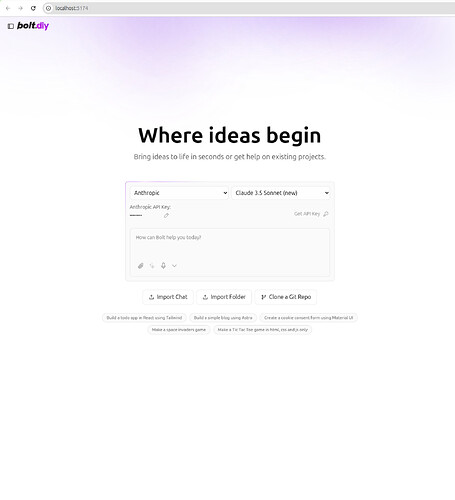

The UI opens as below.

Moreover, from the UI, I opened Settings and disabled all providers except OpenAI and Anthropic.

I also set the API key for Anthropic from the UI.

It gives a Vite error with tooManyCalls, and this also happens when the main page loads.

Some API call is made on load, and it fails.

Now when I ask something, it gives a Vite error.

So my question is:

Do I need to run something on port 1234? I searched for it and tried to change it, but that caused more errors, so I reverted the change.

You can ignore these errors; they are just warnings and will be removed in the next release.

What is that error?

Are you able to get a response from the AI?

What command are you using to start bolt?

leex279

December 26, 2024, 9:14am

3

Welcome @xkeshav ,

Please take a look here: Videos / Tutorial / Helpful Content

There are a few YouTube videos from Dustin and me on how to install it. Maybe take a look there first and come back with any open questions.


xkeshav

December 27, 2024, 7:19pm

4

Thanks, I went through the video but it does not help much. I want to understand: do I need to download Ollama, and how does this local LLM work? Why is it calling localhost:1234?

leex279

December 27, 2024, 7:42pm

5

@xkeshav it sounds like you are missing some basics here. I would recommend watching some tutorials about Ollama itself before you try to integrate it with bolt. You should be able to run models and test prompts in the terminal with Ollama before you go on.

Example:
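As a rough sketch of that kind of check, the snippet below probes the local model servers and reports which ones respond. It assumes the default addresses: Ollama on http://localhost:11434 (its `/api/tags` endpoint lists installed models) and LM Studio on http://localhost:1234 (its OpenAI-compatible `/v1/models` endpoint), which would be the port from the question. `LOCAL_SERVERS`, `isReachable`, and `probeLocalServers` are hypothetical names for illustration, not bolt.diy code.

```typescript
// Assumed default addresses; adjust them if your setup differs.
const LOCAL_SERVERS: Record<string, string> = {
  ollama: "http://localhost:11434/api/tags",   // Ollama: lists installed models
  lmstudio: "http://localhost:1234/v1/models", // LM Studio: OpenAI-compatible model list
};

// Minimal shape of the fetch function this sketch needs.
type FetchLike = (url: string) => Promise<{ ok: boolean }>;

/** Resolve to true if the URL answers with a 2xx status, false on any failure. */
async function isReachable(url: string, fetchFn: FetchLike): Promise<boolean> {
  try {
    const response = await fetchFn(url);
    return response.ok;
  } catch {
    return false; // connection refused, DNS failure, and so on
  }
}

/** Probe every configured local server and report which ones respond. */
async function probeLocalServers(fetchFn: FetchLike): Promise<Record<string, boolean>> {
  const status: Record<string, boolean> = {};
  for (const name of Object.keys(LOCAL_SERVERS)) {
    status[name] = await isReachable(LOCAL_SERVERS[name], fetchFn);
  }
  return status;
}

// Usage on Node 18+ (which ships a global fetch):
//   probeLocalServers((url) => fetch(url)).then((status) => console.log(status));
```

If a server shows as unreachable, nothing is listening on that port. That matters only if you intend to use that local provider; with only the OpenAI and Anthropic providers enabled, no local server should be required.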
