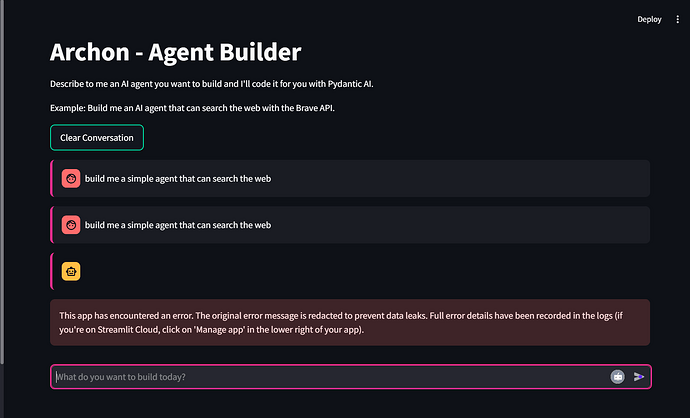

Whenever I submit a query, I get an error like this.

The terminal shows the following:

```text
16:57:25.363 advisor_agent run prompt=build me a simple agent that can search the web
INFO:openai._base_client:Retrying request to /chat/completions in 0.415305 seconds
16:57:25.369 preparing model and tools run_step=1
16:57:25.371 model request
INFO:httpx:HTTP Request: POST https://openrouter.ai/api/v1/chat/completions "HTTP/1.1 200 OK"
2025-05-16 22:27:26.050 Uncaught app execution
Traceback (most recent call last):
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\streamlit\runtime\scriptrunner\exec_code.py", line 88, in exec_func_with_error_handling
    result = func()
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\streamlit\runtime\scriptrunner\script_runner.py", line 579, in code_to_exec
    exec(code, module.__dict__)
    ~~~~^^^^^^^^^^^^^^^^^^^^^^^
  File "D:\Downloads\Research\Archon\Archon\streamlit_ui.py", line 114, in <module>
    asyncio.run(main())
    ~~~~~~~~~~~^^^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\asyncio\runners.py", line 195, in run
    return runner.run(main)
           ~~~~~~~~~~^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\asyncio\runners.py", line 118, in run
    return self._loop.run_until_complete(task)
           ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\asyncio\base_events.py", line 719, in run_until_complete
    return future.result()
           ~~~~~~~~~~~~~^^
  File "D:\Downloads\Research\Archon\Archon\streamlit_ui.py", line 93, in main
    await chat_tab()
  File "D:\Downloads\Research\Archon\Archon\streamlit_pages\chat.py", line 81, in chat_tab
    async for chunk in run_agent_with_streaming(user_input):
    ...<2 lines>...
        message_placeholder.markdown(response_content)
  File "D:\Downloads\Research\Archon\Archon\streamlit_pages\chat.py", line 36, in run_agent_with_streaming
    async for msg in agentic_flow.astream(
    ...<2 lines>...
        yield msg
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\langgraph\pregel\__init__.py", line 2007, in astream
    async for _ in runner.atick(
    ...<7 lines>...
        yield o
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\langgraph\pregel\runner.py", line 527, in atick
    _panic_or_proceed(
    ~~~~~~~~~~~~~~~~~^
        futures.done.union(f for f, t in futures.items() if t is not None),
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
        timeout_exc_cls=asyncio.TimeoutError,
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
        panic=reraise,
        ^^^^^^^^^^^^^^
    )
    ^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\langgraph\pregel\runner.py", line 619, in _panic_or_proceed
    raise exc
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\langgraph\pregel\retry.py", line 128, in arun_with_retry
    return await task.proc.ainvoke(task.input, config)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\langgraph\utils\runnable.py", line 532, in ainvoke
    input = await step.ainvoke(input, config, **kwargs)
            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\langgraph\utils\runnable.py", line 320, in ainvoke
    ret = await asyncio.create_task(coro, context=context)
          ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "D:\Downloads\Research\Archon\Archon\archon\archon_graph.py", line 140, in advisor_with_examples
    result = await advisor_agent.run(state['latest_user_message'], deps=deps)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\pydantic_ai\agent.py", line 298, in run
    model_response, request_usage = await agent_model.request(messages, model_settings)
                                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\pydantic_ai\models\openai.py", line 151, in request
    return self._process_response(response), _map_usage(response)
           ~~~~~~~~~~~~~~~~~~~~~~^^^^^^^^^^
  File "C:\Users\hebba\AppData\Local\Programs\Python\Python313\Lib\site-packages\pydantic_ai\models\openai.py", line 206, in _process_response
    timestamp = datetime.fromtimestamp(response.created, tz=timezone.utc)
TypeError: 'NoneType' object cannot be interpreted as an integer
During task with name 'advisor_with_examples' and id 'e083cd40-1749-d35d-84c8-ffb3b999595d'
```
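The last frame is `datetime.fromtimestamp(response.created, tz=timezone.utc)`, and the `TypeError` suggests that the chat-completion response (here coming from OpenRouter) had `created` set to `None`. The sketch below reproduces that failure in isolation and shows a possible guard. `response_timestamp` is a hypothetical helper name, not part of pydantic_ai, and falling back to the current time is my own assumption about an acceptable default; I have not verified where (or whether) such a guard belongs inside pydantic_ai.

```python
from datetime import datetime, timezone
from typing import Optional


def response_timestamp(created: Optional[int]) -> datetime:
    """Convert an OpenAI-style `created` field (Unix seconds) to a UTC datetime.

    Hypothetical helper: when the provider leaves `created` as None, return
    the current UTC time instead of raising TypeError.
    """
    if created is None:
        return datetime.now(tz=timezone.utc)
    return datetime.fromtimestamp(created, tz=timezone.utc)


# Same call as pydantic_ai/models/openai.py line 206, with created=None:
try:
    datetime.fromtimestamp(None, tz=timezone.utc)
except TypeError as exc:
    print(f"TypeError: {exc}")

# With the guard, a valid timestamp converts normally...
print(response_timestamp(0))  # → 1970-01-01 00:00:00+00:00
# ...and a missing one falls back to "now" (UTC) instead of crashing.
print(response_timestamp(None))
```

The exact `TypeError` message differs between Python versions, so the sketch only relies on the exception type.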

I tried to fix it myself but couldn't.