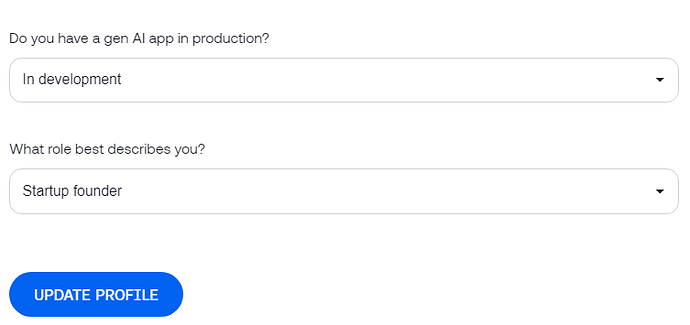

Hi! I found out about together.ai through a Matthew Berman video on YouTube. Similar to openrouter.ai, they wrap models. I had used up my $10 of OpenRouter credits and wanted to play with R1, so I registered, simply to try it…

To my surprise, I was greeted with $100 in free credits and an API key.

So I extended my ai_provider_helper to support PureOpenAi, OpenRouter, and TogetherAi:

    from langchain_openai import ChatOpenAI
    from os import getenv
    from enum import Enum
    #from langchain_together import ChatTogether

    class AiWrapperProvider(Enum):
        PureOpenAi = 10
        OpenRouter = 20
        TogetherAi = 30

    def get_ai_provider(model: str = "openai/gpt-4o", provider: AiWrapperProvider = AiWrapperProvider.OpenRouter) -> ChatOpenAI:
        """
        Returns an instance of ChatOpenAI based on the specified provider.

        :param model: The model to use (default: "openai/gpt-4o").
        :param provider: The AI wrapper provider to use (default: OpenRouter).
        :return: ChatOpenAI instance.
        """
        if provider == AiWrapperProvider.PureOpenAi:
            model = model.replace("openai/", "")
            return ChatOpenAI(model=model)
        elif provider == AiWrapperProvider.OpenRouter:
            return ChatOpenAI(model=model,
                              openai_api_key=getenv("OPENROUTER_API_KEY"),
                              openai_api_base=getenv("OPENROUTER_BASE_URL"))
        elif provider == AiWrapperProvider.TogetherAi:
            return ChatOpenAI(model=model,
                              openai_api_key=getenv("TOGETHER_API_KEY"),
                              openai_api_base=getenv("TOGETHER_BASE_URL"))
        else:
            raise ValueError("Invalid AI wrapper provider specified.")

In your .env file you need:

    TOGETHER_API_KEY=PuT-It-HeRe
    TOGETHER_BASE_URL=https://api.together.xyz/v1
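One thing to watch: getenv only reads the process environment, so the .env file has to be loaded first (for example with python-dotenv's load_dotenv, assuming that is how your project handles it). If a variable is missing, getenv quietly returns None and the problem only shows up later. Below is a minimal sketch of a stricter lookup. The names PROVIDER_ENV, require_env and provider_settings are hypothetical and not part of the helper above; only the environment variable names come from it.

```python
import os

# Hypothetical lookup table: provider name -> (API key variable, base URL variable).
# The variable names are the ones the helper reads; the table itself is illustrative.
PROVIDER_ENV = {
    "OpenRouter": ("OPENROUTER_API_KEY", "OPENROUTER_BASE_URL"),
    "TogetherAi": ("TOGETHER_API_KEY", "TOGETHER_BASE_URL"),
}


def require_env(name: str) -> str:
    """Return the value of an environment variable or fail with a clear message."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Missing environment variable: {name}")
    return value


def provider_settings(provider: str) -> dict:
    """Build the keyword arguments that would be passed on to ChatOpenAI."""
    key_var, url_var = PROVIDER_ENV[provider]
    return {
        "openai_api_key": require_env(key_var),
        "openai_api_base": require_env(url_var),
    }
```

With something like this, a forgotten TOGETHER_BASE_URL fails right away with the variable name in the message, instead of failing somewhere inside the client.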

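You might also need to pip install the langchain-together package, although the helper above only imports langchain_openai (the ChatTogether import is commented out).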

With this, the LLM initializer for OpenRouter changes to:

    llm = get_ai_provider(model="openai/gpt-4o", provider=AiWrapperProvider.OpenRouter)

And this is DeepSeek with TogetherAi:

    llm = get_ai_provider(model="deepseek-ai/DeepSeek-R1", provider=AiWrapperProvider.TogetherAi)

I eventually ran into the error:

deepseek-ai/DeepSeek-R1 is not supported for JSON mode/function calling

… which I need for my project, so I didn't dig deeper, but I thought this might be interesting to some. They do offer a JSON mode of some sort, but I think it is now called structured output, so they might be outdated. I'm not sure.
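Since JSON mode is rejected for this model, one possible workaround (untested here, and not what the helper above does) is to ask for JSON in the prompt and parse it out of the plain text reply. The sketch below assumes the reply may contain a <think>...</think> reasoning block and may wrap the JSON in a Markdown code fence. extract_json is a hypothetical helper name.

```python
import json
import re

# Three backticks, built at runtime so this snippet can itself sit in a code fence.
FENCE = "`" * 3


def extract_json(text: str):
    """Parse a JSON value out of a raw model reply."""
    # Drop the reasoning block, assuming it is wrapped in <think>...</think>.
    text = re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL)
    # If the JSON is wrapped in a Markdown code fence, keep only the fenced part.
    fenced = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, text, flags=re.DOTALL)
    if fenced:
        text = fenced.group(1)
    # Raises json.JSONDecodeError if what is left is not valid JSON.
    return json.loads(text.strip())
```

This gives no guarantee that the model actually returns valid JSON, so the caller still has to handle the parse error, for example by retrying.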

Sidenote: the docs on how to integrate them via the LangChain add-on package are under Integrations, but it behaved the same as my implementation above, and my implementation is closer to the OpenRouter setup.