I topped up my DeepSeek account and have tokens. If I create a new project, it works fine.

I also have two projects on GitHub. I can clone the Git repo without problems, and my first project works fine: I can edit it with DeepSeek.

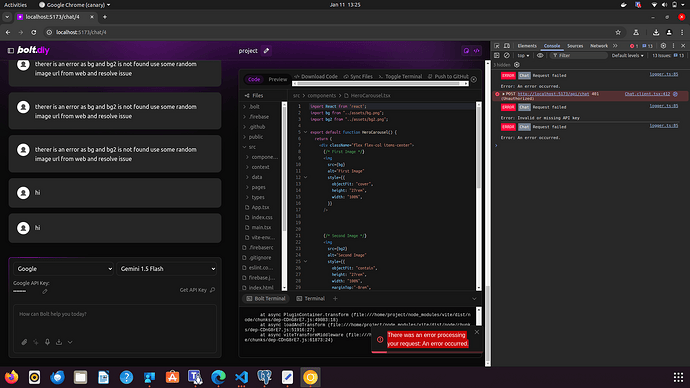

But when I clone the other project, it builds without problems, yet every prompt I send gives the error “There was an error processing your request - An error occurred”. There is no error in the terminal console, and in the browser console I only get “ERROR Chat Request failed”.

I also imported that project from a folder and got exactly the same error.

By the way, Happy New Year to all, and thanks for your great work!

Welcome @ushic,

Happy New Year.

My guess is that your second project is too big for DeepSeek's context size, so it returns an error.

You could verify this by testing Google Gemini 2.0, which has a huge context size (it's free at the moment; just choose Google as the provider and click “Get API Key”).
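To check the size question without guessing, a rough token count of the project can be estimated locally. This is a minimal Python sketch, assuming the common rule of thumb of about 4 characters per token and an assumed skip list of folders (`node_modules`, `.git`, `.bolt`, ...) that bolt probably does not send. The real tokenizer and the exact set of files bolt includes may differ, so treat the result as a ballpark figure.

```python
import os
from pathlib import Path

# Assumed list of folders that are usually not part of the prompt.
SKIP_DIRS = {"node_modules", ".git", ".bolt", "dist", "build"}


def estimate_project_tokens(root: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate for all UTF-8 text files under root (~4 chars per token heuristic)."""
    total_chars = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune skipped folders in place so os.walk does not descend into them.
        dirnames[:] = [d for d in dirnames if d not in SKIP_DIRS]
        for name in filenames:
            path = Path(dirpath) / name
            try:
                total_chars += len(path.read_text(encoding="utf-8"))
            except (UnicodeDecodeError, OSError):
                # Binary or unreadable files (images, fonts, ...) are skipped.
                continue
    return int(total_chars / chars_per_token)


def fits_context(tokens: int, context_window: int, reserve: int = 8_000) -> bool:
    """True if the estimate still leaves `reserve` tokens (hypothetical default)
    for the system prompt and the model's reply."""
    return tokens + reserve <= context_window
```

For example, `fits_context(estimate_project_tokens("path/to/project"), 64_000)`, where 64,000 is an assumed context window for the DeepSeek chat model; check the provider's model documentation for the actual limit.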

Thanks for your fast reply. I tested Google Gemini 2.0 too, but I get the same error.

I don't think my project is too big; I created it with Bolt.new. Is there any other way to find out what the problem might be?

Hm, ok

Can you share the project with me so I can test it? You can also PM it to me instead of posting it publicly, if you prefer.

PS: Maybe try deleting the .bolt folder before importing.

I deleted the .bolt folder, but I still have the same issue.

How can I PM you?

OK, just click on my profile image here and click “Message”.

OK, here is where things stand so far with the help of @leex279:

I sent him my project, and it is fine: he can change it without problems.

I tried importing it directly from Git, but I still get the same issue.

I tried reloading the page after entering the API key; still the same issue.

I tried Chrome, Firefox, and Edge; still the same issue.

It works fine if I create a new chat.

It works fine when I import another project from Git.

I imported the same project from its source folder after removing the .bolt folder; still the same issue.

So far, the same issue exists for this specific project with both Google and DeepSeek.

We also tested with your instance, @aliasfox: https://bolt-stable.cyopsys.com/

It works for me there as well, but not for him. Very strange.

(I can send you the Git link in a PM if you'd like to test it.)

Hi,

Any update on this issue? I started another project, but after a few days it suddenly started giving me the same error. I checked it with both DeepSeek and Gemini 2.0, and I also checked the same project on https://bolt-stable.cyopsys.com/, with the same result.

I don't know if it is related to project size, because the project is not very big. Even if I ask something general that doesn't need many tokens, like “Who are you?”, I get the same error.

OK, but then it's most likely that you have reached the provider's rate limits.

If this happens, can you please verify it by prompting the provider directly with a cURL request and see what response you get?

OK, I will check it now, but at the same time I can use the same API key and the same LLM in a new chat without problems.

Hmm, OK, then maybe it's not a rate limit. It's very strange. @thecodacus, maybe you have an idea?
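The direct check could look like the following, written in Python with only the standard library instead of a raw cURL call. It assumes DeepSeek's OpenAI-compatible `https://api.deepseek.com/chat/completions` endpoint and the `deepseek-chat` model name. The status-code hints are assumptions based on typical provider error codes (e.g. 429 for rate limits), so compare them with the provider's own error-code documentation.

```python
import json
import urllib.error
import urllib.request

# Assumed OpenAI-compatible endpoint; adjust for other providers.
API_URL = "https://api.deepseek.com/chat/completions"

# Assumed meaning of common HTTP status codes returned by the provider.
STATUS_HINTS = {
    400: "invalid request (an over-long prompt may also show up here)",
    401: "authentication failed (check the API key)",
    402: "insufficient balance",
    429: "rate limit reached",
    500: "provider server error",
    503: "provider overloaded",
}


def build_request(api_key: str, prompt: str, model: str = "deepseek-chat") -> urllib.request.Request:
    """Build a single-message chat completion request."""
    body = json.dumps({"model": model, "messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def explain_status(status: int) -> str:
    """Map an HTTP status code to a short, human-readable hint."""
    if 200 <= status < 300:
        return "ok"
    return STATUS_HINTS.get(status, "unexpected status")


def check_provider(api_key: str, prompt: str = "Who are you?") -> str:
    """Send one small prompt directly to the provider and describe the outcome."""
    try:
        with urllib.request.urlopen(build_request(api_key, prompt), timeout=60) as resp:
            return explain_status(resp.status)
    except urllib.error.HTTPError as err:
        # The response body usually contains the provider's own error message.
        detail = err.read().decode("utf-8", errors="replace")
        return f"{err.code}: {explain_status(err.code)} - {detail}"
```

For example, `print(check_provider(os.environ["DEEPSEEK_API_KEY"]))` after `import os`, where `DEEPSEEK_API_KEY` is a hypothetical environment variable holding your key. A 429 would point to rate limits, while a successful response here (with bolt still failing) would suggest the problem lies in what bolt sends for that specific project.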

Have you prompted with images in bolt.new?

No, I haven't. Also, after importing it into bolt.diy, it works fine for a few days.

Hello sir

I have the same problem. I can start a new chat using the DeepSeek API on bolt.diy and it works without any problem, but when I load my folder (which I created on bolt.new) it shows the same error. When I switch to Gemini 2.0 Flash, it responds. Help, please!

@Unbackedly there is nothing we can do to help. DeepSeek is just not able to handle the project size, i.e. the number of tokens sent to it.

So at the moment you can just use Gemini instead. Some optimizations are planned for the coming weeks/months that may help, but for now this is the status.

Hello!

I'm not sure if you're still experiencing this problem, but I believe I found a solution. I was having the exact same problem, then came here and searched for a fix.

I saw @leex279 mention that he was able to open your project just fine. That made me think it was a cache issue. I'm afraid to clear my cache, though, for fear of losing all of my projects lol

So I just downloaded the code, then re-uploaded it into a brand new chat, and it started working again for me…

Just a quick workaround!

Hi @thejohnwjohnson,

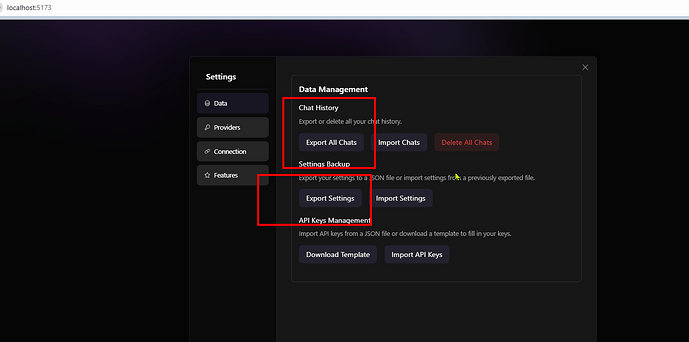

Thanks for the post, yes, this helps. Regarding your cache/projects: just export the whole chat history in the settings and you are good to go.

Hi @leex279, I've used different LLM models but get the same error with every one. During a normal chat it responds, but after loading a folder and then chatting about my project, it starts giving the error “There was an error processing your request: An error occurred.”

I also get the same error on the instance you linked earlier.

@adityagangwar516 I think your project is too big to work with bolt at the moment.

You could try Gemini 2.0 Flash, which has a larger context size, but if that also doesn't work, the project is too big and bolt cannot handle it at the moment.

In the future this will get better once diffs etc. are implemented, but right now bolt sends the whole project, which is too much.