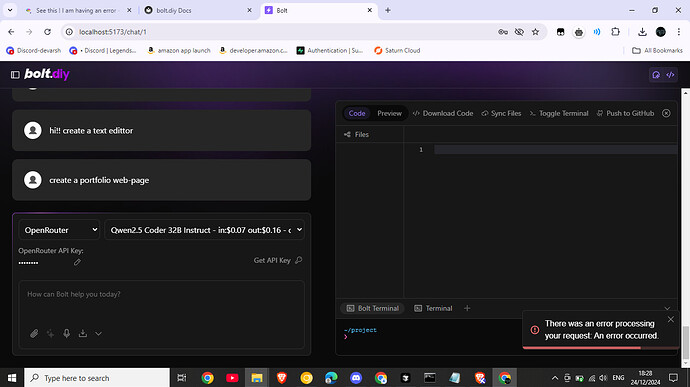

Can anyone help me, please?

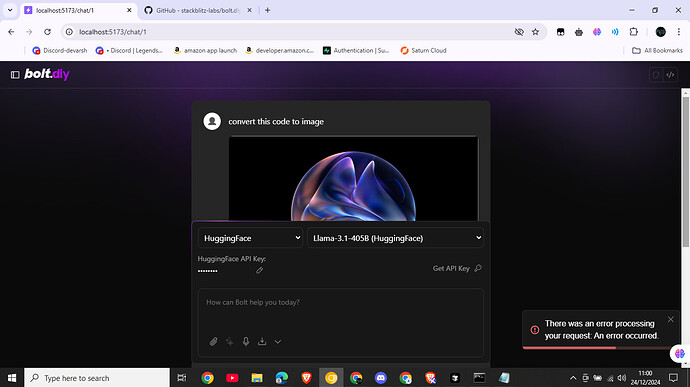

I am not able to access it properly

Are you using the stable branch?

Also, I never was able to get Llama3.1-405B working, so you may just want to try another model, like Qwen2.5-72B-Instruct as a sanity check.

What is the stable branch?

I tried Qwen2.5-72B-Instruct from OpenRouter, but I still get the same error.

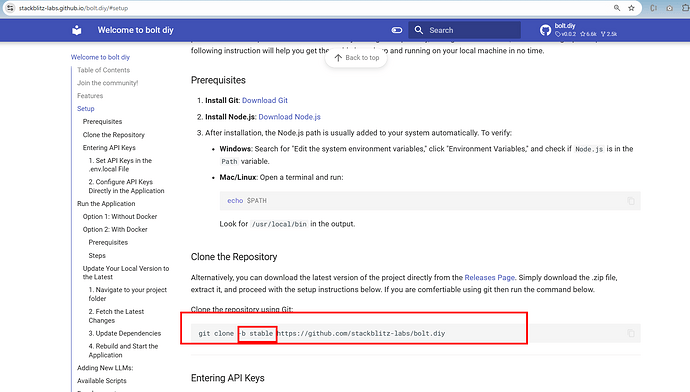

There are several branches in git. The “main” branch has the most recent changes, but it is not always stable; it is meant more for testing and for advanced users who also want to contribute to the project, give feedback on the latest development, etc.

The “stable” branch is a well-tested state and should be used by most users.

As described in the docs, you should clone the stable branch:

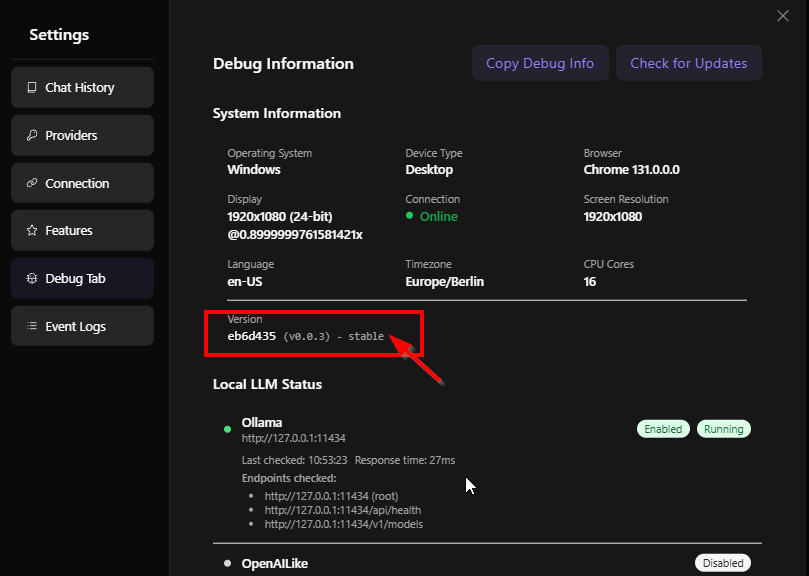

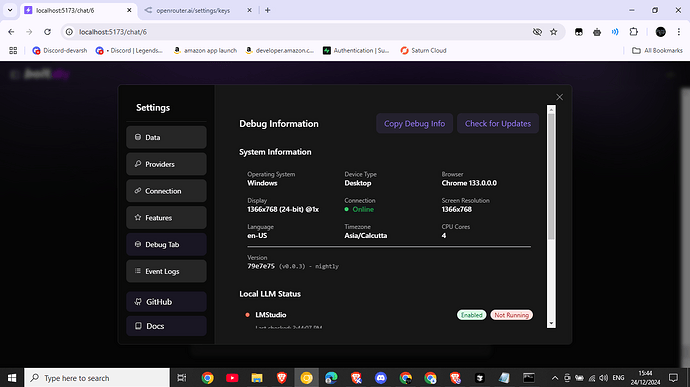

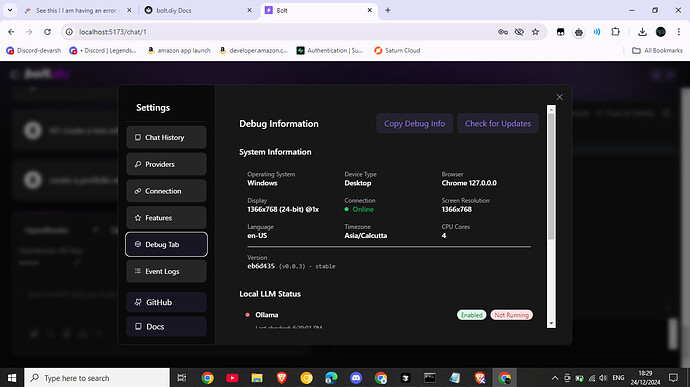

You can verify your setup under “Settings => Debug Tab”.

(If you don’t see the Debug tab, you have to enable it under “Features”.)

Can anyone answer? I haven’t been able to use bolt.diy for a long time.

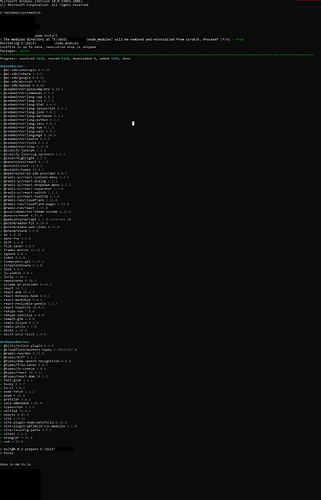

Bro, I simply ran git clone https://github.com/stackblitz-labs/bolt.diy.git, then cd bolt.diy, npm install -g pnpm, pnpm install, and finally pnpm run dev.

I answered this above. You did not clone the repo as mentioned in the docs to use the stable branch. See my screenshots.

Clone stable branch:

git clone -b stable https://github.com/stackblitz-labs/bolt.diy

If you are already on main, you can switch to the stable branch with:

git checkout stable && pnpm install && pnpm run dev
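To confirm which branch a checkout is on before starting the dev server, a small check like the following may help. This is only a sketch: `require_branch` is a hypothetical helper, not part of bolt.diy, and it assumes a POSIX shell (for example Git Bash on Windows). It relies on `git symbolic-ref --short HEAD`, which prints the current branch name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of bolt.diy): succeed only if the
# repository in the current directory is on the expected branch.
require_branch() {
  local expected="$1"
  local current
  # symbolic-ref fails on a detached HEAD or outside a git repository,
  # so fall back to a placeholder value in those cases.
  current="$(git symbolic-ref --short HEAD 2>/dev/null || echo "unknown")"
  if [ "$current" = "$expected" ]; then
    echo "OK: on branch '$current'"
  else
    echo "On '$current', expected '$expected'; try: git checkout $expected"
    return 1
  fi
}

# Usage (run inside the bolt.diy folder):
#   require_branch stable && pnpm install && pnpm run dev
```

Chaining with `&&` means the dev server only starts when the branch check passes.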

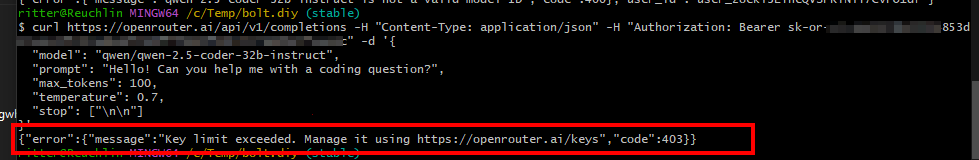

Can you please verify it outside of bolt, so we can be sure your OpenRouter API key is working?

For example, when I test with my account, which has no credit at the moment, I get an error from this request:

    curl https://openrouter.ai/api/v1/completions -H "Content-Type: application/json" -H "Authorization: Bearer <YOUR API KEY HERE>" -d '{
      "model": "qwen/qwen-2.5-coder-32b-instruct",
      "prompt": "Hello! Can you help me with a coding question?",
      "max_tokens": 100,
      "temperature": 0.7,
      "stop": ["\n\n"]
    }'
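To see at a glance whether the key or the credit is the problem, the HTTP status of that request can be printed and mapped to its usual meaning. This is a minimal sketch: `explain_status` is a hypothetical helper, the mapping uses the generic HTTP meanings of 401, 402, and 429, and how OpenRouter uses each code is an assumption to check against its documentation. `OPENROUTER_API_KEY` is an assumed environment variable name.

```shell
# Hypothetical helper: translate an HTTP status code into a likely cause.
explain_status() {
  case "$1" in
    2??) echo "request accepted: the key works" ;;
    401) echo "unauthorized: the API key is missing or invalid" ;;
    402) echo "payment required: the account probably has no credit" ;;
    429) echo "too many requests: rate limit reached" ;;
    000) echo "no HTTP response: network or connection problem" ;;
    *)   echo "unexpected status $1: inspect the response body" ;;
  esac
}

# Usage: -w '%{http_code}' prints only the status, -o /dev/null drops the body.
#   status="$(curl -s -o /dev/null -w '%{http_code}' \
#     https://openrouter.ai/api/v1/completions \
#     -H "Content-Type: application/json" \
#     -H "Authorization: Bearer $OPENROUTER_API_KEY" \
#     -d '{"model": "qwen/qwen-2.5-coder-32b-instruct", "prompt": "Hello", "max_tokens": 10}')"
#   explain_status "$status"
```

curl reports `000` when it never received an HTTP response, which points at the network rather than the key.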

@aliasfox @thecodacus Same as mentioned for Anthropic in the other topic: we should output these errors in the terminal/shell.

I told you guys this would cause issues, lol. I still think we should switch the logic. We should have main (stable/prod), and testing (unstable, staging, whatever). All other branches are basically ‘dev’.

That’s all I use, and I went through a bunch of credits yesterday, all using the stable branch. I also tested main with a few PRs. But I can test again.

I agree. Currently the Vercel AI library handles this API call. Although I can see that error handling has been added around the function, it still does not throw these errors for us to catch.

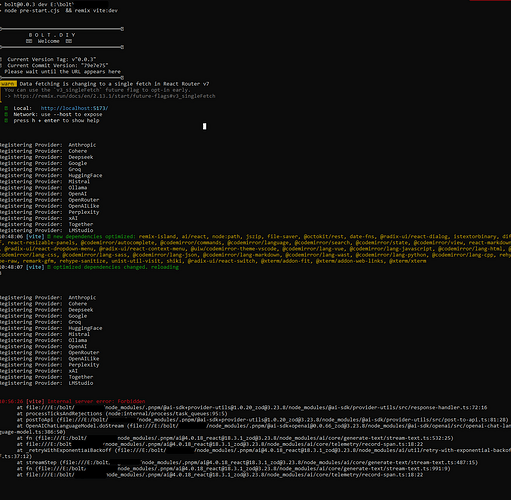

Sorry to disturb you guys, but I tried HF instead of OpenRouter and still get the same error. This time, though, there is something in the terminal:

    bolt@0.0.3 dev E:\bolt\bolt.diy
    node pre-start.cjs && remix vite:dev

    ★═══════════════════════════════════════★
    B O L T . D I Y
    Welcome
    ★═══════════════════════════════════════★
    Current Commit Version: eb6d4353565be31c6e20bfca2c5aea29e4f45b6d
    ★═══════════════════════════════════════★

    warn Data fetching is changing to a single fetch in React Router v7
    ┃ You can use the v3_singleFetch future flag to opt-in early.
    ┃ → Future Flags (v2.13.1) | Remix
    ┗

    ➜ Local: http://localhost:5173/
    ➜ Network: use --host to expose
    ➜ press h + enter to show help

    WARN Constants Failed to get Ollama models: fetch failed
    WARN Constants Failed to get Ollama models: fetch failed
    TypeError: fetch failed
        at node:internal/deps/undici/undici:13178:13
        at processTicksAndRejections (node:internal/process/task_queues:95:5)
        at Object.getOpenRouterModels [as getDynamicModels] (E:/bolt/bolt.diy/app/utils/constants.ts:439:23)
        at async Promise.all (index 2)
        at Module.getModelList (E:/bolt/bolt.diy/app/utils/constants.ts:343:9)
        at Module.streamText (E:/bolt/bolt.diy/app/lib/.server/llm/stream-text.ts:101:22)
        at chatAction (E:/bolt/bolt.diy/app/routes/api.chat.ts:101:20)
        at Object.callRouteAction (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+server-runtime@2.15.0_typescript@5.7.2\node_modules\@remix-run\server-runtime\dist\data.js:36:16)
        at E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4899:19
        at callLoaderOrAction (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4963:16)
        at async Promise.all (index 0)
        at defaultDataStrategy (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4772:17)
        at callDataStrategyImpl (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4835:17)
        at callDataStrategy (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3992:19)
        at submit (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3755:21)
        at queryImpl (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3684:22)
        at Object.queryRoute (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3629:18)
        at handleResourceRequest (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+server-runtime@2.15.0_typescript@5.7.2\node_modules\@remix-run\server-runtime\dist\server.js:402:20)
        at requestHandler (E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+server-runtime@2.15.0_typescript@5.7.2\node_modules\@remix-run\server-runtime\dist\server.js:156:18)
        at E:\bolt\bolt.diy\node_modules\.pnpm\@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_3djlhh3t6jbfog2cydlrvgreoy\node_modules\@remix-run\dev\dist\vite\cloudflare-proxy-plugin.js:70:25 {
      [cause]: ConnectTimeoutError: Connect Timeout Error (attempted addresses: 104.22.48.189:443)
          at onConnectTimeout (node:internal/deps/undici/undici:2331:28)
          at node:internal/deps/undici/undici:2283:50
          at Immediate._onImmediate (node:internal/deps/undici/undici:2313:37)
          at processImmediate (node:internal/timers:483:21)
          at process.topLevelDomainCallback (node:domain:160:15)
          at process.callbackTrampoline (node:internal/async_hooks:128:24) {
        code: 'UND_ERR_CONNECT_TIMEOUT'
      }
    }

Bro, I can’t use the Docker alternative either because of a Windows update error, so I have to solve this error.

This is just a handled error: the server failed to fetch the OpenRouter models, but it should not affect your other operations.
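Since the cause in the trace is a connect timeout, it may still be worth checking whether the machine can reach openrouter.ai at all, independent of bolt. A rough sketch, assuming curl and a POSIX shell (for example Git Bash on Windows): `explain_curl_exit` is a hypothetical helper, and the models URL is an assumption based on the `getOpenRouterModels` frame in the trace.

```shell
# Hypothetical helper: map common curl exit codes to a network diagnosis.
explain_curl_exit() {
  case "$1" in
    0)     echo "connection works" ;;
    6)     echo "could not resolve host: check DNS" ;;
    7)     echo "failed to connect: host unreachable or port blocked" ;;
    28)    echo "timed out: firewall, proxy, or VPN may be blocking the request" ;;
    35|60) echo "TLS problem: check proxy or antivirus HTTPS interception" ;;
    *)     echo "curl exit code $1: see the curl manual" ;;
  esac
}

# Usage: give up after 10 seconds if no connection can be established.
#   curl -sS --connect-timeout 10 -o /dev/null https://openrouter.ai/api/v1/models
#   explain_curl_exit "$?"
```

If this also times out, the problem is likely in the local network setup rather than in bolt.diy or the API key.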