It would be great if the dropdown boxes were pre-populated with the previously chosen LLM options.

Then you could just type and press enter without having to change the LLM settings each time.

I’m willing to work on this one but I wanted to put the idea out there first for discussion.

What do you guys think?

1 Like

mahoney

November 12, 2024, 5:40pm

2

Taking a note for the roadmap. This is low effort and makes a lot of sense: once you’ve got your desired model, you will probably only want to change models explicitly, e.g. if something you are attempting requires a larger one.

3 Likes

Oh yeah, I really want that too. I even wanted to sneak it into my PR, but it should be a separate PR. If no one else does it, I may open a PR for it tomorrow.

1 Like

Turns out it was already there, stored in cookies. It just wasn’t being picked up because of bugs.
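
For anyone following along, here is a minimal sketch of how a previously chosen provider and model could be read back from cookies to pre-populate the dropdowns. The cookie names `selectedProvider` and `selectedModel`, the helper names, and the example values are assumptions for illustration, not necessarily what the project uses.

```typescript
// Hypothetical cookie names; the real project may use different keys.
const PROVIDER_COOKIE = 'selectedProvider';
const MODEL_COOKIE = 'selectedModel';

type LlmSelection = { provider: string; model: string };

// Parse a raw cookie string ("a=1; b=2") into a key/value map.
function parseCookies(cookieString: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const part of cookieString.split(';')) {
    const index = part.indexOf('=');
    if (index === -1) {
      continue; // skip fragments without a value
    }
    const key = part.slice(0, index).trim();
    const value = part.slice(index + 1).trim();
    if (key) {
      result[key] = decodeURIComponent(value);
    }
  }
  return result;
}

// Return the saved selection, falling back to defaults when nothing is stored.
function restoreSelection(cookieString: string, fallback: LlmSelection): LlmSelection {
  const cookies = parseCookies(cookieString);
  return {
    provider: cookies[PROVIDER_COOKIE] || fallback.provider,
    model: cookies[MODEL_COOKIE] || fallback.model,
  };
}

// Serialise a selection into cookie assignments.
// In a browser each entry could be written with `document.cookie = entry`.
function serializeSelection(selection: LlmSelection): string[] {
  return [
    `${PROVIDER_COOKIE}=${encodeURIComponent(selection.provider)}`,
    `${MODEL_COOKIE}=${encodeURIComponent(selection.model)}`,
  ];
}
```

In the UI, `restoreSelection(document.cookie, defaults)` would supply the initial dropdown values, and `serializeSelection` would run whenever the user changes provider or model.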

2 Likes

Kudos for looking at what’s already there

Really stupid question, but where do I find the roadmap?

1 Like

mahoney

November 13, 2024, 7:18pm

7

Not a stupid question, we’ll be working on a public-facing version in the near future.

1 Like

Here is the PR where it should work. I spent the whole day today getting the new version I host to work.

coleam00:main ← wonderwhy-er:addGetKeyLinks

opened 09:48PM - 11 Nov 24 UTC

### Goal

The goal was to provide a link to get an API key for first-time users.

To achieve that, I standardised the provider list to include a name, static and dynamic model lists, and an API key URL and label.

For LM Studio and Ollama, the link and label point to downloading the application instead.

### PR Summary

1. **Constants Update**:

- Introduces `ProviderInfo` and `PROVIDER_LIST`, organizing providers with models and API key links.

- Refactors model fetching to be dynamic for supported providers.

2. **Base Chat Component Update**:

- Uses `PROVIDER_LIST` dynamically for provider selection, removing hardcoded options.

3. **API Key Management Component Update**:

- Adds a link to get an API key if the provider has one.

4. **Added Dynamic Model list for OpenRouter**

5. **Made server use API keys from cookies instead of request body** - the keys in the request body were sometimes out of date

6. **Fixed Google API keys provider** - the API key was passed as an argument, while an options object with the key as a property was expected
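
To illustrate item 5, here is a rough sketch of reading API keys from the `Cookie` header on the server rather than from the request body. The cookie name `apiKeys`, its JSON shape (provider name mapped to key), and the function name are assumptions made for this example, not confirmed details of the PR.

```typescript
// Assumed cookie name holding a URI-encoded JSON object: provider name -> API key.
const API_KEYS_COOKIE = 'apiKeys';

// Extract the API key map from a raw Cookie header.
// Returns an empty object when the header or cookie is missing or malformed.
function getApiKeysFromCookieHeader(cookieHeader: string | null): Record<string, string> {
  if (!cookieHeader) {
    return {};
  }
  for (const part of cookieHeader.split(';')) {
    const index = part.indexOf('=');
    if (index === -1 || part.slice(0, index).trim() !== API_KEYS_COOKIE) {
      continue; // not the cookie we are looking for
    }
    try {
      const raw = decodeURIComponent(part.slice(index + 1).trim());
      const parsed: unknown = JSON.parse(raw);
      if (parsed !== null && typeof parsed === 'object' && !Array.isArray(parsed)) {
        return parsed as Record<string, string>;
      }
    } catch {
      // Malformed encoding or JSON: fall through and treat it as "no keys".
    }
    return {};
  }
  return {};
}
```

In a request handler this could be called as `getApiKeysFromCookieHeader(request.headers.get('Cookie'))`, so the server always sees whatever the browser currently has stored.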

And here is the version I host. It has some differences, but LLM settings are preserved there.

I tried to run this, but the list of LLMs doesn’t seem to be populated anymore?

I see this console error:

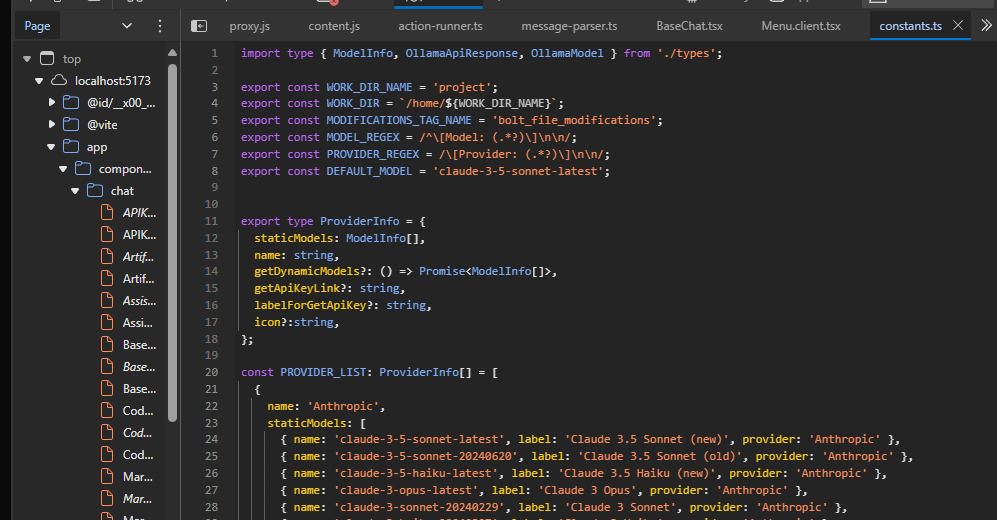

If I go to the constants file I see

    export type ProviderInfo = {
      staticModels: ModelInfo[],
      name: string,
      getDynamicModels?: () => Promise<ModelInfo[]>,
      getApiKeyLink?: string,
      labelForGetApiKey?: string,
      icon?: string,
    };
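
For context, here is a small sketch of how a `PROVIDER_LIST` built from this type might be used to fill the model dropdown. The `ModelInfo` shape, the two example providers, the URL, and `loadAllModels` are all made up for illustration; only the `ProviderInfo` fields come from the file above.

```typescript
// Assumed shape; the thread does not show the real ModelInfo definition.
type ModelInfo = { name: string; label: string; provider: string };

type ProviderInfo = {
  staticModels: ModelInfo[];
  name: string;
  getDynamicModels?: () => Promise<ModelInfo[]>;
  getApiKeyLink?: string;
  labelForGetApiKey?: string;
  icon?: string;
};

// Illustrative entries only, not the project's real provider list.
const PROVIDER_LIST: ProviderInfo[] = [
  {
    name: 'ExampleCloud',
    staticModels: [{ name: 'example-small', label: 'Example Small', provider: 'ExampleCloud' }],
    getApiKeyLink: 'https://example.com/keys',
    labelForGetApiKey: 'Get ExampleCloud API key',
  },
  {
    name: 'ExampleLocal',
    staticModels: [],
    // Stand-in for a network call that lists locally installed models.
    getDynamicModels: async () => [
      { name: 'local-7b', label: 'Local 7B', provider: 'ExampleLocal' },
    ],
  },
];

// Collect static models first, then whatever each provider reports dynamically.
// A provider whose dynamic lookup fails contributes no dynamic models.
async function loadAllModels(providers: ProviderInfo[]): Promise<ModelInfo[]> {
  const dynamicLists = await Promise.all(
    providers.map(async (provider): Promise<ModelInfo[]> => {
      if (!provider.getDynamicModels) {
        return [];
      }
      try {
        return await provider.getDynamicModels();
      } catch {
        return [];
      }
    }),
  );
  const models: ModelInfo[] = [];
  for (const provider of providers) {
    models.push(...provider.staticModels);
  }
  for (const list of dynamicLists) {
    models.push(...list);
  }
  return models;
}
```

Swallowing lookup errors per provider is a design choice in this sketch: one unreachable local server should not leave the whole dropdown empty.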

Hmmm, very strange.

Interesting. The typecheck, build, start, and dev commands all work for me without that error.

I’m running it with dev.

I also did pull the repo again from scratch today.

mahoney

November 14, 2024, 9:17am

13

@wonderwhy.er I saw this as well, so I dropped a short diff in the comments on your PR. I also run with the cache disabled, so it’s likely related to that.

mahoney

November 14, 2024, 9:17am

14

Hit me up with any thoughts there. With the diff applied, the branch works great.

I see this when I try to apply that patch. It looks like it might be incomplete?

I used the copy button to ensure that I’d not missed anything:

    ❯ git apply ./mahoney.patch
    error: patch failed: app/utils/constants.ts:234
    error: app/utils/constants.ts: patch does not apply

We are talking about this comment, right?

I pushed a type fix, but it’s not for that export problem. Sadly, I do not get that export problem.

Which Node.js version, operating system, and environment do you have?

I am wondering how to reproduce it.

Ah okay that makes sense, I understand.

What version of node are you using? I’m not at my machine right now but I can let you know. I was doing a pnpm run dev. I’m happy to make a video a bit later or we can jump on a call and look at it together also.

Wait, I just reproduced it.

1 Like

Oooo, I love it when that happens. What did you change? Your node version?

Perhaps when I wrote pnpm that might have been the thing you tried?

Here are my node, npm and pnpm versions:

    bolt.new-any-llm on addGetKeyLinks [?] via v20.16.0
    ❯ node -v
    v20.16.0

    bolt.new-any-llm on addGetKeyLinks [?] via v20.16.0
    ❯ npm -v
    10.8.1

    bolt.new-any-llm on addGetKeyLinks [?] via v20.16.0
    ❯ pnpm -v
    9.12.2

No, it’s just that I use build + start, not the dev command.

And in dev it did fail.

Just pushed the fix.

It seems we can’t export types from every file in the current setup.

1 Like