Hi,

I am NOT a coder, but I am a systems and networking guy with a lot of experience in architecture.

My interests are initially in expanding the areas of memory management and token management, but overall architecture questions are what fill my mind.

Given this I worked the following up with ChatGPT, and I’d like to hear what others think. I see a lot of talk about features to add, but very little regarding architecture and a real roadmap. Have a look:

Architecture Summary for Memory and Token Management Framework

Goals

-

Comprehensive Memory Management:

- Store and retrieve chat histories, datasets, and metadata.

- Support multiple storage backends, including databases (e.g., PostgreSQL) and vector databases (e.g., Pinecone, Weaviate).

- Enable summarization, context generation, and seamless integration with chat flows.

-

Efficient Token Management:

- Manage tokenization, detokenization, and token limits dynamically.

- Provide compatibility across multiple language models with configurable token strategies.

-

Dynamic Dataset Handling:

- Allow loading, querying, and semantic searching of structured datasets.

- Enable integration with retrieval-augmented generation (RAG) pipelines.

-

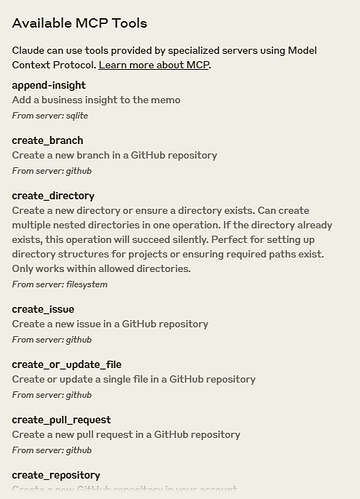

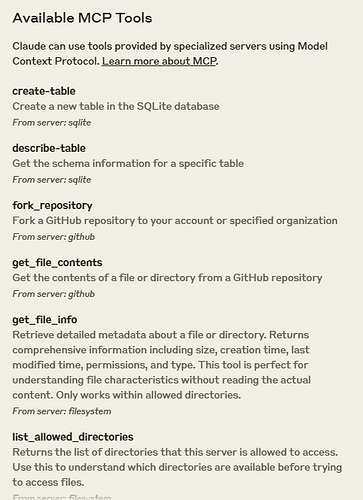

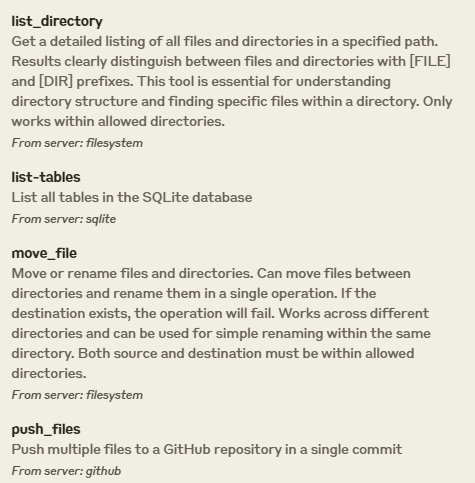

Agent Framework:

- Support modular agents, including Swarm agents, MCP-compatible agents, and task-specific agents.

- Facilitate multi-agent collaboration and communication.

-

Extensibility:

- Provide a plugin system for memory, token management, and dataset backends.

- Allow external tools and APIs (e.g., LangChain, FAISS) to integrate seamlessly.

-

Python Integration:

- Leverage Python for advanced memory, RAG, and tokenization tasks.

- Enable communication between Python microservices and the TypeScript app.

Architecture Components

1. Memory Management

- Core Interface:

- Abstracts storage, retrieval, summarization, and key management.

export interface MemoryManager { save(key: string, value: any): Promise<void>; load(key: string): Promise<any | null>; summarize(context: string[]): Promise<string>; delete(key: string): Promise<void>; listKeys(prefix?: string): Promise<string[]>; } - Backends:

- Relational Databases (e.g., PostgreSQL, MySQL):

- Store chat histories and metadata.

- Vector Databases (e.g., Pinecone, Weaviate):

- Handle embeddings and semantic searches.

- Hybrid Memory:

- Combine multiple backends for versatility.

- Relational Databases (e.g., PostgreSQL, MySQL):

2. Token Management

- Core Interface:

- Handles token operations like counting, splitting, and ensuring token limits.

export interface TokenManager { tokenize(input: string): string[]; detokenize(tokens: string[]): string; countTokens(input: string): number; isWithinLimit(input: string): boolean; } - Backends:

- OpenAI Token Manager: Token handling for OpenAI models.

- Custom Tokenizers: For Hugging Face, Anthropic, or other APIs.

3. Dataset Management

- Core Interface:

- Supports dataset loading, listing, and querying.

export interface DatasetManager { listDatasets(): Promise<string[]>; loadDataset(datasetName: string): Promise<any[]>; queryDataset(datasetName: string, query: string): Promise<any[]>; } - Backends:

- Relational Databases: Handle tabular data storage and querying.

- Python Integration: Perform semantic searches via LangChain.

4. Agent Framework

- Core Interface:

- Defines agents that respond to inputs and perform tasks.

export interface Agent { id: string; name: string; description: string; respond(input: string): Promise<string>; performAction(action: string, data: any): Promise<any>; } - Agent Types:

- Simple Agent: Linear task-based responses.

- Swarm Agent: Multi-agent collaboration for complex tasks.

- MCP Agent: Implements Anthropic’s Model Context Protocol for advanced communication.

5. Plugin System

- Core Design:

- Centralized plugin registration for memory, token, and dataset backends.

export class PluginManager<T> { private plugins: Map<string, T> = new Map(); register(name: string, plugin: T): void { this.plugins.set(name, plugin); } get(name: string): T | undefined { return this.plugins.get(name); } list(): string[] { return Array.from(this.plugins.keys()); } } - Use Cases:

- Add custom memory or token managers dynamically.

- Register dataset backends or RAG pipelines.

6. Python Integration

- Microservices:

- Run Python services using FastAPI for:

- RAG pipelines (e.g., LangChain).

- Tokenization and summarization.

- Dataset querying and semantic search.

- Run Python services using FastAPI for:

- TypeScript Interfacing:

- Communicate with Python services using HTTP APIs:

async function queryPythonService(endpoint: string, payload: any): Promise<any> { const response = await fetch(endpoint, { method: "POST", headers: { "Content-Type": "application/json" }, body: JSON.stringify(payload), }); return await response.json(); }

Proposed Directory Structure

src/

├── lib/

│ ├── memory/ # Memory managers

│ │ ├── InMemoryMemoryManager.ts

│ │ ├── VectorDatabaseMemoryManager.ts

│ │ └── DatabaseMemoryManager.ts

│ ├── tokens/ # Token managers

│ │ ├── OpenAITokenManager.ts

│ │ └── CustomTokenizer.ts

│ ├── agents/ # Agent logic

│ │ ├── MCPAgent.ts

│ │ ├── SwarmAgent.ts

│ │ └── SimpleAgent.ts

│ └── plugins/ # Plugin system

│ └── PluginManager.ts

├── hooks/ # React hooks

│ ├── useMemoryManager.ts

│ ├── useTokenManager.ts

│ └── useAgentManager.ts

├── utils/ # Utilities

│ └── fetch.ts # API communication

├── services/ # Python interop

│ ├── memoryService.ts # Communicates with Python memory services

│ ├── datasetService.ts # Handles dataset queries

│ └── ragService.ts # RAG pipeline integration

Next Steps

- Build Core Interfaces:

- Define and implement

MemoryManager,TokenManager, andAgentinterfaces.

- Define and implement

- Implement Database and Vector Backends:

- Create

DatabaseMemoryManagerfor chat history. - Add

VectorDatabaseMemoryManagerfor semantic retrieval.

- Create

- Integrate Python Microservices:

- Use FastAPI for LangChain-based RAG and tokenization.

- Develop Plugin System:

- Enable dynamic registration and loading of memory, token, and agent modules.

This architecture ensures scalability, modularity, and interoperability with Python-based resources like LangChain.

Even though I am not a coder, if the architecture were established and agreed upon I could do what I do at work (sort of as an experiment, too): see if my zero coding experience but vast IT experience oterhwise can result in some usable contribution to this effort.

Thoughts?