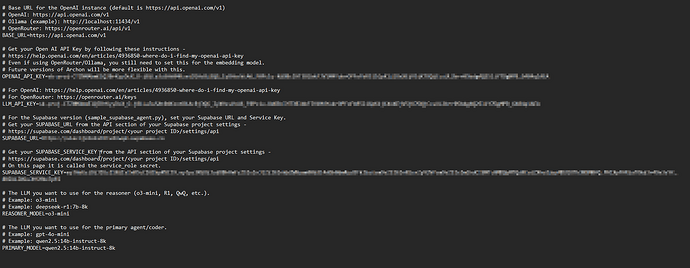

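I have set up the MCP server and started the graph service. It receives the prompts from Windsurf (Windows version) and the reasoner runs, but each request then fails with a 500 Internal Server Error right after the `pydantic_ai_coder` agent sends its model request (log below). Any thoughts on what I missed along the way?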

```
(venv) C:\SOURCE\Archon-main\Archon-main\iterations\v3-mcp-support>python graph_service.py
INFO: Started server process [19120]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://127.0.0.1:8100 (Press CTRL+C to quit)

20:25:07.543 reasoner run prompt=[Scrubbed due to 'API key']
20:25:07.632 preparing model and tools run_step=1
20:25:07.633 model request
20:25:35.657 handle model response
20:25:35.668 pydantic_ai_coder run stream prompt=[Scrubbed due to 'API key']
20:25:35.695 run node StreamUserPromptNode
20:25:35.703 preparing model and tools run_step=1
20:25:35.704 model request run_step=1
INFO: 127.0.0.1:57689 - "POST /invoke HTTP/1.1" 500 Internal Server Error

20:25:47.603 reasoner run prompt=
User AI Agent Request: Create a Python script that uses t...o creating this agent for the user in the scope document.
20:25:47.605 preparing model and tools run_step=1
20:25:47.607 model request
20:26:05.003 handle model response
20:26:05.014 pydantic_ai_coder run stream prompt=Create a Python script that uses the NewsAPI.org service to fe... errors gracefully
6. Save the output to a dated markdown file
20:26:05.015 run node StreamUserPromptNode
20:26:05.019 preparing model and tools run_step=1
20:26:05.020 model request run_step=1
INFO: 127.0.0.1:57750 - "POST /invoke HTTP/1.1" 500 Internal Server Error
```
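One way to take Windsurf and the MCP server out of the loop is to call the graph service's `/invoke` endpoint directly and print whatever comes back with the 500. The sketch below uses only the Python standard library. The URL comes from the Uvicorn line in the log, but the request fields (`message`, `thread_id`, `is_first_message`) are an assumption about what `/invoke` expects, so check them against the request model in your copy of `graph_service.py`. If the service turns the exception into an HTTP error response, the body may include the underlying error message; if not, the traceback (if any) would appear in the `graph_service.py` console instead.

```python
import json
import urllib.error
import urllib.request
import uuid
from typing import Optional, Tuple

# Address taken from the Uvicorn startup line in the log above.
INVOKE_URL = "http://127.0.0.1:8100/invoke"


def build_payload(message: str, thread_id: Optional[str] = None) -> dict:
    """Build a JSON body for /invoke.

    Assumption: the endpoint accepts these three fields; adjust the names
    to match the request model defined in graph_service.py.
    """
    return {
        "message": message,
        # A fresh thread id per test run, unless one is supplied.
        "thread_id": thread_id or str(uuid.uuid4()),
        "is_first_message": True,
    }


def invoke(message: str, url: str = INVOKE_URL, timeout: float = 300.0) -> Tuple[int, str]:
    """POST a prompt to the graph service and return (status, body), even on HTTP errors."""
    data = json.dumps(build_payload(message)).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # A 500 lands here; its body may carry the server-side error detail.
        return err.code, err.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    status, body = invoke("Create a simple hello-world Pydantic AI agent.")
    print(status)
    print(body)
```

Run it while `graph_service.py` is up. If the same 500 appears without Windsurf involved, the problem is on the graph service side (for example, how the model used by `pydantic_ai_coder` is configured) rather than in the MCP connection.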