Hi,

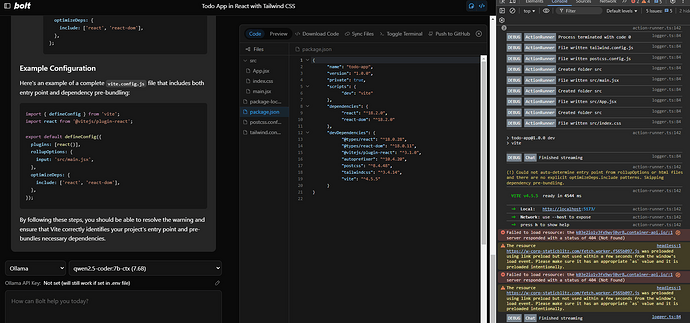

Any ideas on how to resolve this issue with the preview not loading when running in Docker?

I switched models. So far, Qwen 2.5 Coder and DeepSeek Coder are working for me.

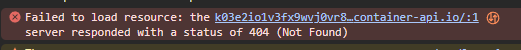

I’m using Qwen 2.5 Coder 7B. It generates the code files and tries to open the preview window, but I get a 404 error for the preview, which suggests something is wrong with the virtual environment needed to show the preview.

I have that issue intermittently. Try DeepSeek Coder, the small one.

Yeah, I agree with @stevenK: it could be the LLM hallucinating and not running the right commands for the preview. I’d first try a different model and see if the issue persists. If it does, it could be a number of different things, like a browser setting or an extension interfering.

I wonder if it could be the temperature setting Ollama uses. I’m going to see if adjusting the temperature parameter helps with the hallucinations.
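For anyone who wants to try the same thing outside of bolt, here is a minimal sketch of sending a prompt to Ollama with a lower temperature through its `/api/generate` endpoint. The URL assumes Ollama's default local port (from inside a Docker container the host will likely be different), the model tag `qwen2.5-coder:7b` and the value `0.2` are just examples, and the helper names `build_request` and `generate` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
# Adjust the host if Ollama is reached from inside a container.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, temperature: float) -> dict:
    """Build the JSON body for a non-streaming generate call."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a stream of chunks
        "options": {"temperature": temperature},  # lower = less random output
    }


def generate(model: str, prompt: str, temperature: float = 0.2,
             url: str = OLLAMA_URL) -> str:
    """Send the prompt to Ollama and return the generated text."""
    body = json.dumps(build_request(model, prompt, temperature)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Example model tag; use whichever model you have pulled locally.
    print(generate("qwen2.5-coder:7b", "Write a hello-world HTML page."))
```

Running the same prompt at a couple of different temperatures makes it easier to tell whether the setting changes the output at all, separately from whatever is causing the 404.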

How did it go? Did it work for you?

It didn’t seem to make a difference for the 404 error. I’m still evaluating whether the temperature parameter improves the quality of the LLM responses.

Hi,

Any update on this issue?

That’s not a bolt problem at all. It’s mostly just the LLM not working well together with bolt; in this case, the model is simply too small to deliver proper output.

To rule out the model as the cause, try Gemini 2.0 Flash (Google); it should work fine.

Or one of the other models mentioned in the FAQ:

https://stackblitz-labs.github.io/bolt.diy/FAQ/