Hey guys,

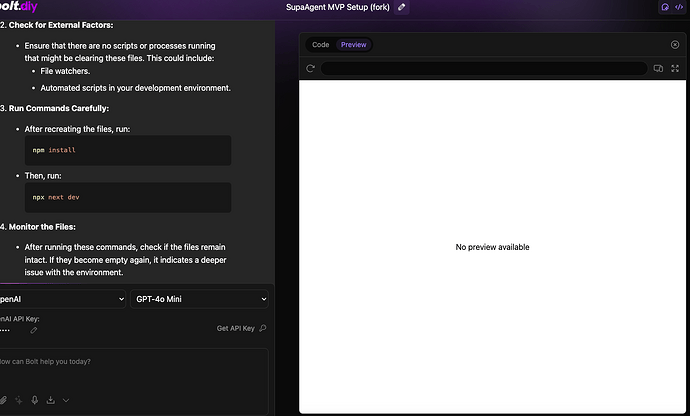

I’m new to Bolt.new and Bolt.diy and need help displaying my app in the Preview page. I’m trying to get the LLM to show the website in the Preview page, but it keeps asking for my WebContainer URL and I’m not sure where to find it. I also ran `npm install && npm run dev` and still couldn’t get the Preview page to work.

From the LLM:

"The WebContainer’s preview mechanism is different from a typical `npm run dev` setup. A standard `npm run dev` starts a development server on your local machine, making it accessible via localhost. The WebContainer, however, runs in a sandboxed environment, and its preview functionality handles the serving and accessibility of the application differently.

The instructions you quoted assume a standard Node.js development environment where you’d run commands on your local machine to start a development server. The WebContainer, however, manages the server and preview internally. My previous responses incorrectly implied that a simple `npm run dev` would update the preview. That’s not how the WebContainer’s preview works.

To see your application, you must obtain the correct URL from the WebContainer interface (as explained in previous responses) and access it through your browser. There’s no direct “preview update” mechanism controlled by running `npm run dev` or similar commands within the WebContainer in this specific context."
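For context, here is a minimal TypeScript sketch of where a WebContainer preview URL normally comes from, assuming StackBlitz’s `@webcontainer/api` package: the container emits a `server-ready` event with the port and URL once a dev server inside it starts listening. The `ServerReadySource` interface and the `waitForPreviewUrl` helper are hypothetical names for illustration. I also can’t confirm that Bolt.diy wires its Preview page exactly this way.

```typescript
// Hypothetical structural type covering only the part of a WebContainer
// instance used here. Assumption: it mirrors @webcontainer/api's
// `on("server-ready", (port, url) => ...)`, which returns an unsubscribe function.
interface ServerReadySource {
  on(event: "server-ready", listener: (port: number, url: string) => void): () => void;
}

interface ServerReady {
  port: number;
  url: string;
}

// Resolves with the first port/URL reported by a dev server running inside
// the container, or rejects if nothing is reported within `timeoutMs`.
function waitForPreviewUrl(
  container: ServerReadySource,
  timeoutMs = 30_000,
): Promise<ServerReady> {
  return new Promise((resolve, reject) => {
    const timer = setTimeout(() => {
      unsubscribe(); // stop listening once we give up
      reject(new Error(`no server-ready event within ${timeoutMs} ms`));
    }, timeoutMs);

    // Assumes the event is delivered asynchronously, as it is when a real
    // dev server (e.g. one started by `npm run dev`) begins listening.
    const unsubscribe = container.on("server-ready", (port, url) => {
      clearTimeout(timer);
      unsubscribe(); // only the first server-ready event is needed
      resolve({ port, url });
    });
  });
}
```

In a real page you would pass the instance returned by `await WebContainer.boot()` (which requires a cross-origin-isolated page), run `npm install` and `npm run dev` inside it with `spawn`, and then point an iframe at the resolved `url`.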