Hi

Firstly, where did this screenshot come from, please? There’s very little helpful detail here.

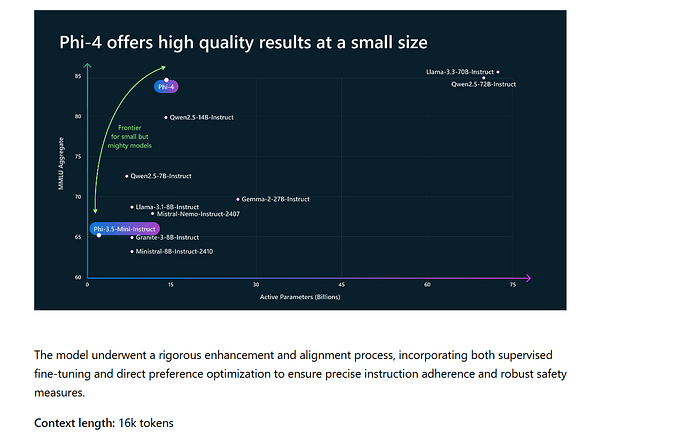

I guess from here: https://techcommunity.microsoft.com/blog/aiplatformblog/introducing-phi-4-microsoft’s-newest-small-language-model-specializing-in-comple/4357090

But as this is the official one from MS, it would be interesting to know why you think it should be 32k.

@giga - I was also trying to work out what it has to do with Bolt.diy.

It's from Ollama, @shawn. Just scroll down on the phi4 model page. I was trying to use it as an Ollama model in bolt.diy until the context length didn't make sense.

What do you mean by "it's from Ollama"? Ollama is installed locally on your system and only has the models you downloaded yourself. There is no fixed list. It's fully up to you.

Please attach some screenshots to clarify what you're hinting at.

phi4 scroll down

OK, but the question is still open: where does the assumption come from that it should have 32k?

Yeah, idk either, just pointing out where Ollama has their model info, as a general FYI.