Hello everyone! This is my first post in this forum, and I’m thrilled to be part of the community. Before I get started, I want to give a huge kudos to the entire community, which I’ve been following closely since the Bolt.new fork. I think I must be among the first to watch every Eduards and Colin video! lol

Yesterday I ran into an issue after pulling either commit e064803 or fce8999. I can’t be certain which of the two caused the problem.

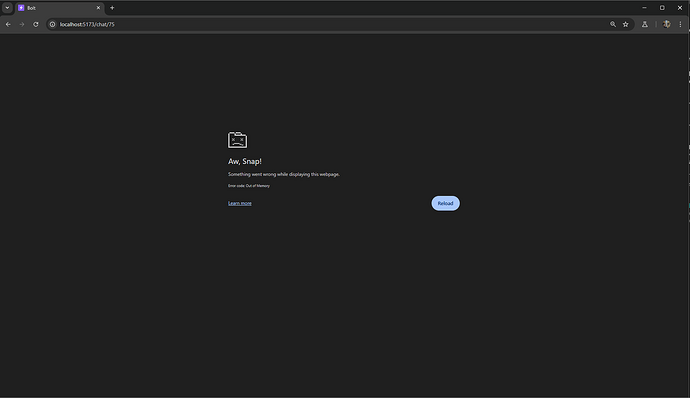

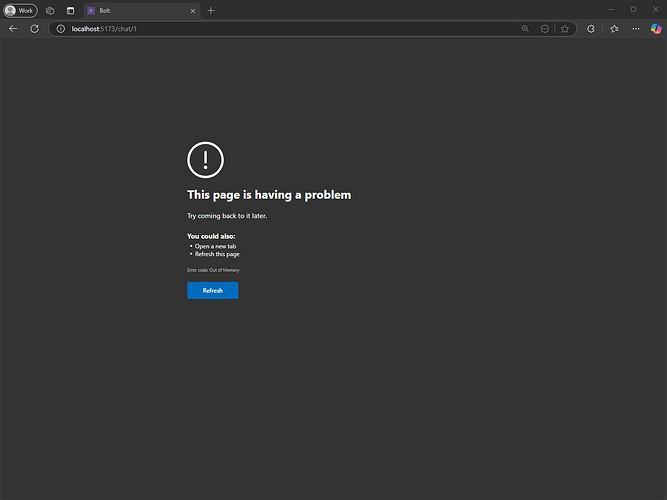

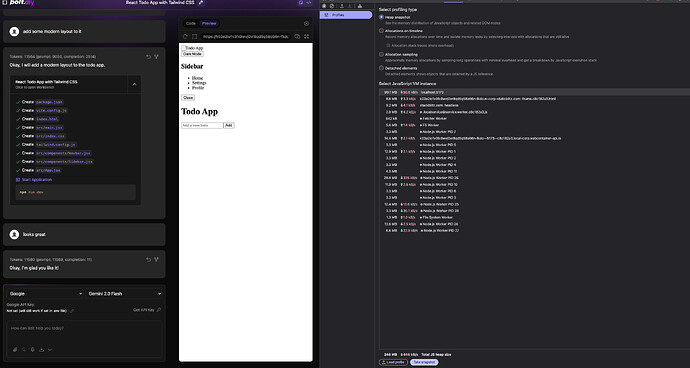

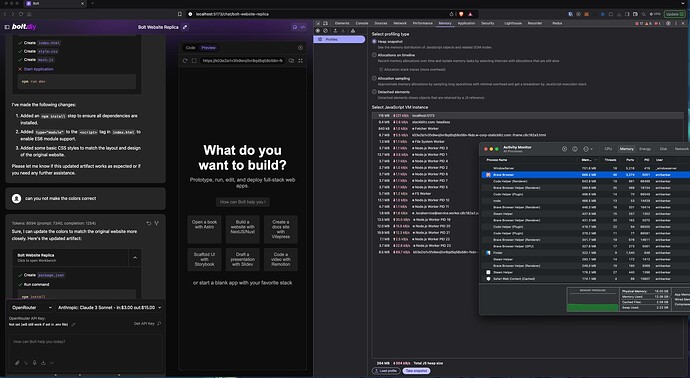

Here’s what happens: my browser (Chrome Canary) crashes with an Out of Memory error.

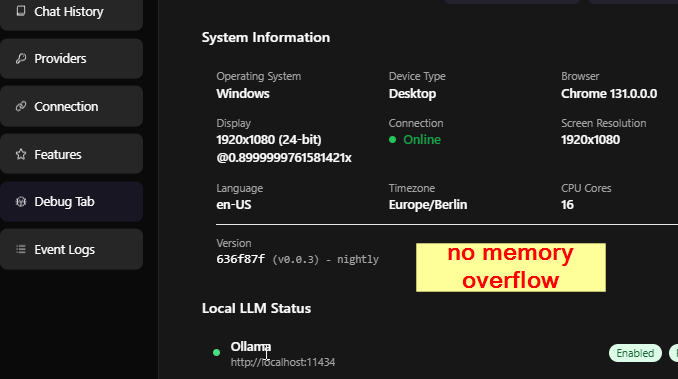

Here is my debug info:

{

“System”: {

“os”: “Windows”,

“browser”: “Chrome 133.0.0.0”,

“screen”: “2560x1080”,

“language”: “en-US”,

“timezone”: “America/Sao_Paulo”,

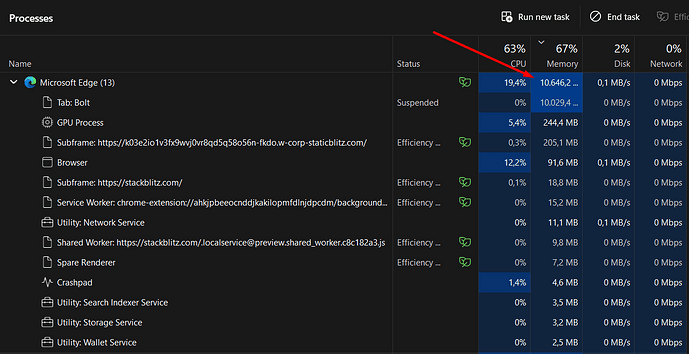

“memory”: “4 GB (Used: 198.03 MB)”,

“cores”: 8,

“deviceType”: “Desktop”,

“colorDepth”: “24-bit”,

“pixelRatio”: 0.800000011920929,

“online”: true,

“cookiesEnabled”: true,

“doNotTrack”: false

},

“Providers”: [

{

“name”: “Ollama”,

“enabled”: true,

“isLocal”: true,

“running”: false,

“error”: “No URL configured”,

“lastChecked”: “2024-12-19T15:12:34.640Z”,

“url”: null

},

{

“name”: “OpenAILike”,

“enabled”: true,

“isLocal”: true,

“running”: false,

“error”: “No URL configured”,

“lastChecked”: “2024-12-19T15:12:34.640Z”,

“url”: null

},

{

“name”: “LMStudio”,

“enabled”: true,

“isLocal”: true,

“running”: false,

“error”: “No URL configured”,

“lastChecked”: “2024-12-19T15:12:34.641Z”,

“url”: null

}

],

“Version”: {

“hash”: “381d490”,

“branch”: “main”

},

“Timestamp”: “2024-12-19T15:12:36.477Z”

}

I can’t say for sure if this is directly related, but code streaming seems glitchy and irregular. It just doesn’t “flow” as smoothly as before.

Moreover, I’ve noticed some warnings:

[WARNING] 12/19/2024, 12:40:35 PM
Resource usage threshold approaching
{
  "memoryUsage": "75%",
  "cpuLoad": "60%"
}

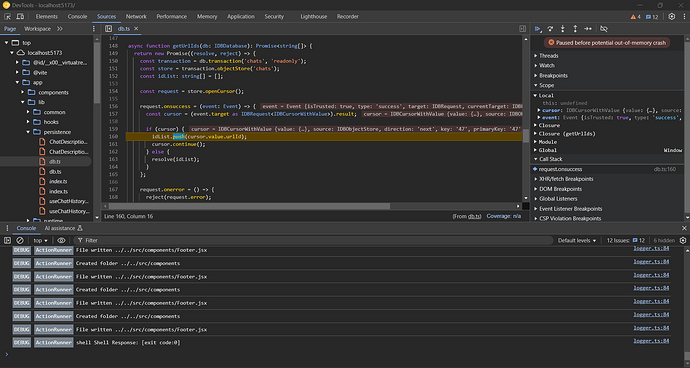
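In case it helps anyone trying to reproduce this, here is a minimal TypeScript sketch for checking whether the JS heap is actually growing before the crash. It assumes readings shaped like Chrome's non-standard `performance.memory` object (`usedJSHeapSize`, `totalJSHeapSize`, `jsHeapSizeLimit`, all in bytes). The helper names `summarizeHeap` and `isGrowing`, the 75% warning level, and the 20% growth cutoff are my own illustrative choices, not anything taken from the project's code.

```typescript
// Shape of Chrome's non-standard `performance.memory` object (values in bytes).
interface HeapInfo {
  usedJSHeapSize: number;
  totalJSHeapSize: number;
  jsHeapSizeLimit: number;
}

interface HeapSummary {
  usedMB: number;
  limitMB: number;
  ratio: number; // used / limit, between 0 and 1
  warn: boolean; // true once ratio reaches the warning level
}

const BYTES_PER_MB = 1024 * 1024;

// Hypothetical helper: summarize a single heap reading.
// `warnAt` is an assumed threshold (0.75 mirrors the 75% in the warning above).
function summarizeHeap(heap: HeapInfo, warnAt: number = 0.75): HeapSummary {
  const ratio = heap.usedJSHeapSize / heap.jsHeapSizeLimit;
  return {
    usedMB: Math.round((heap.usedJSHeapSize / BYTES_PER_MB) * 100) / 100,
    limitMB: Math.round((heap.jsHeapSizeLimit / BYTES_PER_MB) * 100) / 100,
    ratio,
    warn: ratio >= warnAt,
  };
}

// Hypothetical helper: a rough leak signal. Returns true when the last sample
// is at least `minIncrease` (as a fraction) larger than the first one.
function isGrowing(samples: number[], minIncrease: number = 0.2): boolean {
  if (samples.length < 2) return false;
  const first = samples[0];
  const last = samples[samples.length - 1];
  return first > 0 && (last - first) / first >= minIncrease;
}
```

To use it in Chrome, one could read `(performance as any).memory` every few seconds while a response is streaming, push `usedJSHeapSize` into an array, and call `isGrowing` on that array. If the value keeps climbing across several prompts without ever dropping back, that would point toward a leak rather than a one-off spike.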

Overall, performance is noticeably slower, and after some time Chrome crashes. This is what I see in the console:

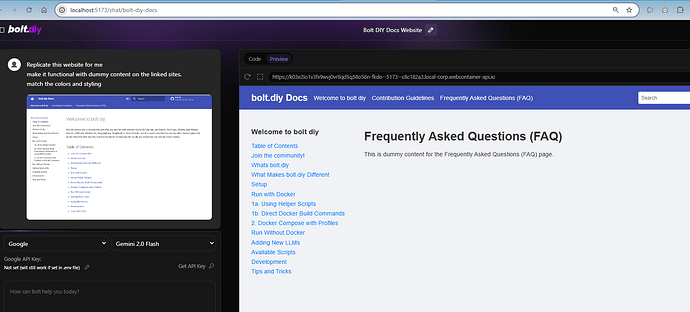

The issue first occurred with Haiku 3.5, but it has also shown up with several other models, including Llama 3.3, Amazon Nova, Codestral, and GPT-4o.

Thank you for your time, and looking forward to your insights!