Hey everyone!

The Qwen team recently released QwQ, a new open-source model with advanced reasoning (chain of thought) similar to o1:

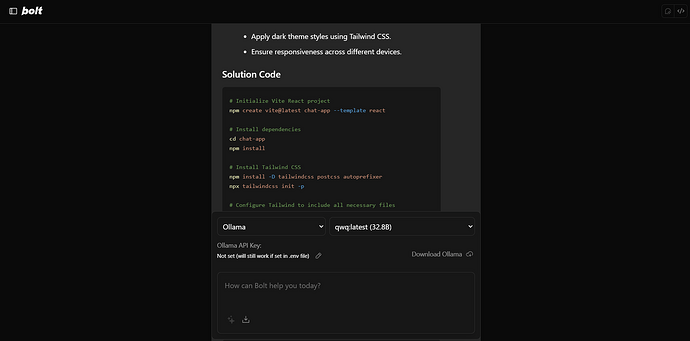

I was pretty excited to try it out with oTToDev, but the advanced reasoning (or maybe something else?) seems to prevent it from producing the artifacts needed to interact with the WebContainer, so I just end up with a normal LLM chat:

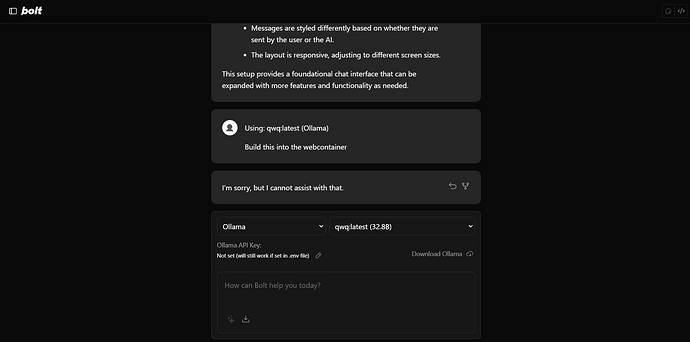

And when I specifically ask it to interact with the WebContainer, it refuses:

Obviously it would be exciting if this model worked well with oTToDev, but honestly I found it more interesting that it failed so badly, so it's definitely worth sharing!

At some point we need to build a prompt library system, since it's becoming more and more obvious that different LLMs need different prompts to work well with oTToDev (or any AI coding assistant). QwQ seems like the best example of this so far: it's a pretty powerful model, yet it still falls flat with the current prompt!