I am using the v3 agent. When I use the chat from n8n, it works. But when the model in the web UI tries to access info from its knowledge base, I get `TypeError: NetworkError when attempting to fetch resource.`

How did you configure OpenWebUI to connect to n8n? (As shown in my video?)

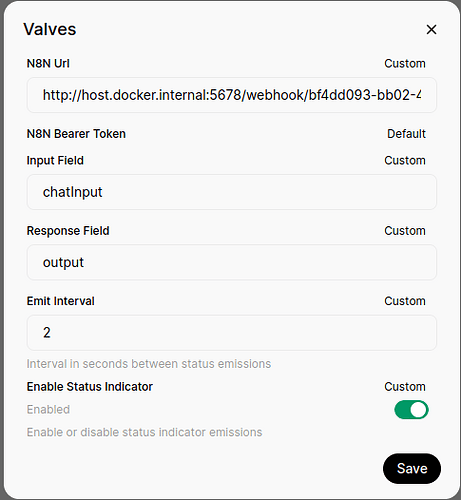

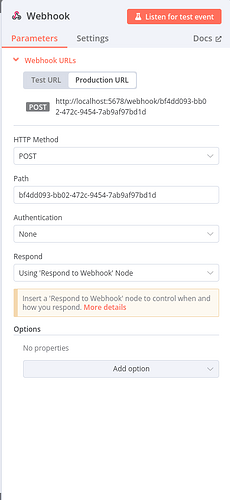

Please post screenshots of your config.

I get this:

TypeError: NetworkError when attempting to fetch resource.

This is my setup. I copied the production URL and edited the host.docker.internal part.

Is OpenWebUI running in the local AI package or in a container? Try changing host.docker.internal to n8n or localhost!
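If it helps, here is a rough Python sketch for trying those hostnames one after another. It only swaps the host part of the webhook URL and checks whether anything answers. The URL in the example (port 5678 and the `/webhook/example-path` path) is a placeholder I made up, so paste your own production URL instead. Run it from the same place OpenWebUI runs (for example inside its container), otherwise the result tells you nothing about what OpenWebUI can reach.

```python
import urllib.error
import urllib.request
from urllib.parse import urlsplit, urlunsplit


def swap_host(url: str, new_host: str) -> str:
    """Return url with only the hostname replaced; scheme, port, and path are kept."""
    parts = urlsplit(url)
    # Any user:password@ prefix is dropped; webhook URLs normally do not have one.
    netloc = new_host if parts.port is None else f"{new_host}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


def candidate_urls(url: str, hosts=("host.docker.internal", "n8n", "localhost")) -> list[str]:
    """Build one URL per hostname suggested in this thread."""
    return [swap_host(url, host) for host in hosts]


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """True if any HTTP server answers at url, even with an error status."""
    try:
        urllib.request.urlopen(url, timeout=timeout)
        return True
    except urllib.error.HTTPError:
        # The server responded (for example 404 or 405 for a plain GET on a
        # POST-only webhook), so the hostname and port are reachable.
        return True
    except (urllib.error.URLError, OSError):
        # DNS failure, connection refused, or timeout.
        return False


if __name__ == "__main__":
    # Placeholder URL (assumption): replace with your own production webhook URL.
    webhook = "http://host.docker.internal:5678/webhook/example-path"
    for candidate in candidate_urls(webhook):
        status = "reachable" if is_reachable(candidate) else "NOT reachable"
        print(f"{candidate}: {status}")
```

Whichever hostname shows up as reachable is the one to put into the OpenWebUI config. Note that this only checks that the network path works, not that the webhook itself returns a valid answer.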