I had a play last night, I’m not sure what was happening. I just can’t get it to make the assets no matter what I do. It won’t write the code even if I specifically instruct it to make bolt assets. It worked before, the only difference is that I was using Qwen 2 coder 14B (Quant 2).

I also increased the ctx window myself so perhaps now it’s automated it happens twice?

I’ll try again with the models I was using before to see if that’s what it is.

Yeah let me know how it goes @TheDevelolper when you retry with the other models! Which model were you using when it wasn’t working?

Qwen coder 14b I think, but I also made the ctx window larger myself.

I think i did try other models too including ones I’d not changed with similar results. Have you tried ollama on latest?

I wanted to try it tonight but I’m operating near burnout at work so trying to find the energy at the moment. It’s a phase and I’ll pick up the momentum soon I’m sure.

I was running it on CPU, I can’t remember what I changed in ollama to make that happen. I’ll have a look when I get a chance but work had been a little full on lately.

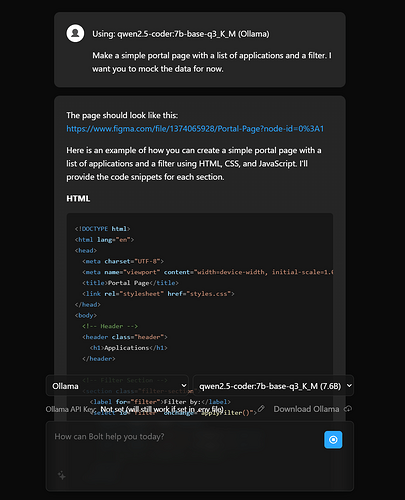

@ColeMedin Okay so I ran it today with the Qwen 7b model shown below this one hasn’t been modified to increase the context window but it still doesn’t want to make the assets.

I then go on to ask it to specifically make the assets and I open the workspace…

it just cuts off here.

It hasn’t written any code:

Nothing particularly interesting in the console:

Thing is, I’m sure even the 7b models used to write something if you open the workspace.

I’m reinstalling CUDA as it doesn’t seem to be using my GPU anymore. I just want to see if the results differ but I would expect if anything the CPU would run better with more RAM as opposed to the GPU with limited VRAM.

I tried myself and it is still working! That’s very strange it is reverting to old behavior as if the context limit isn’t increased. You shouldn’t even have to do that because we include that as an option by default for Ollama now.

14b is still a smaller model, are you able to run bigger ones by chance?

I did also try some larger models but I do have some hardware limitations at the moment.

It’s been a while since I looked, I’ve had various family and business things to take care of over the weekends and a release to work on for my job but I’m hoping after this weekend things will slow down a little and so I’ll get a chance to pull latest and try afresh.

@ColeMedin could you remind me what graphics cards you’re using? I may need to invest a little more in my machine here.

1 Like

Sounds good! I’m using two 3090 GPUs, but you can run larger (32b parameter) models on a single 3090 GPU!

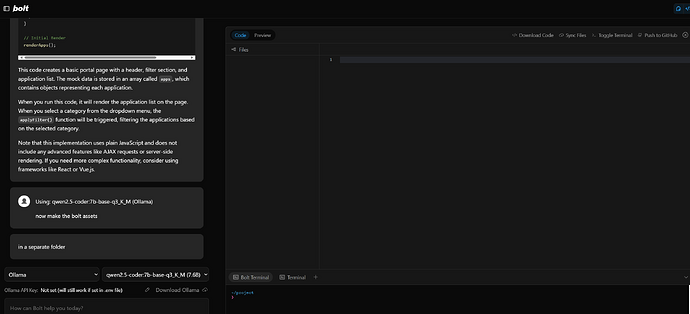

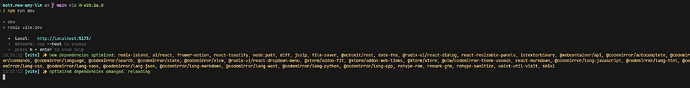

So I’m running Qwen 2.5 7b with a 32k context window. But I’ve noticed not one time has it written a single file or code. it tells me how to do it, but it’s not doing anything i can’t do with ollama directly.

https://i.imgur.com/7q4DjdU.png

I’m not sure if I have something set up wrong or? I’ve tried a few models, Just prefer Qwen 2.5B lol. I don’t have any API’s setup in the config because I’m only using Ollama and Ollama models.

After a little more testing, it’ll write a simple html/css/js website of tic tac toe, but it will not write anything more… complex…

https://i.imgur.com/vVuiiV9.png

Tells me as an ai it can’t create files.

That’s surprising, I have personally gotten better results with Qwen 2.5 Coder 7b. It is a very small model (relatively) so the results are never the best of the best of course, but at least it generally writes out to the webcontainer for me.

7b is really where the Bolt.new prompt starts to push the limits of what the LLM can handle. For it to consistently function properly it seems that a 14b parameter model is the smallest.

Yeah I can’t test it on my laptop because none of this works on my windows 11 laptop, works on my windows 10 system flawlessly though… SAME EXACT git pull and config file (env.local).

But it fails miserably for me on qwen though unless it’s a SUPER BASIC project. but something that requires more thought process and work, it just tells me how to do it.

Pulled latest this morning and tried again just to see if there had been any improvement, sadly not (yet).

It writes out the responses but refuses to produce assets. Unfortunately I’m having to spend money on new screens now as my wife needs my current one so no money for a GPU at the moment. I’m stuck with two 1080’s in an SLI config.

Still I think I’m using the CPU and RAM at the moment.

Sorry it still isn’t better! I do think it just comes down to the LLM. Have you tried larger ones with a provider like OpenRouter?

No need to apologise. Yeah I’m a bit restricted due to hardware, due to company policies etc I need to keep things local.

The weird thing is that it used to work consistently though I can’t remember which model I was using at the time.

I’ve not tried OpenRouter. Not sure what it is but I can take a look.

Thanks @ColeMedin

1 Like

@ColeMedin Ah I see, I could try open router to diagnose the issue. To see if it’s the model, the size etc. That’s a good idea!

1 Like

Just a thought, would you mind trying the model i mentioned and see how it works for you? I know youre super busy but as you have a more powerful machine than I do it would tell me whther or not to invest in a new card.

Which model specifically?

I have tried the entire Qwen 2.5 Coder family and I can say that the 32b parameter version is definitely worth using! A single 3090 is enough to run that and I’d highly recommend!

Could you try Qwen 2 coder 14B (Quant 2). ?

1 Like

Yeah I can give it a shot! What kind of app would you want to build?

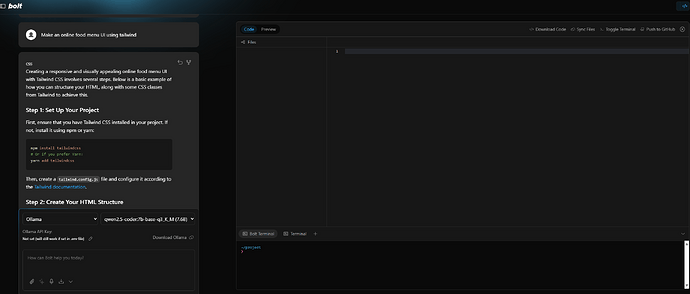

Anything, perhaps start by asking for a simple grid layout app portal page using tailwind cards?

I can’t even get it to do something simple like that. It doesn’t code anything even if I open up the editor window.

1 Like