Good afternoon,

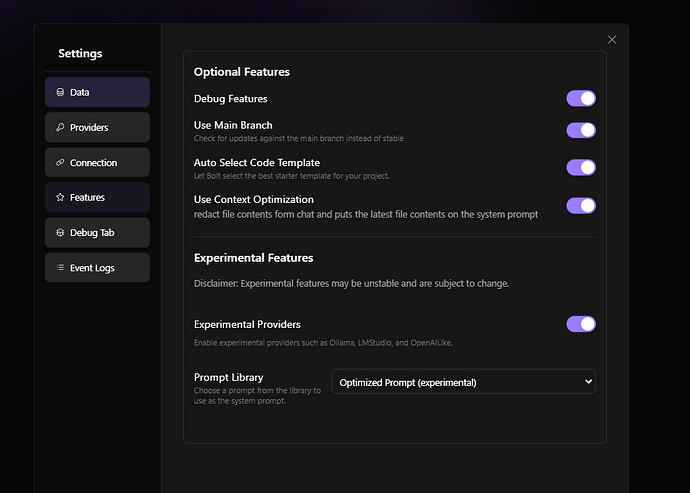

Firstly, I would like to give a huge shout-out to @leex279 for helping me get off the ground. I managed to get bolt.diy up and running with Gemini; however, when I try to use Ollama with Qwen, it fails with the error "API connection failed".

The logs are below:

[INFO] 2/2/2025, 5:07:00 PM
Application initialized
{
"environment": "development"
}

[DEBUG] 2/2/2025, 5:07:00 PM
System configuration loaded
{
"runtime": "Next.js",
"features": [
"AI Chat",
"Event Logging"
]
}

[WARNING] 2/2/2025, 5:07:00 PM
Resource usage threshold approaching
{
"memoryUsage": "75%",
"cpuLoad": "60%"
}

[ERROR] 2/2/2025, 5:07:00 PM
API connection failed
{
"endpoint": "/api/chat",
"retryCount": 3,
"lastAttempt": "2025-02-02T15:07:00.486Z",
"error": {
"message": "Connection timeout",
"stack": "Error: Connection timeout\n at http://localhost:5173/app/components/settings/event-logs/EventLogsTab.tsx:63:48\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:24987:11)\n at flushPassiveEffectsImpl (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:26368:11)\n at flushPassiveEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:26325:22)\n at commitRootImpl (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:26294:13)\n at commitRoot (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=21b5683c:26155:13)"
}
}
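The failing endpoint in this log is most likely bolt.diy's own `/api/chat` route, not Ollama itself. To check whether Ollama answers a chat request for the Qwen model independently of bolt.diy, a minimal sketch (Node 18+) could call Ollama's native `POST /api/chat` endpoint directly. The base URL `http://127.0.0.1:11434` (Ollama's default port) and the model tag are assumptions; substitute whatever `ollama list` shows on your machine.

```typescript
// Sketch: send one non-streaming chat request straight to Ollama (Node 18+).
// Assumptions: Ollama listens on http://127.0.0.1:11434, and `model` is a tag
// you have already pulled ("qwen2.5-coder:7b" below is only a placeholder).

interface OllamaChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Build the JSON body for POST /api/chat; `stream: false` asks Ollama to
// return a single response object instead of a stream of chunks.
function buildChatRequest(model: string, prompt: string) {
  return {
    model,
    messages: [{ role: "user", content: prompt }] as OllamaChatMessage[],
    stream: false,
  };
}

// Returns the assistant's reply text, or throws on HTTP errors or timeout.
async function pingOllamaChat(
  model: string,
  baseUrl = "http://127.0.0.1:11434",
  timeoutMs = 60_000,
): Promise<string> {
  const res = await fetch(`${baseUrl}/api/chat`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildChatRequest(model, "Reply with the word ok.")),
    // Loading a model into memory the first time can be slow, so allow a generous timeout.
    signal: AbortSignal.timeout(timeoutMs),
  });
  if (!res.ok) {
    // Non-2xx: include the body, which usually explains the cause (e.g. an unknown model tag).
    throw new Error(`HTTP ${res.status}: ${await res.text()}`);
  }
  const data = (await res.json()) as { message?: OllamaChatMessage };
  return data.message?.content ?? "";
}
```

If `await pingOllamaChat("qwen2.5-coder:7b")` returns a reply but bolt.diy still times out, the problem is more likely in how bolt.diy reaches Ollama than in the model itself.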

Ollama is running locally, and I have confirmed that the server is up. I have set the base URL both in the GUI and in the .env.local file.
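A quick way to confirm that the configured base URL is reachable from Node is to query Ollama's `GET /api/tags` endpoint, which lists the locally pulled models. Node is the runtime that bolt.diy's server side uses. The sketch below assumes Node 18+ and assumes the URL lives in an `OLLAMA_API_BASE_URL` variable; I believe that is the name bolt.diy's `.env.example` uses, but double-check yours. It defaults to `http://127.0.0.1:11434` rather than `localhost` because recent Node versions may resolve `localhost` to the IPv6 address `::1`, while Ollama listens on IPv4 `127.0.0.1` by default.

```typescript
// Sketch: check that a local Ollama server is reachable and list its models (Node 18+).
// Assumption: the base URL comes from OLLAMA_API_BASE_URL (verify against your .env.local).

const DEFAULT_OLLAMA_URL = "http://127.0.0.1:11434";

// Fall back to the default when unset, trim whitespace, and strip trailing
// slashes so `${base}/api/tags` never contains "//".
function normalizeBaseUrl(raw: string | undefined): string {
  const value = (raw ?? "").trim();
  return (value === "" ? DEFAULT_OLLAMA_URL : value).replace(/\/+$/, "");
}

// Only the part of the GET /api/tags response this sketch relies on.
interface TagsResponse {
  models?: { name: string }[];
}

// Extract model tags (e.g. "qwen2.5-coder:7b") from the /api/tags payload.
function parseModelNames(body: TagsResponse): string[] {
  return (body.models ?? []).map((m) => m.name);
}

// Query Ollama directly, bypassing bolt.diy, with an explicit timeout.
async function checkOllama(
  rawBaseUrl: string | undefined = process.env.OLLAMA_API_BASE_URL,
  timeoutMs = 5000,
): Promise<string[]> {
  const base = normalizeBaseUrl(rawBaseUrl);
  const res = await fetch(`${base}/api/tags`, {
    signal: AbortSignal.timeout(timeoutMs),
  });
  if (!res.ok) {
    throw new Error(`Ollama responded with HTTP ${res.status} at ${base}`);
  }
  return parseModelNames((await res.json()) as TagsResponse);
}
```

Running `await checkOllama()` in a small script should return the pulled model tags. An error here, such as a timeout or a refused connection, would point at the URL or the network rather than at bolt.diy.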

If anyone can help me with this integration, I would appreciate it.