Hi everyone.

I use my old PC as a home server (with 2 RTX GPUs), plus a MacBook. The server runs Ubuntu 24.04. I installed Ollama on it and can use it both locally and over the network (on the MacBook I use Cline inside VS Code, and it can use Ollama on the Ubuntu server).

I installed bolt.diy on the home server. It runs on 192.168.1.200, and the ports are reachable (11434, 5173, and the other Pinokio ports).

I entered my other API keys and the local Ollama address, then renamed the file to ‘.env’. I tried two Ollama addresses: 127.0.0.1:11434 and 192.168.1.200:11434.
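For anyone who wants to reproduce the address check, here is a minimal sketch that asks each candidate address for its model list. It assumes Ollama's standard `/api/tags` endpoint; the helper names (`normalize_base_url`, `parse_model_names`, `list_models`) are made up for this example and are not part of bolt.diy or Ollama. Note that 127.0.0.1 can only work when the script (or bolt.diy) runs on the same machine as Ollama.

```python
import json
import urllib.error
import urllib.request


def normalize_base_url(address: str) -> str:
    """Add the http:// scheme if it is missing and drop any trailing slash."""
    address = address.strip()
    if not address.startswith(("http://", "https://")):
        address = "http://" + address
    return address.rstrip("/")


def parse_model_names(tags_json: str) -> list[str]:
    """Extract the model names from the JSON body returned by GET /api/tags."""
    payload = json.loads(tags_json)
    return [model["name"] for model in payload.get("models", [])]


def list_models(address: str, timeout: float = 5.0) -> list[str]:
    """Ask the Ollama server at `address` which models it has installed."""
    url = normalize_base_url(address) + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # The two addresses tried in the .env file.
    for candidate in ("127.0.0.1:11434", "192.168.1.200:11434"):
        try:
            print(candidate, "->", list_models(candidate))
        except (urllib.error.URLError, OSError) as exc:
            print(candidate, "-> not reachable:", exc)
```

Running it on the machine that hosts bolt.diy shows which of the two addresses that machine can actually reach.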

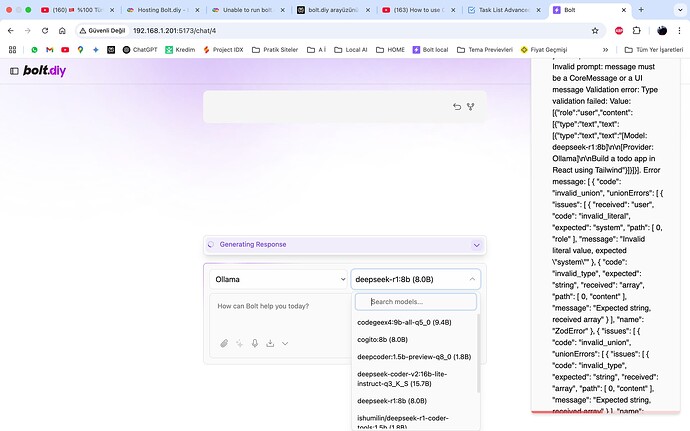

When I open 192.168.1.201:5173 on my MacBook, the bolt.diy main page loads, I can select Ollama, and bolt.diy gets the model list. But when I send a message to bolt, it doesn't answer.

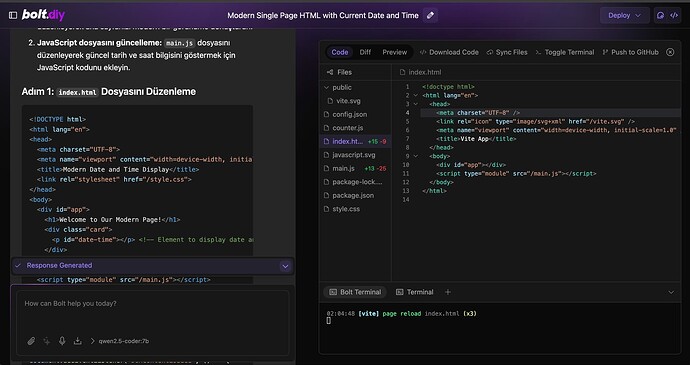

I've added a screenshot of this.

Can you give me some advice on how to fix this, please?

cumhur@UB-3060TI-AI:~/bolt.diy$ npm run dev -- --host

bolt@0.0.7 dev

node pre-start.cjs && remix vite:dev --host

★═══════════════════════════════════════★

B O L T . D I Y

Welcome

★═══════════════════════════════════════★

Current Version Tag: v"0.0.7"

Current Commit Version: “332edd3”

Please wait until the URL appears here

★═══════════════════════════════════════★

warn Data fetching is changing to a single fetch in React Router v7

┃ You can use the v3_singleFetch future flag to opt-in early.

┃ →

┗

➜ Local: localhost:5173/

➜ Network: 192.168.1.201:5173/

➜ Network: 192.168.1.200:5173/

➜ Network: 172.17.0.1:5173/

➜ press h + enter to show help

[unocss] failed to load icon “lucide:clock”

[unocss] failed to load icon “lucide:message-square”

[unocss] failed to load icon “lucide:search”

[unocss] failed to load icon “ph:git-repository”

INFO LLMManager Registering Provider: Anthropic

INFO LLMManager Registering Provider: Cohere

INFO LLMManager Registering Provider: Deepseek

INFO LLMManager Registering Provider: Google

INFO LLMManager Registering Provider: Groq

INFO LLMManager Registering Provider: HuggingFace

INFO LLMManager Registering Provider: Hyperbolic

INFO LLMManager Registering Provider: Mistral

INFO LLMManager Registering Provider: Ollama

INFO LLMManager Registering Provider: OpenAI

INFO LLMManager Registering Provider: OpenRouter

INFO LLMManager Registering Provider: OpenAILike

INFO LLMManager Registering Provider: Perplexity

INFO LLMManager Registering Provider: xAI

INFO LLMManager Registering Provider: Together

INFO LLMManager Registering Provider: LMStudio

INFO LLMManager Registering Provider: AmazonBedrock

INFO LLMManager Registering Provider: Github

indexedDB is not available in this environment.

[unocss] The labeled variant is experimental and may not follow semver.

[unocss] failed to load icon “ph:file-json”

[unocss] failed to load icon “ph:microchip”

[unocss] failed to load icon “ph:node”

INFO LLMManager Caching 0 dynamic models for Together

ERROR LLMManager Error getting dynamic models Anthropic : Missing Api Key configuration for Anthropic provider

ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider

ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider

ERROR LLMManager Error getting dynamic models OpenAI : Missing Api Key configuration for OpenAI provider

INFO LLMManager Caching 8 dynamic models for Ollama

INFO LLMManager Caching 40 dynamic models for Google

INFO LLMManager Caching 322 dynamic models for OpenRouter

ERROR LLMManager Error getting dynamic models Anthropic : Missing Api Key configuration for Anthropic provider

ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider

ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider

ERROR LLMManager Error getting dynamic models OpenAI : Missing Api Key configuration for OpenAI provider

I had to remove the http:// prefix from some of the IP addresses above, because as a new user I can't include more than 2 links in a post.

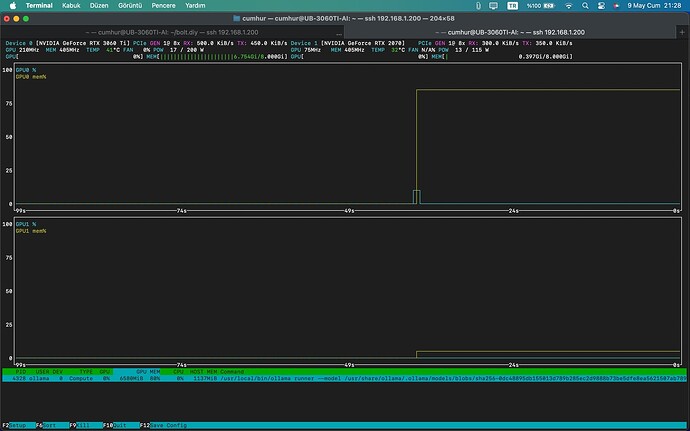

And this is an nvtop screenshot. The GPU is active only once, while the model loads at the start; after that nothing is processed, and bolt.diy still can't answer me.

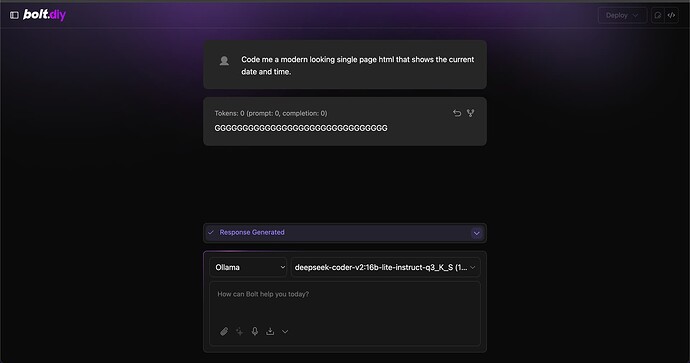

Now I can run it, but I still have a big problem.

My mistake was using npm to install and run it. I now use pnpm for both. But now I can't get correct answers. I have also installed bolt.diy on my MacBook now, while Ollama stays on the Ubuntu server (192.168.1.200:11434).

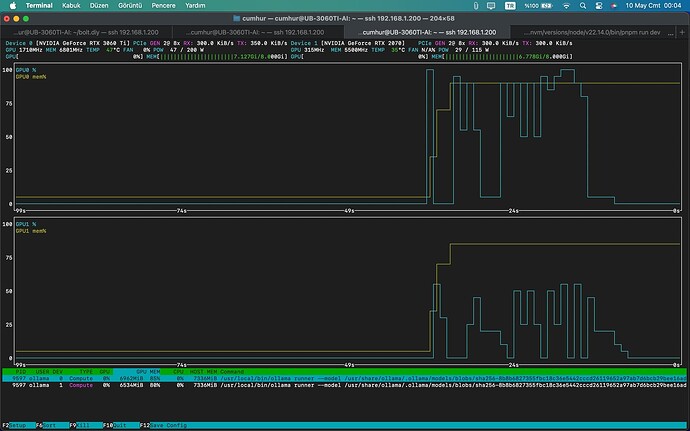

You can see bolt's answer, the GPU activity, and the bolt.diy logs.

I also need help using bolt.new correctly.

Bolt.diy Screen:

My GPUs:

And bolt.diy logs:

cumhur@unknown3e6bcde37ec0 bolt.diy % pnpm run dev

bolt@0.0.7 dev /Users/cumhur/Projeler/bolt.diy

node pre-start.cjs && remix vite:dev

★═══════════════════════════════════════★

B O L T . D I Y

Welcome

★═══════════════════════════════════════★

Current Version Tag: v"0.0.7"

Current Commit Version: “332edd3”

Please wait until the URL appears here

★═══════════════════════════════════════★

warn Data fetching is changing to a single fetch in React Router v7

┃ You can use the v3_singleFetch future flag to opt-in early.

┃ → Future Flags (v2.13.1) | Remix

┗

➜ Local: http://localhost:5173/

➜ Network: use --host to expose

➜ press h + enter to show help

[unocss] failed to load icon “ph:git-repository”

[unocss] failed to load icon “lucide:clock”

[unocss] failed to load icon “lucide:message-square”

[unocss] failed to load icon “lucide:search”

INFO LLMManager Registering Provider: Anthropic

INFO LLMManager Registering Provider: Cohere

INFO LLMManager Registering Provider: Deepseek

INFO LLMManager Registering Provider: Google

INFO LLMManager Registering Provider: Groq

INFO LLMManager Registering Provider: HuggingFace

INFO LLMManager Registering Provider: Hyperbolic

INFO LLMManager Registering Provider: Mistral

INFO LLMManager Registering Provider: Ollama

INFO LLMManager Registering Provider: OpenAI

INFO LLMManager Registering Provider: OpenRouter

INFO LLMManager Registering Provider: OpenAILike

INFO LLMManager Registering Provider: Perplexity

INFO LLMManager Registering Provider: xAI

INFO LLMManager Registering Provider: Together

INFO LLMManager Registering Provider: LMStudio

INFO LLMManager Registering Provider: AmazonBedrock

INFO LLMManager Registering Provider: Github

indexedDB is not available in this environment.

[unocss] The labeled variant is experimental and may not follow semver.

[unocss] failed to load icon “ph:file-json”

[unocss] failed to load icon “ph:microchip”

[unocss] failed to load icon “ph:node”

INFO LLMManager Caching 0 dynamic models for Together

ERROR LLMManager Error getting dynamic models Anthropic : Missing Api Key configuration for Anthropic provider

ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider

ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider

ERROR LLMManager Error getting dynamic models OpenAI : Missing Api Key configuration for OpenAI provider

INFO LLMManager Caching 8 dynamic models for Ollama

INFO LLMManager Caching 40 dynamic models for Google

INFO LLMManager Caching 322 dynamic models for OpenRouter

ERROR LLMManager Error getting dynamic models Anthropic : Missing Api Key configuration for Anthropic provider

ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider

ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider

ERROR LLMManager Error getting dynamic models OpenAI : Missing Api Key configuration for OpenAI provider

ERROR LLMManager Error getting dynamic models Anthropic : Missing Api Key configuration for Anthropic provider

ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider

ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider

ERROR LLMManager Error getting dynamic models OpenAI : Missing Api Key configuration for OpenAI provider

ERROR LLMManager Error getting dynamic models Anthropic : Missing Api Key configuration for Anthropic provider

ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider

ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider

ERROR LLMManager Error getting dynamic models OpenAI : Missing Api Key configuration for OpenAI provider

ERROR LLMManager Error getting dynamic models Anthropic : Missing Api Key configuration for Anthropic provider

ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider

ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider

ERROR LLMManager Error getting dynamic models OpenAI : Missing Api Key configuration for OpenAI provider

INFO api.llmcall Generating response Provider: Ollama, Model: deepseek-coder-v2:16b-lite-instruct-q3_K_S

DEBUG Ollama Base Url used: http://192.168.1.200:11434

INFO api.llmcall Generated response

DEBUG api.chat Total message length: 2, words

INFO LLMManager Found 8 cached models for Ollama

INFO stream-text Sending llm call to Ollama with model deepseek-coder-v2:16b-lite-instruct-q3_K_S

DEBUG Ollama Base Url used: http://192.168.1.200:11434

DEBUG api.chat usage {"promptTokens":null,"completionTokens":null,"totalTokens":null}
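One way to check whether the empty token usage comes from Ollama itself or from bolt.diy is to send a single prompt straight to the Ollama server. The sketch below assumes Ollama's standard `/api/generate` endpoint with `stream` set to false, and its `response`, `prompt_eval_count` and `eval_count` reply fields; the helper names and the test prompt are made up for this example.

```python
import json
import urllib.request


def build_generate_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize_reply(reply_json: str) -> dict:
    """Pull the generated text and the token counts out of the JSON reply."""
    reply = json.loads(reply_json)
    return {
        "text": reply.get("response", ""),
        "prompt_tokens": reply.get("prompt_eval_count"),
        "completion_tokens": reply.get("eval_count"),
    }


if __name__ == "__main__":
    # Base URL and model name are taken from the log above.
    request = build_generate_request(
        "http://192.168.1.200:11434",
        "deepseek-coder-v2:16b-lite-instruct-q3_K_S",
        "Say hello in one sentence.",
    )
    # Generous timeout, since the first call may have to load the model.
    with urllib.request.urlopen(request, timeout=120) as resp:
        print(summarize_reply(resp.read().decode("utf-8")))
```

If this prints text and non-null token counts, the model answers when called directly, and the problem is more likely on the bolt.diy side.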

I have now changed my LLM to qwen2.5-coder:7b and it works. bolt.diy can write code now, but only for the first prompt. It writes code into the code page normally for the first prompt, then answers further prompts only in the chat window.

Also, I can't edit the code manually; the SAVE button doesn't work.

You can see that it writes the second answer only in the chat window.
