I’m trying Archon for the first time and running into issues getting a result. I first tried using only local Ollama models, but I kept getting OpenAI-related errors even though I hadn’t set any OpenAI environment variables. I then switched to OpenAI, but couldn’t get it working with o1-mini as the reasoner. I don’t currently have access to o3-mini, but could this error come from a difference between o1-mini and o3-mini?

I’m new to all of this, so let me know if you need anything else. I’d also love more info on running everything locally with Ollama.

```
openai.BadRequestError: Error code: 400 - {'error': {'message': "Unsupported value: 'messages[0].role' does not support 'system' with this model.", 'type': 'invalid_request_error', 'param': 'messages[0].role', 'code': 'unsupported_value'}}
During task with name 'define_scope_with_reasoner'
```

Hello there,

I encountered the same error when I couldn’t use o3-mini and switched to o1-mini. The issue is that o1-mini doesn’t support the “system” role in messages.

Looking at the code, I noticed that when a `system_prompt` is set on the Pydantic AI `Agent`, it is sent with the `system` role in the API request. This happens in archon/archon_graph.py, lines 47-50.
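If you’d rather not edit each prompt by hand, the same idea can be applied generically to an OpenAI-style message list: merge any `system` messages into the first `user` message. This is a minimal sketch, not Archon or Pydantic AI code. `fold_system_messages` is a hypothetical helper that works on plain `{"role": ..., "content": ...}` dicts, it assumes (based only on the error above) that the target model rejects the `system` role, and wiring it into Archon’s request path is not shown.

```python
from typing import Dict, List

Message = Dict[str, str]


def fold_system_messages(messages: List[Message]) -> List[Message]:
    """Merge every 'system' message into the first 'user' message.

    Hypothetical helper: the system text is prefixed with a 'System Prompt:'
    label, matching the manual workaround in this thread.
    """
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    # Copy the remaining messages so the caller's list is not mutated.
    rest = [dict(m) for m in messages if m["role"] != "system"]
    if not system_parts:
        return rest
    prefix = "System Prompt: " + "\n".join(system_parts)
    for m in rest:
        if m["role"] == "user":
            m["content"] = f"{prefix}\n\n{m['content']}"
            return rest
    # No user message to attach to: send the instructions as a user message.
    return [{"role": "user", "content": prefix}] + rest
```

For example, a `system` + `user` pair comes back as a single `user` message whose content starts with `System Prompt: ...`, followed by a blank line and the original request.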

Since I just wanted to get Archon working, I directly modified the code:

```python
# comment out system_prompt
reasoner = Agent(
    reasoner_llm_model,
    # system_prompt='You are an expert at coding AI agents with Pydantic AI and defining the scope for doing so.',
)
```

Then I slightly adjusted the prompt in the define_scope_with_reasoner function defined in the same file:

```python
# Scope Definition Node with Reasoner LLM
async def define_scope_with_reasoner(state: AgentState):
    # First, get the documentation pages so the reasoner can decide which ones are necessary
    documentation_pages = await list_documentation_pages_tool(supabase)
    documentation_pages_str = "\n".join(documentation_pages)

    # Then, use the reasoner to define the scope
    prompt = f"""
    System Prompt: You are an expert at coding AI agents with Pydantic AI and defining the scope for doing so.

    User AI Agent Request: {state['latest_user_message']}

    Create detailed scope document for the AI agent including:
    ...
```

I added the `System Prompt: ...` line so that the system instructions are included directly in the user message instead.

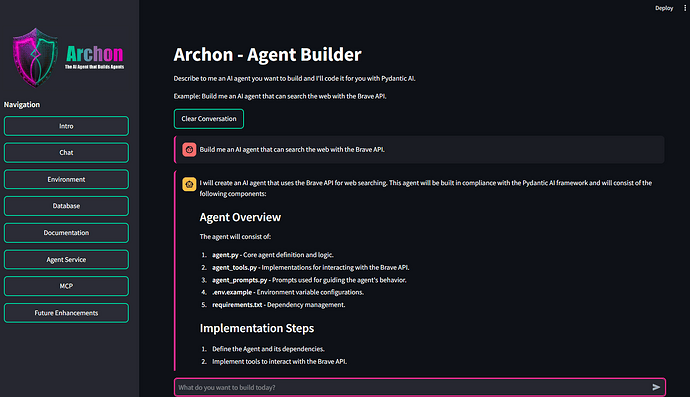

After making these code changes, I restarted the container, launched the Agent, and confirmed it works by sending “Build me an AI Agent that can search the web with the Brave API.” from the Chat interface.

I hope this information helps!

Thank you.

Thank you, that did appear to help, although I keep getting a response saying that it couldn’t find any documentation. Any ideas? I confirmed that the embeddings were processed and are stored in Supabase.

Hmm, I’m not sure what the issue might be.

It’s working fine in my environment.

Just to clarify, is Archon displaying that message and then ending without showing any code?

In other words, does the answer end with just that message?

If there’s some code displayed after the message, then it seems like there shouldn’t be a problem.

I’m curious whether you get the same “couldn’t find documentation” response if you try the same prompt multiple times, or when you ask it to create a different AI agent.

AI generates text non-deterministically, so it’s possible it just happened to respond that way.
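To check this more systematically, you could run the same prompt several times and tally the replies. A minimal sketch, assuming `generate` is a hypothetical callable that sends a prompt to Archon and returns the reply text, and that the missing-docs reply contains the phrase “couldn’t find” (adjust `MISSING_DOCS_PHRASE` to the wording you actually see):

```python
from collections import Counter
from typing import Callable

# Hypothetical marker text; change it to match the reply you actually get.
MISSING_DOCS_PHRASE = "couldn't find"


def count_missing_doc_replies(
    generate: Callable[[str], str], prompt: str, runs: int = 5
) -> Counter:
    """Send the same prompt `runs` times and tally replies that mention missing docs."""
    tally: Counter = Counter()
    for _ in range(runs):
        # Normalize curly apostrophes so "couldn’t" and "couldn't" both match.
        reply = generate(prompt).lower().replace("\u2019", "'")
        tally["missing_docs" if MISSING_DOCS_PHRASE in reply else "ok"] += 1
    return tally
```

If the message shows up in only some of the runs, that fits the non-deterministic explanation above better than a problem with the stored embeddings.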

For reference, I’ve attached a screenshot of what it looks like in my environment.


It does create the code, but I get the same or a similar missing-documentation message when I run it multiple times. Is there a way to confirm it’s actually using the docs?


Yes! In the logs for the Agent Service, you’ll see tool calls that say “retrieve_relevant_documentation” or “get_page_contents” when it’s using the docs to aid in its code generation.
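If you save those logs to a file, a small script can count the calls for you. This sketch only assumes that the two tool names appear somewhere in the relevant log lines; the exact log format of the Agent Service isn’t shown in this thread, so any sample lines you test it with are your own.

```python
import re
from collections import Counter
from typing import Iterable

# Tool names mentioned in this thread; everything else about the log format is assumed.
DOC_TOOLS = ("retrieve_relevant_documentation", "get_page_contents")
TOOL_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, DOC_TOOLS)) + r")\b")


def count_doc_tool_calls(log_lines: Iterable[str]) -> Counter:
    """Count occurrences of each documentation tool name across the given log lines."""
    counts: Counter = Counter()
    for line in log_lines:
        # findall returns every tool name matched on this line.
        counts.update(TOOL_PATTERN.findall(line))
    return counts
```

You can pass an open file handle directly, e.g. `count_doc_tool_calls(open("agent_service.log", encoding="utf-8"))`, where the file name is a placeholder for wherever you saved the logs. Zero counts for both names would suggest the docs tools were never called.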

Thanks! I confirmed it is now being called.
