Thanks for the reply! I appreciate the clarification and the guidance. Here’s my debug information and some observations:

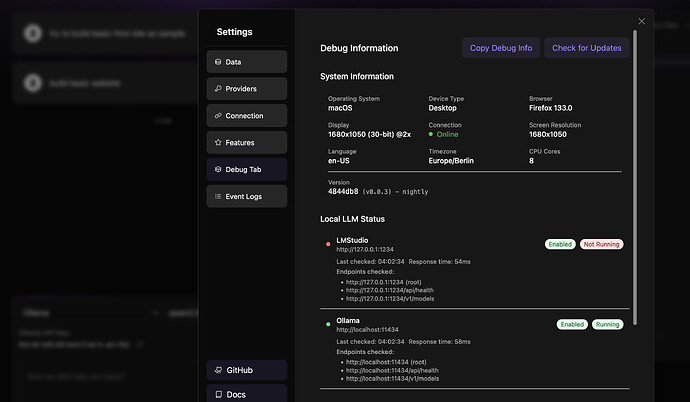

Debug Information: {

“System”: {

“os”: “macOS”,

“browser”: “Firefox 133.0”,

“screen”: “1680x1050”,

“language”: “en-US”,

“timezone”: “Europe/Berlin”,

“memory”: “Not available”,

“cores”: 8,

“deviceType”: “Desktop”,

“colorDepth”: “30-bit”,

“pixelRatio”: 2,

“online”: true,

“cookiesEnabled”: true,

“doNotTrack”: true

},

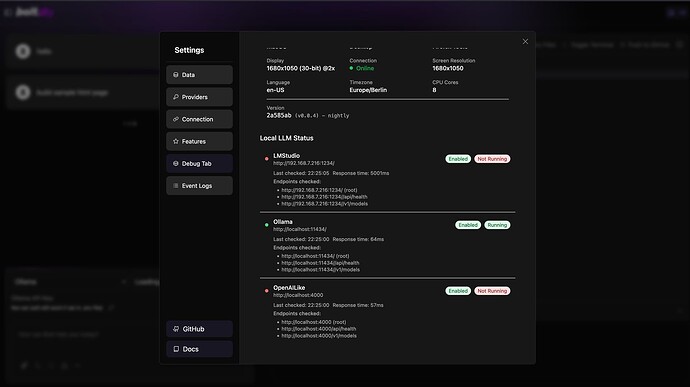

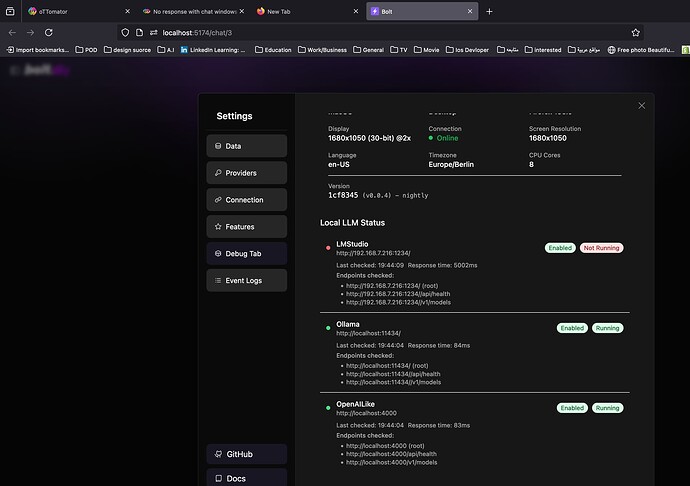

“Providers”: [

{

“name”: “LMStudio”,

“enabled”: true,

“isLocal”: true,

“running”: false,

“lastChecked”: “2024-12-31T03:01:34.149Z”,

“responseTime”: 73.69999999999709,

“url”: “http://127.0.0.1:1234”

},

{

“name”: “Ollama”,

“enabled”: true,

“isLocal”: true,

“running”: true,

“lastChecked”: “2024-12-31T03:01:34.153Z”,

“responseTime”: 76.34000000001106,

“url”: “http://localhost:11434”

},

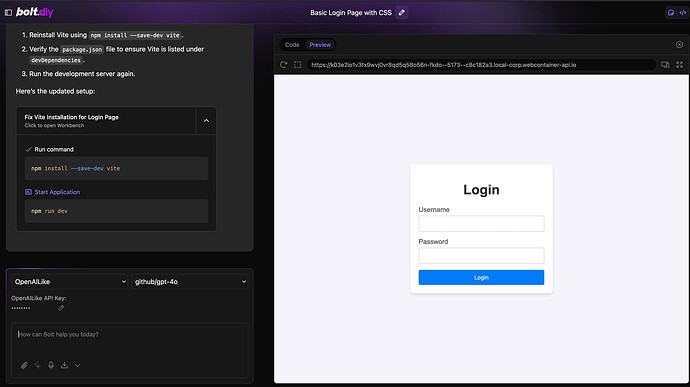

{

“name”: “OpenAILike”,

“enabled”: true,

“isLocal”: true,

“running”: false,

“lastChecked”: “2024-12-31T03:01:34.149Z”,

“responseTime”: 72.18000000000757,

“url”: " http://localhost:4000"

}

],

“Version”: {

“hash”: “4844db8”,

“branch”: “main”

},

“Timestamp”: “2024-12-31T03:01:46.604Z”

}… Observations:

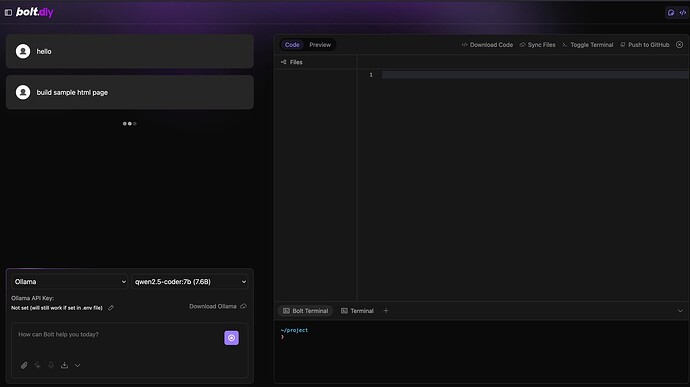

- Ollama Provider:

• It’s running and responding on http://localhost:11434 with a response time of ~76 ms.

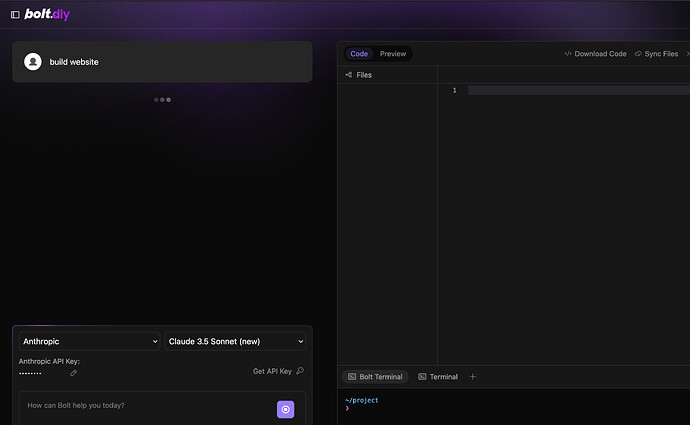

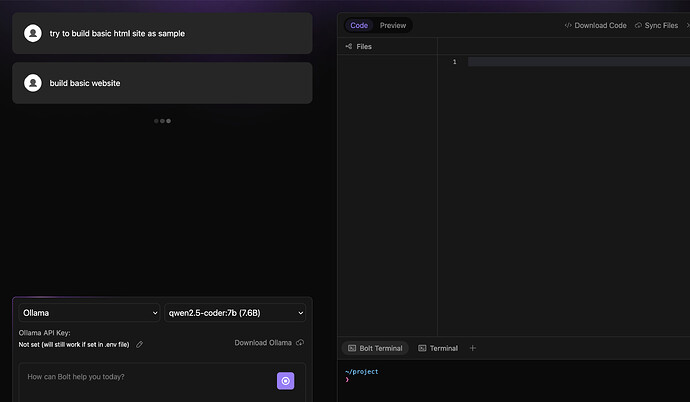

• I have selected Ollama in the dropdown as you mentioned.

- LMStudio and OpenAILike Providers:

• Both are not running, as indicated by running: false.

• Their response times and status indicate they are not actively serving requests.

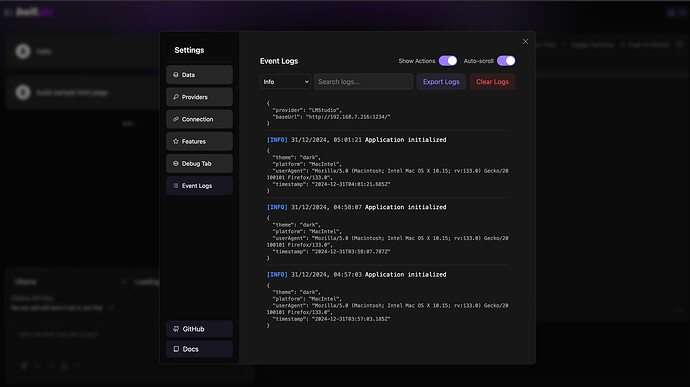

- Connection Issue:
  - The ECONNREFUSED error might not be caused by Bolt.diy itself. ECONNREFUSED means nothing accepted the TCP connection at the target host and port, so the cause could instead be a network connectivity problem with one of the services, or an improper fallback when LMStudio or OpenAILike is enabled but not running.
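To narrow down which endpoint actually refuses connections, one option is to probe each provider URL from the debug dump directly, outside Bolt.diy. Below is a minimal TypeScript sketch for Node.js, assuming the `Providers` array has been copied out of the dump. `enabledButDown`, `hostAndPort`, `probe` and `checkProviders` are hypothetical helpers written for this check; they are not part of Bolt.diy. The URL is trimmed defensively because the OpenAILike entry in the dump begins with a space.

```typescript
import * as net from "node:net";

// Shape of one entry in the "Providers" array of the debug dump
// (only the fields this sketch uses).
interface ProviderStatus {
  name: string;
  enabled: boolean;
  running: boolean;
  url: string;
}

// Providers that are enabled but not running: the ones an improper
// fallback could end up trying to reach.
function enabledButDown(providers: ProviderStatus[]): string[] {
  return providers.filter((p) => p.enabled && !p.running).map((p) => p.name);
}

// Turn a provider URL into host/port. The URL is trimmed first, IPv6
// brackets are stripped from the hostname, and the scheme's default port
// is used when the URL has no explicit port.
function hostAndPort(rawUrl: string): { host: string; port: number } {
  const u = new URL(rawUrl.trim());
  const port = u.port !== "" ? Number(u.port) : u.protocol === "https:" ? 443 : 80;
  return { host: u.hostname.replace(/^\[|\]$/g, ""), port };
}

// Open a plain TCP connection. Resolves to "open" on success, "TIMEOUT"
// if nothing answers in time, or the socket error code (e.g. "ECONNREFUSED").
function probe(host: string, port: number, timeoutMs = 2000): Promise<string> {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    socket.setTimeout(timeoutMs);
    socket.once("connect", () => {
      socket.destroy();
      resolve("open");
    });
    socket.once("timeout", () => {
      socket.destroy();
      resolve("TIMEOUT");
    });
    socket.once("error", (err: Error) => {
      socket.destroy();
      resolve((err as Error & { code?: string }).code ?? err.message);
    });
  });
}

// Probe every provider URL in turn and map provider name to result.
async function checkProviders(providers: ProviderStatus[]): Promise<Record<string, string>> {
  const results: Record<string, string> = {};
  for (const p of providers) {
    const { host, port } = hostAndPort(p.url);
    results[p.name] = await probe(host, port);
  }
  return results;
}
```

For example, `checkProviders(providers).then(console.log)` would be expected to report `open` for Ollama and `ECONNREFUSED` for any provider with nothing listening on its port. If Ollama itself came back as refused, that would point at connectivity to Ollama rather than at a fallback to LMStudio or OpenAILike.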