Hi @ColeMedin, I used your code for crawl_for_ai with a local llama3.2 model and all-minilm for embeddings. Both worked without issues, and the data is stored in Supabase pgvector. But when it comes to using the RAG through the Streamlit UI, it just gets stuck forever: nothing is returned and no error is shown.

That’s super cool you adapted my solution to use a local LLM and embedding model!

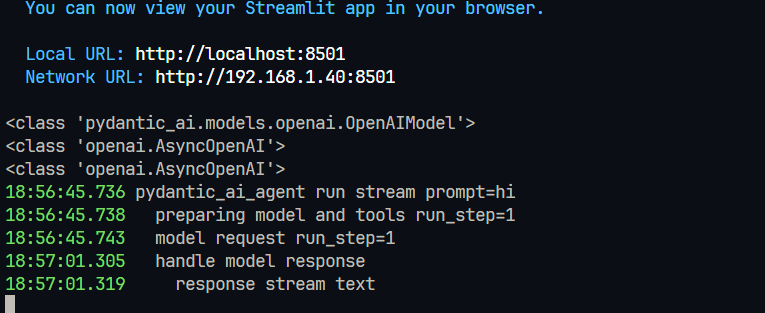

Sorry you are running into this issue, though… I would start by adding a print statement right after the LLM call to see whether it ever returns. Either it's stuck in the LLM call, or something in the code isn't right, so you're getting a response but it isn't being displayed (which makes it appear stuck).
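A minimal sketch of that debugging step, assuming the app awaits an async LLM call somewhere. `timed_llm_call` and `fake_llm` are hypothetical names, not part of the original code. The wrapper prints before and after the call and adds a timeout, so a hang becomes an explicit error instead of a silent wait.

```python
import asyncio
import time
from typing import Awaitable, TypeVar

T = TypeVar("T")


async def timed_llm_call(call: Awaitable[T], timeout_s: float = 120.0) -> T:
    """Await an LLM call, printing before and after so a hang is visible.

    `call` is whatever awaitable your LLM client/agent returns (assumption).
    Raises asyncio.TimeoutError if no response arrives within `timeout_s`.
    """
    print("[debug] sending request to LLM...", flush=True)
    start = time.perf_counter()
    try:
        result = await asyncio.wait_for(call, timeout=timeout_s)
    except asyncio.TimeoutError:
        print(f"[debug] no response after {timeout_s:.0f}s; the LLM call may be stuck", flush=True)
        raise
    elapsed = time.perf_counter() - start
    # If this line prints but the UI shows nothing, the problem is in the display code.
    print(f"[debug] LLM returned after {elapsed:.1f}s: {result!r}", flush=True)
    return result


async def fake_llm(prompt: str, delay: float) -> str:
    # Hypothetical stand-in for a real model call; sleeps to simulate latency.
    await asyncio.sleep(delay)
    return f"answer to: {prompt}"


if __name__ == "__main__":
    print(asyncio.run(timed_llm_call(fake_llm("hi", 0.1), timeout_s=1.0)))
```

In the real app you would pass the actual model call in place of `fake_llm(...)`. If the "returned" line never prints, the hang is in the LLM call itself rather than in the Streamlit rendering.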

Also, I would try a different LLM. The model could be getting stuck with all the extra context, especially if it is a smaller one.
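Another thing you could try alongside a different model is capping how much retrieved context goes into the prompt. Here is a rough sketch, assuming the retrieved chunks arrive as a list of strings ranked best-first. `trim_context` and the 6000-character budget are hypothetical, and characters are only a crude proxy for tokens.

```python
from typing import List


def trim_context(chunks: List[str], max_chars: int = 6000, separator: str = "\n\n---\n\n") -> str:
    """Join retrieved chunks in rank order, stopping before the budget is exceeded.

    `max_chars` is a hypothetical stand-in for the model's context limit.
    """
    kept: List[str] = []
    used = 0
    for chunk in chunks:
        # Count the separator only when it will actually be inserted.
        extra = len(chunk) + (len(separator) if kept else 0)
        if used + extra > max_chars:
            break  # keep the highest-ranked chunks and drop the rest
        kept.append(chunk)
        used += extra
    return separator.join(kept)


if __name__ == "__main__":
    docs = ["a" * 3000, "b" * 3000, "c" * 3000]
    print(len(trim_context(docs, max_chars=6100)))
```

If a small model answers once the context is trimmed but hangs with the full context, that points to the context size rather than the rest of the pipeline.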

As of now, I used the llama3.2 and all-minilm models when I ran the crawler, and I stored the embeddings in Supabase using those two models.

Then I tried using it in Streamlit. When I used OpenAI I was getting responses, but because my quota is limited, I got a quota-exhausted error.

So I switched back to llama3.2 and added the print statement you suggested, but I'm still getting no response for some reason. I only get the input + system_prompt.

I'm still trying things out. Also, just for info, I had to change the embedding dimension from 1536 to 384 to match the all-minilm embedding model.
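Since the dimension was changed, a quick guard before inserting or querying can catch mismatches early. The stored vectors and the query embedding used at retrieval time must come from the same model and have the same length as the pgvector column. This is a minimal sketch; `check_embedding` and `EXPECTED_DIM` are hypothetical names.

```python
from typing import List, Sequence

# all-minilm (all-MiniLM-L6-v2) produces 384-dimensional vectors, while OpenAI's
# text-embedding-3-small produces 1536. The vector column must match the model used.
EXPECTED_DIM = 384


def check_embedding(embedding: Sequence[float], expected_dim: int = EXPECTED_DIM) -> List[float]:
    """Return the embedding as a plain list, or raise if its length is wrong."""
    if len(embedding) != expected_dim:
        raise ValueError(
            f"embedding has {len(embedding)} dims, but the vector column expects {expected_dim}"
        )
    return [float(x) for x in embedding]
```

Calling this on both the chunk embeddings and the query embedding makes a leftover 1536-dimension path fail loudly instead of silently.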

Also, if you could test it yourself that would be very helpful, since you certainly have a lot of experience with this. Thank you!

Gotcha, it really does seem like an issue with the LLM, then. Have you tried a different local LLM? Especially a larger one, ideally at least 14B parameters.

I tried the smaller ones and I'm going to try a bigger one now. I guess it may be due to the smaller models' parameter limitations.