TL;DR

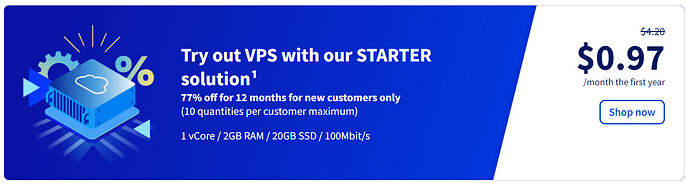

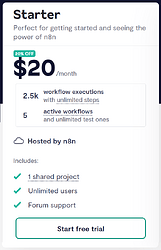

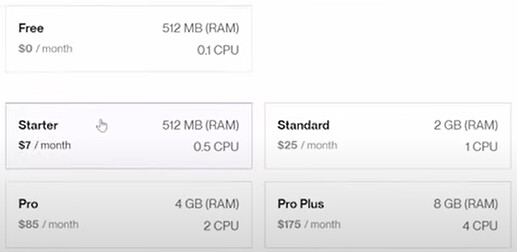

I wanted to host n8n in the cloud so that I wouldn’t have to spin it up locally, could easily manage my workflows across multiple devices, and could execute my workflows through n8n’s API on a schedule. But the free tier was a joke, and I didn’t want to spend $20/mo on what OVH offers for under $1 per month.

n8n charges $20/mo for its Starter plan (left), while Render offers a comparable plan starting at $25/mo (right):

| Component | Sizing | Supported |
|---|---|---|
| CPU/vCPU | Minimum 10 CPU cycles, scaling as needed | Any public or private cloud |
| Database | 512 MB - 4 GB SSD | SQLite or PostgreSQL |
| Memory | 320 MB - 2 GB | |

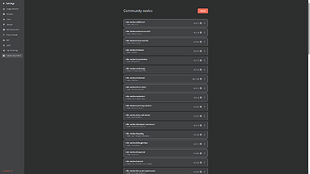

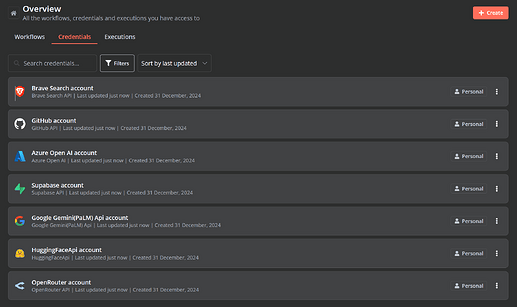

I got n8n running pretty easily on OVH through their KVM (I used PuTTY to connect over SSH). I did have to figure some things out on my own, though, since all the documentation I found recommends using Docker, which I did not use. Then I got busy installing all of the useful Community Nodes and various Integrations.
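Because most guides assume Docker, here is a minimal sketch of what a Docker-free install with PostgreSQL can look like. It assumes that Node.js and npm are already installed and that a local PostgreSQL database and user already exist. The `DB_*` and `N8N_PORT` names are n8n's documented configuration variables. The function names (`install_n8n`, `write_n8n_env`, `run_n8n`), the env-file path, and the database name, user, and password are hypothetical placeholders and not necessarily what I used.

```shell
#!/usr/bin/env bash
# Sketch: Docker-free n8n setup backed by PostgreSQL.
# Assumes Node.js + npm are installed and a local Postgres DB/user already exist.
# Function names, file paths, and credentials are hypothetical placeholders.

# Install n8n globally from npm (needs network access; may need sudo
# depending on how Node.js was installed). Not invoked automatically.
install_n8n() {
  npm install -g n8n
}

# Write an env file pointing n8n at a local PostgreSQL database.
# Usage: write_n8n_env <file> <db_name> <db_user> <db_password>
# Assumes the password contains no shell metacharacters such as $ or quotes.
write_n8n_env() {
  local file="$1" db_name="$2" db_user="$3" db_password="$4"
  cat > "$file" <<EOF
DB_TYPE=postgresdb
DB_POSTGRESDB_HOST=localhost
DB_POSTGRESDB_PORT=5432
DB_POSTGRESDB_DATABASE=${db_name}
DB_POSTGRESDB_USER=${db_user}
DB_POSTGRESDB_PASSWORD=${db_password}
N8N_PORT=5678
EOF
  # The file contains a password, so restrict it to the owner.
  chmod 600 "$file"
}

# Start n8n with every variable from the env file exported.
run_n8n() {
  local file="$1"
  set -a            # auto-export variables assigned while sourcing
  # shellcheck source=/dev/null
  . "$file"
  set +a
  n8n start
}
```

For example, you would run `write_n8n_env "$HOME/.n8n/n8n.env" n8n n8n_user 'change-me'` and then `run_n8n "$HOME/.n8n/n8n.env"`. Keeping the settings in a plain `KEY=VALUE` file also makes it easy to point a systemd service's `EnvironmentFile=` at the same file later.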

Then I set up my Credentials and started configuring everything the way I want it.

Now that I have n8n set up, I’m going to experiment with building a “Reasoning” workflow and getting Bolt.diy to use it like any other provider API endpoint. I have a really cool plan in the works, and I look forward to seeing how it turns out. But that’s a story for another time.

Now, with n8n, Postgres, and nginx up and running, here’s what I ended up with:

Hardware Specs

- Operating System: Debian 12
- CPU: 1 vCore @ 2.0 GHz (Intel Core, Haswell; QEMU machine type pc-i440fx-8.2)
- RAM: 2 GB
- Storage: 20 GB
  - Read: 376 MB/s
  - Write: 292 MB/s
- Network:
  - Download: 97.49 Mbit/s
  - Upload: 98.46 Mbit/s
  - Latency: 1.5 ms

Under normal load conditions, this is what I was seeing:

- CPU usage: < 1%
- RAM usage: < 35%
- Base n8n uses ~6 GB of storage
- n8n with 100+ Community Nodes installed uses ~11 GB

Pretty good for only $11.64 a year.
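Here is a quick sanity check on the price comparison. $11.64 a year works out to about $0.97 a month. n8n’s $20/mo Starter plan comes to $240 a year, and Render’s $25/mo starting price comes to $300 a year. The small awk-based sketch below does the conversions. The function names `per_month` and `per_year` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Convert a yearly price to a monthly price, rounded to cents.
per_month() {
  awk -v yearly="$1" 'BEGIN { printf "%.2f\n", yearly / 12 }'
}

# Convert a monthly price to a yearly price, rounded to cents.
per_year() {
  awk -v monthly="$1" 'BEGIN { printf "%.2f\n", monthly * 12 }'
}

per_month 11.64   # OVH VPS, yearly -> monthly: prints 0.97
per_year 20       # n8n Starter, monthly -> yearly: prints 240.00
per_year 25       # Render starting price, monthly -> yearly: prints 300.00
```

In other words, the VPS costs roughly one-twentieth of a year of n8n’s Starter plan.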

And I’m pretty happy with the setup, just a little disappointed that some n8n features are locked behind an Enterprise paywall. Not a deal breaker, though. I might check out Flowise and Langflow as well, although Langflow runs on Python under the hood, so I expect it to be more resource-intensive. On the other hand, Langflow lets you export a flow into Python code, which could be quite useful. I would prefer something that works with Cloudflare Pages and Workers, so I would probably look into compiling workflows into Node.js. But one thing at a time.

And while this is really only for my own personal development, if anyone would like a setup tutorial, let me know. Also, if anyone knows of other alternatives or “a better way”, I’d love to hear about it. I’m always looking to learn.

PS. Please scroll down for technical details if you’re interested.

Thanks!

> lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 40 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 1

NUMA:

NUMA node(s): 1

Vendor ID: GenuineIntel

BIOS Vendor ID: QEMU

Model name: Intel Core Processor (Haswell, no TSX)

BIOS Model name: pc-i440fx-8.2 CPU @ 2.0GHz

Virtualization features:

Virtualization: VT-x

Hypervisor vendor: KVM

Virtualization type: full

> du -h --max-depth=0

11G

> lspci | grep -i vga

00:02.0 VGA compatible controller: Cirrus Logic GD 5446

> speedtest-cli

Retrieving speedtest.net configuration...

Download: 96.05 Mbit/s

Upload: 98.64 Mbit/s

> free -h

total used free shared buff/cache available

Mem: 1.9Gi 652Mi 161Mi 26Mi 1.3Gi 1.2Gi

Swap: 0B 0B 0B
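The RAM usage figure from earlier can be computed from this output as `used / total`. Here, 652Mi used out of 1.9Gi is roughly a third, which is consistent with the < 35% reading. Below is a minimal sketch that does the division. It assumes the standard procps `free -m` layout, where the `Mem:` row holds total in column 2 and used in column 3. The function name `ram_usage_percent` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print RAM usage as a percentage (used / total * 100), one decimal place.
# Reads `free -m` style output on stdin; `free -h` won't work here because
# values like "1.9Gi" carry unit suffixes that awk can't divide directly.
ram_usage_percent() {
  awk '/^Mem:/ { printf "%.1f\n", $3 / $2 * 100 }'
}

# Typical use on the server:
#   free -m | ram_usage_percent
```

Note that `used` excludes `buff/cache`. Linux reclaims that memory on demand, which is why `available` (1.2Gi here) is much larger than `free` (161Mi).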