Has anyone else used this with a local setup? For my pgAdmin container I use supabase-db:5432, but that strangled Archon.

I sorted it out, and it’s another great reason to have Portainer in your Docker stack for local-ai. Since the script starts the whole Archon container, I added a network to the cmd that starts the container so it lands on the same network as the rest of the stack. Then, in Portainer, take the IP address for supabase-db and it connects fine with your SERVICE_ROLE_KEY from the supabase .env.

Should be something like

which is the internal docker address which again is super easy to sort out from Portainer

    print("\n=== Starting Archon container ===")

    cmd = [
        "docker", "run", "-d",
        "--name", "archon-container",
        "--network", "localai_default",  # force Archon onto the localai_default network
        "--gpus", "all",  # pass GPU access
        # Flag and value are separate items (assuming cmd is passed to subprocess as a list, with no shell)
        "-e", "NVIDIA_VISIBLE_DEVICES=all",
        "-e", "NVIDIA_DRIVER_CAPABILITIES=compute,utility",
        "-e", "NVIDIA_REQUIRE_CUDA=cuda>=12.8",  # require CUDA 12.8 or newer
        "-p", "8501:8501",
        "-p", "8100:8100",
        "--add-host", "host.docker.internal:host-gateway"
    ]
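If you want the script itself to report the address instead of looking it up in Portainer, here is a rough sketch that reads it from `docker inspect` output. The helper name `container_ip` and the sample address are made up for illustration; the `NetworkSettings.Networks.<name>.IPAddress` layout is what `docker inspect` prints for a container.

```python
import json


def container_ip(inspect_output: str, network: str) -> str:
    """Return the container's IP on `network`, given the JSON printed by `docker inspect`."""
    containers = json.loads(inspect_output)  # docker inspect prints a JSON array
    networks = containers[0]["NetworkSettings"]["Networks"]
    if network not in networks:
        raise KeyError(f"container is not attached to {network!r}; found {sorted(networks)}")
    return networks[network]["IPAddress"]


# Illustrative sample trimmed to the fields used above (the address is invented).
sample = json.dumps([{
    "Name": "/supabase-db",
    "NetworkSettings": {"Networks": {"localai_default": {"IPAddress": "172.18.0.5"}}},
}])

print(container_ip(sample, "localai_default"))
```

For a live lookup you would feed it `subprocess.run(["docker", "inspect", "supabase-db"], capture_output=True, text=True, check=True).stdout`. Container IPs can change when a container is recreated, so once Archon is attached to localai_default it may be worth trying the container name (supabase-db) as the host again, since Docker resolves names on user-defined networks.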

Can we add a DEFAULT_DB=supabase?

POSTGRES_DB=supabase

I’m actually new to Portainer! Could you help me understand how it helps here?

OK… you have all the stacks, containers, images, networks, volumes and logs in one place. You can super easily start, pause (to look in another stack for continuing errors… f$%&king supabase), resume, restart and remove containers, and it highlights orphans and unused volumes. All the local container IPs are right there, as well as the networks they are connected to. That helped me when starting Archon from a script, which puts it on a bridge network rather than the localai_default network I set up for your initial local-ai-packaged stack. I did that to make sure the local-ai stack and your supabase script all land together with no conflicts, but anything that lands on another network can be re-assigned and pointed to the one you want. I have 22 containers in total, and while Docker Desktop has similar features, they are not quite as accessible, and the network re-assignment and IP address resolution is awesome.

    portainer:
      image: portainer/portainer-ce
      container_name: portainer
      restart: unless-stopped
      networks:
        - localai_default  # Ensure it is in the correct network
      ports:
        - "9443:9443"  # Web UI (HTTPS)
        - "8000:8000"  # Edge agent
      volumes:
        - /var/run/docker.sock:/var/run/docker.sock  # Allows Portainer to manage Docker
        - portainer_data:/data  # Persistent storage


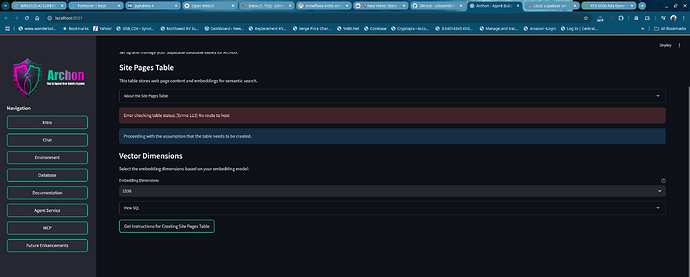

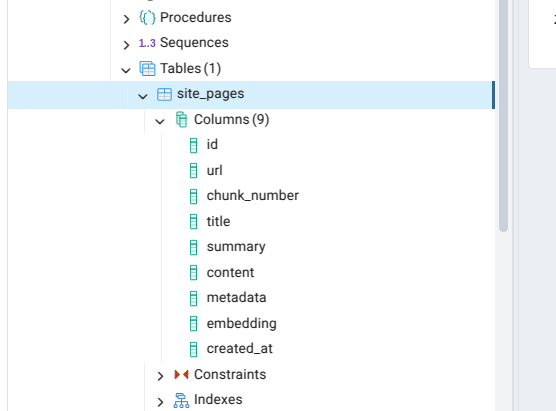

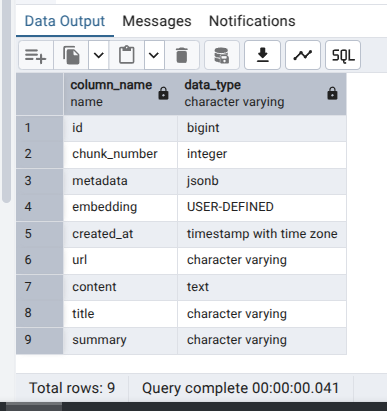

Here’s where Portainer is very handy… I’ve been debugging this connection to supabase and of course I had the wrong host. This needs to connect to supabase-kong:8001, and then I start getting activity. I can just look at the container list and see that .08 is my Archon and .03 is my supabase-vector. The problem, of course: 200 to vector and 401 to Archon… progress anyway.

Glad you’re making progress! Sure seems like I need to look into using Portainer.

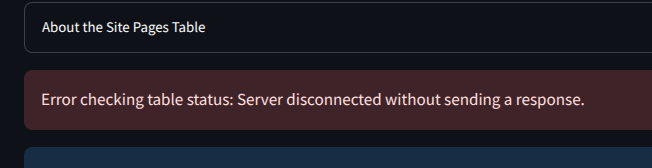

Authentication is absolutely killing me right now…

I have it connecting, but I cannot for the life of me figure out what the heck this thing is looking for. I have worn out the supabase key generator and it just keeps telling me "Error checking table status: {'message': 'Invalid authentication credentials'}". The table, the schema and all the columns are created, and I even disabled RLS, and I still can’t link up. Any thoughts would be greatly appreciated.

172.18.0.24 is my Archon container so it's tickling it...

    {
      "appname": "supabase-kong",
      "event_message": "172.18.0.24 - - [10/Mar/2025:23:25:20 +0000] \"GET /rest/v1/site_pages?select=id&limit=1 HTTP/1.1\" 401 52 \"-\" \"python-httpx/0.27.2\"",
      "id": "c08de93b-f946-4d7b-8b4e-36088d9777fc",
      "metadata": {
        "request": {
          "headers": {
            "cf_connecting_ip": "172.18.0.24",
            "user_agent": "python-httpx/0.27.2"
          },
          "method": "GET",
          "path": "/rest/v1/site_pages?select=id&limit=1",
          "protocol": "HTTP/1.1"
        },
        "response": {
          "status_code": 401
        }
      },
      "project": "default",
      "timestamp": 1741649120000000
    }
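One thing that might narrow down a 401 like the one above: self-hosted Supabase keys are JWTs signed with the JWT_SECRET from the supabase .env, so you can check offline whether a generated key carries the `service_role` role and was signed with the secret your stack is using. This is only a sketch under that assumption; `check_key` and `make_demo_token` are names I made up, and the demo token stands in for your real key. As far as I understand it, Kong also compares the `apikey` it receives against the exact SERVICE_ROLE_KEY it was started with, so a freshly generated key has to be in the supabase .env as well, not only in Archon.

```python
import base64
import hashlib
import hmac
import json


def _b64url_decode(segment: str) -> bytes:
    # JWT segments drop base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def check_key(token: str, jwt_secret: str):
    """Return (claims, signature_ok) for an HS256-signed JWT."""
    header_b64, payload_b64, signature_b64 = token.split(".")
    signed_part = f"{header_b64}.{payload_b64}".encode()
    expected = hmac.new(jwt_secret.encode(), signed_part, hashlib.sha256).digest()
    signature_ok = hmac.compare_digest(expected, _b64url_decode(signature_b64))
    claims = json.loads(_b64url_decode(payload_b64))
    return claims, signature_ok


def make_demo_token(claims: dict, jwt_secret: str) -> str:
    """Build a throwaway HS256 token so the example runs without real credentials."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    body = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(jwt_secret.encode(), f"{header}.{body}".encode(), hashlib.sha256).digest()
    return f"{header}.{body}.{_b64url_encode(signature)}"


# Demo values only; substitute your own SERVICE_ROLE_KEY and JWT_SECRET.
demo_key = make_demo_token({"role": "service_role", "iss": "supabase-demo"}, "demo-secret")
claims, ok = check_key(demo_key, "demo-secret")
print(claims["role"], ok)
```

If `signature_ok` comes back False for your real key, the key and the JWT_SECRET in the running stack do not belong together, which would explain credentials being rejected no matter how the table and RLS are set up.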

Hard to say without looking into your setup and config more… but what I will say is that I’m planning on hooking Archon into the local AI stack soon so I’ll let you know if I run into any issues myself and I’ll also be putting out a guide once I get it set up!

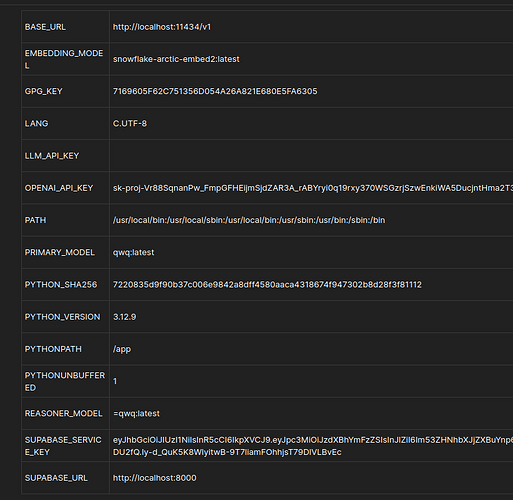

I found the issue but for whatever reason can’t flush it… Again, in Portainer I can see the environment variables plain as day, and what I have loaded in rest is:

    PGRST_DB_URI=postgres://authenticator:2020@supabase-db:5432/postgres

which originates in compose:

    PGRST_DB_URI: postgres://authenticator:${POSTGRES_PASSWORD}@${POSTGRES_HOST}:${POSTGRES_PORT}/${POSTGRES_DB}

and is fed by .env:

    POSTGRES_DB=_supabase

How does PGRST_DB_URI end up as xxxxx/postgres when POSTGRES_DB is defined as _supabase?
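To make the mismatch concrete, here is a small sketch that renders the compose line from a set of .env values and compares the database name with what the running container reports. It uses Python's `string.Template`, which only approximates Compose interpolation (plain `${VAR}` works, defaults like `${VAR:-x}` do not). The password and the `render` / `database_name` helpers are placeholders of mine.

```python
from string import Template

COMPOSE_VALUE = (
    "postgres://authenticator:${POSTGRES_PASSWORD}@${POSTGRES_HOST}:"
    "${POSTGRES_PORT}/${POSTGRES_DB}"
)


def render(template: str, env: dict) -> str:
    """Substitute ${VAR} placeholders the way the compose line would be filled in."""
    return Template(template).substitute(env)


def database_name(uri: str) -> str:
    """Return the part of the URI after the last slash."""
    return uri.rsplit("/", 1)[-1]


# Placeholder values standing in for the current .env file.
env_file = {
    "POSTGRES_PASSWORD": "example-password",
    "POSTGRES_HOST": "supabase-db",
    "POSTGRES_PORT": "5432",
    "POSTGRES_DB": "_supabase",
}

expected = render(COMPOSE_VALUE, env_file)
running = "postgres://authenticator:example-password@supabase-db:5432/postgres"

print("from .env:     ", database_name(expected))
print("in container:  ", database_name(running))
```

When the two differ, the container was most likely created with other values than the ones currently in .env. Two things worth ruling out: environment variables are fixed when a container is created, so edits to .env only show up after the service is recreated, and a POSTGRES_DB already exported in the shell takes precedence over the .env file when Compose interpolates.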

Sorry could you clarify what exactly is wrong here? Are you saying you expect PGRST_DB_URI to be something different?

No matter what I have tried, I cannot get connected to the database. It may have something to do with the environment variables not saving my Service Role Key. I paste and save, then check the Database page, and it reports credential errors. When I go back to Environment, the key is no longer there, even if I toggle it visible. Below is the kong error for the request.

    INFO:httpx:HTTP Request: GET http://kong:8000/rest/v1/site_pages?select=id&limit=1 "HTTP/1.1 401 Unauthorized"
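To take Archon out of the picture, the same request can be replayed by hand. The sketch below builds the URL from the log line and sends the key in both the `apikey` and `Authorization: Bearer` headers, which is how Supabase client libraries normally present it. The function names are mine, and the key shown is a placeholder; nothing is sent until you call `send` yourself.

```python
import urllib.error
import urllib.request


def rest_check_request(base_url: str, service_key: str, table: str = "site_pages"):
    """Build the URL and headers for the same table check seen in the log."""
    key = service_key.strip()  # pasted keys sometimes carry stray whitespace or newlines
    url = f"{base_url.rstrip('/')}/rest/v1/{table}?select=id&limit=1"
    headers = {"apikey": key, "Authorization": f"Bearer {key}"}
    return url, headers


def send(url: str, headers: dict) -> int:
    """Send the GET request and return the HTTP status code."""
    request = urllib.request.Request(url, headers=headers)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 401 here would reproduce the error from the log


# Placeholder key; the base URL is the one from the log line above.
url, headers = rest_check_request("http://kong:8000/", "  paste-service-role-key-here \n")
print(url)
```

Running `send(url, headers)` from inside a container on the same network with the real key tells you whether the key itself is rejected or whether Archon is sending something different from what was saved.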

One other thing: any reason I should also be getting JavaScript errors in searxng? It worked for a brief time, then I switched to dark mode in settings and hit save. It threw an https error even though the base URL is not secure, and now it shows an internal server error and limiter.toml errors even though the config is limiter: false. I even added a line to the compose file, but it is still hunting for an imaginary file.

TypeError: schema of /etc/searxng/limiter.toml is invalid!

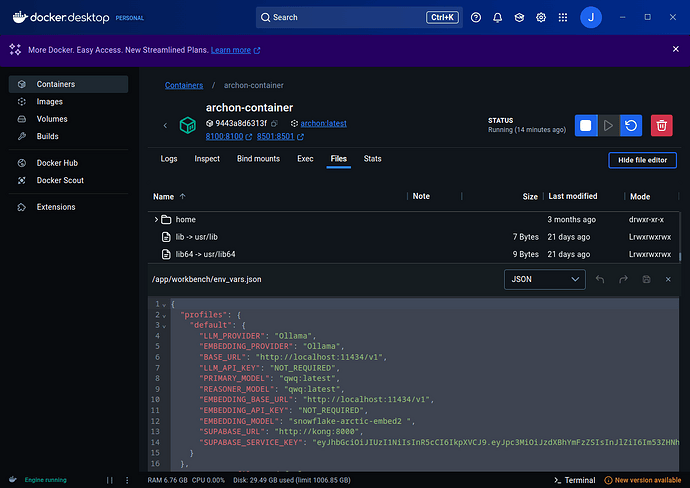

I did update Archon to the latest, as well as local-ai.

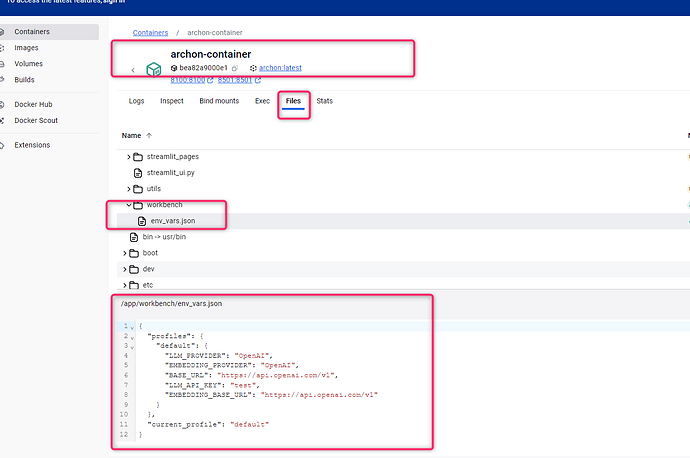

@johnmag2020 Can you please check the file where the env vars are stored and see what’s in there:

You can also try editing it manually: put everything in and reload your page to see if it then works.
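A quick way to see what actually got saved is to search the JSON for the key by name. I am not assuming any particular layout for the file here, so the sketch walks the whole structure; the variable name `SUPABASE_SERVICE_KEY`, the sample layout and the `find_key` helper are illustrative guesses, so adjust them to whatever your file contains.

```python
import json
from pathlib import Path


def find_key(data, name, path=""):
    """Yield (json_path, value) for every occurrence of `name` in nested JSON data."""
    if isinstance(data, dict):
        for key, value in data.items():
            here = f"{path}/{key}"
            if key == name:
                yield here, value
            yield from find_key(value, name, here)
    elif isinstance(data, list):
        for index, item in enumerate(data):
            yield from find_key(item, name, f"{path}/{index}")


def report(file_path: str, name: str) -> None:
    """Print where `name` is stored and whether it has a non-empty value."""
    data = json.loads(Path(file_path).read_text(encoding="utf-8"))
    hits = list(find_key(data, name))
    if not hits:
        print(f"{name} is not present in {file_path}")
    for location, value in hits:
        state = "set" if value else "EMPTY"
        print(f"{location}: {state}")


# Hypothetical file contents, only to show the output shape.
sample = {"profiles": {"default": {"SUPABASE_SERVICE_KEY": ""}}}
print(list(find_key(sample, "SUPABASE_SERVICE_KEY")))
```

Calling `report("workbench/env_vars.json", "SUPABASE_SERVICE_KEY")` after saving in the UI shows whether the key reached the file at all, which separates a save problem from a credentials problem.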

The thing is I can see the data being passed in is correct but it just won’t connect.

Correct me if I’m wrong but that is the profile loaded in the container and that service key works in other stacks…just perplexed.

I don’t understand what that screenshot is. As far as I know, the only relevant thing is what is in workbench/env_vars.json. So you should check that and try what I mentioned above.

I don’t know what else to say or do… that is the information I have and use.

Btw, that image is the same info you would get from the Docker CLI, just shown in Portainer… in Portainer you just go to advanced mode and it lists all the various information, including all the env:

You just don’t have to fish through a file manager. Quite efficient, I think… I always have some gripe with Docker Desktop on Linux but use it regularly on Windows. Try Portainer sometime… I think it’s worth it just for the one-click recreate that does the stop, rm and restart when debugging and housecleaning.