Ubuntu 20.x LTS, self-hosted dedicated server, 8 cores, 32 GB RAM, script `start_services.py` (latest)

Hi!

I'm trying to run Cole's local-ai-packaged on a dedicated server.

Can someone help with this?

Are health checks like these valid when the server uses a real domain name?

```yaml
    depends_on:
      analytics:
        condition: service_healthy
    environment:
      STUDIO_PG_META_URL: http://meta:8080
      POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}
      DEFAULT_ORGANIZATION_NAME: ${STUDIO_DEFAULT_ORGANIZATION}
      DEFAULT_PROJECT_NAME: ${STUDIO_DEFAULT_PROJECT}
      OPENAI_API_KEY: ${OPENAI_API_KEY:-}
      SUPABASE_URL: http://kong:8000
      SUPABASE_PUBLIC_URL: ${SUPABASE_PUBLIC_URL}
      SUPABASE_ANON_KEY: ${ANON_KEY}
      SUPABASE_SERVICE_KEY: ${SERVICE_ROLE_KEY}
      AUTH_JWT_SECRET: ${JWT_SECRET}
      [...]

  auth:
    container_name: supabase-auth
    image: supabase/gotrue:v2.170.0
    restart: unless-stopped
    healthcheck:
      test:
        [
          "CMD",
          "wget",
          "--no-verbose",
          "--tries=1",
          "--spider",
          "http://localhost:9999/health"
        ]
      timeout: 5s
      interval: 5s
      retries: 3
    [...]
```
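For reference, here is a rough Python equivalent of the `auth` healthcheck above. It is a sketch under stated assumptions, not what Docker or wget actually run: `healthcheck_exit_code` is a hypothetical name, it sends a single HEAD request to approximate `wget --spider --tries=1`, and it collapses wget's various failure codes into 1. Docker executes a `CMD` healthcheck inside the container, so `localhost:9999` refers to that container itself, not to the server's public domain.

```python
import http.client
import urllib.request


def healthcheck_exit_code(url: str, timeout: float = 5.0) -> int:
    """Hypothetical stand-in for `wget --no-verbose --tries=1 --spider URL`.

    Sends one HEAD request and returns 0 if the server answers with a
    non-error status, 1 otherwise. Real wget uses several distinct non-zero
    exit codes; Docker only checks whether the code is 0.
    """
    # Empty ProxyHandler: ignore http_proxy/https_proxy environment variables
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    request = urllib.request.Request(url, method="HEAD")
    try:
        # open() raises HTTPError (an OSError subclass) for 4xx/5xx responses
        with opener.open(request, timeout=timeout):
            return 0
    except (OSError, http.client.HTTPException):
        # Refused connections, DNS failures, timeouts and HTTP error statuses
        return 1


if __name__ == "__main__":
    # Only meaningful where something listens on localhost:9999,
    # i.e. inside the supabase-auth container's network namespace.
    print(healthcheck_exit_code("http://localhost:9999/health"))
```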

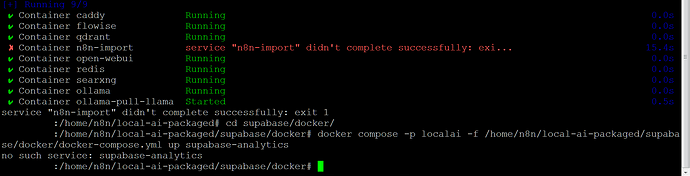

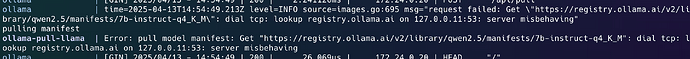

Steps to Reproduce

Trigger: `python3 start_services.py --profile cpu`

Output

```
Starting Supabase services...
Running: docker compose -p localai -f supabase/docker/docker-compose.yml up -d
[+] Running 14/14
 ✔ Network localai_default Created 0.1s
 ✔ Container supabase-imgproxy Started 0.8s
 ✔ Container supabase-vector Healthy 6.3s
 ✔ Container supabase-db Healthy 13.1s
 ✘ Container supabase-analytics Error 125.3s
 ✔ Container supabase-rest Created 0.1s
 ✔ Container supabase-meta Created 0.1s
 ✔ Container supabase-kong Created 0.1s
 ✔ Container realtime-dev.supabase-realtime Created 0.1s
 ✔ Container supabase-edge-functions Created 0.1s
 ✔ Container supabase-studio Created 0.1s
 ✔ Container supabase-auth Created 0.1s
 ✔ Container supabase-pooler Created 0.1s
 ✔ Container supabase-storage Created 0.0s
dependency failed to start: container supabase-analytics is unhealthy
Traceback (most recent call last):
  File "start_services.py", line 242, in <module>
    main()
  File "start_services.py", line 232, in main
    start_supabase()
  File "start_services.py", line 63, in start_supabase
    run_command([
  File "start_services.py", line 21, in run_command
    subprocess.run(cmd, cwd=cwd, check=True)
  File "/usr/lib/python3.8/subprocess.py", line 516, in run
    raise CalledProcessError(retcode, process.args,
subprocess.CalledProcessError: Command '['docker', 'compose', '-p', 'localai', '-f', 'supabase/docker/docker-compose.yml', 'up', '-d']' returned non-zero exit status 1.
```
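The output only says that `supabase-analytics` is unhealthy. Docker records the results of a container's most recent healthcheck runs, which `docker inspect --format '{{json .State.Health}}' <container>` prints as JSON (`Status`, `FailingStreak`, and a `Log` list with `ExitCode` and `Output` per run). The helper below is a hypothetical sketch for pulling that out from Python (3.7+ for `capture_output`); `read_health` and `summarize_health` are illustrative names, and running the shell command directly gives the same data.

```python
import json
import subprocess
from typing import Any, Dict


def read_health(container: str) -> Dict[str, Any]:
    """Return the `.State.Health` object Docker records for a container."""
    result = subprocess.run(
        ["docker", "inspect", "--format", "{{json .State.Health}}", container],
        capture_output=True,
        text=True,
        check=True,
    )
    # Containers without a healthcheck print "null", which loads as None
    return json.loads(result.stdout) or {}


def summarize_health(health: Dict[str, Any], last: int = 3) -> str:
    """Format the status plus exit code and output of the latest probe runs."""
    lines = [f"status={health.get('Status')} failing_streak={health.get('FailingStreak')}"]
    for entry in (health.get("Log") or [])[-last:]:
        output = (entry.get("Output") or "").strip()
        lines.append(f"exit={entry.get('ExitCode')} output={output}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Assumes the docker CLI is on PATH and the container still exists
    print(summarize_health(read_health("supabase-analytics")))
```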