Hello, I plan to migrate from DigitalOcean to self-hosting on a local server. My intent is to use the Local AI package from Cole. Having read the installation guide, I feel confident about the steps. The only question I have relates to setting up the user account on n8n and creating credentials for services.

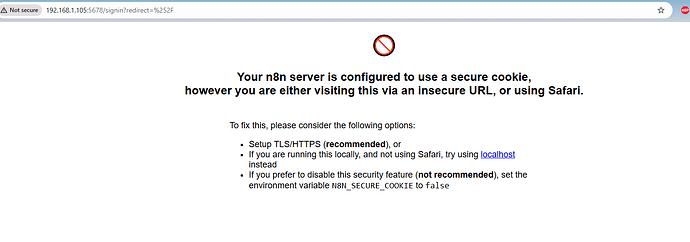

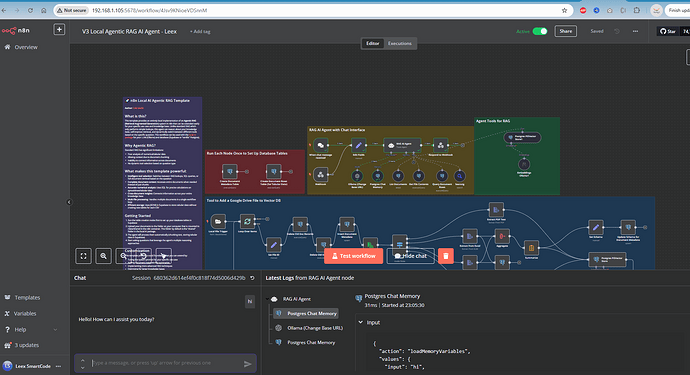

I assume that, rather than accessing n8n on the local machine (e.g. http://localhost:5678/), I can instead connect from a browser on another computer on the network (assuming the ports are open), for example via http://192.168.10.144:5678/. Does anyone anticipate any issues with this? Thanks in advance!
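Before opening the browser, a quick way to confirm that the port is actually reachable from the other computer is a plain TCP connection test. The following is a minimal sketch, not part of the Local AI package: the helper name `can_reach` is hypothetical, and the address and port are simply the ones from the example above.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only shows that something is listening and that no firewall
    blocks the port. It does not prove that the listener is n8n.
    """
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Run from the second computer, `can_reach("192.168.10.144", 5678)` returning `False` would point to a firewall rule or a port that Docker has not published on the LAN interface, rather than to n8n itself.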

Hi,

It seems to work; I just tested it quickly.

You just need HTTPS, or you have to disable secure cookies:
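To illustrate when the secure-cookie issue is likely to apply, here is a small Python sketch. It rests on two assumptions that should be checked against the n8n and browser documentation: that n8n marks its session cookie as `Secure` by default (controlled, as far as I know, by the `N8N_SECURE_COOKIE` environment variable), and that most browsers still accept such cookies over plain HTTP on loopback addresses (Safari is a known exception). The function name is hypothetical.

```python
from urllib.parse import urlparse

# Hosts that most browsers treat as trustworthy even over plain HTTP (assumption).
LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1"}


def secure_cookie_blocks_login(url: str, secure_cookie: bool = True) -> bool:
    """Guess whether a Secure-flagged session cookie would be rejected for this URL."""
    if not secure_cookie:
        # Secure flag disabled: the cookie is sent over HTTP as well.
        return False
    parts = urlparse(url)
    if parts.scheme == "https":
        # HTTPS satisfies the Secure flag.
        return False
    # Plain HTTP: only loopback hosts are likely to work.
    return parts.hostname not in LOOPBACK_HOSTS
```

Under these assumptions, `http://localhost:5678/` works out of the box, while `http://192.168.10.144:5678/` needs either HTTPS in front of n8n or the secure cookie turned off.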

Excellent, thanks for testing and responding so quickly!

Hi. I’m also thinking about deploying an instance to DigitalOcean. Could you please share your specs?

Thank you

Wolf

Hi @nic0711, are you asking about the specs for my DigitalOcean droplet? If so, I’m using the smallest option: 1 GB Memory / 25 GB Disk / NYC3, Docker on Ubuntu 22.04.

I’m migrating to a local machine that I’ll be setting up as a headless server. Let me know if you’re asking for details on this or something else.

Hi @dkderek!

Thanks for your reply.

Yes, that was my question.

I plan to install the Local AI package from Cole in the cloud, and I still have a VPS with 4 GB RAM “lying around”. However, I’m not sure that’s enough for the whole package, including Ollama, OpenWebUI, Supabase, etc.

Hey Nico, I don’t think that will be sufficient for the full local AI stack. While I don’t know your actual use cases, at minimum you will likely need 4 CPU cores, 16 GB RAM, and 100 GB of storage (SSD if you can). More likely, you’ll need more of all three. You can generally find good guidance on this online or by chatting with Claude or another LLM.
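The comparison against these rough minimums can be written down as a short Python sketch. The figures are only the ones suggested above (4 cores, 16 GB RAM, 100 GB storage), not official requirements, and the `Specs` class and `shortfalls` function are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Specs:
    cpu_cores: int
    ram_gb: int
    disk_gb: int


# Rough minimum from this thread; an informal suggestion, not an official figure.
SUGGESTED_MINIMUM = Specs(cpu_cores=4, ram_gb=16, disk_gb=100)


def shortfalls(machine: Specs, minimum: Specs = SUGGESTED_MINIMUM) -> list[str]:
    """List every resource where the machine falls below the minimum."""
    gaps = []
    for name in ("cpu_cores", "ram_gb", "disk_gb"):
        have, need = getattr(machine, name), getattr(minimum, name)
        if have < need:
            gaps.append(f"{name}: have {have}, need at least {need}")
    return gaps
```

For a machine with 4 GB RAM, the returned list contains at least the `ram_gb` entry; an empty list means the machine meets the suggested floor, which still says nothing about whether it is enough for larger Ollama models.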

Hi @dkderek

That’s what I thought. I’ll switch to 16 GB and 10 cores.

Thank you
