```
at async /app/node_modules/.pnpm/@remix-run+dev@2.10.0_@remix-run+react@2.10.2_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_qwyxqdhnwp3srgtibfrlais3ge/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {
2024-11-12 12:57:45 bolt-ai-dev-1 | cause: Error: connect ECONNREFUSED 127.0.0.1:1234
2024-11-12 12:57:45 bolt-ai-dev-1 | at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)
2024-11-12 12:57:45 bolt-ai-dev-1 | at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {
2024-11-12 12:57:45 bolt-ai-dev-1 | errno: -111,
2024-11-12 12:57:45 bolt-ai-dev-1 | code: 'ECONNREFUSED',
2024-11-12 12:57:45 bolt-ai-dev-1 | syscall: 'connect',
2024-11-12 12:57:45 bolt-ai-dev-1 | address: '127.0.0.1',
2024-11-12 12:57:45 bolt-ai-dev-1 | port: 1234
2024-11-12 12:57:45 bolt-ai-dev-1 | },
2024-11-12 12:57:45 bolt-ai-dev-1 | url: 'http://localhost:1234/v1/chat/completions',
2024-11-12 12:57:45 bolt-ai-dev-1 | requestBodyValues: {
2024-11-12 12:57:45 bolt-ai-dev-1 | model: 'claude-3-5-sonnet-latest',
2024-11-12 12:57:45 bolt-ai-dev-1 | logit_bias: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | logprobs: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | top_logprobs: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | user: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | parallel_tool_calls: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | max_tokens: 8000,
2024-11-12 12:57:45 bolt-ai-dev-1 | temperature: 0,
2024-11-12 12:57:45 bolt-ai-dev-1 | top_p: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | frequency_penalty: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | presence_penalty: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | stop: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | seed: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | max_completion_tokens: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | store: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | metadata: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | response_format: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | messages: [Array],
2024-11-12 12:57:45 bolt-ai-dev-1 | tools: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | tool_choice: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | stream: true,
2024-11-12 12:57:45 bolt-ai-dev-1 | stream_options: undefined
2024-11-12 12:57:45 bolt-ai-dev-1 | },
2024-11-12 12:57:45 bolt-ai-dev-1 | statusCode: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | responseHeaders: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | responseBody: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | isRetryable: true,
2024-11-12 12:57:45 bolt-ai-dev-1 | data: undefined,
2024-11-12 12:57:45 bolt-ai-dev-1 | [Symbol(vercel.ai.error)]: true,
2024-11-12 12:57:45 bolt-ai-dev-1 | [Symbol(vercel.ai.error.AI_APICallError)]: true
2024-11-12 12:57:45 bolt-ai-dev-1 | },
2024-11-12 12:57:45 bolt-ai-dev-1 | [Symbol(vercel.ai.error)]: true,
2024-11-12 12:57:45 bolt-ai-dev-1 | [Symbol(vercel.ai.error.AI_RetryError)]: true
2024-11-12 12:57:45 bolt-ai-dev-1 | }
```
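The log shows what the oTToDev server sent when it failed: a POST to `http://localhost:1234/v1/chat/completions` with `stream: true`, `max_tokens: 8000` and `temperature: 0`. A rough way to replay that request outside oTToDev is sketched below, assuming Node.js 18+ (global `fetch`). `buildChatRequest` and `replayChat` are hypothetical helpers, not oTToDev code, and the message is a placeholder because the log elides `messages`. Also note that `model` is `claude-3-5-sonnet-latest`, which is not an LM Studio model name; that may be related to the provider/model selection issue mentioned later in this thread.

```typescript
// Hypothetical helper: rebuilds the OpenAI-compatible request seen in the log.
// Assumes Node.js 18+ (global fetch); this is not oTToDev's actual code.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

function buildChatRequest(
  baseUrl: string,
  model: string,
  messages: ChatMessage[],
): { url: string; init: { method: string; headers: Record<string, string>; body: string } } {
  // Drop trailing slashes so ".../v1/" and ".../v1" produce the same URL.
  const url = `${baseUrl.replace(/\/+$/, "")}/chat/completions`;
  const body = {
    model,
    messages,
    max_tokens: 8000, // same values as requestBodyValues in the log
    temperature: 0,
    stream: true,
  };
  return {
    url,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(body),
    },
  };
}

// Sends the request and prints the raw streamed chunks (server-sent events).
async function replayChat(baseUrl: string, model: string): Promise<void> {
  const { url, init } = buildChatRequest(baseUrl, model, [
    { role: "user", content: "Say hello" }, // placeholder; the log elides messages
  ]);
  const res = await fetch(url, init); // rejects (cause ECONNREFUSED) if nothing listens
  if (!res.ok || !res.body) {
    throw new Error(`HTTP ${res.status}: ${await res.text()}`);
  }
  const decoder = new TextDecoder();
  const reader = res.body.getReader();
  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    process.stdout.write(decoder.decode(value, { stream: true }));
  }
}
```

For example, `replayChat("http://127.0.0.1:1234/v1", "<a model ID LM Studio lists>")` either streams chunks back or fails with the same connection error as in the log, which separates network problems from oTToDev's own configuration.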

You’re not able to connect to your LM Studio instance at 127.0.0.1:1234/v1. If you’re using Docker for the oTToDev instance and running LM Studio locally, you need to use host.docker.internal:1234/v1 instead.

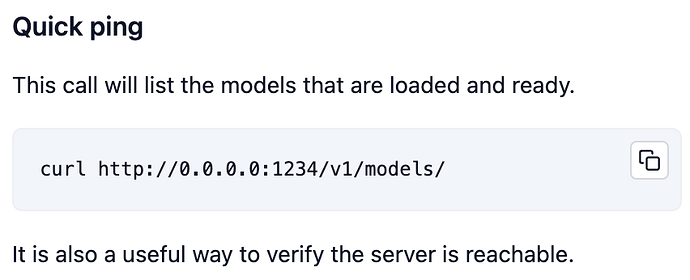

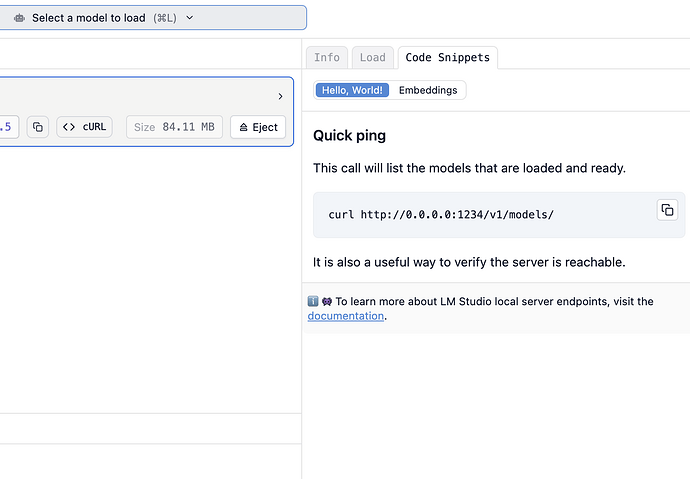

If that’s not the issue, please make sure you can run one of the test cURL calls from within LM Studio, e.g. retrieving the list of models.
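The reason for `host.docker.internal`: inside a container, `127.0.0.1` and `localhost` point at the container itself, not at the host machine where LM Studio runs. A minimal sketch of choosing the base URL accordingly follows; `pickLmStudioBaseUrl` is a hypothetical helper, and checking for `/.dockerenv` is a common heuristic rather than a guaranteed signal.

```typescript
import { existsSync } from "node:fs";

// Heuristic (assumption): Docker usually creates /.dockerenv inside containers.
// Other runtimes may not, so a false result only means "probably not in Docker".
function probablyInDocker(): boolean {
  return existsSync("/.dockerenv");
}

// Hypothetical helper: chooses the OpenAI-compatible base URL for LM Studio.
// Inside a container, 127.0.0.1 is the container's own loopback, so the host
// has to be reached through Docker's host.docker.internal alias instead.
function pickLmStudioBaseUrl(inDocker: boolean, port = 1234): string {
  const host = inDocker ? "host.docker.internal" : "127.0.0.1";
  return `http://${host}:${port}/v1`;
}

console.log(pickLmStudioBaseUrl(probablyInDocker()));
```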

I’m running both locally (LM Studio and oTToDev) because I’m not experienced with Docker and have had a lot of problems with it…

cURL works correctly, but inside oTToDev I’m getting the same error as in this topic.

The same happens when I use Ollama locally: Ollama responds to curl, but oTToDev can’t communicate with the local server, even though both the LM Studio and Ollama servers are working well.

A work-in-progress PR should resolve some issues with provider and model selection that seem to affect OpenAILike and Ollama in a similar way. We’ll update here when it is merged, likely soon.

Thanks for replying. I’m still trying to solve this, but it’s much more difficult than Python lol

Here in Brazil it’s 02:20 AM and I can’t sleep because I can’t solve this issue.

But thanks to your reply, I think I can sleep now.

I don’t know if it helps, but I’m able to use LM Studio after enabling the “Serve on local network” option; now oTToDev can make requests to LM Studio.

In oTToDev’s network tab, I can see that the requests go to this endpoint: http://localhost:5173/api/chat

Since I haven’t found where the requests are made, I can’t tell why oTToDev doesn’t send requests to http://localhost:1234 directly, or why it only works when the local server is served on the local network rather than only on localhost.

I’m very happy because I found a workaround for now, and I’m excited for the next PR that fixes this. I hope my reply helps the team solve this issue. Thanks for everything! I think I’ll stop paying for ChatGPT Plus (it’s very expensive when converted to BRL) and maybe just use the API and pay as I go. This preview feature is the best thing I’ve ever seen, and I’m not limited to the Claude platform for it. I’m very happy, thanks a lot for the work!!!
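On the question of why the browser only shows calls to /api/chat: the stack traces in this thread show `chatAction` in `app/routes/api.chat.ts` making the LLM call on the server, so it is the oTToDev server process, not the browser, that connects to port 1234. The “Serve on local network” workaround fits a listening-address explanation: presumably that option makes LM Studio accept connections on all network interfaces instead of only on loopback, though that is an assumption about LM Studio, not something confirmed here. One way to see which addresses accept connections, run from the same machine or container as the oTToDev server, is a plain TCP probe using Node's `net` module; `probe`, `formatProbe` and `probeAll` are hypothetical names.

```typescript
import { createConnection } from "node:net";

type ProbeResult = { host: string; port: number; ok: boolean; error?: string };

// Tries a TCP connection and resolves (never rejects) with the outcome.
function probe(host: string, port: number, timeoutMs = 2000): Promise<ProbeResult> {
  return new Promise((resolve) => {
    const socket = createConnection({ host, port });
    const finish = (ok: boolean, error?: string) => {
      socket.destroy();
      resolve({ host, port, ok, error });
    };
    socket.setTimeout(timeoutMs);
    socket.once("connect", () => finish(true));
    socket.once("timeout", () => finish(false, "timeout"));
    socket.once("error", (err: NodeJS.ErrnoException) => finish(false, err.code ?? err.message));
  });
}

// One line per host, e.g. "127.0.0.1:1234 -> ECONNREFUSED".
function formatProbe(r: ProbeResult): string {
  return `${r.host}:${r.port} -> ${r.ok ? "open" : r.error}`;
}

// Compares the IPv4 loopback, the IPv6 loopback, and the name "localhost".
async function probeAll(port = 1234): Promise<string[]> {
  const hosts = ["127.0.0.1", "::1", "localhost"];
  const results = await Promise.all(hosts.map((h) => probe(h, port)));
  return results.map(formatProbe);
}
```

Calling `probeAll().then((lines) => console.log(lines.join("\n")))` shows which of these addresses LM Studio accepts; `ECONNREFUSED` on `::1` alongside `open` on `127.0.0.1` would match the IPv6 error reported further down this thread.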

Can you send the link to the changes you made, @Just4D3v?

FYI, if you are using LM Studio as an OpenAILike provider, the full URL is http://[hostname]:1234/v1. That gives access to both the chat completion and model APIs while LM Studio acts as the LLM server. See the section below in the ‘Developer’ area where the HTTP server is started; you should be able to run that curl command successfully from wherever you are trying to access it.

Hope that helps

My response (I only have one embedding model loaded in LM Studio right now):

```
$ curl http://0.0.0.0:1234/v1/models/
{
  "data": [
    {
      "id": "text-embedding-nomic-embed-text-v1.5",
      "object": "model",
      "owned_by": "organization_owner"
    }
  ],
  "object": "list"
}
```
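The same check can be scripted and run on the machine (or inside the container) that runs oTToDev, which is where the chat request is actually sent from, judging by the server-side `chatAction` frames in the stack traces in this thread. The sketch assumes Node.js 18+ for the global `fetch` and `AbortSignal.timeout`; `endpoint`, `parseModelIds` and `listModelIds` are hypothetical names. It also shows a slash pitfall: resolving `models` against `http://localhost:1234/v1` with the `URL` constructor drops the `/v1` segment, because a base without a trailing slash has its last segment replaced.

```typescript
// Response shape of GET /v1/models, matching the curl output above.
interface ModelList {
  object: string;
  data: { id: string; object: string; owned_by: string }[];
}

// Hypothetical helper: joins a base URL and an API path with exactly one "/".
function endpoint(baseUrl: string, path: string): string {
  return `${baseUrl.replace(/\/+$/, "")}/${path.replace(/^\/+/, "")}`;
}

// Pure parsing step, kept separate so it can be checked without a server.
function parseModelIds(body: ModelList): string[] {
  return body.data.map((m) => m.id);
}

// Rough equivalent of `curl <base>/models`, failing fast on an unreachable host.
async function listModelIds(baseUrl: string, timeoutMs = 5000): Promise<string[]> {
  const url = endpoint(baseUrl, "models");
  const res = await fetch(url, { signal: AbortSignal.timeout(timeoutMs) });
  if (!res.ok) throw new Error(`GET ${url} -> HTTP ${res.status}`);
  return parseModelIds((await res.json()) as ModelList);
}

// The pitfall endpoint() avoids: URL resolution replaces the last path segment.
const naive = new URL("models", "http://localhost:1234/v1").href; // http://localhost:1234/models
const joined = endpoint("http://localhost:1234/v1", "models"); // http://localhost:1234/v1/models
console.log({ naive, joined });
```

For example, `listModelIds("http://127.0.0.1:1234/v1").then(console.log)` should print the same IDs as the curl call. A base of `http://0.0.0.0:1234/v1` generally works as a client address on Linux and macOS but not on Windows, so `127.0.0.1` is the more portable choice.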

I can’t get it to work. When I start Bolt on http://localhost:5173/, I can see LM Studio receiving the request to /v1/models, so Bolt gets the list of all the models I have downloaded in LM Studio. But when I start a chat, it returns ECONNREFUSED:

```
lastError: APICallError [AI_APICallError]: Cannot connect to API: connect ECONNREFUSED ::1:1234
at postToApi (file:///C:/Bolt/bolt.diy/node_modules/.pnpm/@ai-sdk+provider-utils@1.0.20_zod@3.23.8/node_modules/@ai-sdk/provider-utils/src/post-to-api.ts:137:15)
at processTicksAndRejections (node:internal/process/task_queues:105:5)
at OpenAIChatLanguageModel.doStream (file:///C:/Bolt/bolt.diy/node_modules/.pnpm/@ai-sdk+openai@0.0.66_zod@3.23.8/node_modules/@ai-sdk/openai/src/openai-chat-language-model.ts:386:50)
at fn (file:///C:/Bolt/bolt.diy/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/generate-text/stream-text.ts:378:23)
at file:///C:/Bolt/bolt.diy/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/telemetry/record-span.ts:18:22
at _retryWithExponentialBackoff (file:///C:/Bolt/bolt.diy/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/util/retry-with-exponential-backoff.ts:37:12)
at startStep (file:///C:/Bolt/bolt.diy/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/generate-text/stream-text.ts:333:13)
at fn (file:///C:/Bolt/bolt.diy/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/generate-text/stream-text.ts:414:11)
at file:///C:/Bolt/bolt.diy/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/telemetry/record-span.ts:18:22
at chatAction (C:/Bolt/bolt.diy/app/routes/api.chat.ts:66:20)
at Object.callRouteAction (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+server-runtime@2.15.0_typescript@5.7.2\node_modules\@remix-run\server-runtime\dist\data.js:36:16)
at C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4899:19
at callLoaderOrAction (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4963:16)
at async Promise.all (index 0)
at defaultDataStrategy (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4772:17)
at callDataStrategyImpl (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:4835:17)
at callDataStrategy (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3992:19)
at submit (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3755:21)
at queryImpl (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3684:22)
at Object.queryRoute (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+router@1.21.0\node_modules\@remix-run\router\router.ts:3629:18)
at handleResourceRequest (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+server-runtime@2.15.0_typescript@5.7.2\node_modules\@remix-run\server-runtime\dist\server.js:402:20)
at requestHandler (C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+server-runtime@2.15.0_typescript@5.7.2\node_modules\@remix-run\server-runtime\dist\server.js:156:18)
at C:\Bolt\bolt.diy\node_modules\.pnpm\@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_3djlhh3t6jbfog2cydlrvgreoy\node_modules\@remix-run\dev\dist\vite\cloudflare-proxy-plugin.js:70:25 {
cause: Error: connect ECONNREFUSED ::1:1234
at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1615:16)
at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {
errno: -4078,
code: 'ECONNREFUSED',
syscall: 'connect',
address: '::1',
port: 1234
},
url: 'http://localhost:1234/v1/chat/completions',
requestBodyValues: {
model: 'claude-3-5-sonnet-latest',
logit_bias: undefined,
logprobs: undefined,
top_logprobs: undefined,
user: undefined,
parallel_tool_calls: undefined,
max_tokens: 8000,
temperature: 0,
top_p: undefined,
frequency_penalty: undefined,
presence_penalty: undefined,
stop: undefined,
seed: undefined,
max_completion_tokens: undefined,
store: undefined,
metadata: undefined,
response_format: undefined,
messages: [Array],
tools: undefined,
tool_choice: undefined,
stream: true,
stream_options: undefined
},
statusCode: undefined,
responseHeaders: undefined,
responseBody: undefined,
isRetryable: true,
data: undefined,
[Symbol(vercel.ai.error)]: true,
[Symbol(vercel.ai.error.AI_APICallError)]: true
},
[Symbol(vercel.ai.error)]: true,
[Symbol(vercel.ai.error.AI_RetryError)]: true
}
```

Turning on “Serve on Local Network” in LM Studio doesn’t fix it in my case.

Any clue?
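In this log the refused address is `::1`, the IPv6 loopback, whereas the first log in this thread showed `127.0.0.1`. Since Node.js 17, DNS lookups return addresses in the order the OS resolver gives them instead of putting IPv4 first, so `localhost` can resolve to `::1`. If LM Studio is only listening on IPv4, that connection is refused even though curl to `0.0.0.0` or `127.0.0.1` succeeds. This is a plausible reading of the log, not a confirmed diagnosis. The sketch below prints what `localhost` resolves to and rewrites a base URL to use `127.0.0.1` explicitly; `forceIpv4Loopback` is a hypothetical helper, and the practical equivalent is simply using `http://127.0.0.1:1234/v1` instead of `localhost` as the base URL. Node's `--dns-result-order=ipv4first` flag is another way to restore IPv4-first ordering.

```typescript
import { lookup } from "node:dns/promises";

// Prints every address "localhost" resolves to, in the order Node.js gets them.
// If "::1" (IPv6) comes first, an IPv4-only server on 127.0.0.1 can refuse
// the connection that fetch("http://localhost:1234/...") attempts.
async function showLocalhost(): Promise<void> {
  const addrs = await lookup("localhost", { all: true });
  for (const a of addrs) console.log(`IPv${a.family} ${a.address}`);
}

// Hypothetical helper: swaps the "localhost" hostname for the IPv4 loopback,
// leaving any other host, the port, and the path untouched.
function forceIpv4Loopback(baseUrl: string): string {
  const u = new URL(baseUrl);
  if (u.hostname === "localhost") u.hostname = "127.0.0.1";
  return u.href; // note: a bare origin like "http://localhost:1234" gains a trailing "/"
}
```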

Same issue. Can’t connect to LM Studio.

Bolt.diy sees the list of models, but chatting fails:

```
app-prod-1 | [WARN] Failed to get Ollama models: Network connection lost.
app-prod-1 | Error: Network connection lost.
app-prod-1 | at async postToApi (file:///app/node_modules/.pnpm/@ai-sdk+provider-utils@1.0.20_zod@3.23.8/node_modules/@ai-sdk/provider-utils/src/post-to-api.ts:65:22)
app-prod-1 | at async Object.doStream (file:///app/node_modules/.pnpm/@ai-sdk+openai@0.0.66_zod@3.23.8/node_modules/@ai-sdk/openai/src/openai-chat-language-model.ts:386:50)
app-prod-1 | at async fn (file:///app/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/generate-text/stream-text.ts:378:23)
app-prod-1 | at null.<anonymous> (async file:///app/.wrangler/tmp/dev-VmM9d5/functionsWorker-0.629418405007115.js:32603:22)
app-prod-1 | at async _retryWithExponentialBackoff (file:///app/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/util/retry-with-exponential-backoff.ts:37:12)
app-prod-1 | at async startStep (file:///app/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/generate-text/stream-text.ts:333:13)
app-prod-1 | at async fn (file:///app/node_modules/.pnpm/ai@3.4.33_react@18.3.1_sswr@2.1.0_svelte@5.4.0__svelte@5.4.0_vue@3.5.13_typescript@5.7.2__zod@3.23.8/node_modules/ai/core/generate-text/stream-text.ts:414:11)
app-prod-1 | at null.<anonymous> (async file:///app/.wrangler/tmp/dev-VmM9d5/functionsWorker-0.629418405007115.js:32603:22)
app-prod-1 | at async chatAction (file:///app/build/server/index.js:1744:20)
app-prod-1 | at async Object.callRouteAction (file:///app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/data.js:36:16) {
app-prod-1 | retryable: true
app-prod-1 | }
app-prod-1 | [wrangler:inf] POST /api/chat 500 Internal Server Error (306ms)
```

Any ideas how to fix this?

Bolt is in a Docker container. In the config: LMSTUDIO_API_BASE_URL=http://host.docker.internal:1234/
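Two things are worth checking with this setup. On Linux, `host.docker.internal` only resolves inside a container if it was started with `--add-host=host.docker.internal:host-gateway` (or the Compose `extra_hosts` equivalent); Docker Desktop on Windows and macOS defines it automatically. And because the request arrives from the Docker network rather than from loopback, LM Studio has to listen beyond `127.0.0.1`, which is presumably what “Serve on local network” enables. The sketch below is meant to be run inside the container: it resolves the hostname from `LMSTUDIO_API_BASE_URL`, then requests the model list, so a DNS failure and a connection failure show up separately. How oTToDev itself combines this variable with `/v1` is not shown in this thread, so the hypothetical `modelsUrlFrom` helper accepts the base URL with or without `/v1`.

```typescript
import { lookup } from "node:dns/promises";

// Assumption: the variable may be given with or without the /v1 suffix, so
// this hypothetical helper normalizes it to ".../v1/models" either way.
function modelsUrlFrom(base: string): string {
  const trimmed = base.replace(/\/+$/, "");
  const withV1 = trimmed.endsWith("/v1") ? trimmed : `${trimmed}/v1`;
  return `${withV1}/models`;
}

// Run inside the container: DNS first, then HTTP, so the two failure modes
// (unknown hostname vs. refused or lost connection) are reported separately.
async function checkFromContainer(base = process.env.LMSTUDIO_API_BASE_URL ?? ""): Promise<void> {
  if (!base) throw new Error("LMSTUDIO_API_BASE_URL is not set");
  const url = modelsUrlFrom(base);
  const { hostname } = new URL(url);
  const addrs = await lookup(hostname, { all: true }); // rejects with ENOTFOUND if unresolvable
  console.log(`${hostname} ->`, addrs.map((a) => a.address).join(", "));
  const res = await fetch(url, { signal: AbortSignal.timeout(5000) }); // Node.js 18+
  console.log(`GET ${url} -> HTTP ${res.status}`);
}
```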