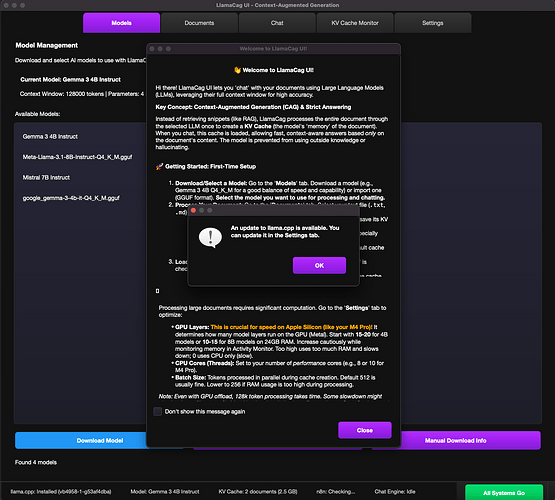

After some debugging, this is now in alpha. Additional context is in my other post.

Link to new Purple UI. Leaving main up in case I broke something.

LlamaCag: Exploring Context-Augmented Generation for Local LLMs

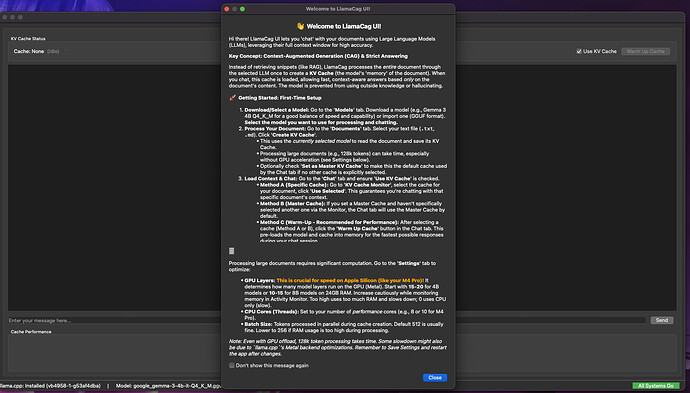

TLDR: LlamaCag isn’t just another document chat app - it’s an exploration into Context-Augmented Generation (CAG), a powerful but underutilized approach to working with documents in local LLMs. Unlike mainstream RAG systems that fragment documents into chunks, CAG keeps the whole document in the context window and reuses the model’s saved internal state (its KV cache) across queries.

What is Context-Augmented Generation?

CAG is fundamentally different from how most systems handle documents today:

- RAG (Retrieval-Augmented Generation): Splits documents into chunks, stores in vector DBs, retrieves relevant chunks for each query

- Manual Insertion: Directly places document text into each prompt, requiring full reprocessing every time

- CAG: Processes the document ONCE to populate the model’s KV (Key-Value) cache state, then saves and reuses this state
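To make the CAG bullet concrete, here is a minimal sketch of the process-once, save, and reuse pattern. It is not LlamaCag's actual code: `ToyModel` and its `eval` / `save_state` / `load_state` methods are hypothetical stand-ins for whatever a real local runtime exposes, and the "KV cache" is just a list of token IDs so the example runs without a model file.

```python
import pickle
from dataclasses import dataclass, field


@dataclass
class ToyModel:
    """Hypothetical stand-in for a local LLM runtime (not a real library API)."""
    kv_cache: list = field(default_factory=list)  # tokens already processed
    evaluated: int = 0                            # running count of tokens processed

    def eval(self, tokens):
        # A real runtime would run a forward pass here and fill the KV cache.
        self.kv_cache.extend(tokens)
        self.evaluated += len(tokens)

    def save_state(self) -> bytes:
        # Serialize the cache so it can be kept in memory or written to disk.
        return pickle.dumps(self.kv_cache)

    def load_state(self, blob: bytes) -> None:
        # Replace the current cache with a previously saved one.
        self.kv_cache = pickle.loads(blob)


def build_document_cache(model, doc_tokens) -> bytes:
    """Process the document once and return the serialized cache state."""
    model.load_state(pickle.dumps([]))  # start from an empty cache
    model.eval(doc_tokens)
    return model.save_state()


def ask(model, doc_state: bytes, question_tokens) -> int:
    """Restore the document state, then process only the question tokens."""
    before = model.evaluated
    model.load_state(doc_state)
    model.eval(question_tokens)
    return model.evaluated - before  # tokens processed for this query


model = ToyModel()
state = build_document_cache(model, list(range(1000)))  # pretend 1000-token document
print(ask(model, state, [1, 2, 3]))  # 3
print(ask(model, state, [4, 5]))     # 2
```

Each question costs only its own tokens because the document portion is restored from the saved state rather than re-evaluated. In a real runtime the saved state is a much larger tensor blob, and loading it has its own cost, which this sketch ignores.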

Despite its potential advantages, CAG remains largely unexplored outside professional ML engineering circles and specialized enterprise solutions. This project aims to change that.

Why CAG Matters for Local LLM Usage

The current landscape of local LLM applications mostly ignores this approach, despite several compelling advantages:

- Efficiency: Document is processed once, not repeatedly for each query

- Context Integrity: Maintains the complete document context without fragmentation

- Speed: Faster responses after the initial processing pass, since only the new question has to be evaluated

- Privacy: Fully local operation for sensitive documents

- Accuracy: Potentially higher factual accuracy, because the model sees the complete document rather than retrieved fragments
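The efficiency and speed points come down to simple arithmetic on how many prompt tokens get processed. The sketch below compares manual insertion (document re-read for every query) with CAG (document read once). The function name and the numbers are illustrative assumptions, not measurements from LlamaCag, and the count ignores generation time and the cost of loading a saved state.

```python
def prompt_tokens_processed(doc_tokens: int, question_tokens: int, n_queries: int):
    """Return (manual_insertion, cag) prompt-token counts for n_queries questions."""
    # Manual insertion: the full document plus the question, every time.
    manual = n_queries * (doc_tokens + question_tokens)
    # CAG: the document once, then only the question per query.
    cag = doc_tokens + n_queries * question_tokens
    return manual, cag


# Illustrative numbers only: an 8000-token document, 50-token questions, 20 queries.
manual, cag = prompt_tokens_processed(doc_tokens=8000, question_tokens=50, n_queries=20)
print(manual, cag)  # 161000 9000
```

The gap grows with both document length and the number of questions, which is why the approach pays off most for long documents that are queried repeatedly.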

For business applications requiring high-precision document analysis (legal, technical, medical), this approach offers a significant advantage that isn’t being widely leveraged.

The Exploration Journey

LlamaCag represents both:

- An effort to build a production-ready application for practical use

- A research journey exploring an alternative paradigm for local LLM document interaction

The project investigates the practical implementation challenges, performance characteristics, and user experience possibilities of CAG in a local environment - something that typically requires more specialized development.

Beyond the Application

The longer-term vision extends beyond the standalone application:

- Validation Layer: Serving as a verification system to catch hallucinations in other AI outputs

- Integration Framework: Creating a reusable component for N8N and other workflow systems

- Architecture Pattern: Demonstrating how CAG can be integrated into larger AI ecosystems

Current Status

The project is in alpha and has been tested primarily on a Mac with 24 GB of RAM using Gemma 3 4B Q4_K_M. It’s a functional exploration platform for observing CAG behavior and performance across different scenarios.

This represents an important step in democratizing access to techniques that have previously remained in the domain of specialized ML engineering teams, making them accessible to broader developer and business communities.

Check out the repo for the comprehensive guide and join the exploration of this underutilized approach to local LLM document processing!