So I have been playing around with this and hit a wall. I finally just straight up asked Sonnet 3.7 to troubleshoot it for me.

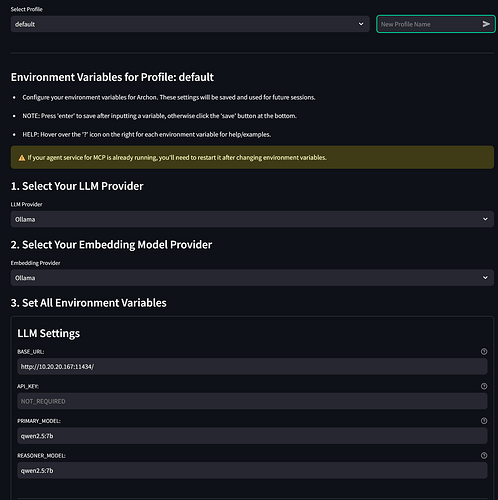

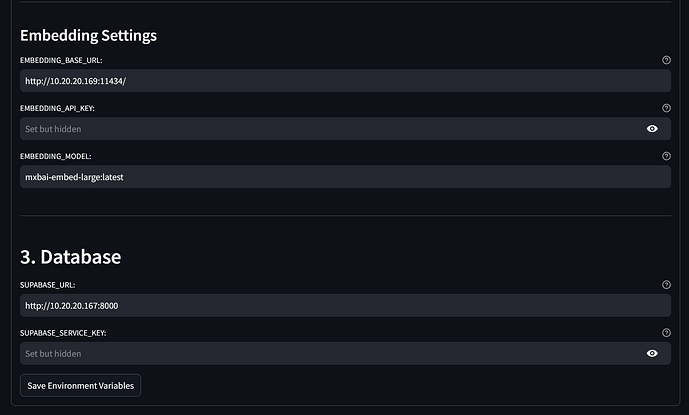

My scenario: I am running two distinct Ollama instances, one for text embeddings and one for the main LLM "stuff". On the main Ollama instance I am using qwen2.5:7b. Supabase is vanilla.
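To rule out basic connectivity in a two-instance setup like this, here is a minimal stdlib sketch that asks each Ollama server which models it has pulled, using Ollama's native `GET /api/tags` endpoint. The main address is the home server from the log further down; the embedding address `192.0.2.10` is a hypothetical placeholder, and the helper names are illustrative only.

```python
import json
import urllib.request

# Main (chat) instance: the home server that appears in the log in this post.
MAIN_OLLAMA = "http://10.20.20.167:11434"
# HYPOTHETICAL address for the embedding instance; replace with the real one.
EMBED_OLLAMA = "http://192.0.2.10:11434"


def tags_url(base: str) -> str:
    """Return the URL of Ollama's native model-listing endpoint (GET /api/tags)."""
    return base.rstrip("/") + "/api/tags"


def list_models(base: str, timeout: float = 3.0) -> list[str]:
    """Return the names of the models an Ollama instance reports as pulled."""
    with urllib.request.urlopen(tags_url(base), timeout=timeout) as resp:
        payload = json.load(resp)
    return [m["name"] for m in payload.get("models", [])]


if __name__ == "__main__":
    for label, base in (("main", MAIN_OLLAMA), ("embedding", EMBED_OLLAMA)):
        try:
            print(label, base, list_models(base))
        # URLError and timeouts are OSError subclasses; ValueError covers a non-JSON reply.
        except (OSError, ValueError) as exc:
            print(label, base, "unreachable or unexpected reply:", exc)
```

If the main instance is healthy, its list should include `qwen2.5:7b`.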

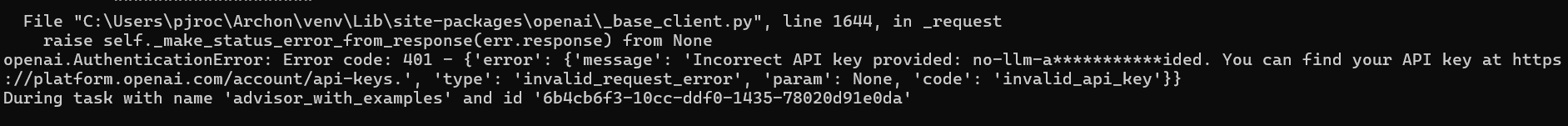

What Windsurf + Sonnet 3.7 told me roughly lines up with my experience, and it's similar to what others have reported. When I try to use Ollama, I get this error in the Docker container logs:

```
openai.AuthenticationError: Error code: 401 - {'error': {'message': 'Incorrect API key provided: no-llm-a***********ided. You can find your API key at https://platform.openai.com/account/api-keys.', 'type': 'invalid_request_error', 'param': None, 'code': 'invalid_api_key'}}
During task with name 'advisor_with_examples' and id '4fac2f08-825a-b456-91ae-9ad2600f7535'
```
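The 401 text points at platform.openai.com, and Ollama does not authenticate requests at all, which suggests this `advisor_with_examples` call reached OpenAI rather than Ollama. One way that can happen: the openai-python client, when it is given no `base_url`, falls back to the `OPENAI_BASE_URL` environment variable and then to `https://api.openai.com/v1`. Below is a stdlib sketch of that fallback order (not Archon code); `effective_base_url` and `points_at_openai` are hypothetical helpers.

```python
import os
from typing import Mapping, Optional
from urllib.parse import urlparse

# Default endpoint openai-python uses when neither base_url nor OPENAI_BASE_URL is set.
OPENAI_DEFAULT_BASE_URL = "https://api.openai.com/v1"


def effective_base_url(explicit: Optional[str], env: Optional[Mapping[str, str]] = None) -> str:
    """Mirror the client's fallback order: explicit base_url, then OPENAI_BASE_URL, then the public API."""
    env = os.environ if env is None else env
    if explicit is not None:
        return explicit
    from_env = env.get("OPENAI_BASE_URL")
    if from_env is not None:
        return from_env
    return OPENAI_DEFAULT_BASE_URL


def points_at_openai(base_url: str) -> bool:
    """True if requests built on this base URL would go to OpenAI's hosted API."""
    return urlparse(base_url).hostname == "api.openai.com"
```

So an agent whose model was built without an explicit Ollama base URL, in a container without `OPENAI_BASE_URL`, would send its placeholder key to OpenAI and get exactly this kind of 401.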

I get this error even when I set all the API keys to hard-coded Ollama keys.
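Since Ollama ignores API keys, the value of a hard-coded key should not change anything; clients like openai-python only require that some string is set. For testing the main instance outside Archon, here is a rough stdlib sketch that builds a request to Ollama's OpenAI-compatible chat endpoint with a placeholder key. `build_chat_request` and the `"ollama"` key are illustrative, not Archon settings.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "ollama") -> urllib.request.Request:
    """Build (but do not send) a POST to {base_url}/chat/completions.

    base_url should already end in /v1 for Ollama's OpenAI-compatible API.
    Ollama ignores the bearer token; it is only there because OpenAI-style clients send one.
    """
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = build_chat_request("http://10.20.20.167:11434/v1", "qwen2.5:7b", "Say hello")
# To actually send it from inside the Archon container:
# with urllib.request.urlopen(req, timeout=60) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

If this works from inside the container but Archon still fails, the problem is in how Archon builds its clients rather than in Ollama itself.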

Not sure if this helps, but this is what I see. Again, I am trying to self-host 100%, and this problem only seems to occur when I set things up that way.

Below is the full traceback. 10.20.20.167 is my home server, where the "primary" Ollama instance is running:

```
INFO:httpx:HTTP Request: GET http://10.20.20.167:8000/rest/v1/site_pages?select=id&limit=1 "HTTP/1.1 200 OK"
INFO:httpx:HTTP Request: GET http://10.20.20.167:8000/rest/v1/site_pages?select=%2A "HTTP/1.1 200 OK"
INFO:httpx:HTTP Request: GET http://10.20.20.167:8000/rest/v1/site_pages?select=url&metadata-%3E%3Esource=eq.pydantic_ai_docs "HTTP/1.1 200 OK"
INFO:httpx:HTTP Request: POST http://10.20.20.167:11434/chat/completions "HTTP/1.1 404 Not Found"
```
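The last log line is a 404 for `POST http://10.20.20.167:11434/chat/completions`. Ollama serves its OpenAI-compatible routes under `/v1` (e.g. `/v1/chat/completions`), so the base URL handed to the OpenAI client probably needs a `/v1` suffix. A small sketch of that normalization; the helper names are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def ollama_openai_base(url: str) -> str:
    """Return an OpenAI-compatible base URL for an Ollama server, appending /v1 if it is missing."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/")
    if not path.endswith("/v1"):
        path += "/v1"
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


def chat_completions_url(base: str) -> str:
    """Join the chat route onto a base URL, matching the request path seen in the log."""
    return base.rstrip("/") + "/chat/completions"
```

With this, `http://10.20.20.167:11434` becomes `http://10.20.20.167:11434/v1`, and the chat call would go to `/v1/chat/completions` instead of the root-level path that returned 404.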

```
2025-04-14 02:00:03.606 Uncaught app execution
Traceback (most recent call last):
  File "/usr/local/lib/python3.12/site-packages/streamlit/runtime/scriptrunner/exec_code.py", line 88, in exec_func_with_error_handling
    result = func()
             ^^^^^^
  File "/usr/local/lib/python3.12/site-packages/streamlit/runtime/scriptrunner/script_runner.py", line 579, in code_to_exec
    exec(code, module.__dict__)
  File "/app/streamlit_ui.py", line 114, in <module>
    asyncio.run(main())
  File "/usr/local/lib/python3.12/asyncio/runners.py", line 195, in run
    return runner.run(main)
           ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/asyncio/runners.py", line 118, in run
    return self._loop.run_until_complete(task)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/asyncio/base_events.py", line 691, in run_until_complete
    return future.result()
           ^^^^^^^^^^^^^^^
  File "/app/streamlit_ui.py", line 93, in main
    await chat_tab()
  File "/app/streamlit_pages/chat.py", line 81, in chat_tab
    async for chunk in run_agent_with_streaming(user_input):
  File "/app/streamlit_pages/chat.py", line 30, in run_agent_with_streaming
    async for msg in agentic_flow.astream(
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/__init__.py", line 2007, in astream
    async for _ in runner.atick(
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/runner.py", line 527, in atick
    _panic_or_proceed(
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/runner.py", line 619, in _panic_or_proceed
    raise exc
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/retry.py", line 128, in arun_with_retry
    return await task.proc.ainvoke(task.input, config)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/langgraph/utils/runnable.py", line 532, in ainvoke
    input = await step.ainvoke(input, config, **kwargs)
            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/langgraph/utils/runnable.py", line 320, in ainvoke
    ret = await asyncio.create_task(coro, context=context)
          ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/app/archon/archon_graph.py", line 105, in define_scope_with_reasoner
    result = await reasoner.run(prompt)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_ai/agent.py", line 340, in run
    end_result, _ = await graph.run(
                    ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_graph/graph.py", line 187, in run
    next_node = await self.next(next_node, history, state=state, deps=deps, infer_name=False)
                ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_graph/graph.py", line 263, in next
    next_node = await node.run(ctx)
                ^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_ai/_agent_graph.py", line 254, in run
    model_response, request_usage = await agent_model.request(ctx.state.message_history, model_settings)
                                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_ai/models/openai.py", line 167, in request
    response = await self._completions_create(messages, False, cast(OpenAIModelSettings, model_settings or {}))
<truncated 40 lines>
    await chat_tab()
  File "/app/streamlit_pages/chat.py", line 81, in chat_tab
    async for chunk in run_agent_with_streaming(user_input):
  File "/app/streamlit_pages/chat.py", line 36, in run_agent_with_streaming
    async for msg in agentic_flow.astream(
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/__init__.py", line 2007, in astream
    async for _ in runner.atick(
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/runner.py", line 527, in atick
    _panic_or_proceed(
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/runner.py", line 619, in _panic_or_proceed
    raise exc
  File "/usr/local/lib/python3.12/site-packages/langgraph/pregel/retry.py", line 128, in arun_with_retry
    return await task.proc.ainvoke(task.input, config)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/langgraph/utils/runnable.py", line 532, in ainvoke
    input = await step.ainvoke(input, config, **kwargs)
            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/langgraph/utils/runnable.py", line 320, in ainvoke
    ret = await asyncio.create_task(coro, context=context)
          ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/app/archon/archon_graph.py", line 140, in advisor_with_examples
    result = await advisor_agent.run(state['latest_user_message'], deps=deps)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_ai/agent.py", line 340, in run
    end_result, _ = await graph.run(
                    ^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_graph/graph.py", line 187, in run
    next_node = await self.next(next_node, history, state=state, deps=deps, infer_name=False)
                ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_graph/graph.py", line 263, in next
    next_node = await node.run(ctx)
                ^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_ai/_agent_graph.py", line 254, in run
    model_response, request_usage = await agent_model.request(ctx.state.message_history, model_settings)
                                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_ai/models/openai.py", line 167, in request
    response = await self._completions_create(messages, False, cast(OpenAIModelSettings, model_settings or {}))
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/pydantic_ai/models/openai.py", line 203, in _completions_create
    return await self.client.chat.completions.create(
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/openai/resources/chat/completions.py", line 1720, in create
    return await self._post(
           ^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/openai/_base_client.py", line 1849, in post
    return await self.request(cast_to, opts, stream=stream, stream_cls=stream_cls)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/openai/_base_client.py", line 1543, in request
    return await self._request(
           ^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.12/site-packages/openai/_base_client.py", line 1644, in _request
    raise self._make_status_error_from_response(err.response) from None
openai.AuthenticationError: Error code: 401 - {'error': {'message': 'Incorrect API key provided: no-llm-a***********ided. You can find your API key at https://platform.openai.com/account/api-keys.', 'type': 'invalid_request_error', 'param': None, 'code': 'invalid_api_key'}}
During task with name 'advisor_with_examples' and id '4fac2f08-825a-b456-91ae-9ad2600f7535'
```