It’s probably been right in front of me the whole time, but I’m not sure whether oTToDev has been released for anyone to install, or where to learn how to do that. Any help would be greatly appreciated. Sorry if it’s been there and I just missed it.

Here is the current main branch of the repo; please follow the README instructions for getting started: coleam00/bolt.new-any-llm on GitHub (https://github.com/coleam00/bolt.new-any-llm), "Prompt, run, edit, and deploy full-stack web applications using any LLM you want!"

Great, quick response, Mahoney, for the folks just getting started.

And…

I installed Bolt/Otto before Cole put this great community together.

If I install the newest version using Docker, will the process delete my current work in progress?

Thank you so much, I really appreciate it.
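One cautious approach before updating, assuming your work in progress lives inside the cloned project folder, is to copy that folder first so you keep a copy whatever the update does. A minimal sketch; the function name and the backup naming scheme are made up for illustration:

```bash
# Sketch: copy a project folder to a timestamped sibling before updating it.
# Assumes your work lives in the folder given as $1 (e.g. ~/bolt.new-any-llm).
backup_checkout() {
  local src="${1%/}"
  local dest
  if [ ! -d "$src" ]; then
    echo "no such directory: $src" >&2
    return 1
  fi
  dest="${src}.backup-$(date +%Y%m%d-%H%M%S)"
  # -a preserves permissions/timestamps and copies hidden files such as .env.local.
  cp -a "$src" "$dest" && printf '%s\n' "$dest"
}

# Usage:
#   backup_checkout ~/bolt.new-any-llm
#   cd ~/bolt.new-any-llm && git pull
```

The function prints the backup path, so you can note where the copy went before pulling or rebuilding.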

I’ll help you install this project on Arch Linux. Based on the README instructions, here are the steps specifically for Arch Linux:

- First, install the required dependencies using pacman:

```bash
sudo pacman -S git nodejs npm
```

- Install pnpm globally:

```bash
sudo npm install -g pnpm
```

- Clone the repository:

```bash
git clone https://github.com/coleam00/bolt.new-any-llm.git
cd bolt.new-any-llm
```

- Create and configure the environment file:

```bash
cp .env.example .env.local
```

- Edit the .env.local file with your preferred text editor (e.g., nano or vim):

```bash
nano .env.local
```

Add your API keys as needed:

```
GROQ_API_KEY=your_groq_key
OPENAI_API_KEY=your_openai_key
ANTHROPIC_API_KEY=your_anthropic_key
```

- Install project dependencies:

```bash
pnpm install
```

- Start the development server:

```bash
pnpm run dev
```
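If `pnpm run dev` fails with "command not found", a quick check that the tools from the first two steps are actually on your PATH can narrow it down. A small sketch (`check_prereqs` is a hypothetical helper, not part of the project):

```bash
# Sketch: report any of the given commands that are not on PATH.
# Returns non-zero if at least one is missing.
check_prereqs() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_prereqs git node npm pnpm && node --version && pnpm --version
```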

Important notes for Arch Linux users:

- If you plan to use Ollama models, you’ll need to install Ollama separately. You can install it using an AUR helper like yay:

```bash
yay -S ollama-bin
```

- For running with Docker (optional), install Docker and Docker Compose:

```bash
sudo pacman -S docker docker-compose
sudo systemctl enable docker
sudo systemctl start docker
sudo usermod -aG docker $USER
```

(Log out and back in for the group changes to take effect.)

- For development with Chrome Canary (as recommended in the instructions), you can install it from the AUR:

```bash
yay -S google-chrome-canary
```
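Because group membership is only picked up by a new login session, it can be unclear whether the `usermod` step has taken effect yet. A sketch of a check using only `id`, `printf`, and `grep` (the function name is hypothetical):

```bash
# Sketch: does a group list include "docker"?
# No argument: groups of the *current session* (what actually applies right now).
# With a user name: groups recorded in the user database (updated by usermod immediately).
in_docker_group() {
  local groups_list
  if [ "$#" -eq 0 ]; then
    groups_list=$(id -nG)
  else
    groups_list=$(id -nG "$1" 2>/dev/null) || return 1
  fi
  # One group per line, then look for an exact "docker" match.
  printf '%s\n' $groups_list | grep -qx docker
}

# Usage:
#   in_docker_group "$USER" && ! in_docker_group && echo "added, but log out and back in (or run: newgrp docker)"
#   in_docker_group && docker run hello-world   # should work without sudo
```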

Err …

I’m having trouble getting this to work (installing OttoDev and running it locally on my laptop). I’m not sure if there is any other documentation, but the README seems to stop short (or I’m getting confused reading it). Perhaps it is expected that you know how Docker works, and the authors feel there is no need to spell out every step through to launching the application.

Also, if it runs in the browser, then networking gets involved, which raises the question of whether that has to be set up in Docker or whether it is built into OttoDev and you are given the URL and port the server is running on.

I have a very rudimentary understanding of how to use Docker, but that was several years ago and I never really knew much about it. What are the real step-by-step instructions for installing and running this? Is there a single source of truth that describes them? I don’t have the skill level to piece together information from several sources (the Docker docs, the OttoDev README) and work out what to do unless someone helps me make the connections.

Any help on this would be greatly appreciated. I’m running Ubuntu 24.04 Desktop on a newish HP Envy (maybe a few years old now). Thanks

---------------------------------- EDIT ----------------------------------

I think I figured something out. The README seems to walk you through building an image (from the Git repository, I’m guessing?), but it does not explicitly say that you then have to create a container from that image and run it. That means you have to already know that about Docker to perform the last steps. Fortunately I remembered enough to look it up (others in the future may not), got the image ID, and ran it:

```
$ sudo docker run 5189b1b1b4c7

> bolt@ dev /app
> remix vite:dev "--host"

[warn] Data fetching is changing to a single fetch in React Router v7
┃ You can use the `v3_singleFetch` future flag to opt-in early.
┃ -> https://remix.run/docs/en/2.13.1/start/future-flags#v3_singleFetch
┗

➜ Local: http://localhost:5173/
➜ Network: http://172.17.0.2:5173/
```

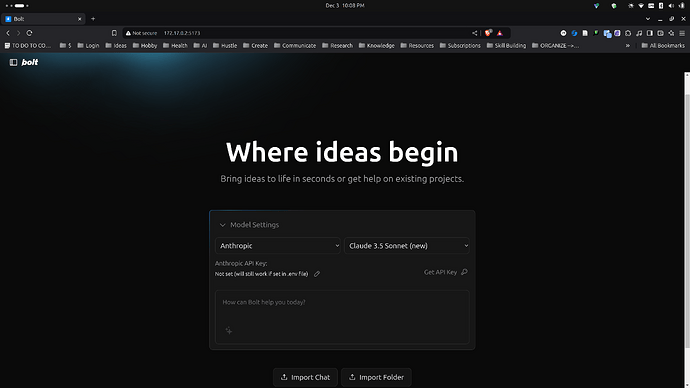

The page will not load (I get the browser’s standard error page) if I use the “Local:” address, but it does load if I use the “Network:” address (see the screenshot). After opening the Network address (http://172.17.0.2:5173/), I see the following additional output. I’ve shown it together with the output above, the way it appeared to me as I went through the steps:

```
$ sudo docker run 5189b1b1b4c7

> bolt@ dev /app
> remix vite:dev "--host"

[warn] Data fetching is changing to a single fetch in React Router v7
┃ You can use the `v3_singleFetch` future flag to opt-in early.
┃ -> https://remix.run/docs/en/2.13.1/start/future-flags#v3_singleFetch
┗

➜ Local: http://localhost:5173/
➜ Network: http://172.17.0.2:5173/

Error getting Ollama models: TypeError: fetch failed
    at node:internal/deps/undici/undici:13185:13
    at processTicksAndRejections (node:internal/process/task_queues:95:5)
    at Object.getOllamaModels [as getDynamicModels] (/app/app/utils/constants.ts:318:22)
    at async Promise.all (index 0)
    at Module.initializeModelList (/app/app/utils/constants.ts:389:9)
    at handleRequest (/app/app/entry.server.tsx:30:3)
    at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:340:12)
    at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:18)
    at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {
  [cause]: Error: connect ECONNREFUSED 127.0.0.1:11434
      at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)
      at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {
    errno: -111,
    code: 'ECONNREFUSED',
    syscall: 'connect',
    address: '127.0.0.1',
    port: 11434
  }
}
6:08:02 AM [vite] ✨ new dependencies optimized: remix-island, ai/react, framer-motion, node:path, diff, jszip, file-saver, @octokit/rest, react-resizable-panels, date-fns, istextorbinary, @radix-ui/react-dialog, @webcontainer/api, @codemirror/autocomplete, @codemirror/commands, @codemirror/language, @codemirror/search, @codemirror/state, @codemirror/view, @radix-ui/react-dropdown-menu, react-markdown, rehype-raw, remark-gfm, rehype-sanitize, unist-util-visit, @uiw/codemirror-theme-vscode, @codemirror/lang-javascript, @codemirror/lang-html, @codemirror/lang-css, @codemirror/lang-sass, @codemirror/lang-json, @codemirror/lang-markdown, @codemirror/lang-wast, @codemirror/lang-python, @codemirror/lang-cpp, shiki, @xterm/addon-fit, @xterm/addon-web-links, @xterm/xterm
6:08:02 AM [vite] ✨ optimized dependencies changed. reloading
Error: No route matches URL "/favicon.ico"
    at getInternalRouterError (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:5505:5)
    at Object.query (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:3527:19)
    at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:275:35)
    at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:24)
    at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25
Error getting Ollama models: TypeError: fetch failed
    at node:internal/deps/undici/undici:13185:13
    at processTicksAndRejections (node:internal/process/task_queues:95:5)
    at Object.getOllamaModels [as getDynamicModels] (/app/app/utils/constants.ts:318:22)
    at async Promise.all (index 0)
    at Module.initializeModelList (/app/app/utils/constants.ts:389:9)
    at handleRequest (/app/app/entry.server.tsx:30:3)
    at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:340:12)
    at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:18)
    at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {
  [cause]: Error: connect ECONNREFUSED 127.0.0.1:11434
      at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)
      at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {
    errno: -111,
    code: 'ECONNREFUSED',
    syscall: 'connect',
    address: '127.0.0.1',
    port: 11434
  }
}
No routes matched location "/favicon.ico"
ErrorResponseImpl {
  status: 404,
  statusText: 'Not Found',
  internal: true,
  data: 'Error: No route matches URL "/favicon.ico"',
  error: Error: No route matches URL "/favicon.ico"
      at getInternalRouterError (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:5505:5)
      at Object.query (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:3527:19)
      at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:275:35)
      at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:24)
      at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25
}
No routes matched location "/favicon.ico"
```

Is this working the way it’s supposed to, or is there something I ought to address based on this output?
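Two things in that log are probably harmless on their own: the `/favicon.ico` 404 only says the app has no route for that icon, and the Ollama error says nothing answered on port 11434. Note that inside a container `127.0.0.1` is the container itself, not your laptop, so even an Ollama server running on the host would not be found at that address. If you do want Ollama, here is a sketch of the checks and URL rewrite involved. It assumes Ollama’s default port and its `/api/tags` endpoint, Docker’s `host-gateway` alias (Docker 20.10 or newer), and that the app reads a variable named `OLLAMA_API_BASE_URL` (an assumption; check the name in your `.env.example`). Ollama on the host may also need `OLLAMA_HOST=0.0.0.0` so it listens beyond loopback.

```bash
# Sketch: helpers for reaching a host-side Ollama from inside a container.

# Inside a container, localhost/127.0.0.1 is the container itself, so rewrite
# a host-side URL to the name Docker maps to the host (see --add-host below).
to_container_url() {
  case "$1" in
    http://localhost*)  printf 'http://host.docker.internal%s\n' "${1#http://localhost}" ;;
    http://127.0.0.1*)  printf 'http://host.docker.internal%s\n' "${1#http://127.0.0.1}" ;;
    *)                  printf '%s\n' "$1" ;;
  esac
}

# Succeeds if an Ollama server answers at the given base URL (default: this machine).
ollama_up() {
  curl -fsS --max-time 3 "${1:-http://localhost:11434}/api/tags" >/dev/null
}

# Usage, on the host (only needed if you use Ollama):
#   OLLAMA_HOST=0.0.0.0 ollama serve
#   ollama_up || echo "nothing answering on port 11434"
# Then start the container with the host alias and the rewritten URL, e.g.:
#   docker run --rm -it -p 5173:5173 \
#     --add-host=host.docker.internal:host-gateway \
#     -e OLLAMA_API_BASE_URL="$(to_container_url http://localhost:11434)" \
#     5189b1b1b4c7
```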

I also get this in the bolt terminal when I start it:

```
Failed to spawn bolt shell

Failed to execute 'postMessage' on 'Worker': SharedArrayBuffer transfer requires self.crossOriginIsolated.
```
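That error is most likely tied to the address used in the browser. `SharedArrayBuffer` needs `self.crossOriginIsolated`, and browsers only allow cross-origin isolation in a secure context: `https://`, or plain `http://` on a loopback host such as `localhost`. `http://172.17.0.2:5173/` is neither, so opening the app at `http://localhost:5173/` (which requires publishing the container port, e.g. `docker run -p 5173:5173 ...`) is the first thing to try. A simplified sketch of that rule (hypothetical helper; it ignores other loopback forms such as `[::1]` and `*.localhost`):

```bash
# Sketch: would a browser treat this page origin as a secure context?
# crossOriginIsolated (required for SharedArrayBuffer) is only possible in one.
is_secure_origin() {
  case "$1" in
    https://*) return 0 ;;
    # Plain http is only trusted on loopback hosts (simplified list).
    http://localhost | http://localhost:* | http://localhost/*) return 0 ;;
    http://127.0.0.1 | http://127.0.0.1:* | http://127.0.0.1/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
#   is_secure_origin http://172.17.0.2:5173/ || echo "not a secure context; try http://localhost:5173/"
# If the app sends Cross-Origin-Opener-Policy / Cross-Origin-Embedder-Policy headers,
# they should show up in:
#   curl -sI http://localhost:5173/ | grep -i '^cross-origin'
```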

I should note that I did not configure anything in .env.local before building the image and launching the container. I did rename .env.example to .env.local, though. My thinking was that you could still poke around the app to see how the UI works without it being connected to any AI.
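Publishing the port at `docker run` time is what makes the “Local:” address usable from the host browser, and an env file can be passed at the same point. A sketch of a wrapper (the name `run_bolt` and the `DRY_RUN` switch are made up here): `-p HOST:5173` publishes the Vite port shown in the log, `--rm` removes the container when it stops (the image stays), and `-it` keeps the output attached so Ctrl+C stops it. Whether the app picks up API keys passed with `--env-file` depends on how it reads its environment, so treat that part as an assumption and follow the README’s Docker instructions where they differ.

```bash
# Sketch: build and run a `docker run` command for the oTToDev image.
# IMAGE is the ID or tag from `docker images` (e.g. 5189b1b1b4c7 above).
# Set DRY_RUN=1 to print the command instead of running it.
run_bolt() {
  local image="$1" port="${2:-5173}" env_file="${3:-}"
  if [ -z "$image" ]; then
    echo "usage: run_bolt IMAGE [HOST_PORT] [ENV_FILE]" >&2
    return 2
  fi
  if [ -n "$env_file" ]; then
    # --env-file passes KEY=value lines into the container at run time.
    set -- docker run --rm -it -p "${port}:5173" --env-file "$env_file" "$image"
  else
    set -- docker run --rm -it -p "${port}:5173" "$image"
  fi
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Usage (prefix with sudo, or run from a shell where in the docker group):
#   run_bolt 5189b1b1b4c7                  # then open http://localhost:5173/
#   run_bolt 5189b1b1b4c7 5173 .env.local
```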