Here’s what the expert says about itself

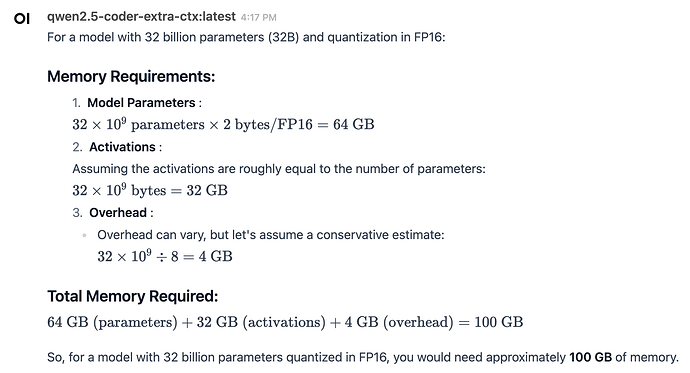

tl;dr: No consumer hardware can host a model that comes close to what the commercial models offer. Qwen32B sounds tempting, but you’d need approx. 100 GB of RAM.

How do I determine the minimum memory required to run an LLM (based on the parameter count and quantization precision)?

ASSISTANT

Determining the minimum memory required to run a Large Language Model (LLM) involves several factors, including the model architecture, the number of parameters, quantization precision, and the specific hardware capabilities. Here’s a step-by-step guide to help you calculate or estimate the memory requirements:

1. Understand the Model Architecture

- Number of Parameters: The number of trainable parameters in the LLM is a key factor. For example, GPT-2 small has on the order of a hundred million parameters, while GPT-3 has 175 billion.

- Layer Structure: Different architectures (e.g., transformer-based models) may have varying memory requirements due to their layer designs.

2. Quantization Precision

- Quantization reduces the precision of the model weights and activations to save memory and computational resources.

- Common quantization precisions include:

  - FP32 (Full Precision): 4 bytes per parameter. Requires the most memory but offers the highest accuracy.

  - FP16: 2 bytes per parameter. Reduces memory usage by half compared to FP32.

  - INT8: 1 byte per parameter. Further reduces memory usage but can lead to some loss of precision.

- To estimate the memory for the weights, multiply the number of parameters (or weights) by the number of bytes per parameter at the chosen precision.

For example:

- 1 billion parameters in FP16 would require \(1 \times 10^9 \times 2 = 2 \text{ billion bytes}\).
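The multiplication above can be sketched in a few lines of Python. This is a minimal illustration, not a complete estimator: the names `BYTES_PER_PARAM` and `weight_bytes` are made up for this example, and only the three precisions listed above are covered.

```python
# Bytes needed to store a single parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_bytes(num_params: int, precision: str) -> int:
    """Return the memory needed for the weights alone, in bytes."""
    return num_params * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    # 1 billion parameters at each precision, shown in decimal GB (10^9 bytes).
    for precision in BYTES_PER_PARAM:
        gb = weight_bytes(1_000_000_000, precision) / 1e9
        print(f"1B parameters at {precision}: {gb:.0f} GB")
```

This counts the weights only; activations and overhead come on top.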

3. Memory for Activations

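- Besides the model parameters, you need memory to store activations during inference.

- For a typical transformer-based model, a rough and conservative rule of thumb is to assume that the size of the activations is about equal to the number of parameters. The actual figure depends on batch size and sequence length.

- Therefore, if your model has \(N\) parameters, budget for an additional \(N\) values for activations, stored at the same number of bytes per value as the weights.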

4. Memory Overhead

- There will be additional memory required for overheads such as:

  - Temporary buffers used during inference.

  - Optimizer states (if training).

  - CUDA/ROCm device memory (for GPU computation).

5. Device-Specific Memory Constraints

- Different hardware devices have different memory capacities. For example, consumer GPUs typically offer less memory (VRAM) than the system RAM available to the CPU.

- Ensure that the total required memory does not exceed the available memory on your device.
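Turning the check around gives a quick way to see what a given device could hold. The sketch below is only a rough illustration: it counts the weights alone, the function names are invented for this example, and the 24 GB device is a hypothetical figure, not a specific product.

```python
def fits_on_device(required_bytes: int, device_bytes: int) -> bool:
    """True if the estimated requirement does not exceed the device memory."""
    return required_bytes <= device_bytes


def max_params_for_device(device_bytes: int, bytes_per_param: int) -> int:
    """Largest parameter count whose weights alone fit into device_bytes."""
    return device_bytes // bytes_per_param


if __name__ == "__main__":
    device = 24_000_000_000  # hypothetical device with 24 GB (decimal)
    # Weights only, FP16 (2 bytes per parameter).
    print(max_params_for_device(device, 2))
    # Does a 2 GB requirement fit on that device?
    print(fits_on_device(2_000_000_000, device))
```

Because activations and overhead are left out here, the real limit is lower than what `max_params_for_device` returns.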

Example Calculation

Let’s consider a hypothetical LLM with:

- Number of Parameters: \(N = 1 \times 10^9\) (1 billion)

- Precision: FP16 (2 bytes per value)

Memory Requirements:

- Model Parameters:

\[ N \times 2 = 1 \times 10^9 \times 2 = 2 \text{ billion bytes} = 2 \text{ GB} \]

- Activations:

\[ N \times 2 = 1 \times 10^9 \times 2 = 2 \text{ billion bytes} = 2 \text{ GB} \]

- Overhead:

  - Assume an overhead of about \(N \div 8\) bytes (a rough estimate, as this can vary):

\[ N \div 8 = 1 \times 10^9 \div 8 = 125 \text{ million bytes} = 125 \text{ MB} \]

Total Memory Required:

\[ 2 \text{ GB (parameters)} + 2 \text{ GB (activations)} + 125 \text{ MB (overhead)} = 4.125 \text{ GB} \]
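The same calculation as a small Python sketch. It simply encodes the rule of thumb used in this example (activations as large as the weights, overhead of N ÷ 8 bytes); the function name and the dictionary keys are invented for illustration, and real requirements can differ considerably.

```python
def estimate_inference_memory(num_params: int, bytes_per_value: int) -> dict:
    """Rough inference memory estimate in bytes, per the rule of thumb above."""
    params = num_params * bytes_per_value       # model weights
    activations = num_params * bytes_per_value  # assumed equal to the weights
    overhead = num_params // 8                  # rough guess, can vary a lot
    return {
        "params": params,
        "activations": activations,
        "overhead": overhead,
        "total": params + activations + overhead,
    }


if __name__ == "__main__":
    # 1 billion parameters in FP16 (2 bytes per value).
    estimate = estimate_inference_memory(1_000_000_000, 2)
    for part, size in estimate.items():
        print(f"{part}: {size / 1e9:.3f} GB")
```

For 1 billion parameters in FP16 the total comes out at 4.125 GB, matching the sum above.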

Practical Considerations

- Parallelism: If you split the model across devices (model parallelism), the memory requirement per device will be lower, but the overall system complexity increases. Plain data parallelism keeps a full copy of the weights on every device, so it does not reduce the per-device weight memory.

- Inference vs. Training: The memory requirements for inference and training can differ significantly. Training requires additional memory for gradients and optimizer states.

Conclusion

To determine the minimum memory required to run an LLM, consider the number of parameters, quantization precision, architecture, and overheads. Use this information to estimate the total memory needed based on your specific hardware constraints.

So how much would I need for 32B parameters with FP16? Sorry, I’m too lazy to calculate it myself.

ASSISTANT

Edit: Posted as a picture, since there’s no MathML support in here.

On a local machine for a single developer, activations are probably negligible. Still, quite some metal is needed.
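A rough sketch of that calculation in Python, for anyone who wants to play with the numbers. It applies the rule of thumb from the first answer (overhead of N ÷ 8 bytes, activations as large as the weights) and adds a switch to leave the activations out. The function name and the `include_activations` parameter are made up for this example.

```python
def estimate_bytes(num_params: int, bytes_per_value: int,
                   include_activations: bool = True) -> int:
    """Rough memory estimate in bytes, following the first answer's rule of thumb."""
    params = num_params * bytes_per_value
    # Assumption from the first answer: activations are as large as the weights.
    activations = num_params * bytes_per_value if include_activations else 0
    overhead = num_params // 8  # rough guess, can vary
    return params + activations + overhead


if __name__ == "__main__":
    n = 32_000_000_000  # 32B parameters
    fp16 = 2            # bytes per value
    with_act = estimate_bytes(n, fp16, include_activations=True)
    without_act = estimate_bytes(n, fp16, include_activations=False)
    print(f"with activations:    {with_act / 1e9:.0f} GB")
    print(f"without activations: {without_act / 1e9:.0f} GB")
```

With these assumptions, 32B parameters in FP16 land at about 132 GB including activations and about 68 GB without them, so the roughly 100 GB from the tl;dr sits between the two.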

I decided to use a shared service with transparent pricing (OpenRouter). More powerful at a lower TCO.