Having looked around online, I’m seeing mixed options for hosting this: some where I need to install it myself and others that appear to be fully managed. Does anyone have an easy solution that is proven to work?

Interested as well. I just started using Groq the other day, so I was wondering if it was an option there, or if there was a way to provide models to Groq.

Found this on the Groq website, and it looks possible: GroqCloud - Groq is Fast AI Inference

Is it any good at generating code?

Groq is super fast, and they also have a free tier.

But they only host somewhat weak models for coding.

Their best is Llama 3.1 70B, I think.

So while the speed is exciting, I prefer Google Gemini 1.5 Flash. It’s almost as fast and is better than the models that Groq runs.

OpenRouter has almost any model

And they also publish stats for which apps use which models how much

They have Qwen Coder 32B

I gave it a try. In my view, Sonnet and maybe even GPT-4 are still better models. Maybe even Gemini Flash and Pro are better.

It’s a cool model if you have a super machine to run it fast locally for “free”, as you spend a fortune on a good machine to run it.

So for free models I prefer the Google models, and for paid ones the best in town are OpenAI o1-preview and Anthropic Sonnet 3.5, depending on the task.

I wonder how Haiku 3.5 compares to Google in that sense

Great suggestion. I was able to get it up and running on OpenRouter quite easily, and now I can play around with different models and see how it goes. Thanks a lot!
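For anyone who wants to compare models outside the app first, here is a minimal sketch of calling OpenRouter's OpenAI-compatible chat completions endpoint using only the Python standard library. The model slug used in the usage note and the `OPENROUTER_API_KEY` variable name are assumptions for illustration; check the OpenRouter model list for the exact IDs.

```python
import json
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a single-turn chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model: str, prompt: str, api_key: str, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    request = build_request(model, prompt, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Something like `ask("qwen/qwen-2.5-coder-32b-instruct", "Reverse a string in Python.", os.environ["OPENROUTER_API_KEY"])` should then return the reply text; swapping the first argument is enough to try a different model with the same prompt.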

I’m considering trying novita.ai, but haven’t taken the jump yet. They seem reasonably priced.

I took a look around that yesterday and couldn’t really understand what I needed to do lol. It would be great to get your feedback if you do try it.

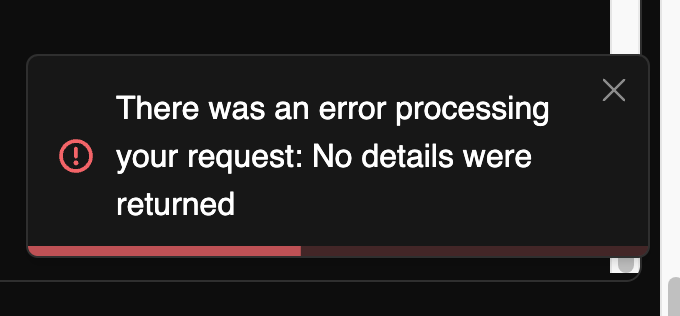

Having got this up and running on OpenRouter, it’s been working well. It’s fast, and the responses are not as good as Sonnet’s, by quite a margin, but there’s a massive price difference! I am finding it throws this error every so often, and I am wondering if it’s some kind of rate limit, network issue, or timeout setting.
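One way to narrow down whether an intermittent failure is a rate limit, a network problem, or a timeout is to wrap the call in a retry loop that logs what it caught. This is a generic sketch, not how Ottodev handles errors internally; the set of status codes treated as retryable and the helper names are assumptions.

```python
import time
import urllib.error

# Assumed set of HTTP statuses worth retrying (429 = rate limited).
RETRYABLE_STATUS = {408, 429, 500, 502, 503, 504}


def is_retryable(exc: Exception) -> bool:
    """Return True for rate limits, server errors, network errors and timeouts."""
    # HTTPError is a subclass of URLError, so it must be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        return exc.code in RETRYABLE_STATUS
    return isinstance(exc, (urllib.error.URLError, TimeoutError))


def call_with_backoff(fn, max_attempts=4, base_delay=1.0, sleep=time.sleep, log=print):
    """Call fn(), retrying retryable failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception as exc:
            # Give up on the last attempt or on errors retrying cannot fix.
            if attempt == max_attempts or not is_retryable(exc):
                raise
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            log(f"attempt {attempt} failed ({exc!r}); retrying in {delay:.1f}s")
            sleep(delay)
```

If the log shows mostly HTTP 429, it is a rate limit; `URLError` or `TimeoutError` entries point at the network or a timeout setting instead.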

I had to edit ‘app/utils/constants.ts’ and set DEFAULT_MODEL to the model I’m using externally instead of the default Claude. Otherwise, I would get the same error message you have. It seems like Ottodev has some issues connecting to an HTTPS host when the server instance is served over HTTP, since it defaults to the aforementioned DEFAULT_MODEL.

I can see that the model is working on the server, but I’m not getting any output in Ottodev. It works great when I’m using Lobe Chat. Really quick… Well, I configured 2x3090 so it should be fast.

Setting up everything on novita.ai was really straightforward and easy. When I added the Ollama port (11434) to the exposed HTTP ports, it generated an HTTPS URL for me, which I added to my .env.local.
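Before pointing the app at a generated URL like that, it can help to confirm the remote Ollama instance answers on it. A minimal sketch, assuming the URL is a plain Ollama base URL: it queries Ollama's `/api/tags` endpoint, which lists the models pulled on the server. The helper names are hypothetical.

```python
import json
import urllib.request


def tags_url(base_url: str) -> str:
    """Return the /api/tags URL for an Ollama base URL, tolerating a trailing slash."""
    return base_url.rstrip("/") + "/api/tags"


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_models(base_url: str, timeout: float = 10.0) -> list[str]:
    """Fetch the models available on a remote Ollama instance."""
    with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling `list_models(...)` with the same HTTPS URL that goes into .env.local should return the model you pulled; an empty list or a connection error means the problem is on the hosting side rather than in the app.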

I have tried Groq with Cline, but it runs into rate limits immediately. I would love it if Groq had a paid option, but that is still not available.

I’ve been using it with OpenRouter reliably and generally get better results with the 72B Instruct, and Llama 3.3 70B is also pretty good. I like that most models are available through OpenRouter and get updated quickly (same-day release for most models). I compared a bunch of options and they also had the best price (just not as fast as Groq), because it’s more of a marketplace for compute.

The only downside is that free models don’t seem to work for me through them, and it can be a bit daunting with all the options.

P.S. If anyone knows of a better price for a better service, please let me know!

I prefer the Google models for free ones.

We have Gemini 1206 and Gemini 2.0 already.

Both worked well on small tasks.

Gemini 2.0 is super fast