Inception Labs (Mercury Coder) First Impressions

Let me start by saying that Inception Labs’ new AI platform, Mercury Coder, is amazing! But it’s no silver bullet. While it delivers jaw-dropping performance in specific areas, the ecosystem feels a bit “beta” (which is fine for an MVP) and inconsistent (a simple fix, but one that would be a huge help).

First, the elephant in the room:

- Discovery is frustrating. Searching “Mercury Coder” yields unrelated GitHub results.

- Inconsistent branding: The UI references “inception,” the PWA installs as “MySite,” and documentation is sparse.

(That said, the PWA itself is sleek – lightweight web apps done right are always a win.)

Pros

- As good as ChatGPT and Claude Sonnet on one-shots

- Blazing-fast responses (5-10x faster)

- 5-10x cheaper than traditional LLMs

- Remarkably good at one-shots (first attempts)

- Mesmerizing to see the “Diffusion Effect”

Cons

- Very beta-feeling ecosystem

- Bad at making changes to existing code

- Limited by lack of tools, “thinking”, prompt enhancement, etc.

- No advanced options (e.g., custom system prompts)

- No public API available yet

Usage

Mercury Coder was very good at one-shotting simple code, game examples, etc. I was able to create several “functioning” games, and it did so in SECONDS! Amazing. However, getting the AI to add features or fix bugs proved nearly impossible and simply frustrating.

Conversation was interesting, though. It accepts no user system prompt and does no “reasoning,” so it failed the classic “How many R’s are in the word STRAWBERRY?” test, answering:

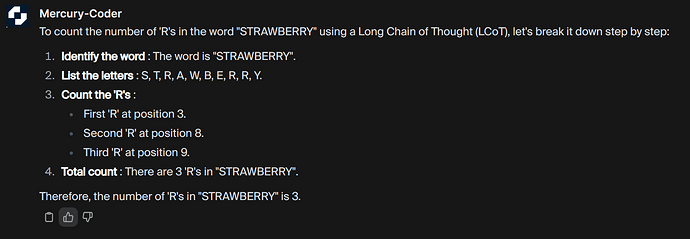

But simply telling it to count and use “Chain of Thought” reasoning, with the prompt “COUNT the numbers of R’s in STRAWBERRY. Use LCoT (Long Chain of Thought) and confirm your answer BEFORE giving it”, resulted in success:

Take from this what you will, but it’s very interesting.
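For reference, the correct answer is three. A deterministic check in plain Python (nothing Mercury-specific; `count_letter` is just an illustrative helper) spells the word out the same way the step-by-step prompt asks the model to:

```python
def count_letter(word: str, letter: str) -> int:
    """Case-insensitive count of `letter` in `word`."""
    return word.lower().count(letter.lower())


word = "STRAWBERRY"
# Walk the word letter by letter and note where each R sits,
# the way a chain-of-thought answer works through it.
positions = [i for i, ch in enumerate(word) if ch == "R"]
print(positions)                # [2, 7, 8]
print(count_letter(word, "r"))  # 3
```

Listing the positions first is the code equivalent of "confirm your answer BEFORE giving it": the final count can be checked against an explicit intermediate result.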

Final Thoughts

I don’t think Inception Labs’ Mercury Coder is “the solution,” but I don’t think traditional LLMs are either. I think the solution will be a MoE (mixture-of-experts) approach with a “system” around it. This tool would be useful for dumping out the initial response or reworking the baseline, whereas using diffs with a traditional LLM for iterations, small changes, or improvements would likely produce a better outcome.
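To make the “diffs” idea concrete: instead of regenerating a whole file, the iterating model returns only a patch. A minimal sketch using Python’s standard `difflib` shows how small such a patch is; the file name `game.py` and the edit itself are hypothetical.

```python
import difflib

# A hypothetical file produced by a fast one-shot generation
original = """def greet(name):
    print("Hello " + name)
""".splitlines(keepends=True)

# The same file after a small requested fix (switch to an f-string)
revised = """def greet(name):
    print(f"Hello {name}")
""".splitlines(keepends=True)

# The unified diff carries only the edited line plus a little context,
# not a regenerated copy of the whole file
diff = list(difflib.unified_diff(original, revised, fromfile="game.py", tofile="game.py"))
print("".join(diff))

# Count the actual removed/added lines, skipping the ---/+++ file headers
changed = [
    line for line in diff
    if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
]
print(len(changed))
```

On a real project the gap grows with file size: a one-line fix stays a one-line patch, while a full regeneration risks touching code that was already working.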

The LLaDA Connection (Why This Matters)

Inception Labs appears to be aligned with the groundbreaking LLaDA framework, the first open-source diffusion-based LLM (8B parameters) rivaling LLaMA3 8B. Their “dLLM” (diffusion LLM) terminology matches LLaDA’s approach:
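LLaDA generates text by starting from a fully masked sequence and, over a fixed number of steps, predicting every masked token at once, keeping the most confident predictions and re-masking the rest (low-confidence remasking). That is one plausible explanation for the “Diffusion Effect” in the Pros list, but Inception hasn’t published how Mercury decodes, so treat this as an assumption. The toy sketch below shows only the decoding schedule; `toy_predictor` is a hypothetical stand-in that already knows the target and assigns random confidences, where a real dLLM would run a transformer.

```python
import random

MASK = "[MASK]"


def toy_predictor(seq, target, rng):
    """Hypothetical mask predictor: for every masked position, propose a
    token plus a confidence score (here just a random number)."""
    return {i: (target[i], rng.random()) for i, tok in enumerate(seq) if tok == MASK}


def diffusion_decode(target, steps=4, seed=0):
    rng = random.Random(seed)
    seq = [MASK] * len(target)  # start from a fully masked sequence
    history = [" ".join(seq)]
    for step in range(steps):
        proposals = toy_predictor(seq, target, rng)
        if not proposals:
            break
        # Unmask an even share of the remaining positions this step:
        # keep the k most confident predictions, leave the rest masked
        remaining_steps = steps - step
        k = -(-len(proposals) // remaining_steps)  # ceiling division
        best = sorted(proposals, key=lambda i: proposals[i][1], reverse=True)[:k]
        for i in best:
            seq[i] = proposals[i][0]
        history.append(" ".join(seq))
    return seq, history


tokens = "def add ( a , b ) : return a + b".split()
final, history = diffusion_decode(tokens, steps=4)
for line in history:
    print(line)
```

Each printed line fills in tokens scattered across the whole sequence rather than left to right, which is the visual effect the Mercury UI shows while it generates.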

Key Context:

TL;DR: So, is it a “gimmick” or just more AI hype? Maybe, but I personally think it’s another tool to add to the larger “system” that will lead to functional AGI. On its own, though, I don’t think it’s “the solution.” Once the API is available, I look forward to testing it with Bolt.diy (as well as Cline, etc.) and seeing it propel the project (and others) forward. Just think of the potential of using this in an Architect-style (multi-LLM) setup: generate the initial response, hand it off to a reasoner/planner, then hand it to another model to refine. The potential is simply amazing and has my mind churning, but that’s just my two cents.
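The Architect-style flow described above could be wired roughly like this. It’s a minimal sketch, not a real integration: the three model functions are hypothetical stubs (no Mercury, Bolt.diy, or Cline API is used) standing in for a fast diffusion drafter, a reasoning planner, and a refining model.

```python
from typing import Callable

# Hypothetical model interface: takes a prompt, returns text.
# Real clients would be plugged in here once an API is available.
Model = Callable[[str], str]


def architect_pipeline(task: str, drafter: Model, planner: Model, refiner: Model) -> dict[str, str]:
    """Draft fast, plan with a reasoner, then refine with a second model."""
    draft = drafter(f"Write a first version of: {task}")
    plan = planner(f"Review this draft and list concrete fixes:\n{draft}")
    final = refiner(f"Apply these fixes as small edits.\nPlan:\n{plan}\nDraft:\n{draft}")
    return {"draft": draft, "plan": plan, "final": final}


# Stub models so the sketch runs without any API keys
def fast_drafter(prompt: str) -> str:
    return "def add(a, b): return a - b"  # quick but buggy first attempt


def reasoner(prompt: str) -> str:
    return "1. add() subtracts; use + instead"


def refiner(prompt: str) -> str:
    return "def add(a, b): return a + b"


result = architect_pipeline("an add function", fast_drafter, reasoner, refiner)
print(result["final"])  # def add(a, b): return a + b
```

Keeping each stage behind the same `Model` signature means any stage could be swapped (e.g. a diffusion model for drafting, a traditional LLM producing diffs for refinement) without changing the pipeline itself.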

Try Mercury Coder: https://chat.inceptionlabs.ai/