What is the easiest/best way to import an existing repo into the locally running oTToDev?

It's a work in progress and not done yet; for now, the only way is to copy and paste.

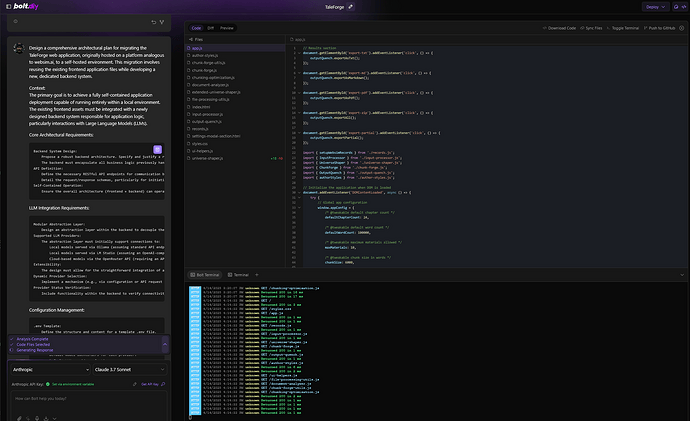

Thanks. I was just wondering whether it imports the way bolt.new appears to do it: https://bolt.new/~/github.com/username/reponame

Thanks for this info.

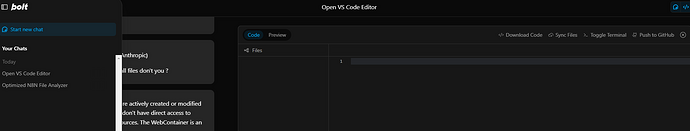

Hello,

I’m not sure I understand the “Sync files” feature.

Since I could select a directory (and saw the message “Bolt will see your files”), I thought it could sync with GitHub?

Also, in the terminal, the ls command shows an empty /project folder…

What am I missing here?

What it means is that it exports to a folder.

So it's kind of like a download.

I'm not sure whether oTToDev writes the files out continuously or only once; I haven't checked yet.

But in my fork (I plan to bring that feature over if needed), file sync continuously writes the AI's changes, along with the version, to the folder.

So it's a one-way sync: from the app to the folder, but not from the folder to the app.

But syncing back into the app is coming.
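To make the one-way behaviour concrete, here is a minimal Python sketch of the idea. It is not oTToDev's actual sync code (that runs in the browser); the function name `sync_to_folder` and the dict of path-to-content strings are hypothetical stand-ins for the app's in-memory files.

```python
import tempfile
from pathlib import Path


def sync_to_folder(files: dict[str, str], target: Path) -> list[Path]:
    """Write every in-memory file into `target`, overwriting existing copies.

    One-way only: nothing under `target` is ever read back into `files`.
    """
    written = []
    for rel_path, content in files.items():
        dest = target / rel_path
        dest.parent.mkdir(parents=True, exist_ok=True)  # create subfolders as needed
        dest.write_text(content, encoding="utf-8")
        written.append(dest)
    return written


if __name__ == "__main__":
    app_files = {"src/main.ts": "console.log('hi')\n", "README.md": "# demo\n"}
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        sync_to_folder(app_files, root)
        # A local edit in the folder does not reach app_files...
        (root / "README.md").write_text("edited locally\n", encoding="utf-8")
        # ...and the next sync from the app overwrites it.
        sync_to_folder(app_files, root)
        print((root / "README.md").read_text(encoding="utf-8"))
```

This is why editing files in the synced folder has no effect inside the app: data only flows out of it.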

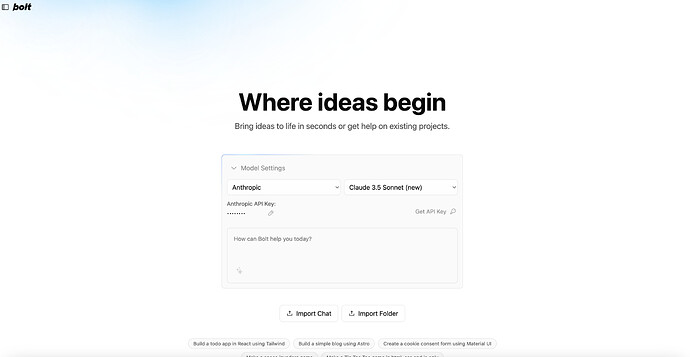

I’m very interested in adding this functionality. It will show up on the roadmap for sure, but may not be immediate. Commercial Bolt seems to be using its StackBlitz interface when public GitHub repos are imported like this, and access to private repos will require secure authentication via GitHub OAuth before it would be possible.

Yeah, I think two-way sync with GitHub is more desirable for me than two-way sync with a folder.

It makes more sense for us.

So now that I have PRs/code for importing/exporting chats and importing/exporting folders, the next step is pulling/pushing GitHub repos.

There are PRs exploring variations of it; I kind of like the one that uses device flow.

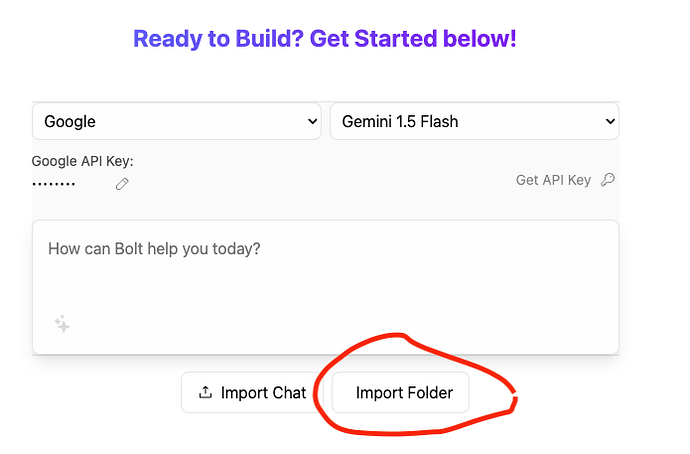

This feature “Load local projects into the app (@wonderwhy-er)” seems to have been completed but I’m not sure how to find/use it. Any ideas?

Thanks for the info and quick reply. I just updated to the newest version of Bolt but can’t see it, so I think I need to try again.

Is there a limit on the size of the project you can upload, e.g. 20-25 MB?

It depends on the model; it's about tokens and the model's context size.

We don't have any context-size management features in place yet.

That's one of the next things to work on.

Thanks. Any model suggestions for working with a project of that size?

Google's models have the biggest context windows; if they don’t work, none will yet.
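Since the limit is about tokens rather than megabytes, a quick back-of-the-envelope check can help. The sketch below assumes the common rule of thumb of roughly 4 bytes of text per token (real tokenizers vary, especially on code) and treats the model's context size and the reserved headroom as parameters you fill in yourself. The helper names and the skip list are hypothetical, not part of oTToDev.

```python
import math
from pathlib import Path

# Assumption: rough heuristic of ~4 bytes of source text per token.
BYTES_PER_TOKEN = 4.0
# Hypothetical skip list: folders that usually should not be sent to a model.
SKIP_DIRS = {"node_modules", ".git", "dist", "build"}


def estimate_tokens(num_bytes: int, bytes_per_token: float = BYTES_PER_TOKEN) -> int:
    """Round up so the estimate errs on the large side."""
    return math.ceil(num_bytes / bytes_per_token)


def project_bytes(root: Path) -> int:
    """Sum file sizes under `root`, ignoring anything inside SKIP_DIRS."""
    total = 0
    for path in root.rglob("*"):
        if any(part in SKIP_DIRS for part in path.relative_to(root).parts):
            continue
        if path.is_file():
            total += path.stat().st_size
    return total


def fits_in_context(num_bytes: int, context_tokens: int, reserve_tokens: int = 8_000) -> bool:
    """Leave `reserve_tokens` for the system prompt, chat history and the reply."""
    return estimate_tokens(num_bytes) + reserve_tokens <= context_tokens


if __name__ == "__main__":
    size = 20 * 1024 * 1024  # a 20 MB project
    print(estimate_tokens(size))  # 5242880
```

By this estimate a 20 MB project is roughly 5 million tokens, far more than typical model context windows, so excluding dependencies and build output (or sending only the relevant files) is usually necessary.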

Awesome, thanks again. I’ll test them out and let you know.

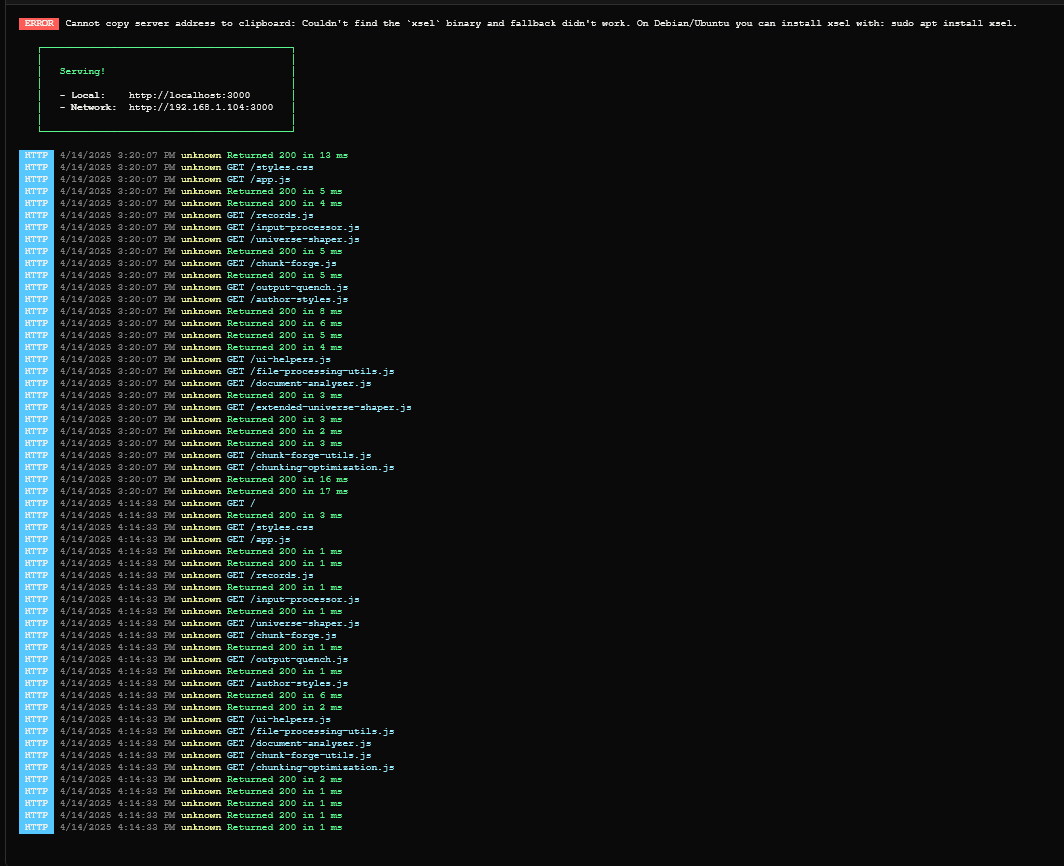

Sorry guys, newbie here. Where do the uploaded files go? I see the ~/project path in the terminal, but I can't find it on my Mac. Thank you…

Hi, the files are stored in memory in the web container; you can only access them in the editor via the tree view. You can download them or sync them to a local folder.

Changes made in the local folder will not update the files in the web container. In other words, the sync only works one way for the moment. The team is working on two-way sync, but we need time.

I hope this helps you a bit.

Br,

Stijnus