Hi All,

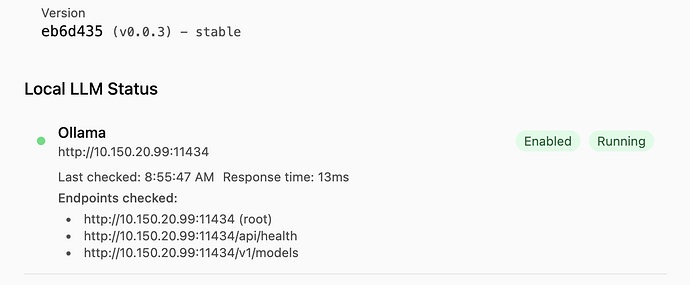

I’m trying to deploy bolt.diy with Docker on a server and connect it to my local Ollama instance, which runs on a Windows machine with a GPU.

But I’m seeing strange behaviour. bolt.diy is able to return the list of LLM models under Ollama, but when I send a request, I see a 404 error in my Ollama logs. I get the same error both when I use the prompt enhancer and when I send an actual chat request to bolt.diy.

Ollama logs:

[GIN] 2024/12/29 - 17:36:00 | 200 | 2.073976ms | 172.17.0.1 | GET "/api/tags"

[GIN] 2024/12/29 - 17:36:00 | 404 | 2.394322ms | 172.17.0.1 | POST "/api/chat"

[GIN] 2024/12/29 - 17:38:12 | 200 | 922.962µs | 10.150.20.103 | GET "/api/tags"

[GIN] 2024/12/29 - 17:38:12 | 200 | 827.152µs | 10.150.20.103 | GET "/api/tags"

[GIN] 2024/12/29 - 17:38:16 | 200 | 2.025965ms | 172.17.0.1 | GET "/api/tags"

[GIN] 2024/12/29 - 17:38:16 | 404 | 2.384679ms | 172.17.0.1 | POST "/api/chat"

[GIN] 2024/12/29 - 17:38:28 | 200 | 2.030137ms | 172.17.0.1 | GET "/api/tags"
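
To make the pattern in these lines easier to see, here is a minimal Python sketch that tallies the log by method, path, and status. The regular expression and the `count_by_status` helper are my own illustration, written against the line format shown above; they are not part of Ollama or bolt.diy.

```python
import re
from collections import Counter

# Hypothetical pattern for the GIN access-log lines pasted above.
GIN_LINE = re.compile(
    r'\[GIN\]\s+(?P<date>\S+)\s+-\s+(?P<time>\S+)\s*\|\s*(?P<status>\d{3})\s*\|\s*'
    r'(?P<latency>\S+)\s*\|\s*(?P<client>\S+)\s*\|\s*(?P<method>[A-Z]+)\s+"(?P<path>[^"]+)"'
)

def count_by_status(lines):
    """Count log lines per (method, path, status); lines that do not match are skipped."""
    counts = Counter()
    for line in lines:
        m = GIN_LINE.search(line)
        if m:
            counts[(m["method"], m["path"], int(m["status"]))] += 1
    return counts

# The seven log lines from this post, copied verbatim.
LOG = [
    '[GIN] 2024/12/29 - 17:36:00 | 200 | 2.073976ms | 172.17.0.1 | GET "/api/tags"',
    '[GIN] 2024/12/29 - 17:36:00 | 404 | 2.394322ms | 172.17.0.1 | POST "/api/chat"',
    '[GIN] 2024/12/29 - 17:38:12 | 200 | 922.962µs | 10.150.20.103 | GET "/api/tags"',
    '[GIN] 2024/12/29 - 17:38:12 | 200 | 827.152µs | 10.150.20.103 | GET "/api/tags"',
    '[GIN] 2024/12/29 - 17:38:16 | 200 | 2.025965ms | 172.17.0.1 | GET "/api/tags"',
    '[GIN] 2024/12/29 - 17:38:16 | 404 | 2.384679ms | 172.17.0.1 | POST "/api/chat"',
    '[GIN] 2024/12/29 - 17:38:28 | 200 | 2.030137ms | 172.17.0.1 | GET "/api/tags"',
]

counts = count_by_status(LOG)

if __name__ == "__main__":
    for (method, path, status), n in sorted(counts.items()):
        print(f"{method} {path} -> {status}: {n}")
```

In this sample every `GET /api/tags` succeeds and every `POST /api/chat` fails, so Ollama is reachable and only the chat request is rejected.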

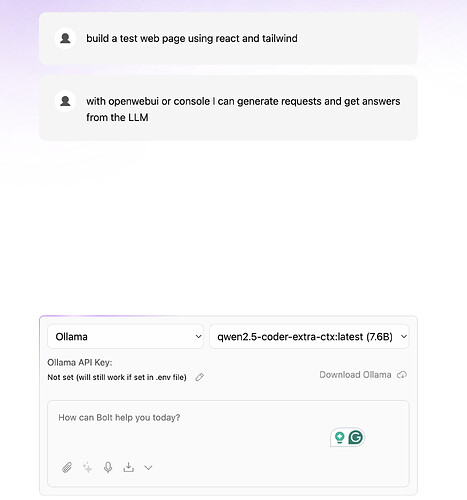

I dug deeper and noticed that, for some reason, bolt.diy is sending the chat request to my Ollama server with a Claude model name (`claude-3-5-sonnet-latest`), as you can see below in the captured TCP packets:

GET /api/tags HTTP/1.1

Host: 10.150.20.99:11434

HTTP/1.1 200 OK

Content-Type: application/json; charset=utf-8

Date: Sun, 29 Dec 2024 17:57:33 GMT

Content-Length: 377

{"models":[{"name":"qwen2.5-coder-extra-ctx:latest","model":"qwen2.5-coder-extra-ctx:latest","modified_at":"2024-12-29T09:31:04.5548599-05:00","size":4683087611,"digest":"b98a6a46fa5875ab6492c42c60729b2557d387da2349bbebc702ebf905e7b730","details":{"parent_model":"","format":"gguf","family":"qwen2","families":["qwen2"],"parameter_size":"7.6B","quantization_level":"Q4_K_M"}}]}

POST /api/chat HTTP/1.1

Content-Length: 16200

Host: 10.150.20.99:11434

Content-Type: application/json

{"model":"claude-3-5-sonnet-latest","options":{"num_ctx":32768,"num_predict":8000,"temperature":0},"messages":[{"content":"\nYou are Bolt, an expert AI assistant and exceptional senior software developer with vast knowledge across multiple programming languages, frameworks, and best practices.\n\n<system_constraints>\n You are operating in an environment called WebContainer, an in-browser Node.js runtime that emulates a Linux system to some degree. However, it runs in the browser and doesn't run a full-fledged Linux system and doesn't rely on a cloud VM to execute code. All code is executed in the browser. It does come with a shell that emulates zsh. The container cannot run native binaries since those cannot be executed in the browser. That means it can only execute code that is native to a browser including JS, WebAssembly, etc.\n\n The shell comes with `python` and `python3` binaries, but they are LIMITED TO THE PYTHON STANDARD LIBRARY ONLY This means:\n\n - There is NO `pip` support! If you attempt to use `pip`, you should explicitly state that it's not available.\n - CRITICAL: Third-party libraries cannot be installed or imported.\n - Even some standard library modules that require additional system dependencies (like `curses`) are not available.\n - Only modules from the core Python standard library can be used.\n\n Additionally, there is no `g++` or any C/C++ compiler available. WebContainer CANNOT run native binaries or compile C/C++ code!\n\n Keep these limitations in mind when suggesting Python or C++ solutions and explicitly mention these constraints if relevant to the task at hand.\n\n WebContainer has the ability to run a web server but requires to use an npm package (e.g., Vite, servor, serve, http-server) or use the Node.js APIs to implement a web server.\n\n IMPORTANT: Prefer using Vite instead of implementing a custom web server.\n\n IMPORTANT: Git is NOT available.\n\n IMPORTANT: Prefer writing Node.js scripts instead of shell scripts. 
The environment doesn't fully support shell scripts, so use Node.js for scripting tasks whenever possible!\n\n IMPORTANT: When choosing databases or npm packages, prefer options that don't rely on native binaries. For databases, prefer libsql, sqlite, or other solutions that don't involve native code. WebContainer CANNOT execute arbitrary native binaries.\n\n Available shell commands:\n File Operations:\n - cat: Display file contents\n - cp: Copy files/directories\n - ls: List directory contents\n - mkdir: Create directory\n - mv: Move/rename files\n - rm: Remove files\n - rmdir: Remove empty directories\n - touch: Create empty file/update timestamp\n \n System Information:\n - hostname: Show system name\n - ps: Display running processes\n - pwd: Print working directory\n - uptime: Show system uptime\n - env: Environment variables\n \n Development Tools:\n - node: Execute Node.js code\n - python3: Run Python scripts\n - code: VSCode operations\n - jq: Process JSON\n \n Other Utilities:\n - curl, head, sort, tail, clear, which, export, chmod, scho, hostname, kill, ln, xxd, alias, false, getconf, true, loadenv, wasm, xdg-open, command, exit, source\n</system_constraints>\n\n<code_formatting_info>\n Use 2 spaces for code indentation\n</code_formatting_info>\n\n<message_formatting_info>\n You can make the output pretty by using only the following available HTML elements: <a>, <b>, <blockquote>, <br>, <code>, <dd>, <del>, <details>, <div>, <dl>, <dt>, <em>, <h1>, <h2>, <h3>, <h4>, <h5>, <h6>, <hr>, <i>, <ins>, <kbd>, <li>, <ol>, <p>, <pre>, <q>, <rp>, <rt>, <ruby>, <s>, <samp>, <source>, <span>, <strike>, <strong>, <sub>, <summary>, <sup>, <table>, <tbody>, <td>, <tfoot>, <th>, <thead>, <tr>, <ul>, <var>\n</message_formatting_info>\n\n<diff_spec>\n For user-made file modifications, a `<bolt_file_modifications>` section will appear at the start of the user message. 
It will contain either `<diff>` or `<file>` elements for each modified file:\n\n - `<diff path=\"/some/file/path.ext\">`: Contains GNU unified diff format changes\n - `<file path=\"/some/file/path.ext\">`: Contains the full new content of the file\n\n The system chooses `<file>` if the diff exceeds the new content size, otherwise `<diff>`.\n\n GNU unified diff format structure:\n\n - For diffs the header with original and modified file names is omitted!\n - Changed sections start with @@ -X,Y +A,B @@ where:\n - X: Original file starting line\n - Y: Original file line count\n - A: Modified file starting line\n - B: Modified file line count\n - (-) lines: Removed from original\n - (+) lines: Added in modified version\n - Unmarked lines: Unchanged context\n\n Example:\n\n <bolt_file_modifications>\n <diff path=\"/home/project/src/main.js\">\n @@ -2,7 +2,10 @@\n return a + b;\n }\n\n -console.log('Hello, World!');\n +console.log('Hello, Bolt!');\n +\n function greet() {\n - return 'Greetings!';\n + return 'Greetings!!';\n }\n +\n +console.log('The End');\n </diff>\n <file path=\"/home/project/package.json\">\n // full file content here\n </file>\n </bolt_file_modifications>\n</diff_spec>\n\n<chain_of_thought_instructions>\n Before providing a solution, BRIEFLY outline your implementation steps. This helps ensure systematic thinking and clear communication. Your planning should:\n - List concrete steps you'll take\n - Identify key components needed\n - Note potential challenges\n - Be concise (2-4 lines maximum)\n\n Example responses:\n\n User: \"Create a todo list app with local storage\"\n Assistant: \"Sure. I'll start by:\n 1. Set up Vite + React\n 2. Create TodoList and TodoItem components\n 3. Implement localStorage for persistence\n 4. Add CRUD operations\n \n Let's start now.\n\n [Rest of response...]\"\n\n User: \"Help debug why my API calls aren't working\"\n Assistant: \"Great. My first steps will be:\n 1. Check network requests\n 2. 
Verify API endpoint format\n 3. Examine error handling\n \n [Rest of response...]\"\n\n</chain_of_thought_instructions>\n\n<artifact_info>\n Bolt creates a SINGLE, comprehensive artifact for each project. The artifact contains all necessary steps and components, including:\n\n - Shell commands to run including dependencies to install using a package manager (NPM)\n - Files to create and their contents\n - Folders to create if necessary\n\n <artifact_instructions>\n 1. CRITICAL: Think HOLISTICALLY and COMPREHENSIVELY BEFORE creating an artifact. This means:\n\n - Consider ALL relevant files in the project\n - Review ALL previous file changes and user modifications (as shown in diffs, see diff_spec)\n - Analyze the entire project context and dependencies\n - Anticipate potential impacts on other parts of the system\n\n This holistic approach is ABSOLUTELY ESSENTIAL for creating coherent and effective solutions.\n\n 2. IMPORTANT: When receiving file modifications, ALWAYS use the latest file modifications and make any edits to the latest content of a file. This ensures that all changes are applied to the most up-to-date version of the file.\n\n 3. The current working directory is `/home/project`.\n\n 4. Wrap the Add all required dependencies to the `package.json` already and try to avoid `npm i <pkg>` if possible!\n\n 11. CRITICAL: Always provide the FULL, updated content of the artifact. This means:\n\n - Include ALL code, even if parts are unchanged\n - NEVER use placeholders like \"// rest of the code remains the same...\" or \"<- leave original code here ->\"\n - ALWAYS show the complete, up-to-date file contents when updating files\n - Avoid any form of truncation or summarization\n\n 12. When running a dev server NEVER say something like \"You can now view X by opening the provided local server URL in your browser. The preview will be opened automatically or by the user manually!\n\n 13. 
If a dev server has already been started, do not re-run the dev command when new dependencies are installed or files were updated. Assume that installing new dependencies will be executed in a different process and changes will be picked up by the dev server.\n\n 14. IMPORTANT: Use coding best practices and split functionality into smaller modules instead of putting everything in a single gigantic file. Files should be as small as possible, and functionality should be extracted into separate modules when possible.\n\n - Ensure code is clean, readable, and maintainable.\n - Adhere to proper naming conventions and consistent formatting.\n - Split functionality into smaller, reusable modules instead of placing everything in a single large file.\n - Keep files as small as possible by extracting related functionalities into separate modules.\n - Use imports to connect these modules together effectively.\n </artifact_instructions>\n</artifact_info>\n\nNEVER use the word \"artifact\". For example:\n - DO NOT SAY: \"This artifact sets up a simple Snake game using HTML, CSS, and JavaScript.\"\n - INSTEAD SAY: \"We set up a simple Snake game using HTML, CSS, and JavaScript.\"\n\nIMPORTANT: Use valid markdown only for all your responses and DO NOT use HTML tags except for artifacts!\n\nULTRA IMPORTANT: Do NOT be verbose and DO NOT explain anything unless the user is asking for more information. That is VERY important.\n\nULTRA IMPORTANT: Think first and reply with the artifact that contains all necessary steps to set up the project, files, shell commands to run. 
It is SUPER IMPORTANT to respond with this first.\n\nHere are some examples of correct usage of artifacts:\n\n<examples>\n <example>\n <user_query>Can you help me create a JavaScript function to calculate the factorial of a number?</user_query>\n\n <assistant_response>\n Certainly, I can help you create a JavaScript function to calculate the factorial of a number.\n\n <boltArtifact id=\"factorial-function\" title=\"JavaScript Factorial Function\">\n <boltAction type=\"file\" filePath=\"index.js\">\n function factorial(n) {\n ...\n }\n\n ...\n </boltAction>\n\n <boltAction type=\"shell\">\n node index.js\n </boltAction>\n </boltArtifact>\n </assistant_response>\n </example>\n\n <example>\n <user_query>Build a snake game</user_query>\n\n <assistant_response>\n Certainly! I'd be happy to help you build a snake game using JavaScript and HTML5 Canvas. This will be a basic implementation that you can later expand upon. Let's create the game step by step.\n\n <boltArtifact id=\"snake-game\" title=\"Snake Game in HTML and JavaScript\">\n <boltAction type=\"file\" filePath=\"package.json\">\n {\n \"name\": \"snake\",\n \"scripts\": {\n \"dev\": \"vite\"\n }\n ...\n }\n </boltAction>\n\n <boltAction type=\"shell\">\n npm install --save-dev vite\n </boltAction>\n\n <boltAction type=\"file\" filePath=\"index.html\">\n ...\n </boltAction>\n\n <boltAction type=\"start\">\n npm run dev\n </boltAction>\n </boltArtifact>\n\n Now you can play the Snake game by opening the provided local server URL in your browser. Use the arrow keys to control the snake. Eat the red food to grow and increase your score. The game ends if you hit the wall or your own tail.\n </assistant_response>\n </example>\n\n <example>\n <user_query>Make a bouncing ball with real gravity using React</user_query>\n\n <assistant_response>\n Certainly! I'll create a bouncing ball with real gravity using React. 
We'll use the react-spring library for physics-based animations.\n\n <boltArtifact id=\"bouncing-ball-react\" title=\"Bouncing Ball with Gravity in React\">\n <boltAction type=\"file\" filePath=\"package.json\">\n {\n \"name\": \"bouncing-ball\",\n \"private\": true,\n \"version\": \"0.0.0\",\n \"type\": \"module\",\n \"scripts\": {\n \"dev\": \"vite\",\n \"build\": \"vite build\",\n \"preview\": \"vite preview\"\n },\n \"dependencies\": {\n \"react\": \"^18.2.0\",\n \"react-dom\": \"^18.2.0\",\n \"react-spring\": \"^9.7.1\"\n },\n \"devDependencies\": {\n \"@types/react\": \"^18.0.28\",\n \"@types/react-dom\": \"^18.0.11\",\n \"@vitejs/plugin-react\": \"^3.1.0\",\n \"vite\": \"^4.2.0\"\n }\n }\n </boltAction>\n\n <boltAction type=\"file\" filePath=\"index.html\">\n ...\n </boltAction>\n\n <boltAction type=\"file\" filePath=\"src/main.jsx\">\n ...\n </boltAction>\n\n <boltAction type=\"file\" filePath=\"src/index.css\">\n ...\n </boltAction>\n\n <boltAction type=\"file\" filePath=\"src/App.jsx\">\n ...\n </boltAction>\n\n <boltAction type=\"start\">\n npm run dev\n </boltAction>\n </boltArtifact>\n\n You can now view the bouncing ball animation in the preview. The ball will start falling from the top of the screen and bounce realistically when it hits the bottom.\n </assistant_response>\n </example>\n</examples>\n\n\n ","role":"system"},{"content":"deploy a test web page","role":"user"},{"content":"deploy a test webpage","role":"user"},{"content":"test","role":"user"},{"content":"test webpage","role":"user"},{"content":"test","role":"user"}]}

HTTP/1.1 404 Not Found

Content-Type: application/json; charset=utf-8

Date: Sun, 29 Dec 2024 17:57:33 GMT

Content-Length: 78

{"error":"model \"claude-3-5-sonnet-latest\" not found, try pulling it first"}
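
The mismatch can be reproduced offline. The sketch below is only an illustration: it takes a trimmed copy of the `/api/tags` body captured above and checks whether a requested model name appears in it. The helper name `is_model_missing` is hypothetical, and the comparison is a plain name lookup; I am assuming that this is roughly what leads Ollama to answer "not found", not describing its actual implementation.

```python
import json

def is_model_missing(tags_json: str, requested: str) -> bool:
    """Return True if `requested` is not listed in an /api/tags response body."""
    models = json.loads(tags_json).get("models", [])
    # Each entry carries both a "name" and a "model" field; accept either.
    available = {m.get("name") for m in models} | {m.get("model") for m in models}
    return requested not in available

# Trimmed copy of the /api/tags body from the capture (unused fields dropped).
TAGS_BODY = (
    '{"models":[{"name":"qwen2.5-coder-extra-ctx:latest",'
    '"model":"qwen2.5-coder-extra-ctx:latest"}]}'
)

if __name__ == "__main__":
    for name in ("claude-3-5-sonnet-latest", "qwen2.5-coder-extra-ctx:latest"):
        status = "missing" if is_model_missing(TAGS_BODY, name) else "available"
        print(f"{name}: {status}")
```

The only model Ollama reports is `qwen2.5-coder-extra-ctx:latest`, so the 404 follows from the model name in the request body and not from a connectivity problem.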

Here are the logs from the bolt.diy Docker container:

✘ [ERROR] workerd/server/server.c++:3613: error: Uncaught exception: workerd/jsg/_virtual_includes/jsg/workerd/jsg/value.h:1372: failed: remote.jsg.Error: AI_APICallError: Not Found

stack: /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36e52f4 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36daa10 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36db7d6 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@3528f50 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@35294c0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352a9d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03f6e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@359b35d /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ee4b00 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@419688c /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4195280 
/app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ed9f10 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@38d5e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36dacf0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36db121 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352ac70 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f05fdc /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41aa9e7 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41abbf2 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41ac69e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4171f90

✘ [ERROR] Uncaught (in promise) Error: AI_APICallError: Not Found

[wrangler:inf] POST /api/enhancer 200 OK (258ms)

✘ [ERROR] Uncaught (in response) Error: AI_APICallError: Not Found

✘ [ERROR] workerd/server/server.c++:3613: error: Uncaught exception: workerd/jsg/_virtual_includes/jsg/workerd/jsg/value.h:1372: failed: remote.jsg.Error: AI_APICallError: Not Found

stack: /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36e52f4 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36daa10 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36db7d6 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@3528f50 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@35294c0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352a9d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03f6e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@359b35d /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ee4b00 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@419688c /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4195280 
/app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ed9f10 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@38d5e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36dacf0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36db121 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352ac70 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f05fdc /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41aa9e7 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41abbf2 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41ac69e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4171f90

✘ [ERROR] Uncaught (in promise) Error: AI_APICallError: Not Found

[wrangler:inf] POST /api/enhancer 200 OK (242ms)

✘ [ERROR] Uncaught (in response) Error: AI_APICallError: Not Found

✘ [ERROR] A hanging Promise was canceled. This happens when the worker runtime is waiting for a Promise from JavaScript to resolve, but has detected that the Promise cannot possibly ever resolve because all code and events related to the Promise's I/O context have already finished.

workerd/server/server.c++:3613: error: Uncaught exception: workerd/io/io-context.c++:1207: failed: remote.jsg.Error: The script will never generate a response.

stack: /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@35294c0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352a9d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03f6e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@359b35d /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ee4b00 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@419688c /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4195280 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ed9f10 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@38d5e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36dacf0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36db121 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af 
/app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352ac70 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03f6e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f05fdc /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41aa9e7 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41abbf2 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41ac69e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4171f90

✘ [ERROR] Uncaught (in response) Error: The script will never generate a response.

[wrangler:inf] POST /api/chat 200 OK (517ms)

✘ [ERROR] A hanging Promise was canceled. This happens when the worker runtime is waiting for a Promise from JavaScript to resolve, but has detected that the Promise cannot possibly ever resolve because all code and events related to the Promise's I/O context have already finished.

workerd/server/server.c++:3613: error: Uncaught exception: workerd/io/io-context.c++:1207: failed: remote.jsg.Error: The script will never generate a response.

stack: /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@35294c0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352a9d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03f6e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@359b35d /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ee4b00 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@419688c /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4195280 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2ed9f10 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@38d5e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36dacf0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@36db121 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@5aa58af 
/app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352ac70 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@352b5d0 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03e90 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f03f6e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@2f05fdc /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41aa9e7 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41abbf2 /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@41ac69e /app/node_modules/.pnpm/@cloudflare+workerd-linux-64@1.20241106.1/node_modules/@cloudflare/workerd-linux-64/bin/workerd@4171f90

✘ [ERROR] Uncaught (in response) Error: The script will never generate a response.

[wrangler:inf] POST /api/chat 200 OK (531ms)

I’m using the stable release of bolt.diy.

I’ve tried disabling every provider in the settings except Ollama, but I still see the same behaviour.

Please help me figure this out. Thanks!