I’m looking to set up DeepSeek R1 for my latest project, but I’m running into some challenges. Could anyone provide a step-by-step guide or share their experience with the setup process?

I haven't tested it myself, but I just saw this video: https://www.youtube.com/watch?v=hruG-JlLeQg

It looks straightforward: just use the LM Studio provider in bolt.diy with the R1 model.

Are you using bolt.diy to build your project? If so, Cole’s videos are a great reference point. You could also follow Vincibits' step-by-step video to get it working: https://www.youtube.com/watch?v=Sry6m4mbcac&t=611s

Hope this helps.

Thanks for chiming in here @leex279 and @TimothiousAI!

I am also going to be testing out R1 for agents and with bolt.diy soon here. Super curious to see if it lives up to the hype!

I’ve already set up everything needed to test R1 on my machine, but I’m running into one small issue that I think you guys can help me figure out. All I need is to increase the timeout on the fetch request to the model, because my computer cannot finish processing before the request times out (after a little over 5 minutes). It gets as far as 98% finished.
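For anyone hitting the same wall, here is a minimal sketch of a fetch call with a configurable client-side timeout, using the standard `AbortController`. The helper name `fetchWithTimeout`, its parameters, and the 10-minute default are hypothetical and not part of bolt.diy. The thread does not say which layer enforces the 5-minute limit, so this only helps if the abort happens on the client side and not in the model server or the HTTP client's own defaults.

```typescript
// Hypothetical helper, not part of bolt.diy: aborts a request after timeoutMs.
type FetchLike = (url: string, init?: RequestInit) => Promise<Response>;

async function fetchWithTimeout(
  url: string,
  init: RequestInit = {},
  timeoutMs: number = 10 * 60 * 1000, // assumed default: 10 minutes
  fetchImpl: FetchLike = fetch, // injectable so the helper can be tested offline
): Promise<Response> {
  const controller = new AbortController();
  // Abort the in-flight request once the deadline passes.
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    return await fetchImpl(url, { ...init, signal: controller.signal });
  } finally {
    // Always clear the timer so it does not fire after the request settles.
    clearTimeout(timer);
  }
}
```

A caller would pass the model endpoint and a larger `timeoutMs` than the current limit. Note that the timer covers the wait for the response, not a streamed body read afterwards.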

I’m really eager to be able to test this.

DeepSeek R1 is also available via OpenRouter.
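As a rough sketch of what a direct OpenRouter call could look like outside bolt.diy: the request below targets OpenRouter's OpenAI-compatible chat completions endpoint. The model slug `deepseek/deepseek-r1` is my assumption and should be checked against OpenRouter's model list, and `buildR1Request` is a hypothetical helper name.

```typescript
// OpenRouter's OpenAI-compatible chat completions endpoint.
const OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions";
// Assumed model slug; confirm it against OpenRouter's model list.
const R1_MODEL = "deepseek/deepseek-r1";

interface BuiltRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Hypothetical helper: builds the request without sending it.
function buildR1Request(apiKey: string, prompt: string): BuiltRequest {
  return {
    url: OPENROUTER_URL,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        model: R1_MODEL,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}
```

The result can be passed straight to `fetch(url, init)`. Inside bolt.diy itself, you would normally just pick the OpenRouter provider and enter the API key there.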

The context is limited, and the app I’m testing with already requires 100k tokens.

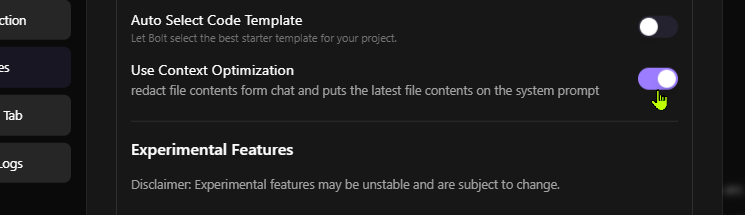

Use this PR with context optimization turned on.

You won't need more than 20k of context length.

I’ll check it out. Thank you!

Can it be made configurable? Thanks. I'm interested in keeping a 32k to 40k context so the model has more to work with. I was testing the context a couple of days ago and it worked with 32k. It was only a personal test, but it did give better performance.
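To illustrate what a configurable context budget means in practice, here is a small sketch that keeps only the most recent chat messages that fit under a token limit. This is not how the bolt.diy PR works internally. The names `ChatMessage`, `estimateTokens`, and `trimToBudget` are hypothetical, and the "about 4 characters per token" estimate is a crude assumption, not a real tokenizer.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Crude assumption: roughly 4 characters per token.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keeps the newest messages whose estimated total stays within maxTokens.
function trimToBudget(messages: ChatMessage[], maxTokens: number): ChatMessage[] {
  const kept: ChatMessage[] = [];
  let used = 0;
  // Walk from newest to oldest so the most recent turns survive.
  for (let i = messages.length - 1; i >= 0; i--) {
    const cost = estimateTokens(messages[i].content);
    if (used + cost > maxTokens) break;
    kept.unshift(messages[i]);
    used += cost;
  }
  return kept;
}
```

With a helper like this, 20k versus 32k is just a different `maxTokens` value, which is the kind of setting being asked for here.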

Can you please explain how we can use this PR? I have deployed the stable version on Cloudflare. Thank you.

It's now merged into the main branch.

The current stable version (0.0.6) already has this merged.

You need this option enabled to activate the optimization.

There is another improvement built on top of this, which gives even better output while using fewer tokens: "feat: enhanced Code Context and Project Summary Features" by thecodacus, Pull Request #1191 in stackblitz-labs/bolt.diy on GitHub.