The only related thing I could find was this post, but it doesn’t look like there’s a solution there, and I’m not entirely sure it’s a match for my problem, since I don’t know what my problem is.

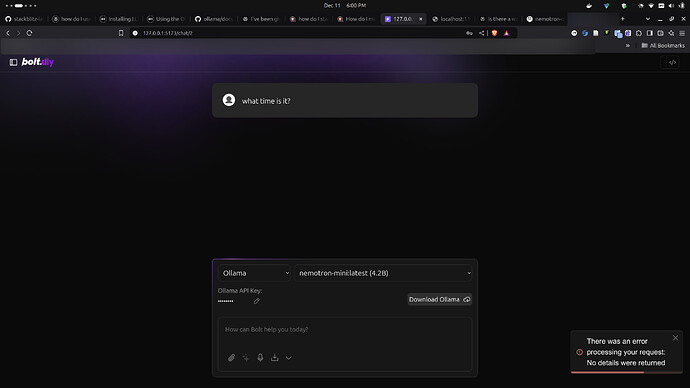

I managed to get OttoDev installed (I also have Docker and Ollama installed). OttoDev was installed as a Docker container. I see Ollama as a selection in the first (left-hand) dropdown, but there are no entries in the dropdown to its right. When I created and ran the container for OttoDev, I did not edit the contents of the .env.local file (I only renamed .env.example to .env.local and then created and ran the container). I’m seeing everywhere that you do not need an API key to use Ollama in OttoDev, so does that mean I should leave it blank? Make something up, just so there is something in there? Where do you get the value to put in there? Why isn’t it working, and how do I make it work?

I got the following output when running the container. At first it printed only the part up to the server URLs it is running on; everything starting with the first error was appended to the terminal after I opened the server URL in my browser. It mentions something about not being able to find Ollama, or something like that.

$ sudo docker run 5189b1b1b4c7

> bolt@ dev /app

> remix vite:dev "--host"

[warn] Data fetching is changing to a single fetch in React Router v7

┃ You can use the `v3_singleFetch` future flag to opt-in early.

┃ -> https://remix.run/docs/en/2.13.1/start/future-flags#v3_singleFetch

┗

➜ Local: http://localhost:5173/

➜ Network: http://172.17.0.2:5173/

Error getting Ollama models: TypeError: fetch failed

at node:internal/deps/undici/undici:13185:13

at processTicksAndRejections (node:internal/process/task_queues:95:5)

at Object.getOllamaModels [as getDynamicModels] (/app/app/utils/constants.ts:318:22)

at async Promise.all (index 0)

at Module.initializeModelList (/app/app/utils/constants.ts:389:9)

at handleRequest (/app/app/entry.server.tsx:30:3)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:340:12)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:18)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {

[cause]: Error: connect ECONNREFUSED 127.0.0.1:11434

at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)

at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {

errno: -111,

code: 'ECONNREFUSED',

syscall: 'connect',

address: '127.0.0.1',

port: 11434

}

}

6:08:02 AM [vite] ✨ new dependencies optimized: remix-island, ai/react, framer-motion, node:path, diff, jszip, file-saver, @octokit/rest, react-resizable-panels, date-fns, istextorbinary, @radix-ui/react-dialog, @webcontainer/api, @codemirror/autocomplete, @codemirror/commands, @codemirror/language, @codemirror/search, @codemirror/state, @codemirror/view, @radix-ui/react-dropdown-menu, react-markdown, rehype-raw, remark-gfm, rehype-sanitize, unist-util-visit, @uiw/codemirror-theme-vscode, @codemirror/lang-javascript, @codemirror/lang-html, @codemirror/lang-css, @codemirror/lang-sass, @codemirror/lang-json, @codemirror/lang-markdown, @codemirror/lang-wast, @codemirror/lang-python, @codemirror/lang-cpp, shiki, @xterm/addon-fit, @xterm/addon-web-links, @xterm/xterm

6:08:02 AM [vite] ✨ optimized dependencies changed. reloading

Error: No route matches URL "/favicon.ico"

at getInternalRouterError (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:5505:5)

at Object.query (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:3527:19)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:275:35)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:24)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25

Error getting Ollama models: TypeError: fetch failed

at node:internal/deps/undici/undici:13185:13

at processTicksAndRejections (node:internal/process/task_queues:95:5)

at Object.getOllamaModels [as getDynamicModels] (/app/app/utils/constants.ts:318:22)

at async Promise.all (index 0)

at Module.initializeModelList (/app/app/utils/constants.ts:389:9)

at handleRequest (/app/app/entry.server.tsx:30:3)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:340:12)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:18)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {

[cause]: Error: connect ECONNREFUSED 127.0.0.1:11434

at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)

at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {

errno: -111,

code: 'ECONNREFUSED',

syscall: 'connect',

address: '127.0.0.1',

port: 11434

}

}

No routes matched location "/favicon.ico"

ErrorResponseImpl {

status: 404,

statusText: 'Not Found',

internal: true,

data: 'Error: No route matches URL "/favicon.ico"',

error: Error: No route matches URL "/favicon.ico"

at getInternalRouterError (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:5505:5)

at Object.query (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:3527:19)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:275:35)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:24)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25

}

No routes matched location "/favicon.ico"

Error getting Ollama models: TypeError: fetch failed

at node:internal/deps/undici/undici:13185:13

at processTicksAndRejections (node:internal/process/task_queues:95:5)

at Object.getOllamaModels [as getDynamicModels] (/app/app/utils/constants.ts:318:22)

at async Promise.all (index 0)

at Module.initializeModelList (/app/app/utils/constants.ts:389:9)

at handleRequest (/app/app/entry.server.tsx:30:3)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:340:12)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:18)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {

[cause]: Error: connect ECONNREFUSED 127.0.0.1:11434

at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)

at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {

errno: -111,

code: 'ECONNREFUSED',

syscall: 'connect',

address: '127.0.0.1',

port: 11434

}

}

Error: No route matches URL "/favicon.ico"

at getInternalRouterError (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:5505:5)

at Object.query (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:3527:19)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:275:35)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:24)

at processTicksAndRejections (node:internal/process/task_queues:95:5)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25

Error getting Ollama models: TypeError: fetch failed

at node:internal/deps/undici/undici:13185:13

at processTicksAndRejections (node:internal/process/task_queues:95:5)

at Object.getOllamaModels [as getDynamicModels] (/app/app/utils/constants.ts:318:22)

at async Promise.all (index 0)

at Module.initializeModelList (/app/app/utils/constants.ts:389:9)

at handleRequest (/app/app/entry.server.tsx:30:3)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:340:12)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:18)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {

[cause]: Error: connect ECONNREFUSED 127.0.0.1:11434

at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)

at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {

errno: -111,

code: 'ECONNREFUSED',

syscall: 'connect',

address: '127.0.0.1',

port: 11434

}

}

No routes matched location "/favicon.ico"

ErrorResponseImpl {

status: 404,

statusText: 'Not Found',

internal: true,

data: 'Error: No route matches URL "/favicon.ico"',

error: Error: No route matches URL "/favicon.ico"

at getInternalRouterError (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:5505:5)

at Object.query (/app/node_modules/.pnpm/@remix-run+router@1.21.0/node_modules/@remix-run/router/router.ts:3527:19)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:275:35)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:24)

at processTicksAndRejections (node:internal/process/task_queues:95:5)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25

}

No routes matched location "/favicon.ico"

^C^X

^TError getting Ollama models: TypeError: fetch failed

at node:internal/deps/undici/undici:13185:13

at processTicksAndRejections (node:internal/process/task_queues:95:5)

at Object.getOllamaModels [as getDynamicModels] (/app/app/utils/constants.ts:318:22)

at async Promise.all (index 0)

at Module.initializeModelList (/app/app/utils/constants.ts:389:9)

at handleRequest (/app/app/entry.server.tsx:30:3)

at handleDocumentRequest (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:340:12)

at requestHandler (/app/node_modules/.pnpm/@remix-run+server-runtime@2.15.0_typescript@5.7.2/node_modules/@remix-run/server-runtime/dist/server.js:160:18)

at /app/node_modules/.pnpm/@remix-run+dev@2.15.0_@remix-run+react@2.15.0_react-dom@18.3.1_react@18.3.1__react@18.3.1_typ_zyxju6yjkqxopc2lqyhhptpywm/node_modules/@remix-run/dev/dist/vite/cloudflare-proxy-plugin.js:70:25 {

[cause]: Error: connect ECONNREFUSED 127.0.0.1:11434

at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1607:16)

at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) {

errno: -111,

code: 'ECONNREFUSED',

syscall: 'connect',

address: '127.0.0.1',

port: 11434

}

}
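The `[cause]: Error: connect ECONNREFUSED 127.0.0.1:11434` lines above show the app inside the container trying to reach Ollama on the container's own loopback address. A minimal sketch of how that connection could be tested from inside the container is below. It only checks whether a TCP port accepts connections. The function name `can_connect` is made up for illustration, and the candidate hosts in the usage note are assumptions rather than values taken from the log.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds, else False.

    Hypothetical helper for illustration; it is not part of OttoDev or Ollama.
    """
    try:
        # create_connection resolves the host and attempts a TCP connect.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name-resolution failures.
        return False
```

For example, `can_connect("127.0.0.1", 11434)` run inside the container would be expected to return `False` given the log above. Trying `can_connect("host.docker.internal", 11434)` or `can_connect("172.17.0.1", 11434)` (assuming the default Docker bridge gateway) would show whether Ollama on the host is reachable from the container at all. If neither works, one possible reason is that Ollama on the host is listening only on 127.0.0.1.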

I’d also like to add that when opening OttoDev in my browser, I can use the “Network” address and it works, but I cannot use the “Local” address: I get a browser error and the page will not load.

Any help will be greatly appreciated.

Thanks