Hi, I have managed to get the docker image running following the instructions on Github, but now I’m stuck on how to get ollama and qwen up and running.

I reviewed the readme in ottodev and watched a number of the videos but couldn’t find info on how to set this up. I am new to all this, so I’m probably missing something obvious, but if someone could point me to existing resources I’d be grateful.

Thanks

UPDATE: I found instructions on downloading and running Ollama, and even 32b seems to be running quite well on my M3 Mac, so that’s great. Next up I’m trying to figure out how to get OttoDev to talk to it. I edited the .env file (sub question: should this be named .env or .env.local?) to add the ollama URL, but I don’t know what URL to use. I tried the one in the notes in the .env file, http://localhost:11434, and it shows up in the ottodev UI, but when I send a request it does not get a response from ollama. I presume this is because my local ollama is not running in an environment which is serving it to localhost, or it is on a different port, so I’m now stuck here.
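For anyone at the same step, here is a minimal sketch of a quick check that Ollama is answering at a given URL, independent of ottodev. It assumes Ollama's HTTP API exposes a model list at `/api/tags` returning a `models` array with a `name` field, which is my understanding of the API; the function names are my own and not part of ottodev or Ollama.

```python
import json
import urllib.request


def tags_url(base_url: str) -> str:
    """Build the model-listing URL from a base URL such as http://localhost:11434."""
    return base_url.rstrip("/") + "/api/tags"


def model_names(payload: dict) -> list[str]:
    """Extract model names from a decoded /api/tags response (assumed shape)."""
    return [m["name"] for m in payload.get("models", [])]


def list_models(base_url: str, timeout: float = 5.0) -> list[str]:
    """Ask Ollama which models it has. Raises OSError (URLError) if nothing answers."""
    with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling `list_models("http://localhost:11434")` on the machine running Ollama should return the installed models if the server is up on the default port. An `OSError` suggests nothing is listening at that address.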

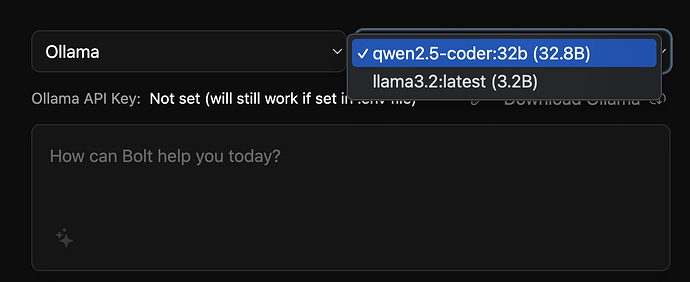

UPDATE: I just realised that I didn’t specify the model in the .env file, and yet the ottodev UI can see the available models, so it’s definitely there and working.

It seems to have sprung to life now, so problem solved. Performance calling from ottodev is terrible, so time to look for a hosted solution or try it on my other machine.

Glad you got it figured out @pdbappoo! Thank you for coming back and giving updates as you worked through it!

Oh sure, no point having a user group if we don’t help each other. Thanks for making a pretty awesome fork and creating a vibrant community around it. These things live and die by their community helpers; take replit as an example. Great software but terrible comms and zero development that we know of.

You bet man! It’s been my pleasure and I totally agree it’s crucial for me and the other maintainers to be super active, involved, and communicative!

This product is good once it is set up as expected. I have a 9-year-old GPU, an NVIDIA M40, and I am amazed with the results with Ottodev. If a local installation is running slow, the first thing to check is “ollama ps” to see whether the model is loaded on the GPU or not. As for accessing it remotely, it seems you can tweak a file to get it to publish the models remotely. I am able to access all my Ollama models from any computer on my network. I gave my workaround in another post, but it’s best to let the team give a permanent solution.
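If you would rather check this from a script than from the command line, here is a rough sketch. It assumes Ollama's HTTP API has a `/api/ps` endpoint whose response lists loaded models with `size` and `size_vram` fields, which is how I understand it to work; treat the field names as assumptions and the helper names as my own.

```python
import json
import urllib.request


def gpu_fraction(model: dict) -> float:
    """Share of a loaded model held in GPU memory (assumes size/size_vram fields)."""
    size = model.get("size", 0)
    return model.get("size_vram", 0) / size if size else 0.0


def describe(payload: dict) -> list[str]:
    """One summary line per loaded model from a decoded /api/ps response."""
    return [
        f"{m.get('name', '?')}: {gpu_fraction(m):.0%} on GPU"
        for m in payload.get("models", [])
    ]


def loaded_models(base_url: str, timeout: float = 5.0) -> list[str]:
    """Fetch the running-model list from Ollama and summarise GPU placement."""
    url = base_url.rstrip("/") + "/api/ps"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return describe(json.load(resp))
```

A model reported well below 100% on GPU is being run partly or wholly on the CPU, which would be consistent with slow responses.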

Absolutely fantastic, thanks for sharing @gngglobetech!

I had some drama getting this right. It looks like the model selector directly queries Ollama for the list of models straight from the browser (I could be wrong about this, but that’s what it looks like). But the oTToDev backend (within the Docker container) can’t see it (on localhost).

I had to modify the .env file so that OLLAMA_API_BASE_URL=http://<my_ip>:11434, and also configure Ollama to listen on all IPs (see: Allow listening on all local interfaces · Issue #703 · ollama/ollama · GitHub), to get this to work.
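To see which address actually reaches Ollama, a small TCP check like the sketch below may help. It only tests whether something accepts connections on the port, not that it is Ollama. The helper name is my own, and you need Python wherever you run it, which may not be the case inside the oTToDev container.

```python
import socket


def can_connect(host: str, port: int = 11434, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False
```

For example, compare `can_connect("localhost")` with `can_connect("<my_ip>")`, substituting your machine's LAN address. If localhost works on the host but the LAN address does not, Ollama is probably only listening on the loopback interface, which is what the linked issue covers.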

Hope this helps anyone else having the same problem.