Hi experts,

I don’t have a very fancy GPU-powered machine, but I would like to use the best free models through APIs.

Currently I am only using Mistral models, as they provide a good free-tier API. Please suggest how to use the APIs of other models that are better than Mistral, or at least perform the same.

I am not a very technical person, but I need to use these APIs for a project I am working on.

I also need help finding good vision model APIs, as I am currently limited to the Pixtral Large model.

If you open oTToDev, you can select any provider and then you will see an option to get API keys. It will redirect you to the page where you can get them.

Lots of them are free, so you can use them.

Thanks. Actually, I am looking to use the API inside my own AI project, not in a chat interface (through oTToDev, as you suggested).

Also, please help me find a reliable model (other than Claude/OpenAI) that can be used for practical AI applications (both text and vision).

I’ve been trying to use oTToDev with Mistral and OpenAI GPT-4o mini, but after a few prompts it starts hallucinating, the screen freezes, and I have to restart the application. Is there any solution for this?

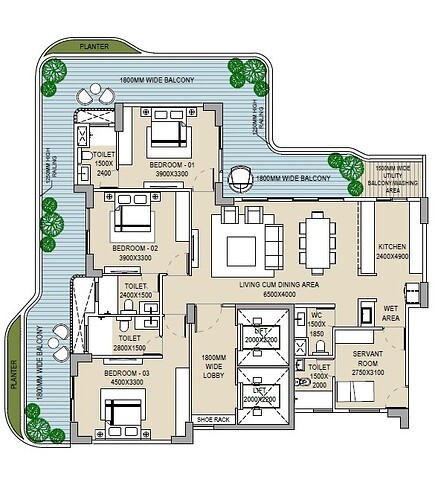

Actually, I am trying to create a floor plan analyzer app that can read the dimensions from a given floor plan image (e.g., a 3-bedroom apartment) and give users a summary of the room sizes and areas.

Claude 3.5 Sonnet is working perfectly, but I need to use a free model for the same task. I tried Pixtral, but it does not give 100% correct and reliable results.

Attached test image

Anyone try out QwQ? It’s actually very good. It one-shotted a prompt that Qwen2.5-Coder-32B-Instruct had problems with. It just fails to acknowledge it has access to the terminal or files, lol.

P.S. And it’s free to use through HuggingFace.

Thanks for the suggestion @aliasfox! Yeah @jayantsingla2005 I would suggest using QwQ outside of oTToDev for a free model (running locally or through HuggingFace’s inference API).

Inside oTToDev, for what you are trying to build, Jay, I’m not sure free models would be able to build something that complex. I would try giving Qwen 2.5 Coder 32B a shot though!

Grok, or is it Groq? The one that ISN’T run by X/Musk.

They provide API access to local models with limited per-day usage. I am not sure whether they offer paid models with limits on the free plan. I will drop a link to the service when I get home and have better internet.

If this helps, here’s a link that lists all the warm models on Hugging Face. These are actively used models that free users can access via the free Inference API.

You can try DeepSeek-V3.

It costs very little, $0.01 per day. You can top up your balance and use the tokens.

Also, you can use the Qwen 7B model, which is also very good.

It’s a hard claim to make that it uses $0.01 per day. That really depends on token usage. It’s more accurate to say it costs 14¢ per million input tokens (28¢ per million output tokens), which is incredibly cheap.

Saying you only pay 1¢ per day means you are using between 35K and 71K tokens (combined input/output). And while that is a bit, I’ve used over 1 million tokens in a day. Crazy how far a buck will get you though.
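For anyone who wants to check that arithmetic, here is a minimal Python sketch. It assumes flat per-token pricing at the rates quoted above (14¢ per million input tokens, 28¢ per million output tokens) and ignores any discounts or price changes. The function names are made up for illustration and are not part of any provider's API.

```python
# Assumed prices, taken from the figures quoted in this thread (not fetched
# from any API): 14 cents per million input tokens, 28 cents per million
# output tokens.
INPUT_USD_PER_MILLION = 0.14
OUTPUT_USD_PER_MILLION = 0.28


def tokens_for_budget(budget_usd: float, usd_per_million: float) -> int:
    """Whole number of tokens a budget buys at a flat per-million price."""
    return int(budget_usd / usd_per_million * 1_000_000)


def daily_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one day's input and output token counts."""
    return (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000


if __name__ == "__main__":
    # One cent spent purely on input tokens vs. purely on output tokens.
    print(tokens_for_budget(0.01, INPUT_USD_PER_MILLION))   # 71428
    print(tokens_for_budget(0.01, OUTPUT_USD_PER_MILLION))  # 35714
    # A heavy day: one million tokens, split evenly between input and output.
    print(f"${daily_cost(500_000, 500_000):.2f}")            # $0.21
```

So one cent covers roughly 71K tokens if they are all input and roughly 36K if they are all output, which is where the 35-71K range comes from. A day with a million tokens split evenly lands at about 21¢.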

And I personally wouldn’t suggest any model lower than 32B.

- S Tier: Claude 3.5 Sonnet, DeepSeek-V3, Gemini 2.0 Flash, o1

- A Tier: Llama 3.3 70B, Phi-4, QwQ, QvQ, Qwen2.5 72B

- B Tier: Llama-3.1, Qwen 2.5 Coder 32B, Grok-2, Codestral

- C Tier: Mistral (all variations), 3.5 Haiku

- D Tier: Llama-3.2, GPT-4o

- E Tier: Gemma-2

- F Tier: Too many to list.

Google Gemini 2.0 Flash is the best FREE model through Google AI Studio. DeepSeek-V3 is very good, the best open-source model in fact, and very cheap at 14¢ per million input tokens (28¢ per million output tokens).

As a note, only Instruct models work with Artifacts and QwQ/QvQ don’t work with Bolt.diy right out of the box.