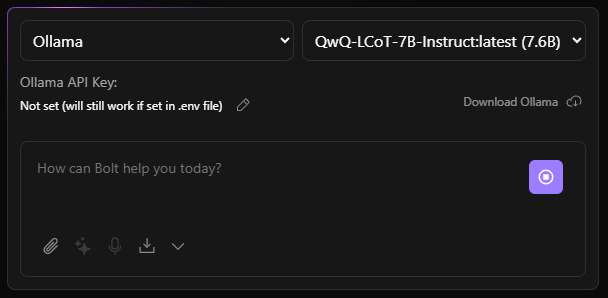

It’s hard to help with such a vague post, but right off the bat I can tell you one thing that is wrong: for local models running through LMStudio or Ollama, there is no need to set an API key. That said, it looks like it’s at least being detected by Bolt, which is good.

Another note: such a small model will likely not be able to produce artifacts, and therefore will not generate code, modify files, or interact with the terminal.

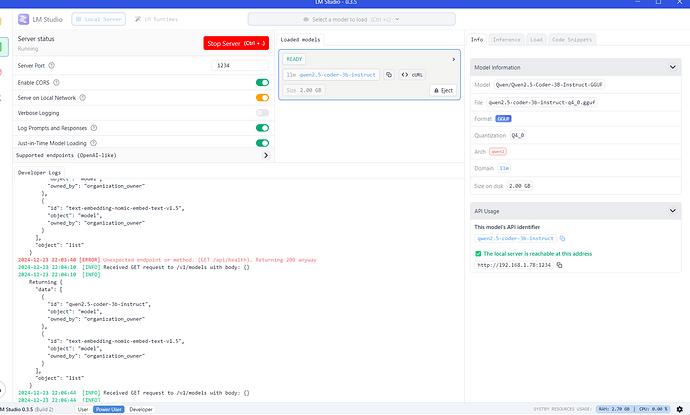

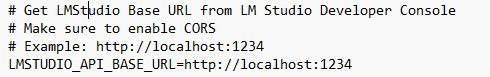

Check that LMStudio returns a response in its own UI. Then remove the API key and get rid of any custom URL (baseUrl) you might have put in `.env.local` (or in the Bolt.diy settings), because the default is assumed. Also, make sure the server is running; you have to start it in LMStudio.
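If you want to confirm outside of both UIs that the server actually answers, here is a minimal sketch. It assumes LM Studio's local server is on its default address `http://localhost:1234` and exposes the OpenAI-compatible `/v1/chat/completions` endpoint; `"your-model-id"` is a placeholder for whatever identifier LM Studio shows for the loaded model. Note that no `Authorization` header is sent, since local models don't need an API key.

```python
import json
import urllib.request

# Assumption: LM Studio's default local server address; change it if you
# picked a different port in LM Studio.
DEFAULT_BASE_URL = "http://localhost:1234"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST to the OpenAI-compatible chat endpoint, without any API key."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 32,
    }).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},  # no Authorization header for local models
        method="POST",
    )


def extract_reply(payload: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # "your-model-id" is a hypothetical placeholder: use the id of the model loaded in LM Studio.
    req = build_chat_request(DEFAULT_BASE_URL, "your-model-id", "Say hello in one word.")
    try:
        with urllib.request.urlopen(req, timeout=30) as resp:
            print("LM Studio replied:", extract_reply(json.load(resp)))
    except (OSError, ValueError, KeyError, IndexError) as err:
        # A refused connection usually means the server was not started in LM Studio.
        print("No usable response from LM Studio:", err)
```

If this prints a reply but Bolt.diy still fails, the problem is more likely on the Bolt.diy side (API key, custom URL, or model choice) than in LM Studio itself.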

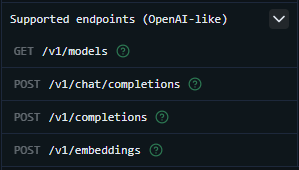

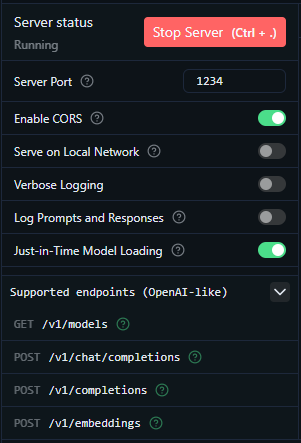

Expand “Supported endpoints (OpenAI-like)” to see the settings:

These are my settings:

I have the “stable” Bolt.diy branch cloned and LMStudio running.

But it looks like I did have to set `LMSTUDIO_API_BASE_URL`:

Note: I thought this was checked by default, but I was wrong.
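To check that the base URL you set is reachable and actually lists your loaded model, a small sketch like this can help. It reads `LMSTUDIO_API_BASE_URL` from the process environment (so you would need to export it, since the script does not parse `.env.local`), assumes the value is the server root without a trailing `/v1`, and falls back to LM Studio's default `http://localhost:1234`. That fallback is my assumption for the script, not a description of Bolt.diy's own code.

```python
import json
import os
import urllib.request


def resolve_base_url(env=None) -> str:
    """Use LMSTUDIO_API_BASE_URL if set, else assume LM Studio's default address."""
    env = os.environ if env is None else env
    return (env.get("LMSTUDIO_API_BASE_URL") or "http://localhost:1234").rstrip("/")


def model_ids(payload: dict) -> list:
    """Return the model identifiers from an OpenAI-style /v1/models response."""
    return [m["id"] for m in payload.get("data", [])]


if __name__ == "__main__":
    url = resolve_base_url() + "/v1/models"
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            print("Models served at", url, "->", model_ids(json.load(resp)))
    except (OSError, ValueError, KeyError) as err:
        # Unreachable URL, server not started, or a non-JSON answer.
        print("Could not list models at", url, "-", err)
```

An empty list means the server is up but no model is loaded; an error means Bolt.diy will not be able to reach that URL either.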

All set, but no luck. I switched to a Gemini API key and it worked well, so I think the problem is with LMStudio.

Curious, but I don’t know. You’ll probably have better luck using the free models from HuggingFace, Google AI Studio (Gemini Exp-1206), and Azure (GitHub) ChatGPT 4o anyway. Otherwise, I mostly use OpenRouter.

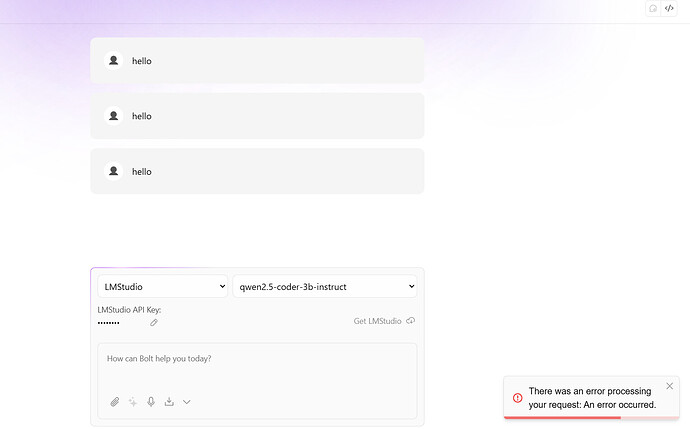

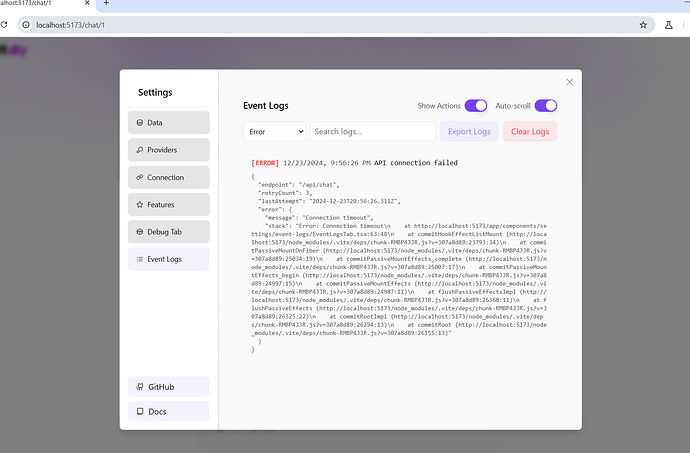

I am having the same issue. I always get an error in the bottom right, as if the model were not correct, even though the model loaded in LM Studio is actually detected properly. Any ideas?

@Fer, can you quickly describe what you are trying to do and post a screenshot of your setup? Then I can take a look.

Hi there, I think I got it working, thank you! Docker has given me quite some nightmares.

Quick question: are there any plans, or is there a way, to have all chats saved on the server side so we can open a project from anywhere in Bolt.diy? Currently it seems everything is stored only in the browser instance.

Thank you!

Nice. There are several more feature requests like this, so yes, I think something like this will come in the future, but we will see. There are a lot of ideas and feature requests, and we are still trying to sort everything out, plan a new roadmap, etc.