For the last few days I have been trying to connect the Cerebras API to Bolt so I can try Llama 3.3 70B at 2,000 tokens per second, and for the life of me I cannot get it to connect. I put the API key in `.env`, modified the constants, and installed the Cerebras Cloud SDK. What am I missing? If anyone has a moment to take a peek at this, it would be much appreciated. Cheers!


There's a refactor underway that will make adding new providers a lot easier, but right now you have to modify several files; see this thread for more details.


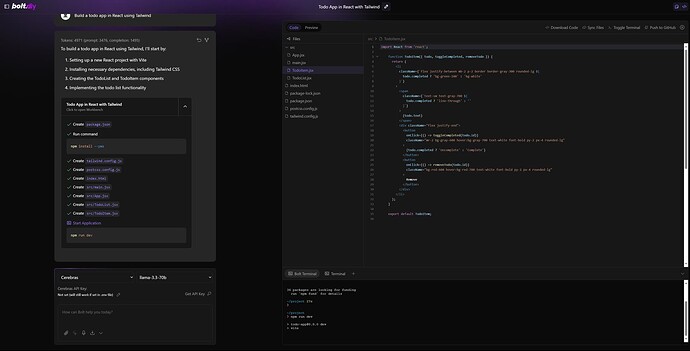

I just tested this and got the API to work:

Add to `.\bolt.diy\app\utils\constants.ts`:

```ts
{
  name: 'Cerebras',
  staticModels: [
    { name: 'llama-3.1-8b', label: 'llama-3.1-8b', provider: 'Cerebras', maxTokenAllowed: 8192 },
    { name: 'llama-3.1-70b', label: 'llama-3.1-70b', provider: 'Cerebras', maxTokenAllowed: 8192 },
    { name: 'llama-3.3-70b', label: 'llama-3.3-70b', provider: 'Cerebras', maxTokenAllowed: 8192 },
  ],
  getApiKeyLink: 'https://inference-docs.cerebras.ai/quickstart',
},
```
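The string `'Cerebras'` has to match exactly in the provider entry, in each model's `provider` field, and in the `case 'Cerebras':` labels in `api-key.ts` and `model.ts`; a mismatch such as `'cerebras'` is an easy way for the provider to quietly fail to connect. Here is a minimal sketch of a consistency check. `ModelInfo` and `ProviderInfo` here are hypothetical types that only mirror the fields used in the entry above, not bolt.diy's actual type definitions, and `findModel` and `mismatchedModels` are illustrative helpers.

```ts
// Hypothetical types mirroring only the fields used in the constants.ts entry.
interface ModelInfo {
  name: string;
  label: string;
  provider: string;
  maxTokenAllowed: number;
}

interface ProviderInfo {
  name: string;
  staticModels: ModelInfo[];
  getApiKeyLink?: string;
}

const cerebras: ProviderInfo = {
  name: 'Cerebras',
  staticModels: [
    { name: 'llama-3.1-8b', label: 'llama-3.1-8b', provider: 'Cerebras', maxTokenAllowed: 8192 },
    { name: 'llama-3.1-70b', label: 'llama-3.1-70b', provider: 'Cerebras', maxTokenAllowed: 8192 },
    { name: 'llama-3.3-70b', label: 'llama-3.3-70b', provider: 'Cerebras', maxTokenAllowed: 8192 },
  ],
  getApiKeyLink: 'https://inference-docs.cerebras.ai/quickstart',
};

// Look up a model by provider and model name (case-sensitive, like a switch on strings).
function findModel(providers: ProviderInfo[], provider: string, model: string): ModelInfo | undefined {
  return providers.find((p) => p.name === provider)?.staticModels.find((m) => m.name === model);
}

// Return the names of models whose `provider` field does not match the enclosing provider name.
function mismatchedModels(p: ProviderInfo): string[] {
  return p.staticModels.filter((m) => m.provider !== p.name).map((m) => m.name);
}
```

Running `mismatchedModels` on each entry after a copy-paste edit catches a typo before it turns into a silent connection failure.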

Add to `.\bolt.diy\app\lib\.server\llm\api-key.ts`:

```ts
// Fall back to environment variables
switch (provider) {
  // ...
  case 'Cerebras':
    return env.CEREBRAS_API_KEY;
  // ...
}
```
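The `switch` in `api-key.ts` is a fallback: the comment implies something is checked before the environment. Below is a table-driven sketch of the same lookup. It assumes a key the user entered takes precedence over the environment variable. That ordering, the `EnvLike` type, and the `ENV_KEY_BY_PROVIDER` map are assumptions for illustration, not bolt.diy's actual code.

```ts
// Hypothetical stand-in for the environment object the real code reads from.
type EnvLike = Record<string, string | undefined>;

// Provider name -> environment variable holding its key.
// Only the Cerebras entry comes from this thread; other providers would be added the same way.
const ENV_KEY_BY_PROVIDER: Record<string, string> = {
  Cerebras: 'CEREBRAS_API_KEY',
};

function getApiKey(
  provider: string,
  env: EnvLike,
  userApiKeys?: Record<string, string>,
): string | undefined {
  // Assumption: a non-empty user-supplied key wins over the environment.
  const userKey = userApiKeys?.[provider];
  if (userKey) {
    return userKey;
  }

  // Fall back to environment variables; unknown providers get no key.
  const envName = ENV_KEY_BY_PROVIDER[provider];
  return envName ? env[envName] : undefined;
}
```

If this returns `undefined` for `'Cerebras'`, the key never reached the server. That points at the env file or the provider name, not at the model wiring.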

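Add to `.\bolt.diy\app\lib\.server\llm\model.ts`:

```ts
// Cerebras exposes an OpenAI-compatible API, so reuse the OpenAI client with a different base URL.
export function getCerebrasModel(apiKey: OptionalApiKey, model: string) {
  const openai = createOpenAI({
    baseURL: 'https://api.cerebras.ai/v1',
    apiKey,
  });

  return openai(model);
}
```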
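Because `getCerebrasModel` just points an OpenAI-compatible client at `https://api.cerebras.ai/v1`, one way to separate "bad key" from "bad Bolt wiring" is to call that endpoint directly. Here is a minimal sketch that builds an OpenAI-style chat completions request without sending it. It assumes the standard `/chat/completions` path and Bearer authentication of OpenAI-compatible APIs. `buildCerebrasChatRequest` is a hypothetical helper, not part of Bolt or the Cerebras SDK.

```ts
// Shape of a request ready to hand to fetch(url, init).
interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Same base URL that getCerebrasModel passes to createOpenAI.
const CEREBRAS_BASE_URL = 'https://api.cerebras.ai/v1';

function buildCerebrasChatRequest(apiKey: string, model: string, prompt: string): ChatRequest {
  // Fail early instead of sending an unauthenticated request.
  if (!apiKey) {
    throw new Error('Missing Cerebras API key');
  }
  return {
    url: `${CEREBRAS_BASE_URL}/chat/completions`,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      // Minimal OpenAI-style body: model name plus a single user message.
      body: JSON.stringify({
        model,
        messages: [{ role: 'user', content: prompt }],
      }),
    },
  };
}
```

To try it on Node 18+ (which has a global `fetch`), pass the result to `fetch(req.url, req.init)` with your key and `'llama-3.3-70b'`. An auth error such as 401 typically means the key itself is the problem. A successful response means the key is fine and the issue is in Bolt's configuration.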

And in the `switch (provider)` in the same file:

```ts
switch (provider) {
  // ...
  case 'Cerebras':
    return getCerebrasModel(apiKey, model);
  // ...
}
```

Finally, I added `CEREBRAS_API_KEY` to `.env.local`.
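The working setup here puts the key in `.env.local`, while the question mentions `.env`. To rule out a missing or malformed entry, here is a quick sanity check that reads `KEY=VALUE` lines. It is a deliberately simplified parser (comments, blank lines, and one pair of surrounding quotes only; no multiline values, escapes, or variable expansion), not the `dotenv` library's behavior. `parseEnvFile` and `hasCerebrasKey` are hypothetical helpers.

```ts
// Simplified KEY=VALUE parser for env-style files.
function parseEnvFile(text: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank lines and comments.
    if (line === '' || line.startsWith('#')) {
      continue;
    }
    const eq = line.indexOf('=');
    // Skip lines with no key before the '='.
    if (eq <= 0) {
      continue;
    }
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes.
    if (value.length >= 2 && (value[0] === '"' || value[0] === "'") && value[value.length - 1] === value[0]) {
      value = value.slice(1, -1);
    }
    result[key] = value;
  }
  return result;
}

// True only if CEREBRAS_API_KEY is present and non-empty.
function hasCerebrasKey(text: string): boolean {
  const value = parseEnvFile(text)['CEREBRAS_API_KEY'];
  return value !== undefined && value !== '';
}
```

Passing the contents of `.env.local` (for example from `fs.readFileSync`) to `hasCerebrasKey` quickly shows whether the variable is actually set in the file Bolt reads.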

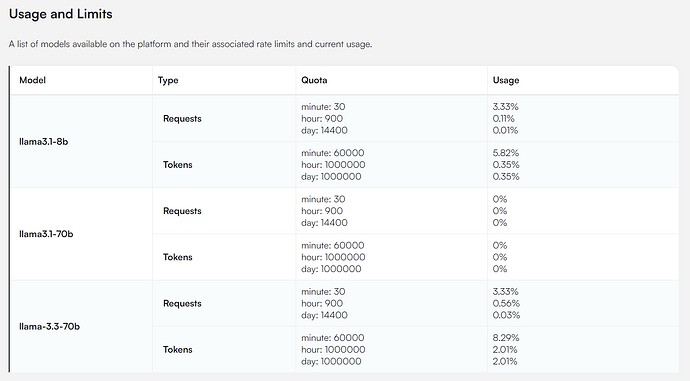

Maybe it's because I'm only on the free tier, but given how much they boast about speed, it seemed a little slow.
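When comparing against the 2,000 tokens-per-second figure from the question, it helps to separate raw generation speed from the end-to-end wait. A response timed from the moment you hit enter also includes network round-trips and time-to-first-token. Here is a sketch of the arithmetic, assuming you have a token count and your own timestamps (the thread doesn't provide any measurements). `tokensPerSecond` and `decodeRate` are illustrative names.

```ts
// Throughput: tokens divided by elapsed seconds.
function tokensPerSecond(tokens: number, elapsedMs: number): number {
  if (elapsedMs <= 0) {
    throw new RangeError('elapsedMs must be positive');
  }
  return tokens / (elapsedMs / 1000);
}

// Streaming rate after the first token arrives: excludes the initial wait
// (network, queueing, prompt processing) that an end-to-end timing includes.
function decodeRate(tokens: number, firstTokenMs: number, totalMs: number): number {
  // The first token marks the start of streaming, so count the remaining tokens over the remaining time.
  return tokensPerSecond(tokens - 1, totalMs - firstTokenMs);
}
```

For example, `tokensPerSecond(500, 2000)` is 250 tokens/s end to end. If the first of those tokens only arrived after a long initial delay, `decodeRate` can be much higher, so a slow-feeling response in Bolt does not by itself mean slow generation.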


Killer! Thanks a lot!
