Just played with this yesterday!

But has anyone checked out the recent updates to Cline (VS Code plugin) with GitHub Codespaces? It’s pretty cool; I’m just not a big fan of having to restart them when they go idle.

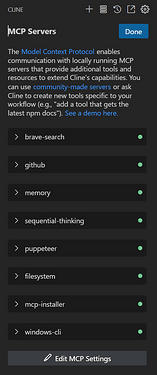

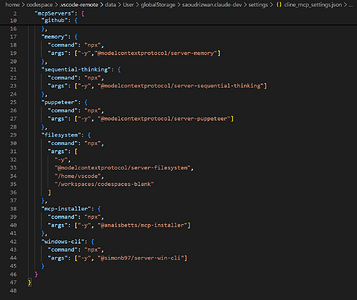

It’s similar to Bolt.diy, but it allows tool integration through MCP (Model Context Protocol) servers, which is pretty awesome. Unlike Bolt, though, it’s a bit heavier and can’t run on Cloudflare Pages… it basically requires a dedicated cloud VM instance. And sadly, the 'Cline (prev. Claude Dev)' extension is not available in VS Code for the Web.

We need pretty much all of these features, plus RAG integration like AnythingLLM has.

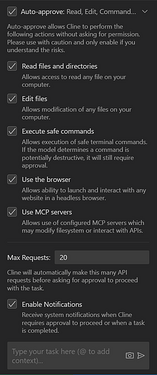

It also burns through credits like crazy because its system prompt is absurdly long, and that makes it work horribly with Ollama/LMStudio.

I had trouble getting local models through Ollama/LMStudio working with GitHub Codespaces and ran into a bit of a catch-22. MCPs worked well in the GitHub Codespace but not locally (still not sure why; I need to troubleshoot further, but it’s likely related to mixed content and/or security). Yet while MCPs worked in the Codespace, local models would not (at least not without some DNS trickery through a Cloudflare proxy). Even then, responses were very slow, and the system prompt is clearly not designed for other models. I often got errors saying the model didn’t have enough tokens to process the request, even with max tokens set to 8,000; I had to bump it to 16,384.
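For a sense of why an 8,000-token limit falls over, here’s a rough sketch that checks whether a system prompt plus a request still leaves room for a reply. It assumes the common ~4-characters-per-token heuristic for English text (real tokenizers vary by model), and the prompt size in the example is hypothetical, not Cline’s actual prompt length. The function names are made up for illustration.

```python
# Rough sketch: does a long system prompt leave room in a local model's context window?
# Assumption: ~4 characters per token, a common heuristic for English text;
# real tokenizers (Llama, Qwen, etc.) will give different counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Crude token estimate: character count divided by CHARS_PER_TOKEN, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def remaining_budget(system_prompt: str, user_prompt: str,
                     context_window: int, reserve_for_reply: int = 1024) -> int:
    """Tokens left after both prompts and a reserved reply budget (negative = won't fit)."""
    used = estimate_tokens(system_prompt) + estimate_tokens(user_prompt)
    return context_window - used - reserve_for_reply


if __name__ == "__main__":
    # Hypothetical sizes: a ~40,000-character system prompt and a short request.
    system_prompt = "x" * 40_000
    user_prompt = "Add a README to this repo."
    for window in (8_000, 16_384):
        left = remaining_budget(system_prompt, user_prompt, window)
        status = "fits" if left >= 0 else "too small"
        print(f"context={window:>6}: {status} ({left} tokens spare)")
```

With that hypothetical 40,000-character prompt, the 8,000-token window comes up about 3,000 tokens short while 16,384 leaves room to spare. If you’re serving through Ollama, the server-side context length is the model’s `num_ctx` parameter (settable in a Modelfile with `PARAMETER num_ctx 16384`), which may need raising separately from whatever limit the client is set to.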

I think we could use a reasoning model (e.g., QwQ) to dynamically build contextually aware system prompts, scoped to the thing you’re trying to solve, for the “cheap” model. That would probably cut initial token usage by something like 75% and give better responses.
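Roughly, the idea could look like this two-stage sketch (stdlib only). It assumes both models are served by Ollama’s `/api/chat` endpoint on the default `localhost:11434`; the model tags (`qwq`, `qwen2.5-coder`), the meta-prompt wording, and the helper names are hypothetical placeholders, not anything Cline actually does. It also assumes the reasoning model may wrap its thinking in `<think>` tags, which get stripped before the prompt is reused.

```python
# Two-stage sketch: a reasoning model writes a short, task-specific system prompt,
# then a cheaper model does the actual work with it.
# Assumptions: Ollama's /api/chat on the default port; model tags and META_PROMPT
# are hypothetical placeholders.
import json
import re
import urllib.request
from typing import Callable, Dict, List

Message = Dict[str, str]
ChatFn = Callable[[str, List[Message]], str]  # (model, messages) -> reply text

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default address

META_PROMPT = (
    "You write system prompts for a coding assistant. Given the task and the "
    "available tools, write a system prompt under 300 words that covers only "
    "what is needed for this task. Output the prompt text only."
)


def ollama_chat(model: str, messages: List[Message]) -> str:
    """Call Ollama's /api/chat once (non-streaming) and return the reply text."""
    payload = json.dumps({"model": model, "messages": messages, "stream": False}).encode()
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]


def strip_reasoning(text: str) -> str:
    """Drop any <think>...</think> block a reasoning model may include in its reply."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def build_scoped_prompt(task: str, tools: List[str], chat: ChatFn, planner_model: str) -> str:
    """Stage 1: ask the reasoning model for a concise, task-specific system prompt."""
    request = f"Task: {task}\nAvailable tools: {', '.join(tools) or 'none'}"
    reply = chat(planner_model, [
        {"role": "system", "content": META_PROMPT},
        {"role": "user", "content": request},
    ])
    return strip_reasoning(reply)


def solve(task: str, tools: List[str], chat: ChatFn = ollama_chat,
          planner_model: str = "qwq", worker_model: str = "qwen2.5-coder") -> str:
    """Stage 2: run the cheap model with the generated prompt instead of a huge static one."""
    system_prompt = build_scoped_prompt(task, tools, chat, planner_model)
    return chat(worker_model, [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": task},
    ])
```

Passing the chat function in keeps the pipeline testable without a running server (hand it a stub that returns canned replies) and makes it easy to point at a different backend. Whether this really saves ~75% of tokens depends on how long the generated prompts end up being, so it’s worth measuring.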

Just thought this was pretty cool!