Hi all! I’ve been following Cole’s videos for a while now and finally decided to pull the trigger and prototype an agent by following along with some of them. I’m using the Flowise + n8n combo because the two work really well together! I got everything installed and was following along, but I ran into an error when building the agent.

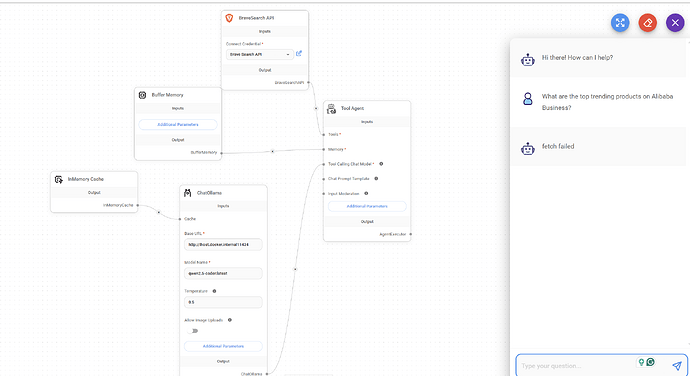

After adding my Brave Search API credentials and running a search, the chat keeps returning a “failed to fetch” error. Does anyone know why this might be happening, what I may be doing wrong, or how to fix it?

Any advice would be greatly appreciated!
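One way to split the problem in half is to check the Brave key outside Flowise. Below is a minimal sketch, assuming the public Brave Search web-search endpoint and its `X-Subscription-Token` header. The helper names and the query string are hypothetical and only serve this check.

```python
import urllib.parse
import urllib.request
from typing import List

# Brave Search web-search endpoint (the public REST API, not a Flowise internal).
BRAVE_ENDPOINT = "https://api.search.brave.com/res/v1/web/search"


def build_brave_request(query: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for a Brave web search, authenticated with api_key."""
    url = f"{BRAVE_ENDPOINT}?{urllib.parse.urlencode({'q': query})}"
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/json",
            "X-Subscription-Token": api_key,  # same key entered as the Flowise credential
        },
    )


def result_titles(payload: dict) -> List[str]:
    """Pull result titles from the web.results list of a Brave response body."""
    return [r.get("title", "") for r in payload.get("web", {}).get("results", [])]
```

With a real key, `result_titles(json.load(urllib.request.urlopen(build_brave_request("test", key))))` should return a handful of titles. If it does, the key is probably fine and the “failed to fetch” is more likely coming from another node in the flow. An HTTP error instead would point at the key or the subscription.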


Hey @blsllcofficial, thanks for following along with my videos and congrats on diving in and building an agent yourself!

Would you be able to share your workflow? And where/when does this error happen exactly?

Wow, I didn’t expect a response from the founder! Thanks for taking the time to reply. I’ve followed the workflow up to the point where the Brave Search API is connected, but when I go to test the chat, it fails. I’ve attached some screenshots to show what I mean. My goal is to eventually hook up Airtable and use this as the foundation for a research agent that can search the web and log results into Airtable/Notion. After that, I’ll consider building an n8n workflow that uses Perplexity to follow up on what this agent finds. Just experimenting overall!


Hmm, unfortunately that error message isn’t helpful. That’s one of my gripes with Flowise, though I do like the platform for prototyping. Are there any logs, or can you click into a node to see a more helpful error message?

Unfortunately, no. Is host.docker.internal the correct host for the LLM node? From what I’ve read in various Flowise and n8n forums, the issue seems to be the agent calling Ollama: it isn’t connecting properly. Most people fix it by switching back to running Ollama in its Docker container, but I’m trying to avoid that for the time being.

Is there a reason you are trying to avoid that? I think that would be a good thing to try! You mean changing from host.docker.internal to just “ollama” to reference the container, right?

Yeah, I’d prefer to run Ollama locally (i.e., not in a container), since I already have all the models I want installed and don’t want to eat up storage space redownloading everything. My current issue is that host.docker.internal isn’t working, and I haven’t found a workaround even though I’ve looked everywhere.

Gotcha, that makes sense! So your URL is http://host.docker.internal:11434? I’m not sure what else the issue could be if that is the case…
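If the URL is http://host.docker.internal:11434, one way to narrow things down is to query Ollama directly from where Flowise runs. Below is a minimal sketch. It assumes Python 3 is available in the Flowise container (it may not be, but any container on the same Docker network works) and that Ollama is on its default port 11434. It calls Ollama’s `/api/tags` endpoint, which lists locally installed models. The candidate URLs and helper names are hypothetical.

```python
import json
import urllib.request
from typing import Dict, List, Optional

# Hypothetical candidate base URLs, mirroring the two options discussed in this thread.
CANDIDATES = [
    "http://host.docker.internal:11434",  # Ollama running natively on the host
    "http://ollama:11434",                # Ollama running as a container named "ollama"
]


def parse_model_names(payload: dict) -> List[str]:
    """Extract model names from an Ollama /api/tags response body."""
    return [m.get("name", "") for m in payload.get("models", [])]


def probe_ollama(base_url: str, timeout: float = 3.0) -> Optional[List[str]]:
    """Return the model names Ollama reports at base_url, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(json.load(resp))
    except (OSError, ValueError):
        # OSError covers urllib's URLError, DNS failures, refused connections and
        # timeouts; ValueError covers a reply that is not valid JSON.
        return None


def report(candidates: List[str]) -> Dict[str, Optional[List[str]]]:
    """Probe every candidate URL and map it to its model list (or None)."""
    return {url: probe_ollama(url) for url in candidates}
```

Running `report(CANDIDATES)` inside the container maps each URL to its model list, or to `None` when it can’t connect. A model list for host.docker.internal would suggest the network path is fine and the problem is more likely in the Flowise node settings. If it comes back `None`, there are two common causes worth checking, though neither is confirmed for this setup. On Linux, that hostname only exists if the container is started with `--add-host=host.docker.internal:host-gateway` (or an equivalent `extra_hosts` entry in Compose). Ollama also binds to 127.0.0.1 by default, so it may need `OLLAMA_HOST=0.0.0.0` before it will accept connections arriving from Docker’s network.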