Initial Setup

Docker does not seem to work at all on linux. Instructions followed:

git clone https://github.com/coleam00/bolt.new-any-llm.git

cd bolt.new-any-llm

vim .env.local # Add API keys

cp .env.local .env

docker build . --target bolt-ai-production -t bolt-ai:production

docker-compose --profile production -f docker-compose.yaml up -d

- I have tested this by adding (so far), Ollama, Mistral, Google. None of them are detected or working.

The following logs are produced by the docker container initially:

2024-11-23 19:22:33 bolt-ai-1 |

2024-11-23 19:22:33 bolt-ai-1 | > bolt@ dockerstart /app

2024-11-23 19:22:33 bolt-ai-1 | > bindings=$(./bindings.sh) && wrangler pages dev ./build/client $bindings --ip 0.0.0.0 --port 5173 --no-show-interactive-dev-session

2024-11-23 19:22:33 bolt-ai-1 |

2024-11-23 19:22:33 bolt-ai-1 | ./bindings.sh: line 12: .env.local: No such file or directory

2024-11-23 19:22:36 bolt-ai-1 |

2024-11-23 19:22:36 bolt-ai-1 | ⛅️ wrangler 3.63.2 (update available 3.90.0)

2024-11-23 19:22:36 bolt-ai-1 | ---------------------------------------------

2024-11-23 19:22:36 bolt-ai-1 |

2024-11-23 19:22:37 bolt-ai-1 | ✨ Compiled Worker successfully

2024-11-23 19:22:38 bolt-ai-1 | [wrangler:inf] Ready on http://0.0.0.0:5173

[wrangler:inf] - http://127.0.0.1:5173

[wrangler:inf] - http://172.18.0.2:5173

⎔ Starting local server...

- Note: I have tested with both

localhostandhost.docker.internalas the base url for Ollama. Neither worked.

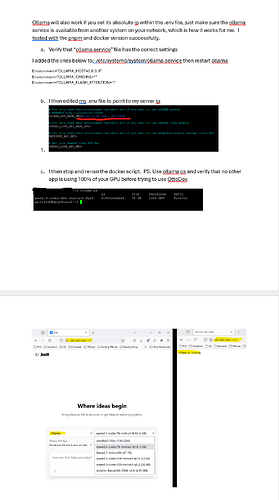

Trying to Fix Environment Variables

Because the .env.local file is not being detected, I figured I would try to create a bind mount volume in the docker compose file. This fixes the issue, but gave me mixed results on PC and Laptop (both same OS).

The following was added to the docker compose file for the production profile:

volumes:

- type: bind

source: .env.local

target: /app/.env.local

This changed the logs to the following:

2024-11-23 19:39:41 bolt-ai-1 | > bolt@ dockerstart /app

2024-11-23 19:39:41 bolt-ai-1 | > bindings=$(./bindings.sh) && wrangler pages dev ./build/client $bindings --ip 0.0.0.0 --port 5173 --no-show-interactive-dev-session

2024-11-23 19:39:41 bolt-ai-1 |

2024-11-23 19:39:44 bolt-ai-1 |

2024-11-23 19:39:44 bolt-ai-1 | ⛅️ wrangler 3.63.2 (update available 3.90.0)

2024-11-23 19:39:44 bolt-ai-1 | ---------------------------------------------

2024-11-23 19:39:44 bolt-ai-1 |

2024-11-23 19:39:44 bolt-ai-1 | ✨ Compiled Worker successfully

2024-11-23 19:39:44 bolt-ai-1 | Your worker has access to the following bindings:

2024-11-23 19:39:44 bolt-ai-1 | - Vars:

2024-11-23 19:39:44 bolt-ai-1 | - GROQ_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - HuggingFace_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - OPENAI_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - ANTHROPIC_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - OPEN_ROUTER_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - GOOGLE_GENERATIVE_AI_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - OLLAMA_API_BASE_URL: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - OPENAI_LIKE_API_BASE_URL: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - DEEPSEEK_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - OPENAI_LIKE_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - MISTRAL_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - COHERE_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - LMSTUDIO_API_BASE_URL: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - XAI_API_KEY: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | - VITE_LOG_LEVEL: "(hidden)"

2024-11-23 19:39:44 bolt-ai-1 | [wrangler:inf] Ready on http://0.0.0.0:5173

[wrangler:inf] - http://127.0.0.1:5173

[wrangler:inf] - http://172.18.0.2:5173

⎔ Starting local server...

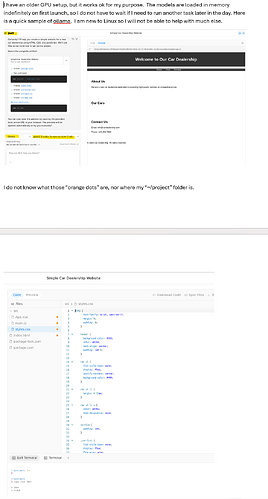

This had a different effect on each platform:

- PC: The environment variables were detected; the models were not.

- Laptop: The environment variables were detected; the models were detected.

However, on both platforms, I could not send a single message. They all failed with an error:

There was an error processing your request: No details were returned

Because nothing was working, I decided to just try a simple mixtral api key and it actually did work. However, I immediately got this error in the bolt terminal:

Failed to spawn bolt shell

Failed to execute 'postMessage' on 'Worker': SharedArrayBuffer transfer requires self.crossOriginIsolated.

I’m not sure how so many people are using this, but it’s not working in the slightest for me.